
docs/integrate/node-red/mqtt-tutorial.md: 15 additions & 13 deletions


@@ -23,19 +23,7 @@ You need:

23

23

2. The [node-red-contrib-postgresql](https://github.com/alexandrainst/node-red-contrib-postgresql) module installed.

24

24

3. A running MQTT broker. This tutorial uses [HiveMQ Cloud](https://www.hivemq.com/).

25

25

26

-

## Producing data

27

-

28

-

First, generate data to populate the MQTT topic with Node-RED. If you already

29

-

have an MQTT topic with regular messages, you can skip this part.

30

-

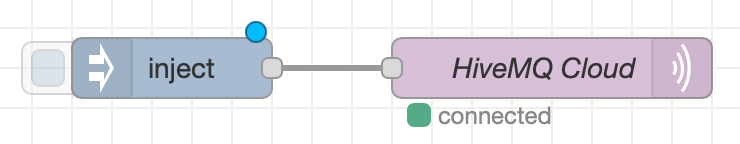

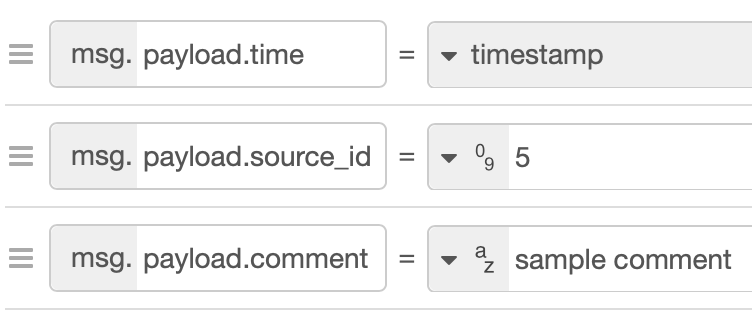

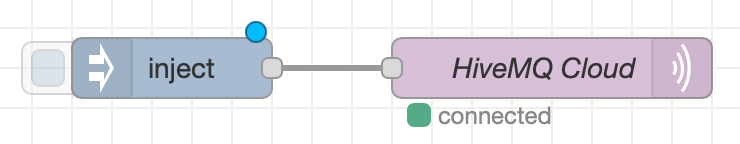

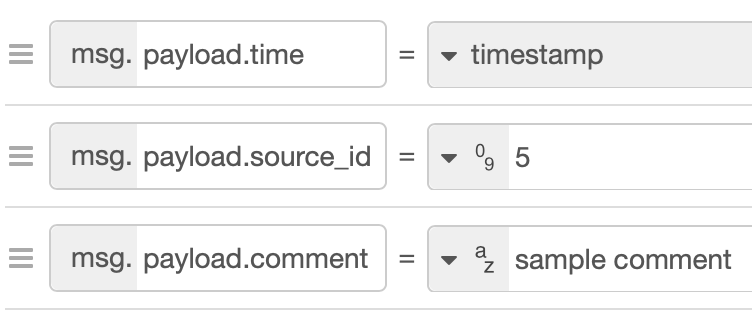

{width=480px}

31

-

32

-

The `inject` node creates a JSON payload with three attributes:

33

-

{width=480px}

34

-

35

-

In this example, two fields are static; only the timestamp changes.

36

-

Download the full workflow definition: [flows-producer.json](https://community.cratedb.com/uploads/short-url/eOvAk3XzDkRbNZjcZV0pZ0SnGu4.json) (1.3 KB)

37

-

38

-

## Consuming and ingesting data

26

+

## Provision CrateDB

39

27

40

28

First of all, we create the target table in CrateDB:

41

29

```sql

@@ -49,6 +37,20 @@ Store the payload as CrateDB’s {ref}`OBJECT data type
 <crate-reference:type-object>` to accommodate an evolving schema.
 For production, also consider the {ref}`partitioning and sharding guide <sharding-partitioning>`.
 
+## Publish messages to MQTT
+
+First, generate data to populate the MQTT topic with Node-RED. If you already
+have an MQTT topic with regular messages, you can skip this part.
+{width=480px}
+
+The `inject` node creates a JSON payload with three attributes:
+{width=480px}
+
+In this example, two fields are static; only the timestamp changes.
+Download the full workflow definition: [flows-producer.json](https://community.cratedb.com/uploads/short-url/eOvAk3XzDkRbNZjcZV0pZ0SnGu4.json) (1.3 KB)
+
+## Consume messages into CrateDB
+
 To ingest efficiently, group messages into batches and use

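The last hunk cuts off mid-sentence, so as a reading aid, here is a minimal Python sketch of the two ideas the tutorial text describes: a payload with two static fields plus a changing timestamp, and grouping messages into fixed-size batches before ingestion. The field names (`sensor_id`, `location`, `timestamp`), the batch size, and both helper functions are hypothetical. The tutorial itself builds this with Node-RED nodes, and neither its actual payload nor its batching mechanism is visible in this diff.

```python
import time
from itertools import islice
from typing import Any, Dict, Iterable, Iterator, List


def make_payload(now_ms: int) -> Dict[str, Any]:
    """Build a message with two static fields and a changing timestamp.

    The field names are hypothetical, not taken from the tutorial.
    """
    return {"sensor_id": "sensor-1", "location": "lab", "timestamp": now_ms}


def batched(
    messages: Iterable[Dict[str, Any]], size: int
) -> Iterator[List[Dict[str, Any]]]:
    """Yield consecutive lists of at most `size` messages."""
    if size < 1:
        raise ValueError("size must be at least 1")
    iterator = iter(messages)
    while True:
        batch = list(islice(iterator, size))
        if not batch:
            return
        yield batch


if __name__ == "__main__":
    start = int(time.time() * 1000)
    # Simulate five messages, one per second.
    stream = (make_payload(start + i * 1000) for i in range(5))
    for batch in batched(stream, size=2):
        # Each batch would map to one bulk insert instead of one insert per message.
        print(len(batch), [m["timestamp"] for m in batch])
```

With five messages and a batch size of 2, the generator yields batches of 2, 2, and 1 messages, so a trailing partial batch is still delivered rather than dropped.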