diff --git a/units/en/_toctree.yml b/units/en/_toctree.yml

index 040e10ab..4c289141 100644

--- a/units/en/_toctree.yml

+++ b/units/en/_toctree.yml

@@ -122,6 +122,8 @@

title: Diving deeper into policy-gradient

- local: unit4/pg-theorem

title: (Optional) the Policy Gradient Theorem

+ - local: unit4/glossary

+ title: Glossary

- local: unit4/hands-on

title: Hands-on

- local: unit4/quiz

@@ -146,6 +148,8 @@

title: Hands-on

- local: unit5/bonus

title: Bonus. Learn to create your own environments with Unity and MLAgents

+ - local: unit5/quiz

+ title: Quiz

- local: unit5/conclusion

title: Conclusion

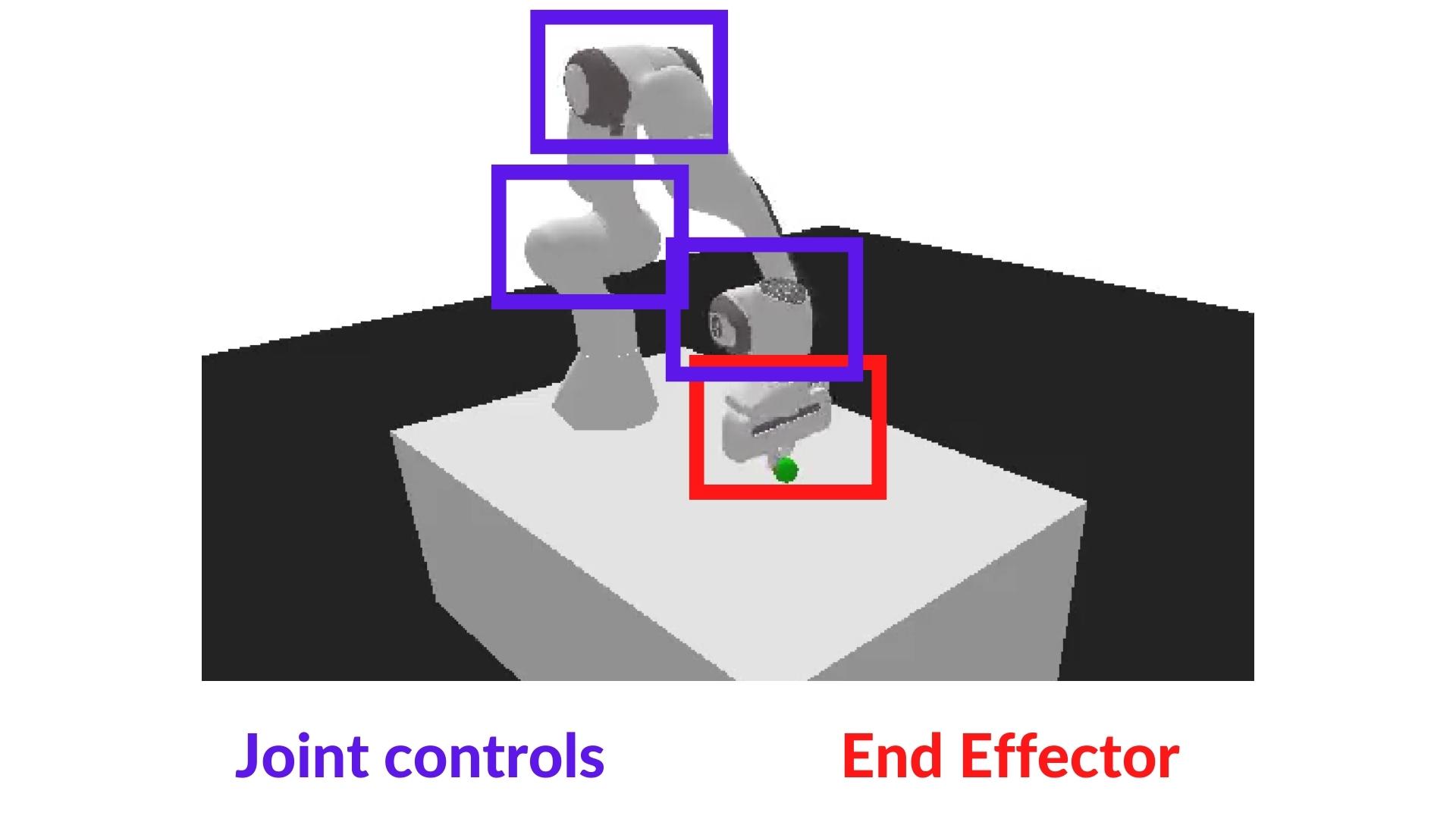

- title: Unit 6. Actor Critic methods with Robotics environments

@@ -157,7 +161,9 @@

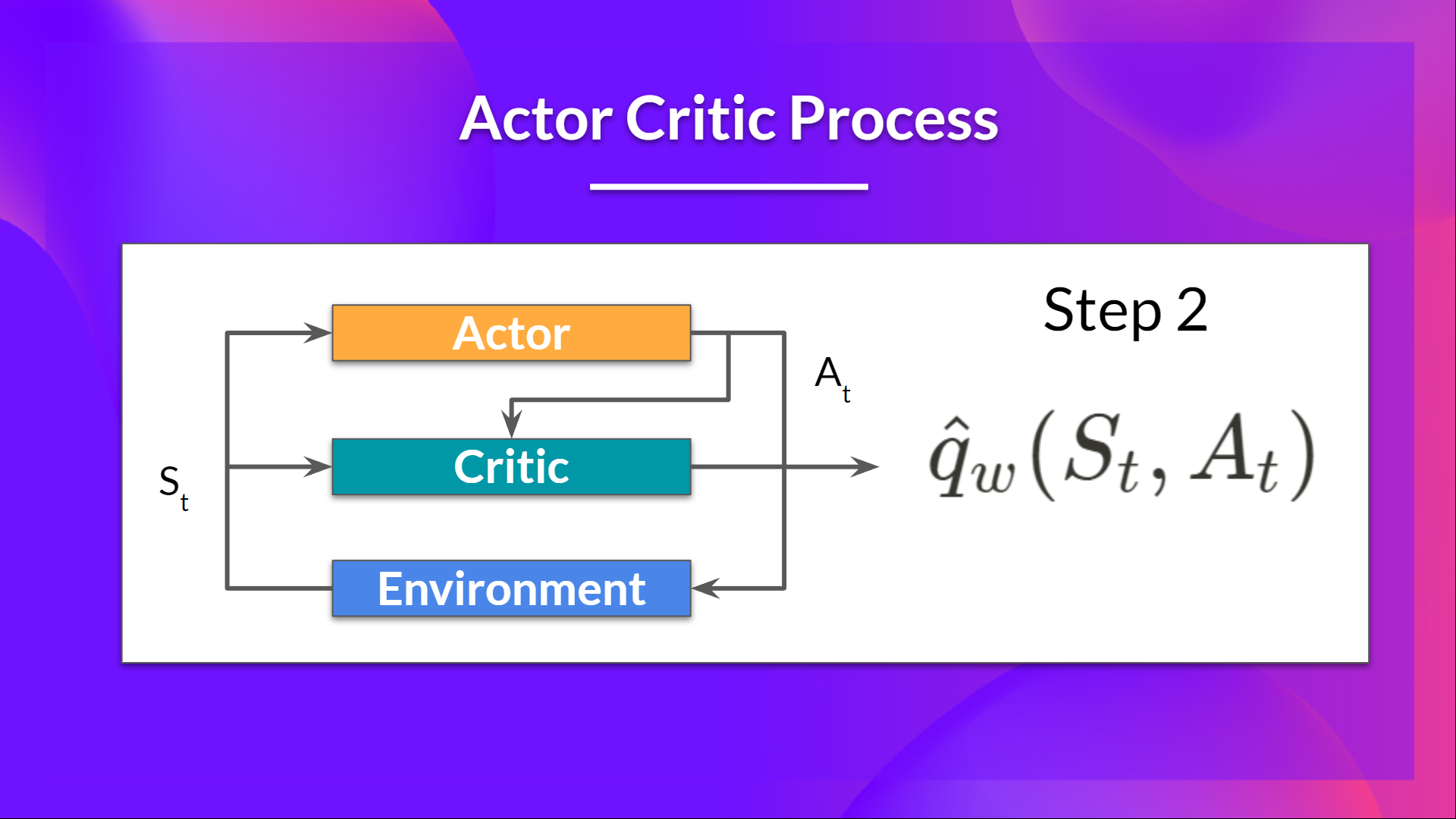

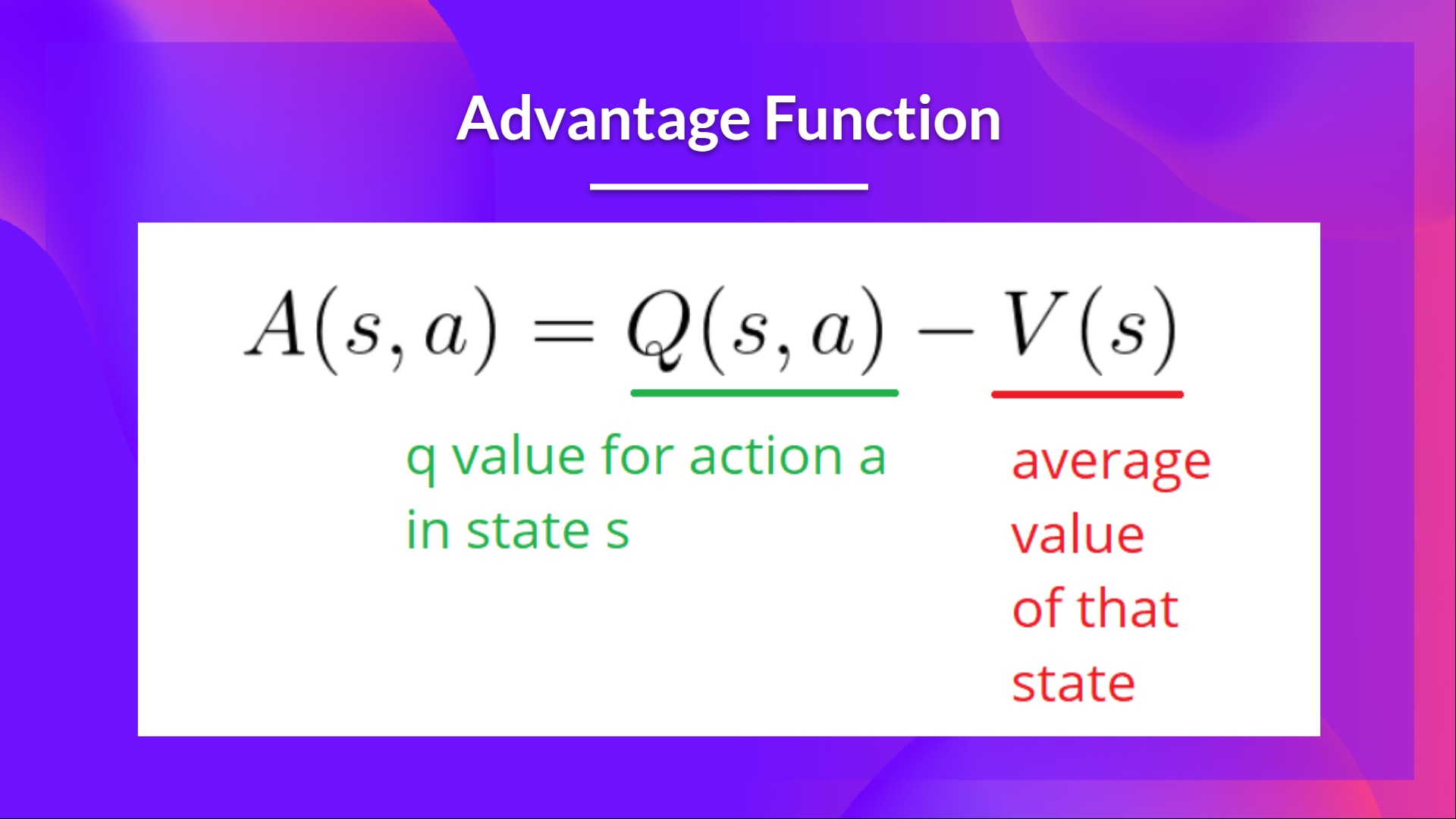

- local: unit6/advantage-actor-critic

title: Advantage Actor Critic (A2C)

- local: unit6/hands-on

- title: Advantage Actor Critic (A2C) using Robotics Simulations with PyBullet and Panda-Gym 🤖

+ title: Advantage Actor Critic (A2C) using Robotics Simulations with Panda-Gym 🤖

+ - local: unit6/quiz

+ title: Quiz

- local: unit6/conclusion

title: Conclusion

- local: unit6/additional-readings

@@ -174,6 +180,8 @@

title: Self-Play

- local: unit7/hands-on

title: Let's train our soccer team to beat your classmates' teams (AI vs. AI)

+ - local: unit7/quiz

+ title: Quiz

- local: unit7/conclusion

title: Conclusion

- local: unit7/additional-readings

@@ -210,6 +218,8 @@

title: Model-Based Reinforcement Learning

- local: unitbonus3/offline-online

title: Offline vs. Online Reinforcement Learning

+ - local: unitbonus3/generalisation

+ title: Generalisation Reinforcement Learning

- local: unitbonus3/rlhf

title: Reinforcement Learning from Human Feedback

- local: unitbonus3/decision-transformers

@@ -220,8 +230,12 @@

title: (Automatic) Curriculum Learning for RL

- local: unitbonus3/envs-to-try

title: Interesting environments to try

+ - local: unitbonus3/learning-agents

+ title: An introduction to Unreal Learning Agents

- local: unitbonus3/godotrl

title: An Introduction to Godot RL

+ - local: unitbonus3/student-works

+ title: Students projects

- local: unitbonus3/rl-documentation

title: Brief introduction to RL documentation

- title: Certification and congratulations

diff --git a/units/en/communication/certification.mdx b/units/en/communication/certification.mdx

index 6d7ab34c..d82ef653 100644

--- a/units/en/communication/certification.mdx

+++ b/units/en/communication/certification.mdx

@@ -3,8 +3,10 @@

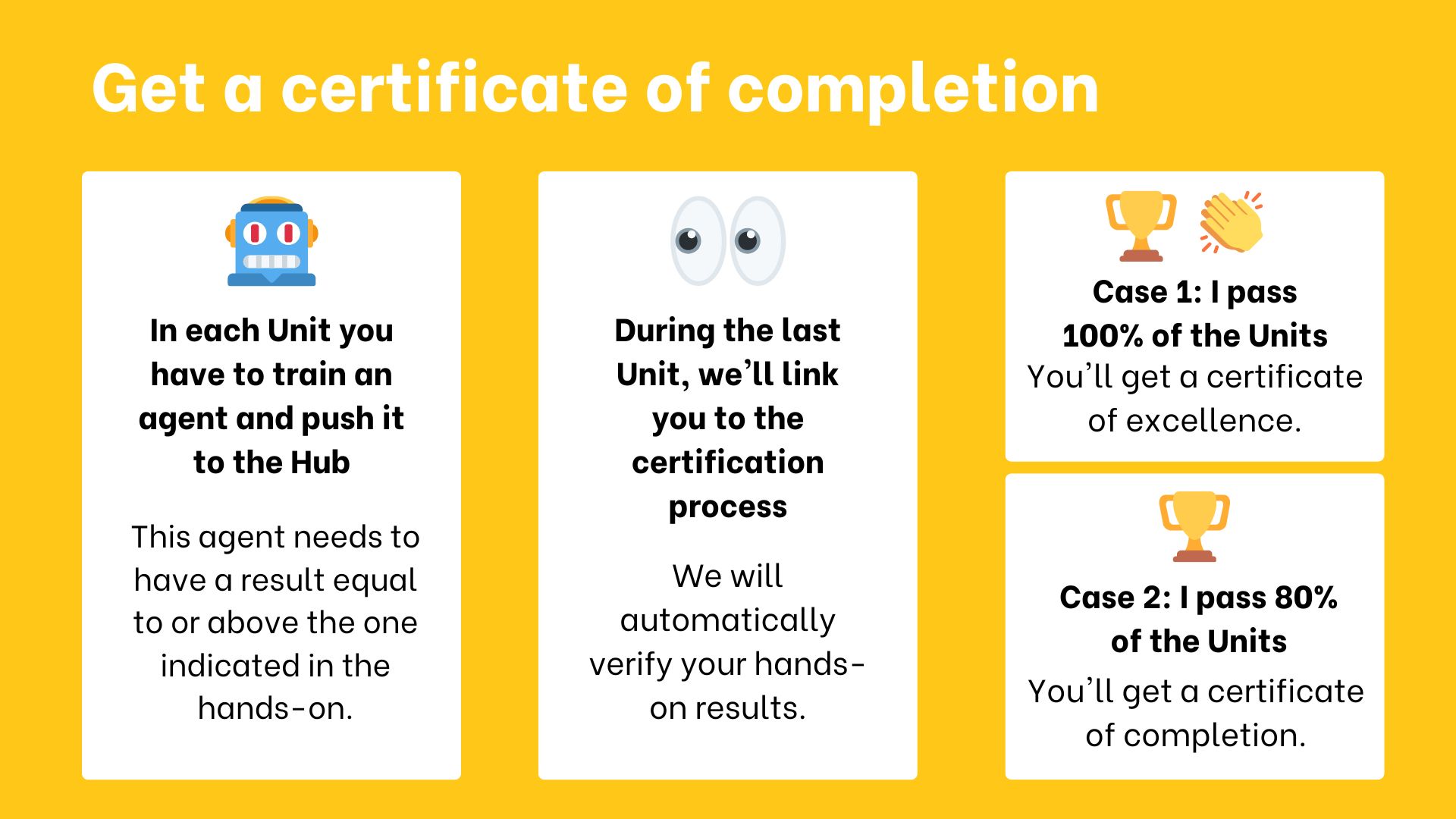

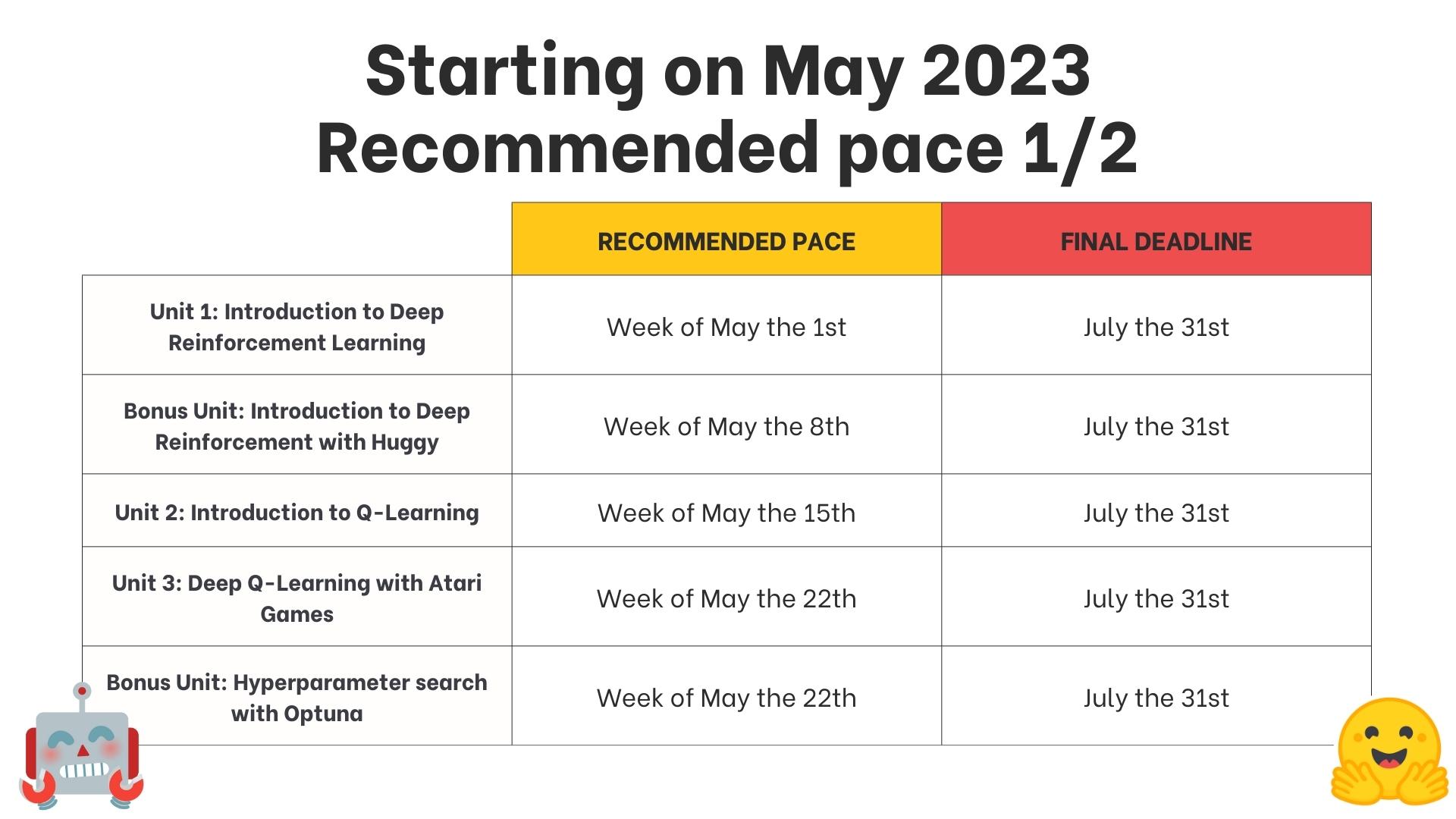

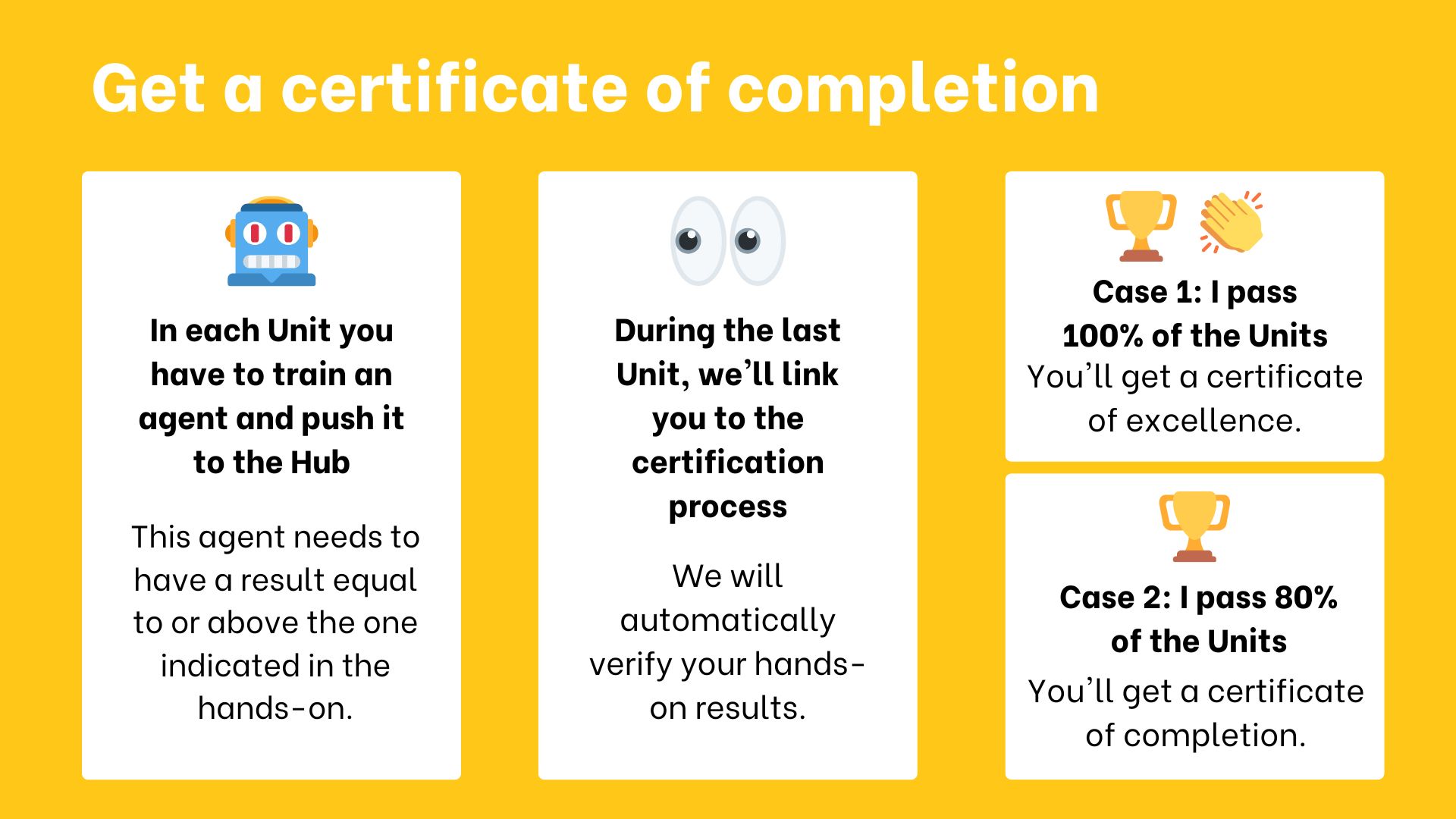

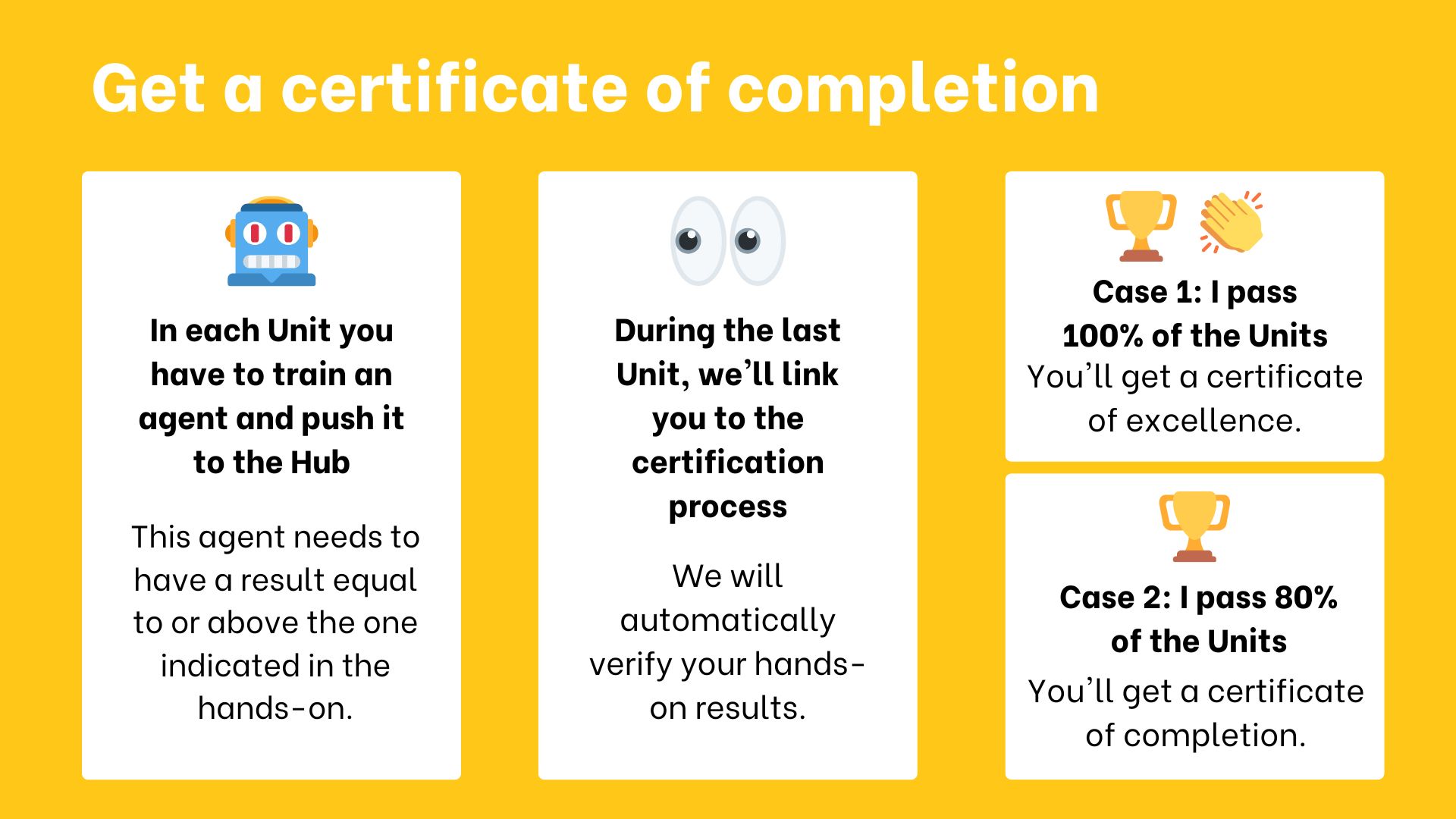

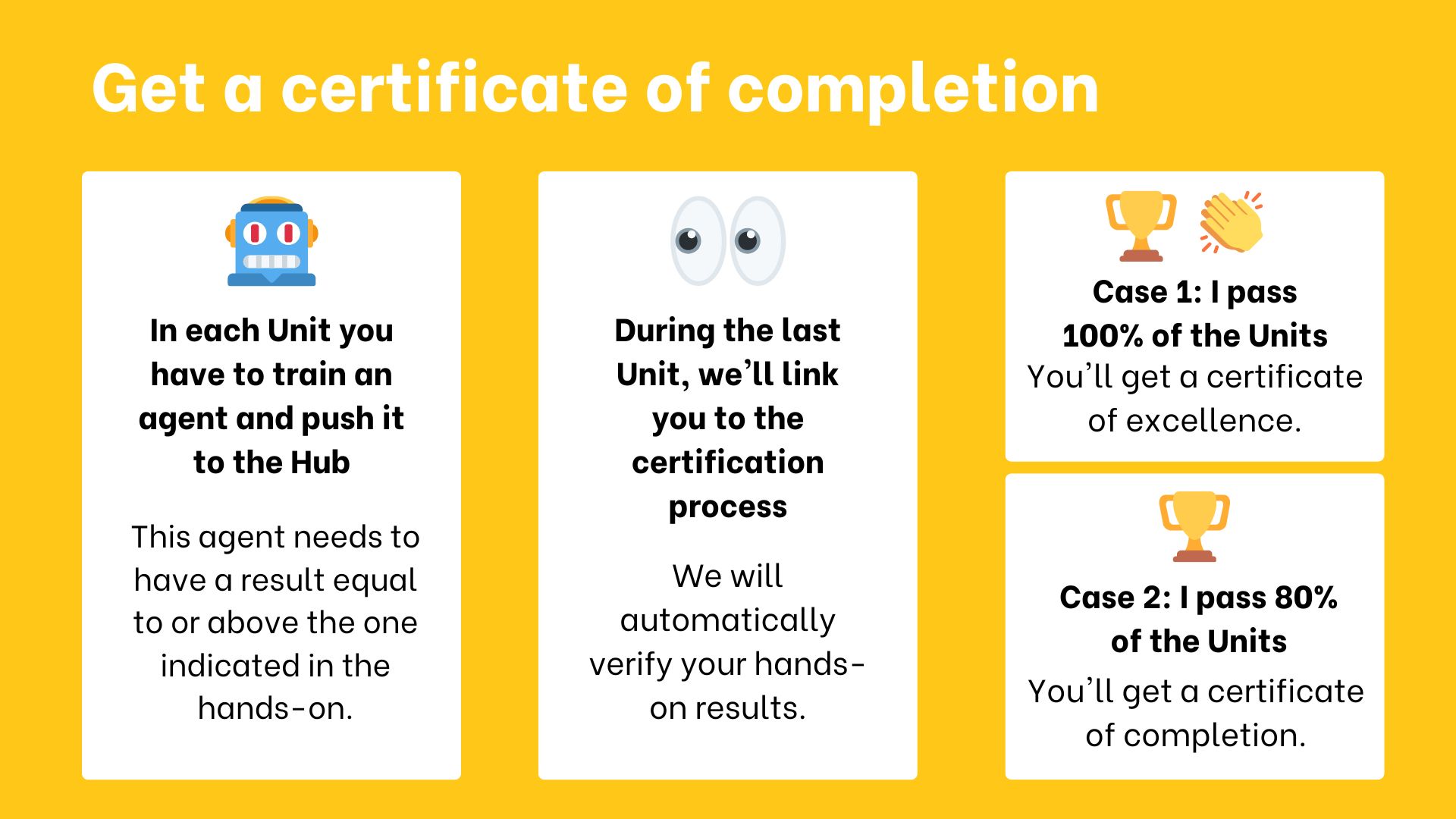

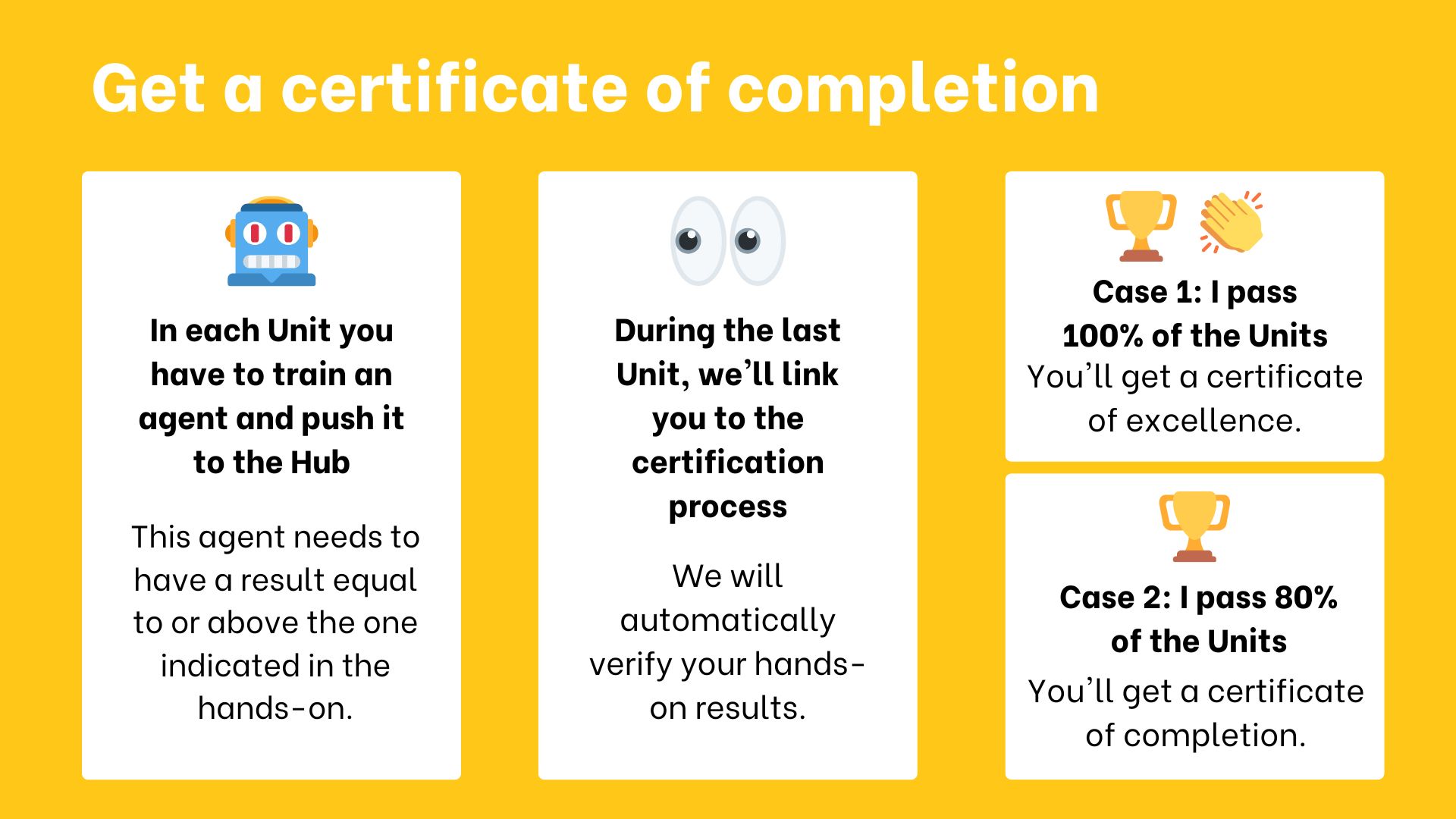

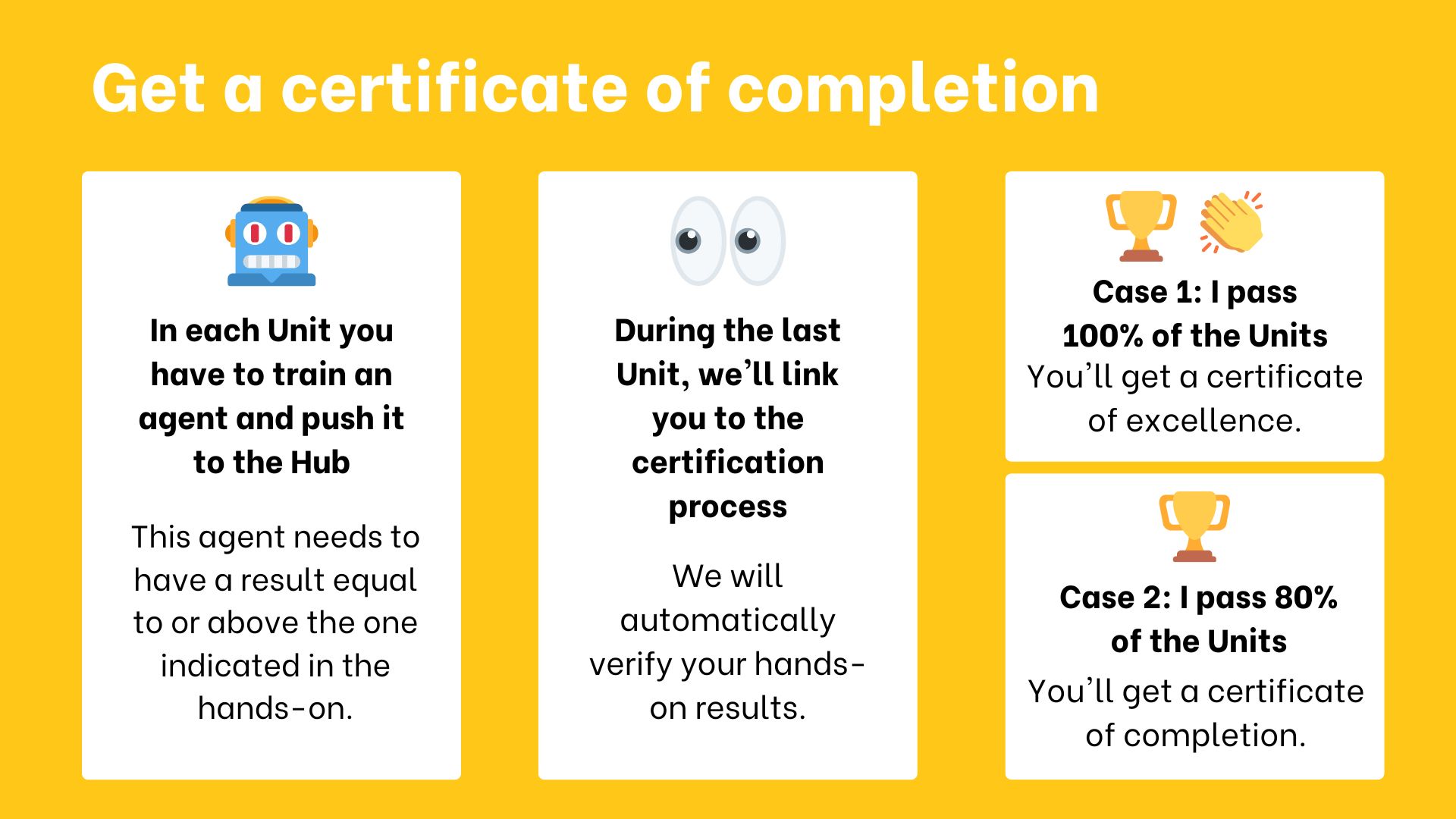

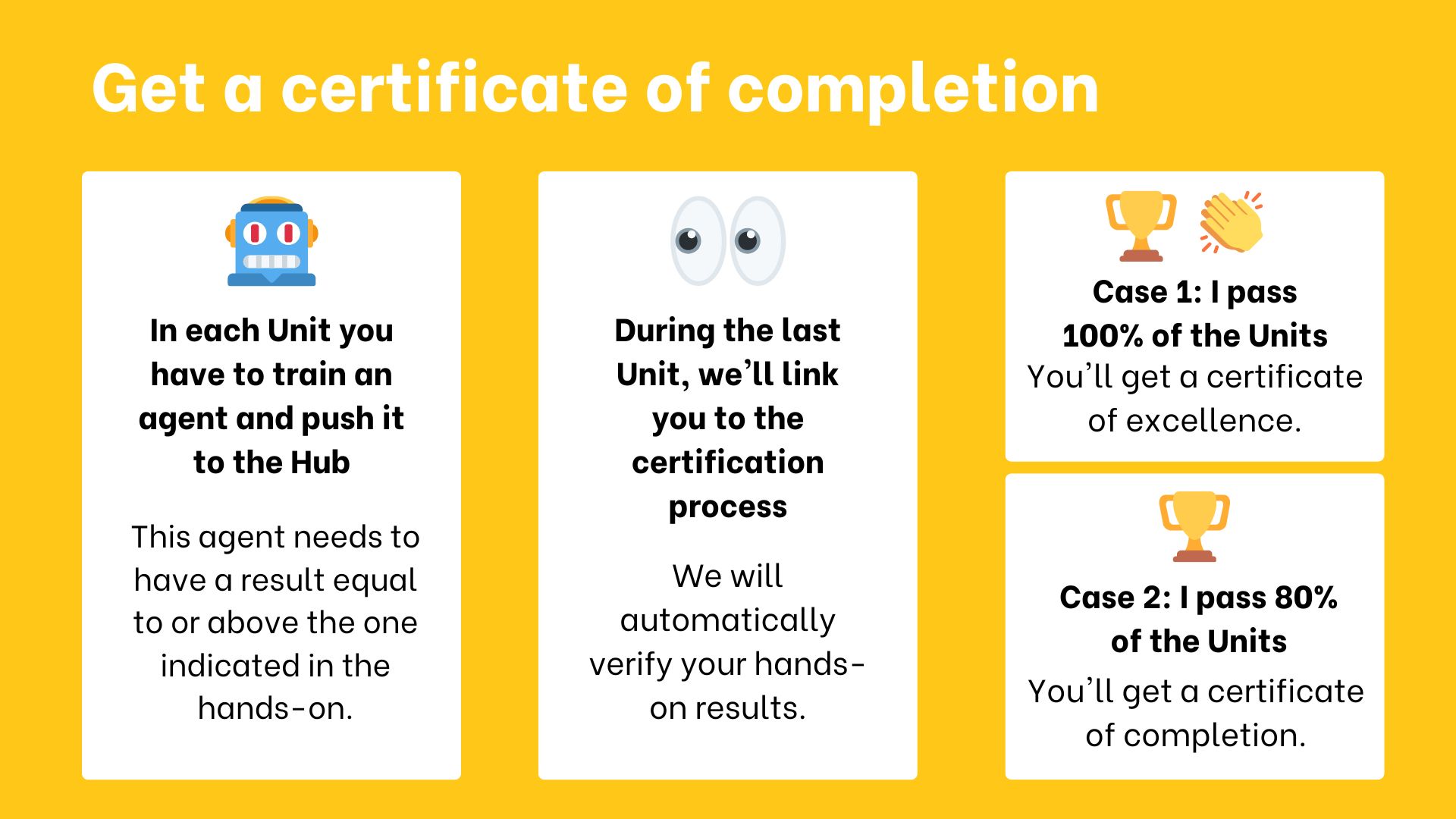

The certification process is **completely free**:

-- To get a *certificate of completion*: you need **to pass 80% of the assignments** before the end of July 2023.

-- To get a *certificate of excellence*: you need **to pass 100% of the assignments** before the end of July 2023.

+- To get a *certificate of completion*: you need **to pass 80% of the assignments**.

+- To get a *certificate of excellence*: you need **to pass 100% of the assignments**.

+

+There's **no deadlines, the course is self-paced**.

diff --git a/units/en/unit0/discord101.mdx b/units/en/unit0/discord101.mdx

index 0406a976..962c766e 100644

--- a/units/en/unit0/discord101.mdx

+++ b/units/en/unit0/discord101.mdx

@@ -5,20 +5,18 @@ Although I don't know much about fetching sticks (yet), I know one or two things

diff --git a/units/en/unit0/discord101.mdx b/units/en/unit0/discord101.mdx

index 0406a976..962c766e 100644

--- a/units/en/unit0/discord101.mdx

+++ b/units/en/unit0/discord101.mdx

@@ -5,20 +5,18 @@ Although I don't know much about fetching sticks (yet), I know one or two things

-Discord is a free chat platform. If you've used Slack, **it's quite similar**. There is a Hugging Face Community Discord server with 36000 members you can join with a single click here. So many humans to play with!

+Discord is a free chat platform. If you've used Slack, **it's quite similar**. There is a Hugging Face Community Discord server with 50000 members you can join with a single click here. So many humans to play with!

Starting in Discord can be a bit intimidating, so let me take you through it.

-When you [sign-up to our Discord server](http://hf.co/join/discord), you'll choose your interests. Make sure to **click "Reinforcement Learning"**.

+When you [sign-up to our Discord server](http://hf.co/join/discord), you'll choose your interests. Make sure to **click "Reinforcement Learning,"** and you'll get access to the Reinforcement Learning Category containing all the course-related channels. If you feel like joining even more channels, go for it! 🚀

Then click next, you'll then get to **introduce yourself in the `#introduce-yourself` channel**.

-Discord is a free chat platform. If you've used Slack, **it's quite similar**. There is a Hugging Face Community Discord server with 36000 members you can join with a single click here. So many humans to play with!

+Discord is a free chat platform. If you've used Slack, **it's quite similar**. There is a Hugging Face Community Discord server with 50000 members you can join with a single click here. So many humans to play with!

Starting in Discord can be a bit intimidating, so let me take you through it.

-When you [sign-up to our Discord server](http://hf.co/join/discord), you'll choose your interests. Make sure to **click "Reinforcement Learning"**.

+When you [sign-up to our Discord server](http://hf.co/join/discord), you'll choose your interests. Make sure to **click "Reinforcement Learning,"** and you'll get access to the Reinforcement Learning Category containing all the course-related channels. If you feel like joining even more channels, go for it! 🚀

Then click next, you'll then get to **introduce yourself in the `#introduce-yourself` channel**.

-## So which channels are interesting to me? [[channels]]

-

-They are in the reinforcement learning lounge. **Don't forget to sign up to these channels** by clicking on 🤖 Reinforcement Learning in `role-assigment`.

+They are in the reinforcement learning category. **Don't forget to sign up to these channels** by clicking on 🤖 Reinforcement Learning in `role-assigment`.

- `rl-announcements`: where we give the **lastest information about the course**.

- `rl-discussions`: where you can **exchange about RL and share information**.

- `rl-study-group`: where you can **ask questions and exchange with your classmates**.

diff --git a/units/en/unit0/introduction.mdx b/units/en/unit0/introduction.mdx

index 07c60faf..fec14228 100644

--- a/units/en/unit0/introduction.mdx

+++ b/units/en/unit0/introduction.mdx

@@ -59,10 +59,11 @@ This is the course's syllabus:

You can choose to follow this course either:

-- *To get a certificate of completion*: you need to complete 80% of the assignments before the end of July 2023.

-- *To get a certificate of honors*: you need to complete 100% of the assignments before the end of July 2023.

-- *As a simple audit*: you can participate in all challenges and do assignments if you want, but you have no deadlines.

+- *To get a certificate of completion*: you need to complete 80% of the assignments.

+- *To get a certificate of honors*: you need to complete 100% of the assignments.

+- *As a simple audit*: you can participate in all challenges and do assignments if you want.

+There's **no deadlines, the course is self-paced**.

Both paths **are completely free**.

Whatever path you choose, we advise you **to follow the recommended pace to enjoy the course and challenges with your fellow classmates.**

@@ -72,8 +73,10 @@ You don't need to tell us which path you choose. **If you get more than 80% of t

The certification process is **completely free**:

-- *To get a certificate of completion*: you need to complete 80% of the assignments before the end of July 2023.

-- *To get a certificate of honors*: you need to complete 100% of the assignments before the end of July 2023.

+- *To get a certificate of completion*: you need to complete 80% of the assignments.

+- *To get a certificate of honors*: you need to complete 100% of the assignments.

+

+Again, there's **no deadline** since the course is self paced. But our advice **is to follow the recommended pace section**.

-## So which channels are interesting to me? [[channels]]

-

-They are in the reinforcement learning lounge. **Don't forget to sign up to these channels** by clicking on 🤖 Reinforcement Learning in `role-assigment`.

+They are in the reinforcement learning category. **Don't forget to sign up to these channels** by clicking on 🤖 Reinforcement Learning in `role-assigment`.

- `rl-announcements`: where we give the **lastest information about the course**.

- `rl-discussions`: where you can **exchange about RL and share information**.

- `rl-study-group`: where you can **ask questions and exchange with your classmates**.

diff --git a/units/en/unit0/introduction.mdx b/units/en/unit0/introduction.mdx

index 07c60faf..fec14228 100644

--- a/units/en/unit0/introduction.mdx

+++ b/units/en/unit0/introduction.mdx

@@ -59,10 +59,11 @@ This is the course's syllabus:

You can choose to follow this course either:

-- *To get a certificate of completion*: you need to complete 80% of the assignments before the end of July 2023.

-- *To get a certificate of honors*: you need to complete 100% of the assignments before the end of July 2023.

-- *As a simple audit*: you can participate in all challenges and do assignments if you want, but you have no deadlines.

+- *To get a certificate of completion*: you need to complete 80% of the assignments.

+- *To get a certificate of honors*: you need to complete 100% of the assignments.

+- *As a simple audit*: you can participate in all challenges and do assignments if you want.

+There's **no deadlines, the course is self-paced**.

Both paths **are completely free**.

Whatever path you choose, we advise you **to follow the recommended pace to enjoy the course and challenges with your fellow classmates.**

@@ -72,8 +73,10 @@ You don't need to tell us which path you choose. **If you get more than 80% of t

The certification process is **completely free**:

-- *To get a certificate of completion*: you need to complete 80% of the assignments before the end of July 2023.

-- *To get a certificate of honors*: you need to complete 100% of the assignments before the end of July 2023.

+- *To get a certificate of completion*: you need to complete 80% of the assignments.

+- *To get a certificate of honors*: you need to complete 100% of the assignments.

+

+Again, there's **no deadline** since the course is self paced. But our advice **is to follow the recommended pace section**.

@@ -100,15 +103,8 @@ You need only 3 things:

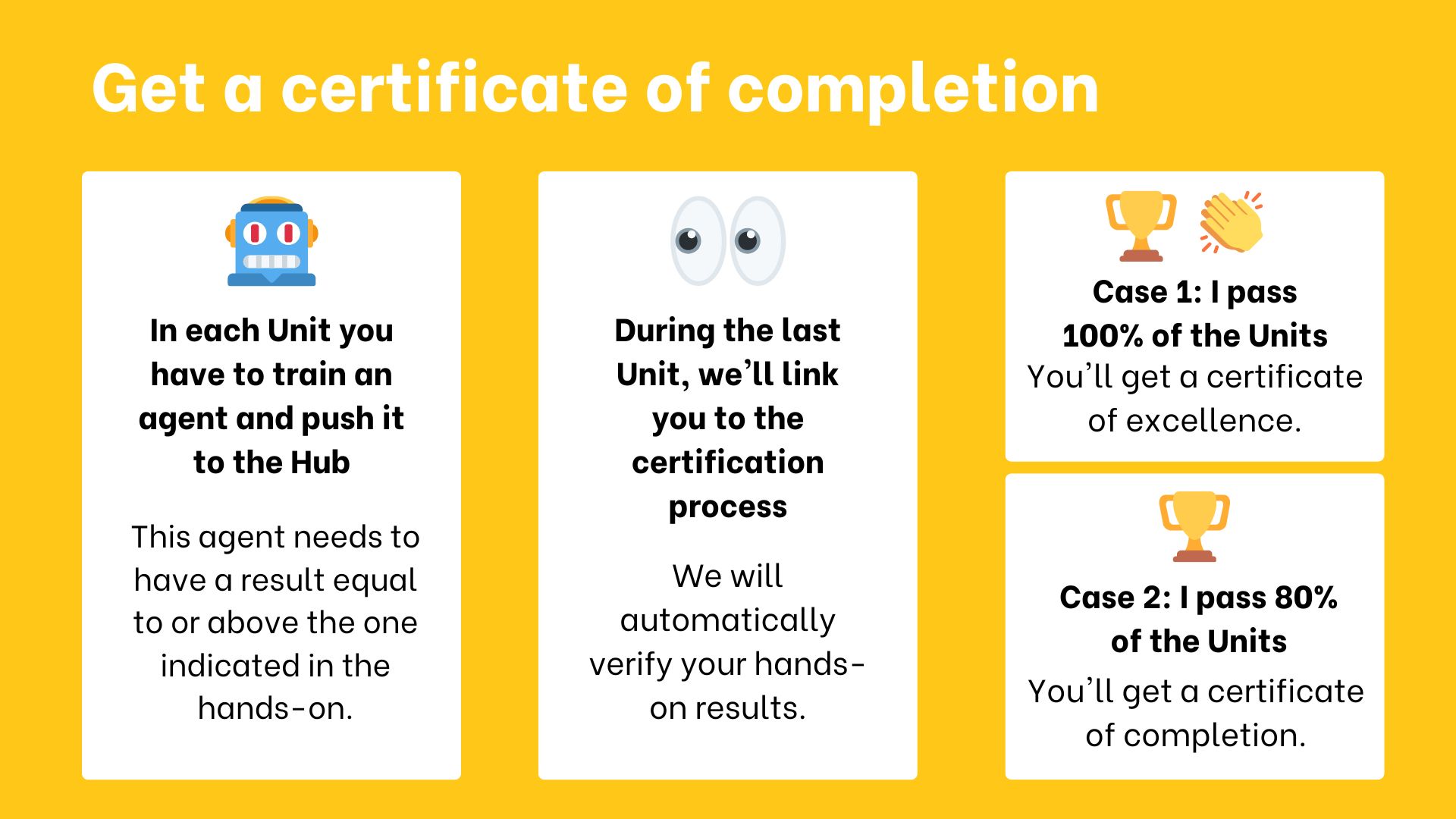

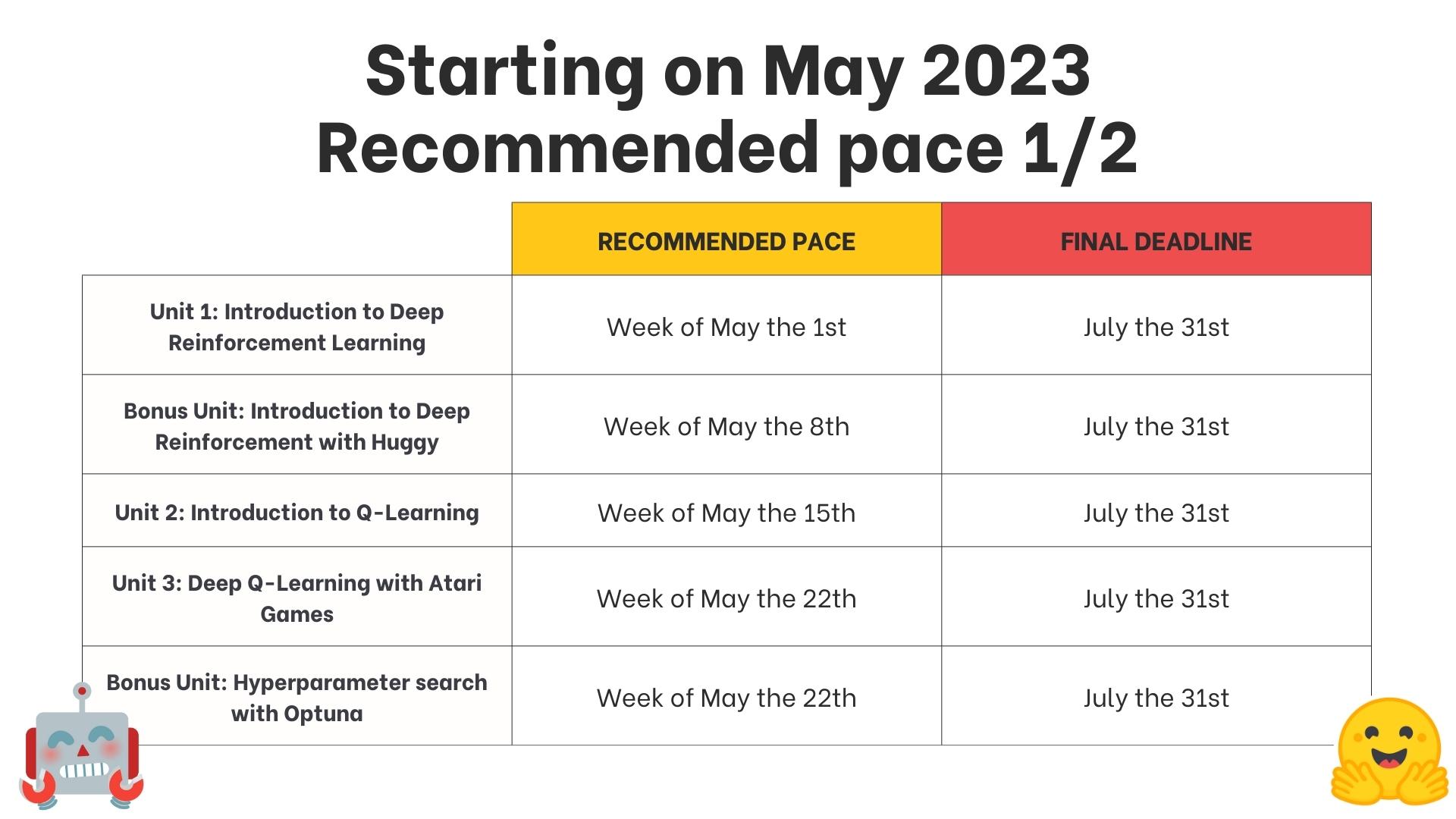

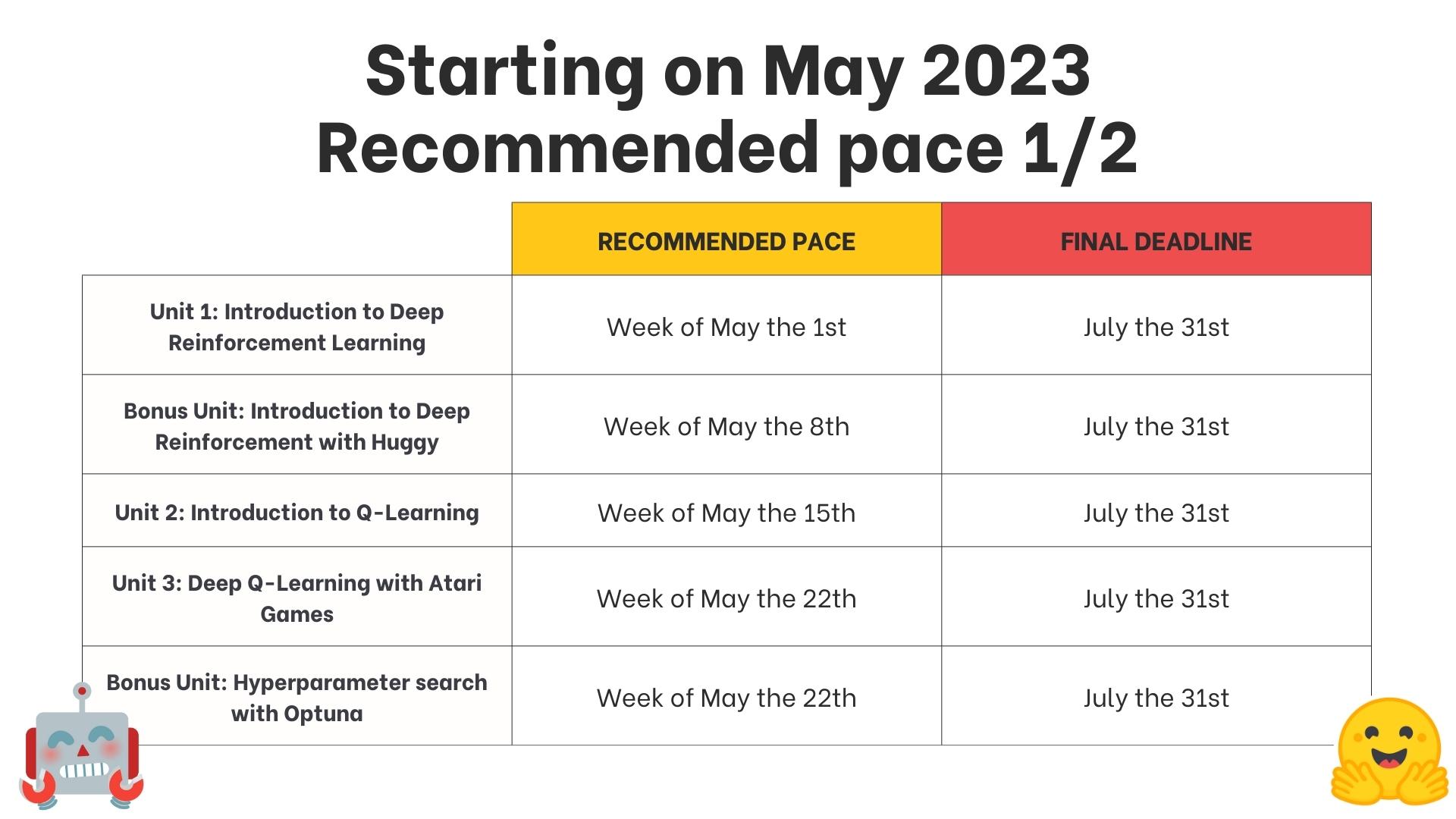

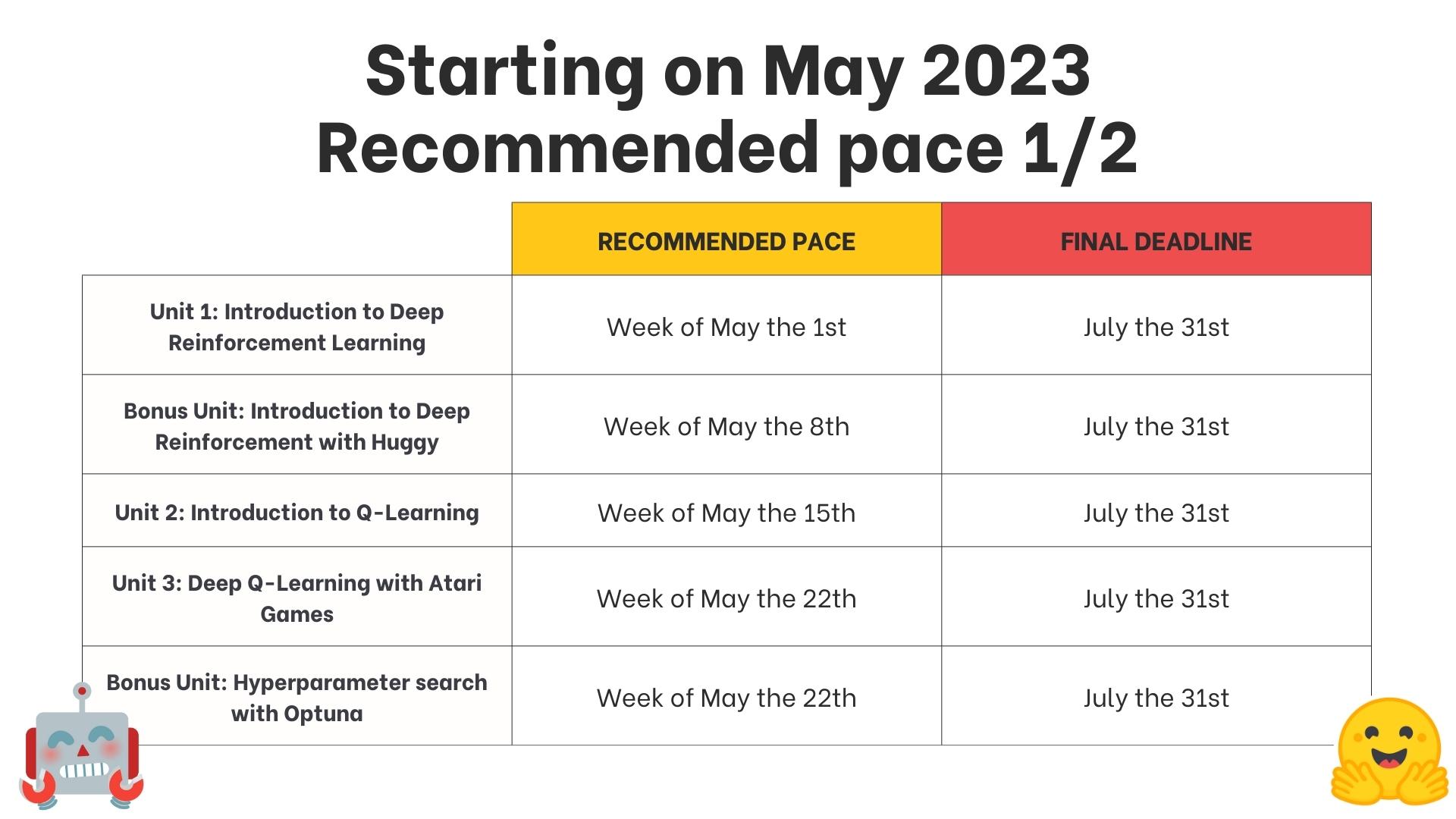

## What is the recommended pace? [[recommended-pace]]

-We defined a plan that you can follow to keep up the pace of the course.

-

-

@@ -100,15 +103,8 @@ You need only 3 things:

## What is the recommended pace? [[recommended-pace]]

-We defined a plan that you can follow to keep up the pace of the course.

-

- -

- -

-

Each chapter in this course is designed **to be completed in 1 week, with approximately 3-4 hours of work per week**. However, you can take as much time as necessary to complete the course. If you want to dive into a topic more in-depth, we'll provide additional resources to help you achieve that.

-

## Who are we [[who-are-we]]

About the author:

@@ -120,7 +116,7 @@ About the team:

- Sayak Paul is a Developer Advocate Engineer at Hugging Face. He's interested in the area of representation learning (self-supervision, semi-supervision, model robustness). And he loves watching crime and action thrillers 🔪.

-## When do the challenges start? [[challenges]]

+## What are the challenges in this course? [[challenges]]

In this new version of the course, you have two types of challenges:

- [A leaderboard](https://huggingface.co/spaces/huggingface-projects/Deep-Reinforcement-Learning-Leaderboard) to compare your agent's performance to other classmates'.

diff --git a/units/en/unit0/setup.mdx b/units/en/unit0/setup.mdx

index 4f6f442e..73572a22 100644

--- a/units/en/unit0/setup.mdx

+++ b/units/en/unit0/setup.mdx

@@ -15,7 +15,7 @@ You can now sign up for our Discord Server. This is the place where you **can ch

👉🏻 Join our discord server here.

-When you join, remember to introduce yourself in #introduce-yourself and sign-up for reinforcement channels in #role-assignments.

+When you join, remember to introduce yourself in #introduce-yourself and sign-up for reinforcement channels in #channels-and-roles.

We have multiple RL-related channels:

- `rl-announcements`: where we give the latest information about the course.

diff --git a/units/en/unit1/hands-on.mdx b/units/en/unit1/hands-on.mdx

index 7661f8c2..3c87f27d 100644

--- a/units/en/unit1/hands-on.mdx

+++ b/units/en/unit1/hands-on.mdx

@@ -5,7 +5,7 @@

@@ -282,7 +282,7 @@ env.close()

## Create the LunarLander environment 🌛 and understand how it works

-### [The environment 🎮](https://gymnasium.farama.org/environments/box2d/lunar_lander/)

+### The environment 🎮

In this first tutorial, we’re going to train our agent, a [Lunar Lander](https://gymnasium.farama.org/environments/box2d/lunar_lander/), **to land correctly on the moon**. To do that, the agent needs to learn **to adapt its speed and position (horizontal, vertical, and angular) to land correctly.**

@@ -315,8 +315,8 @@ We see with `Observation Space Shape (8,)` that the observation is a vector of s

- Vertical speed (y)

- Angle

- Angular speed

-- If the left leg contact point has touched the land

-- If the right leg contact point has touched the land

+- If the left leg contact point has touched the land (boolean)

+- If the right leg contact point has touched the land (boolean)

```python

@@ -433,7 +433,7 @@ model = PPO(

# TODO: Train it for 1,000,000 timesteps

# TODO: Specify file name for model and save the model to file

-model_name = ""

+model_name = "ppo-LunarLander-v2"

```

#### Solution

diff --git a/units/en/unit1/rl-framework.mdx b/units/en/unit1/rl-framework.mdx

index cf155e7d..97453575 100644

--- a/units/en/unit1/rl-framework.mdx

+++ b/units/en/unit1/rl-framework.mdx

@@ -83,11 +83,11 @@ The actions can come from a *discrete* or *continuous space*:

-

-

Each chapter in this course is designed **to be completed in 1 week, with approximately 3-4 hours of work per week**. However, you can take as much time as necessary to complete the course. If you want to dive into a topic more in-depth, we'll provide additional resources to help you achieve that.

-

## Who are we [[who-are-we]]

About the author:

@@ -120,7 +116,7 @@ About the team:

- Sayak Paul is a Developer Advocate Engineer at Hugging Face. He's interested in the area of representation learning (self-supervision, semi-supervision, model robustness). And he loves watching crime and action thrillers 🔪.

-## When do the challenges start? [[challenges]]

+## What are the challenges in this course? [[challenges]]

In this new version of the course, you have two types of challenges:

- [A leaderboard](https://huggingface.co/spaces/huggingface-projects/Deep-Reinforcement-Learning-Leaderboard) to compare your agent's performance to other classmates'.

diff --git a/units/en/unit0/setup.mdx b/units/en/unit0/setup.mdx

index 4f6f442e..73572a22 100644

--- a/units/en/unit0/setup.mdx

+++ b/units/en/unit0/setup.mdx

@@ -15,7 +15,7 @@ You can now sign up for our Discord Server. This is the place where you **can ch

👉🏻 Join our discord server here.

-When you join, remember to introduce yourself in #introduce-yourself and sign-up for reinforcement channels in #role-assignments.

+When you join, remember to introduce yourself in #introduce-yourself and sign-up for reinforcement channels in #channels-and-roles.

We have multiple RL-related channels:

- `rl-announcements`: where we give the latest information about the course.

diff --git a/units/en/unit1/hands-on.mdx b/units/en/unit1/hands-on.mdx

index 7661f8c2..3c87f27d 100644

--- a/units/en/unit1/hands-on.mdx

+++ b/units/en/unit1/hands-on.mdx

@@ -5,7 +5,7 @@

@@ -282,7 +282,7 @@ env.close()

## Create the LunarLander environment 🌛 and understand how it works

-### [The environment 🎮](https://gymnasium.farama.org/environments/box2d/lunar_lander/)

+### The environment 🎮

In this first tutorial, we’re going to train our agent, a [Lunar Lander](https://gymnasium.farama.org/environments/box2d/lunar_lander/), **to land correctly on the moon**. To do that, the agent needs to learn **to adapt its speed and position (horizontal, vertical, and angular) to land correctly.**

@@ -315,8 +315,8 @@ We see with `Observation Space Shape (8,)` that the observation is a vector of s

- Vertical speed (y)

- Angle

- Angular speed

-- If the left leg contact point has touched the land

-- If the right leg contact point has touched the land

+- If the left leg contact point has touched the land (boolean)

+- If the right leg contact point has touched the land (boolean)

```python

@@ -433,7 +433,7 @@ model = PPO(

# TODO: Train it for 1,000,000 timesteps

# TODO: Specify file name for model and save the model to file

-model_name = ""

+model_name = "ppo-LunarLander-v2"

```

#### Solution

diff --git a/units/en/unit1/rl-framework.mdx b/units/en/unit1/rl-framework.mdx

index cf155e7d..97453575 100644

--- a/units/en/unit1/rl-framework.mdx

+++ b/units/en/unit1/rl-framework.mdx

@@ -83,11 +83,11 @@ The actions can come from a *discrete* or *continuous space*:

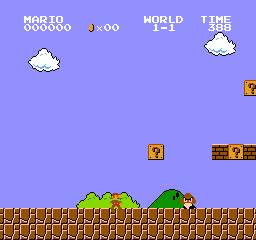

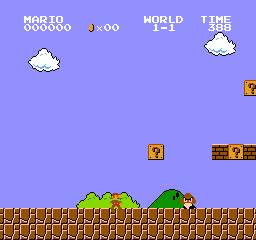

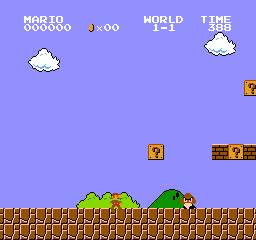

-Again, in Super Mario Bros, we have only 5 possible actions: 4 directions and jumping

+In Super Mario Bros, we have only 4 possible actions: left, right, up (jumping) and down (crouching).

-In Super Mario Bros, we have a finite set of actions since we have only 4 directions and jump.

+Again, in Super Mario Bros, we have a finite set of actions since we have only 4 directions.

- *Continuous space*: the number of possible actions is **infinite**.

diff --git a/units/en/unit1/two-methods.mdx b/units/en/unit1/two-methods.mdx

index 44ce264f..fcfc04ad 100644

--- a/units/en/unit1/two-methods.mdx

+++ b/units/en/unit1/two-methods.mdx

@@ -54,7 +54,7 @@ We have two types of policies:

-

-Again, in Super Mario Bros, we have only 5 possible actions: 4 directions and jumping

+In Super Mario Bros, we have only 4 possible actions: left, right, up (jumping) and down (crouching).

-In Super Mario Bros, we have a finite set of actions since we have only 4 directions and jump.

+Again, in Super Mario Bros, we have a finite set of actions since we have only 4 directions.

- *Continuous space*: the number of possible actions is **infinite**.

diff --git a/units/en/unit1/two-methods.mdx b/units/en/unit1/two-methods.mdx

index 44ce264f..fcfc04ad 100644

--- a/units/en/unit1/two-methods.mdx

+++ b/units/en/unit1/two-methods.mdx

@@ -54,7 +54,7 @@ We have two types of policies:

- +

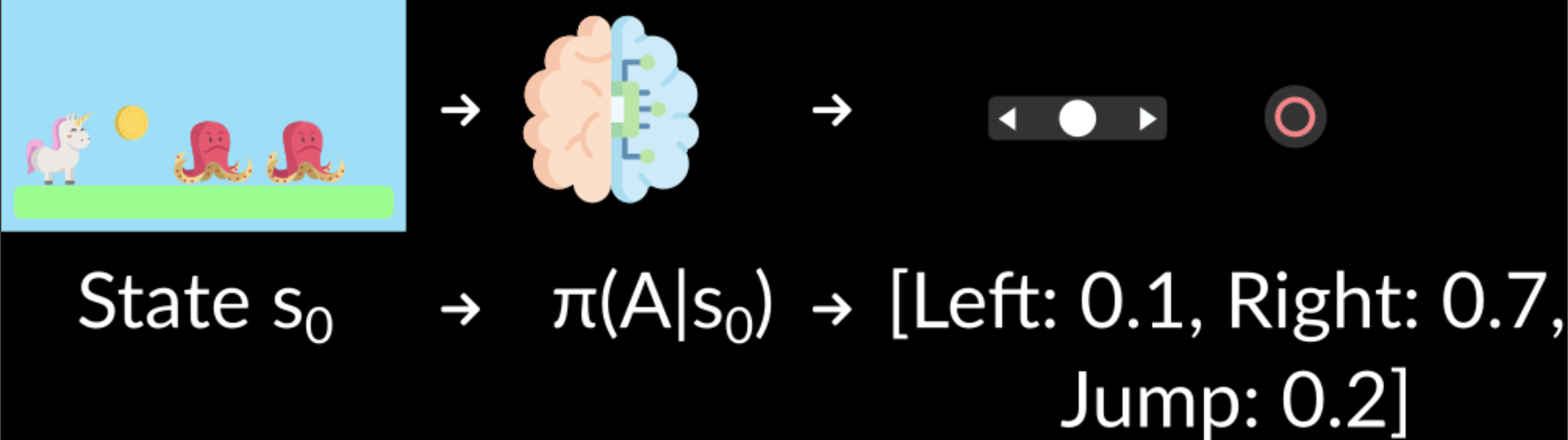

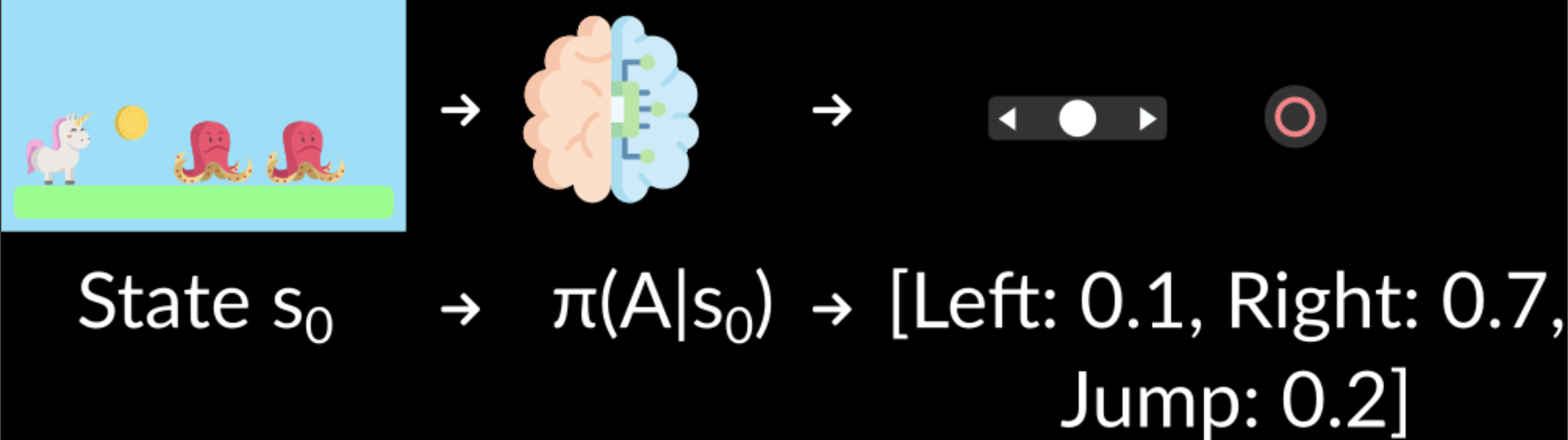

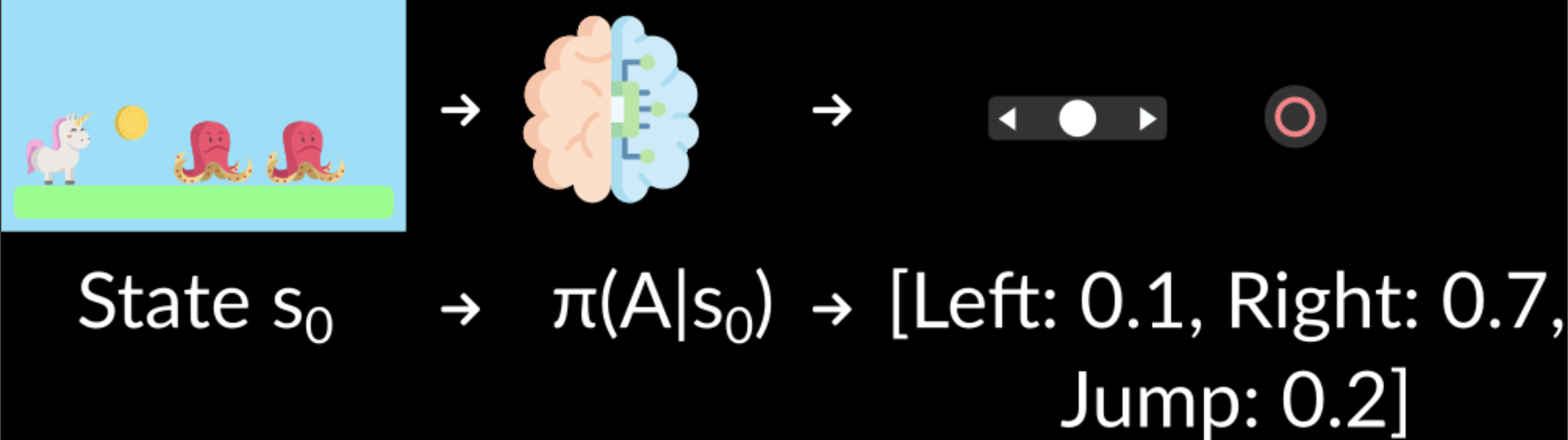

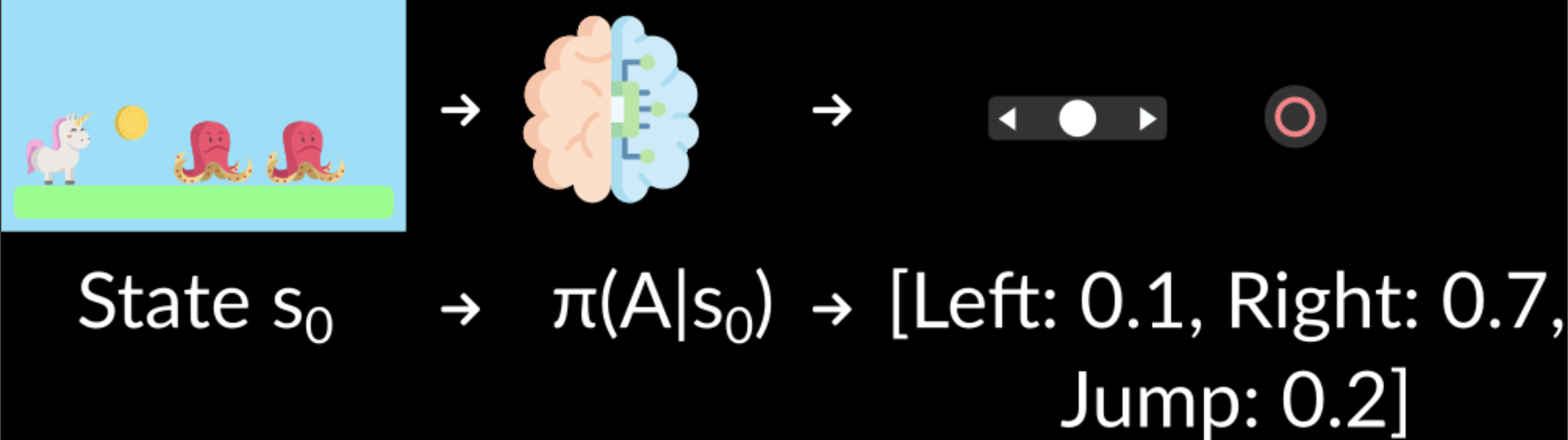

+ Given an initial state, our stochastic policy will output probability distributions over the possible actions at that state.

diff --git a/units/en/unit2/glossary.mdx b/units/en/unit2/glossary.mdx

index 879931e4..f76ea52e 100644

--- a/units/en/unit2/glossary.mdx

+++ b/units/en/unit2/glossary.mdx

@@ -11,7 +11,7 @@ This is a community-created glossary. Contributions are welcomed!

### Among the value-based methods, we can find two main strategies

- **The state-value function.** For each state, the state-value function is the expected return if the agent starts in that state and follows the policy until the end.

-- **The action-value function.** In contrast to the state-value function, the action-value calculates for each state and action pair the expected return if the agent starts in that state and takes an action. Then it follows the policy forever after.

+- **The action-value function.** In contrast to the state-value function, the action-value calculates for each state and action pair the expected return if the agent starts in that state, takes that action, and then follows the policy forever after.

### Epsilon-greedy strategy:

@@ -32,6 +32,12 @@ This is a community-created glossary. Contributions are welcomed!

- **Off-policy algorithms:** A different policy is used at training time and inference time

- **On-policy algorithms:** The same policy is used during training and inference

+### Monte Carlo and Temporal Difference learning strategies

+

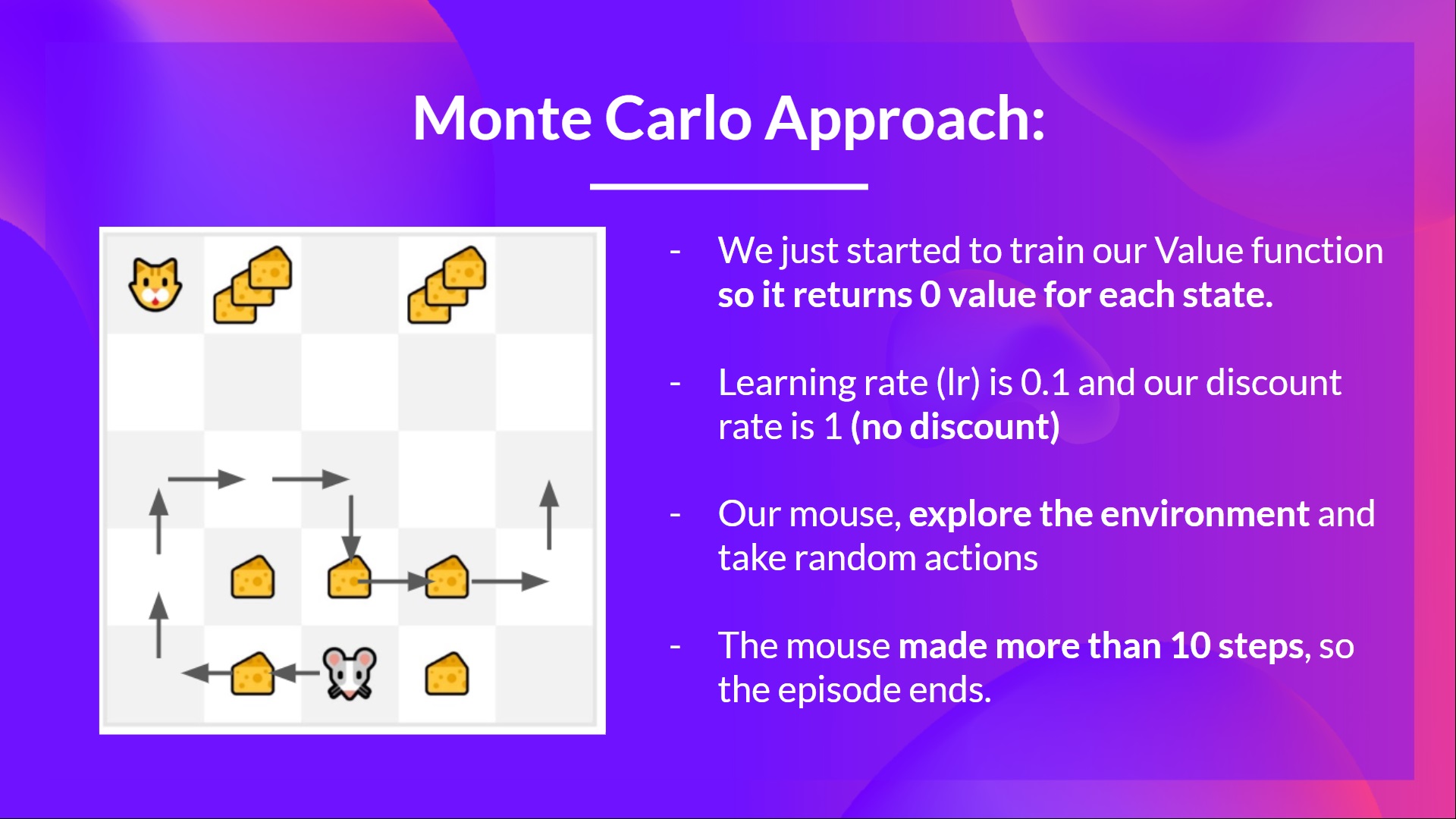

+- **Monte Carlo (MC):** Learning at the end of the episode. With Monte Carlo, we wait until the episode ends and then we update the value function (or policy function) from a complete episode.

+

+- **Temporal Difference (TD):** Learning at each step. With Temporal Difference Learning, we update the value function (or policy function) at each step without requiring a complete episode.

+

If you want to improve the course, you can [open a Pull Request.](https://github.com/huggingface/deep-rl-class/pulls)

This glossary was made possible thanks to:

diff --git a/units/en/unit2/hands-on.mdx b/units/en/unit2/hands-on.mdx

index f8dd666a..f55cc13e 100644

--- a/units/en/unit2/hands-on.mdx

+++ b/units/en/unit2/hands-on.mdx

@@ -2,7 +2,7 @@

@@ -93,16 +93,16 @@ Before diving into the notebook, you need to:

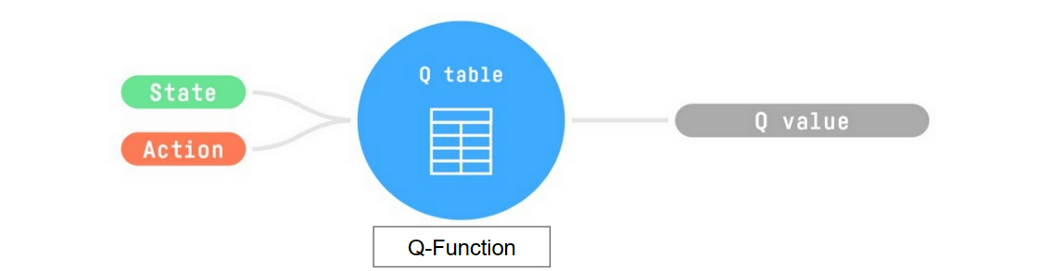

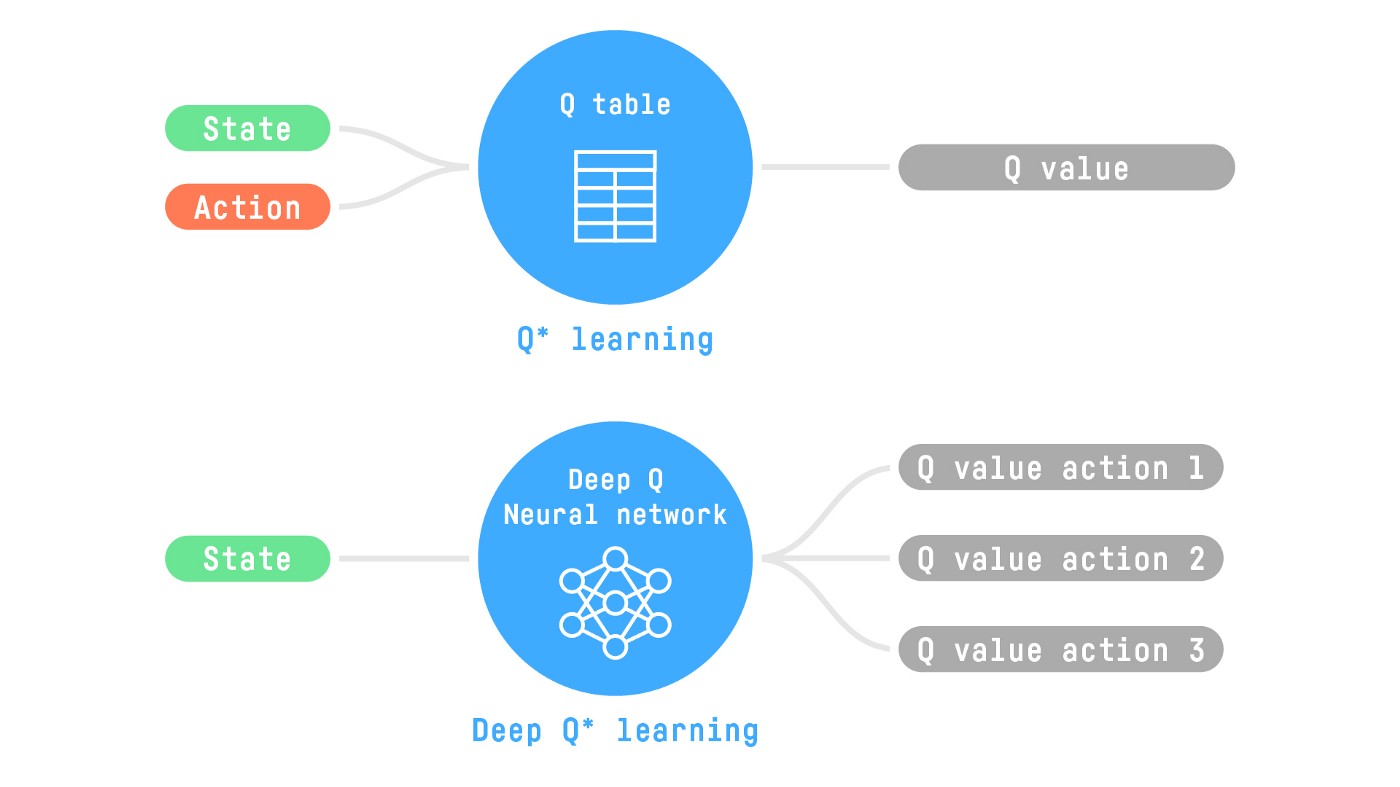

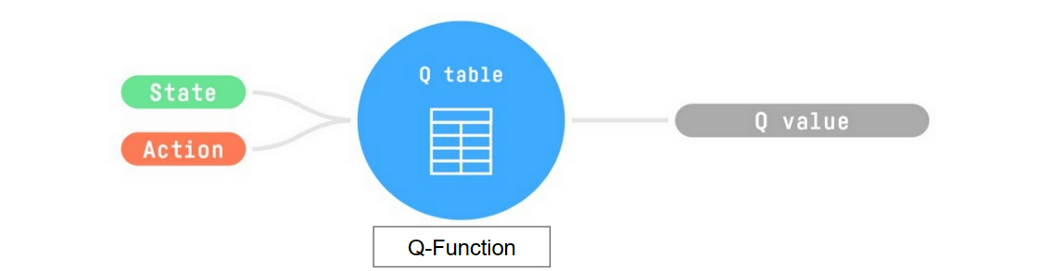

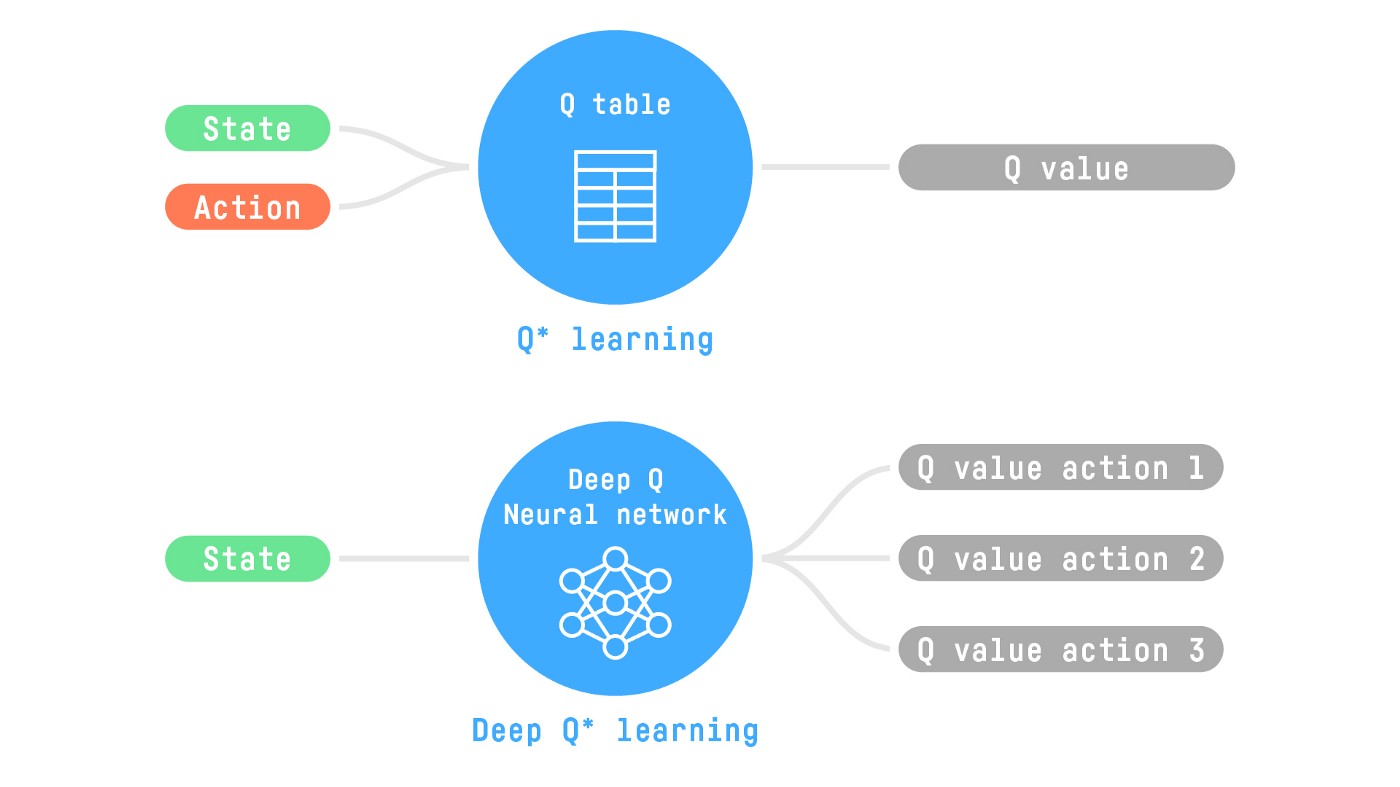

*Q-Learning* **is the RL algorithm that**:

-- Trains *Q-Function*, an **action-value function** that encoded, in internal memory, by a *Q-table* **that contains all the state-action pair values.**

+- Trains *Q-Function*, an **action-value function** that is encoded, in internal memory, by a *Q-table* **that contains all the state-action pair values.**

- Given a state and action, our Q-Function **will search the Q-table for the corresponding value.**

Given an initial state, our stochastic policy will output probability distributions over the possible actions at that state.

diff --git a/units/en/unit2/glossary.mdx b/units/en/unit2/glossary.mdx

index 879931e4..f76ea52e 100644

--- a/units/en/unit2/glossary.mdx

+++ b/units/en/unit2/glossary.mdx

@@ -11,7 +11,7 @@ This is a community-created glossary. Contributions are welcomed!

### Among the value-based methods, we can find two main strategies

- **The state-value function.** For each state, the state-value function is the expected return if the agent starts in that state and follows the policy until the end.

-- **The action-value function.** In contrast to the state-value function, the action-value calculates for each state and action pair the expected return if the agent starts in that state and takes an action. Then it follows the policy forever after.

+- **The action-value function.** In contrast to the state-value function, the action-value calculates for each state and action pair the expected return if the agent starts in that state, takes that action, and then follows the policy forever after.

### Epsilon-greedy strategy:

@@ -32,6 +32,12 @@ This is a community-created glossary. Contributions are welcomed!

- **Off-policy algorithms:** A different policy is used at training time and inference time

- **On-policy algorithms:** The same policy is used during training and inference

+### Monte Carlo and Temporal Difference learning strategies

+

+- **Monte Carlo (MC):** Learning at the end of the episode. With Monte Carlo, we wait until the episode ends and then we update the value function (or policy function) from a complete episode.

+

+- **Temporal Difference (TD):** Learning at each step. With Temporal Difference Learning, we update the value function (or policy function) at each step without requiring a complete episode.

+

If you want to improve the course, you can [open a Pull Request.](https://github.com/huggingface/deep-rl-class/pulls)

This glossary was made possible thanks to:

diff --git a/units/en/unit2/hands-on.mdx b/units/en/unit2/hands-on.mdx

index f8dd666a..f55cc13e 100644

--- a/units/en/unit2/hands-on.mdx

+++ b/units/en/unit2/hands-on.mdx

@@ -2,7 +2,7 @@

@@ -93,16 +93,16 @@ Before diving into the notebook, you need to:

*Q-Learning* **is the RL algorithm that**:

-- Trains *Q-Function*, an **action-value function** that encoded, in internal memory, by a *Q-table* **that contains all the state-action pair values.**

+- Trains *Q-Function*, an **action-value function** that is encoded, in internal memory, by a *Q-table* **that contains all the state-action pair values.**

- Given a state and action, our Q-Function **will search the Q-table for the corresponding value.**

-- When the training is done,**we have an optimal Q-Function, so an optimal Q-Table.**

+- When the training is done, **we have an optimal Q-Function, so an optimal Q-Table.**

- And if we **have an optimal Q-function**, we

-have an optimal policy, since we **know for, each state, the best action to take.**

+have an optimal policy, since we **know for each state, the best action to take.**

-- When the training is done,**we have an optimal Q-Function, so an optimal Q-Table.**

+- When the training is done, **we have an optimal Q-Function, so an optimal Q-Table.**

- And if we **have an optimal Q-function**, we

-have an optimal policy, since we **know for, each state, the best action to take.**

+have an optimal policy, since we **know for each state, the best action to take.**

@@ -146,7 +146,8 @@ pip install -r https://raw.githubusercontent.com/huggingface/deep-rl-class/main/

```bash

sudo apt-get update

-apt install python-opengl ffmpeg xvfb

+sudo apt-get install -y python3-opengl

+apt install ffmpeg xvfb

pip3 install pyvirtualdisplay

```

@@ -246,7 +247,7 @@ print("Observation Space", env.observation_space)

print("Sample observation", env.observation_space.sample()) # Get a random observation

```

-We see with `Observation Space Shape Discrete(16)` that the observation is an integer representing the **agent’s current position as current_row * nrows + current_col (where both the row and col start at 0)**.

+We see with `Observation Space Shape Discrete(16)` that the observation is an integer representing the **agent’s current position as current_row * ncols + current_col (where both the row and col start at 0)**.

For example, the goal position in the 4x4 map can be calculated as follows: 3 * 4 + 3 = 15. The number of possible observations is dependent on the size of the map. **For example, the 4x4 map has 16 possible observations.**

@@ -352,7 +353,7 @@ def greedy_policy(Qtable, state):

return action

```

-##Define the epsilon-greedy policy 🤖

+## Define the epsilon-greedy policy 🤖

Epsilon-greedy is the training policy that handles the exploration/exploitation trade-off.

@@ -388,9 +389,9 @@ def epsilon_greedy_policy(Qtable, state, epsilon):

```python

def epsilon_greedy_policy(Qtable, state, epsilon):

# Randomly generate a number between 0 and 1

- random_int = random.uniform(0, 1)

- # if random_int > greater than epsilon --> exploitation

- if random_int > epsilon:

+ random_num = random.uniform(0, 1)

+ # if random_num > greater than epsilon --> exploitation

+ if random_num > epsilon:

# Take the action with the highest value given a state

# np.argmax can be useful here

action = greedy_policy(Qtable, state)

@@ -716,13 +717,10 @@ def push_to_hub(repo_id, model, env, video_fps=1, local_repo_path="hub"):

## Usage

- ```python

-

model = load_from_hub(repo_id="{repo_id}", filename="q-learning.pkl")

# Don't forget to check if you need to add additional attributes (is_slippery=False etc)

env = gym.make(model["env_id"])

- ```

"""

evaluate_agent(env, model["max_steps"], model["n_eval_episodes"], model["qtable"], model["eval_seed"])

diff --git a/units/en/unit2/mc-vs-td.mdx b/units/en/unit2/mc-vs-td.mdx

index 78ef297c..ddc97e8c 100644

--- a/units/en/unit2/mc-vs-td.mdx

+++ b/units/en/unit2/mc-vs-td.mdx

@@ -57,18 +57,26 @@ For instance, if we train a state-value function using Monte Carlo:

@@ -146,7 +146,8 @@ pip install -r https://raw.githubusercontent.com/huggingface/deep-rl-class/main/

```bash

sudo apt-get update

-apt install python-opengl ffmpeg xvfb

+sudo apt-get install -y python3-opengl

+apt install ffmpeg xvfb

pip3 install pyvirtualdisplay

```

@@ -246,7 +247,7 @@ print("Observation Space", env.observation_space)

print("Sample observation", env.observation_space.sample()) # Get a random observation

```

-We see with `Observation Space Shape Discrete(16)` that the observation is an integer representing the **agent’s current position as current_row * nrows + current_col (where both the row and col start at 0)**.

+We see with `Observation Space Shape Discrete(16)` that the observation is an integer representing the **agent’s current position as current_row * ncols + current_col (where both the row and col start at 0)**.

For example, the goal position in the 4x4 map can be calculated as follows: 3 * 4 + 3 = 15. The number of possible observations is dependent on the size of the map. **For example, the 4x4 map has 16 possible observations.**

@@ -352,7 +353,7 @@ def greedy_policy(Qtable, state):

return action

```

-##Define the epsilon-greedy policy 🤖

+## Define the epsilon-greedy policy 🤖

Epsilon-greedy is the training policy that handles the exploration/exploitation trade-off.

@@ -388,9 +389,9 @@ def epsilon_greedy_policy(Qtable, state, epsilon):

```python

def epsilon_greedy_policy(Qtable, state, epsilon):

# Randomly generate a number between 0 and 1

- random_int = random.uniform(0, 1)

- # if random_int > greater than epsilon --> exploitation

- if random_int > epsilon:

+ random_num = random.uniform(0, 1)

+ # if random_num > greater than epsilon --> exploitation

+ if random_num > epsilon:

# Take the action with the highest value given a state

# np.argmax can be useful here

action = greedy_policy(Qtable, state)

@@ -716,13 +717,10 @@ def push_to_hub(repo_id, model, env, video_fps=1, local_repo_path="hub"):

## Usage

- ```python

-

model = load_from_hub(repo_id="{repo_id}", filename="q-learning.pkl")

# Don't forget to check if you need to add additional attributes (is_slippery=False etc)

env = gym.make(model["env_id"])

- ```

"""

evaluate_agent(env, model["max_steps"], model["n_eval_episodes"], model["qtable"], model["eval_seed"])

diff --git a/units/en/unit2/mc-vs-td.mdx b/units/en/unit2/mc-vs-td.mdx

index 78ef297c..ddc97e8c 100644

--- a/units/en/unit2/mc-vs-td.mdx

+++ b/units/en/unit2/mc-vs-td.mdx

@@ -57,18 +57,26 @@ For instance, if we train a state-value function using Monte Carlo:

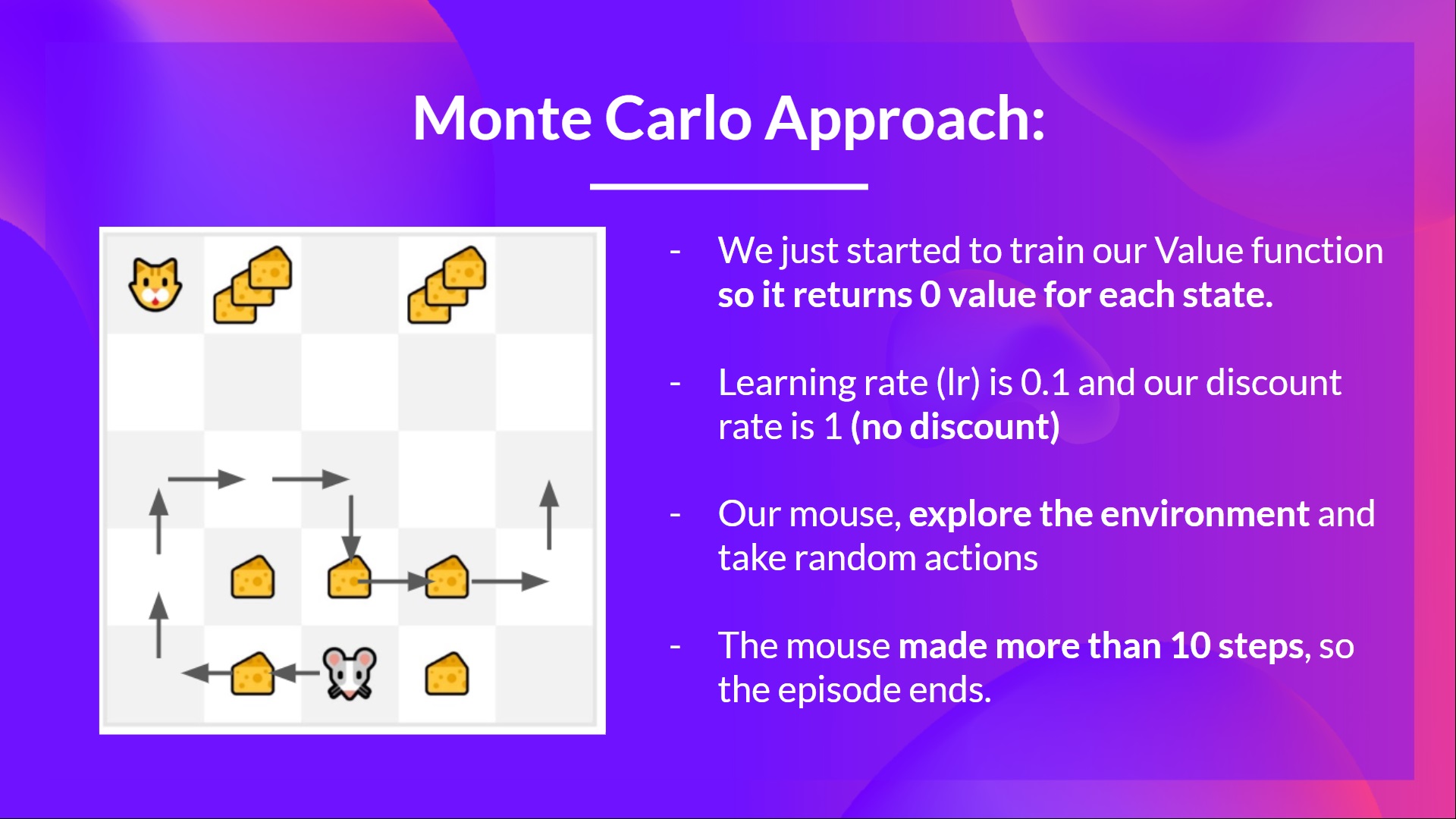

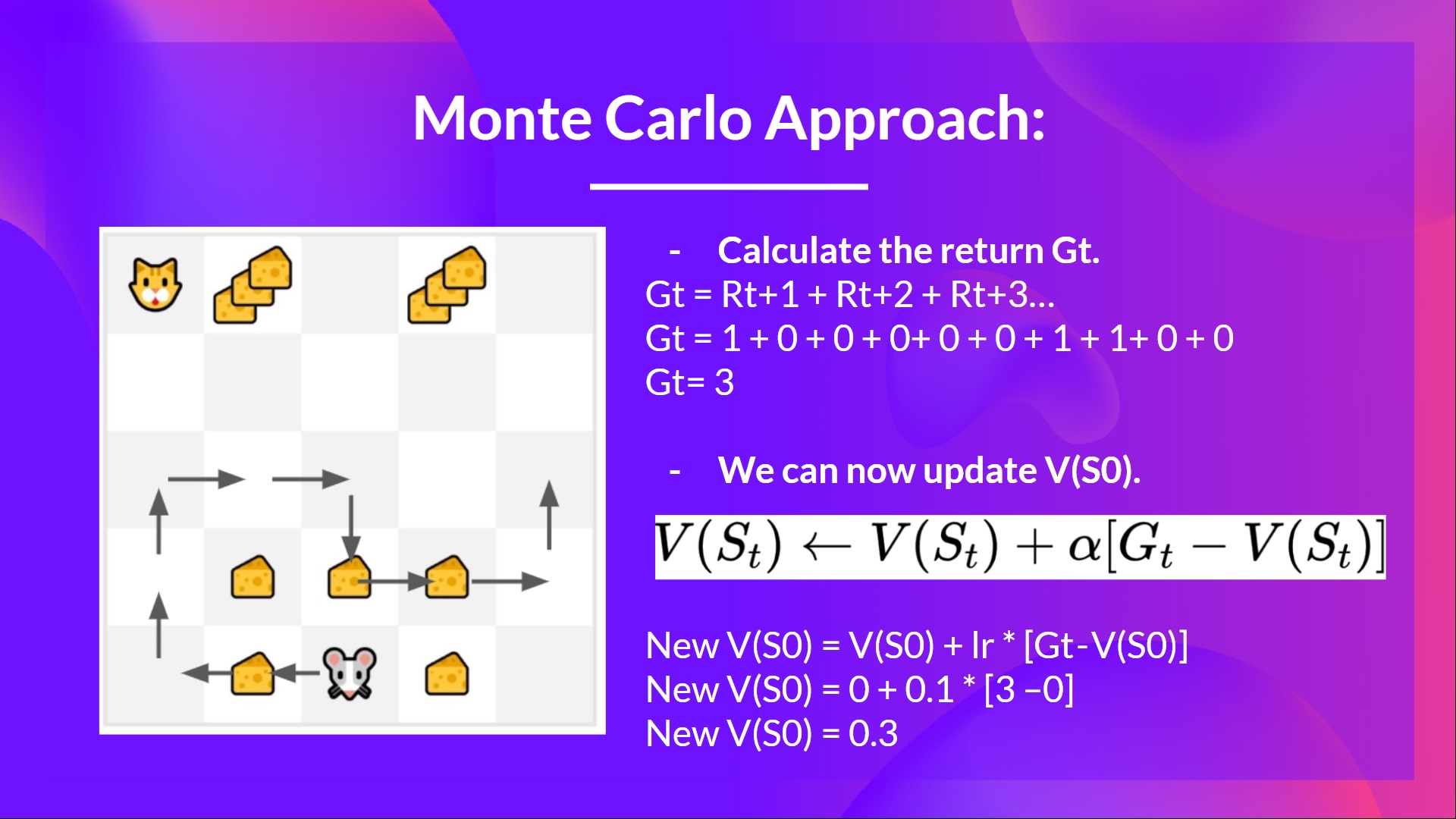

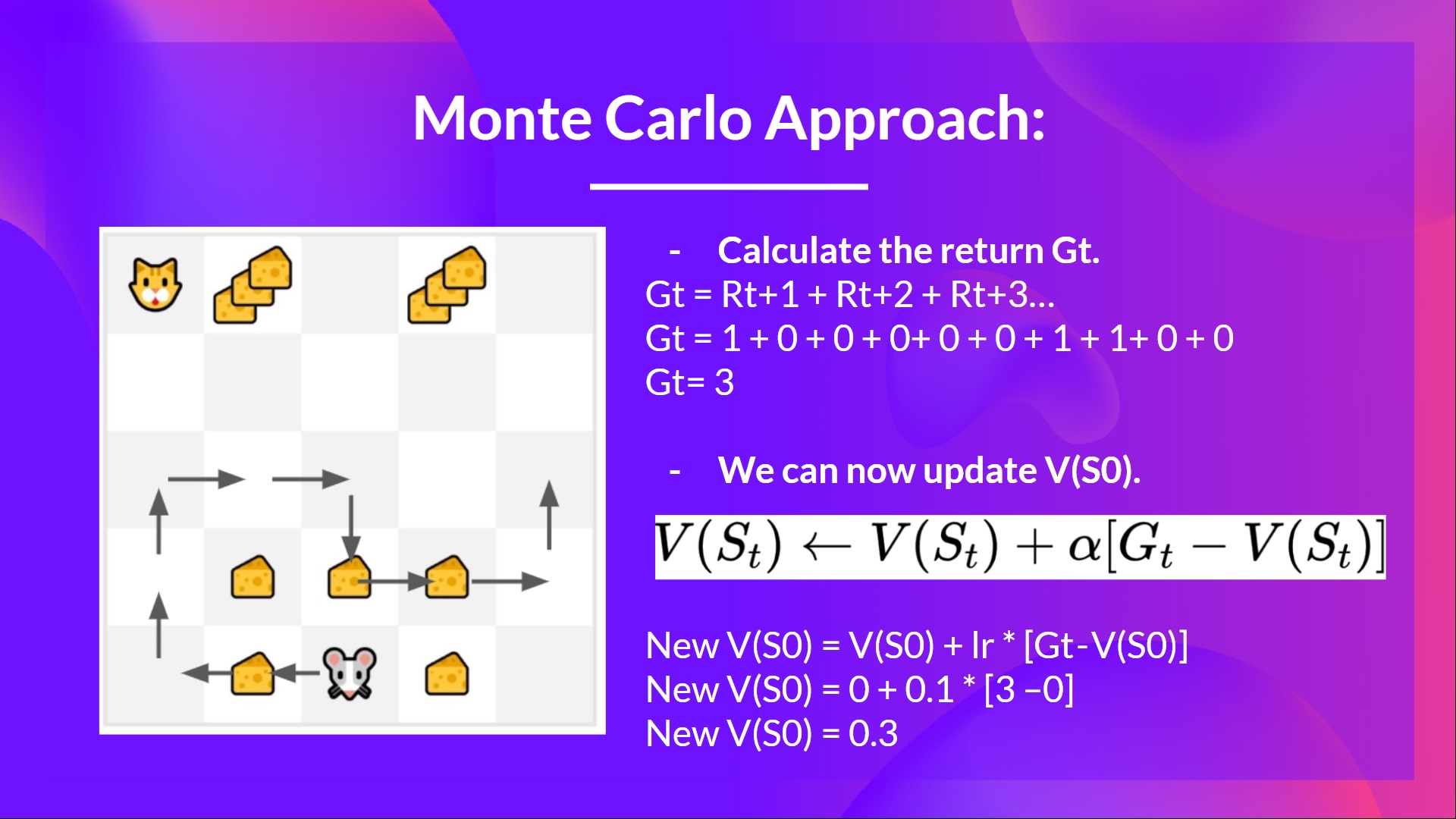

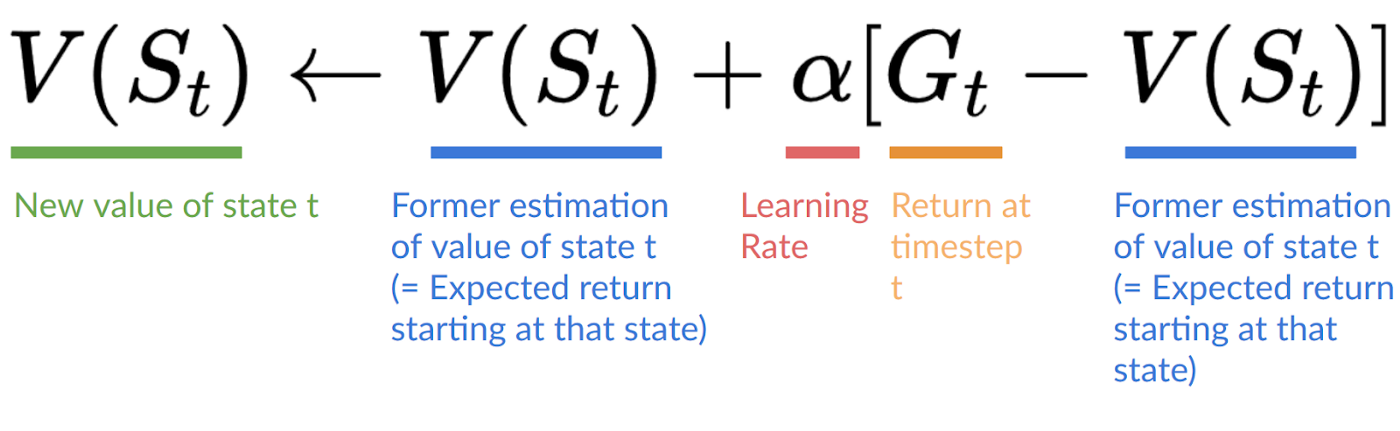

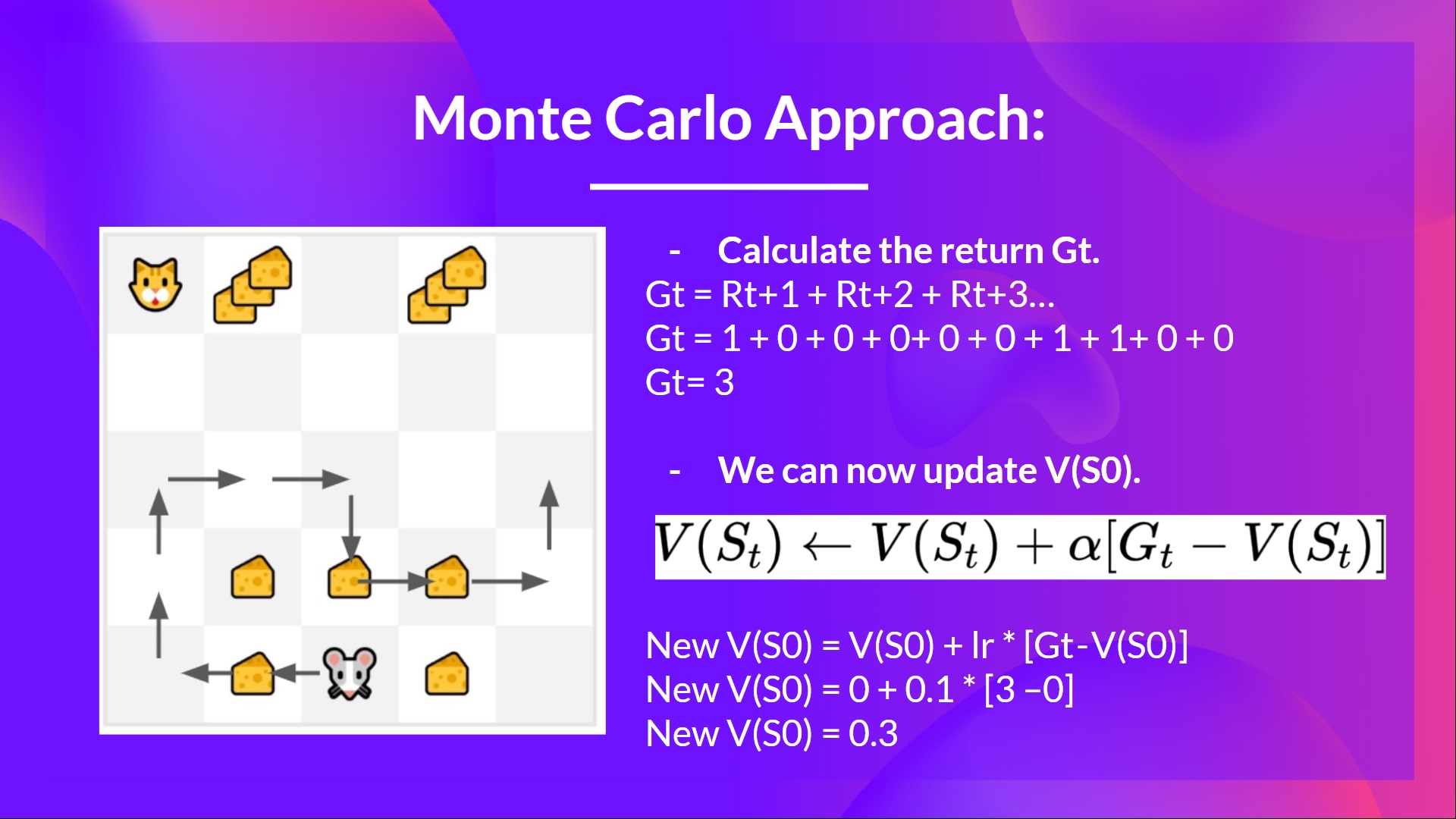

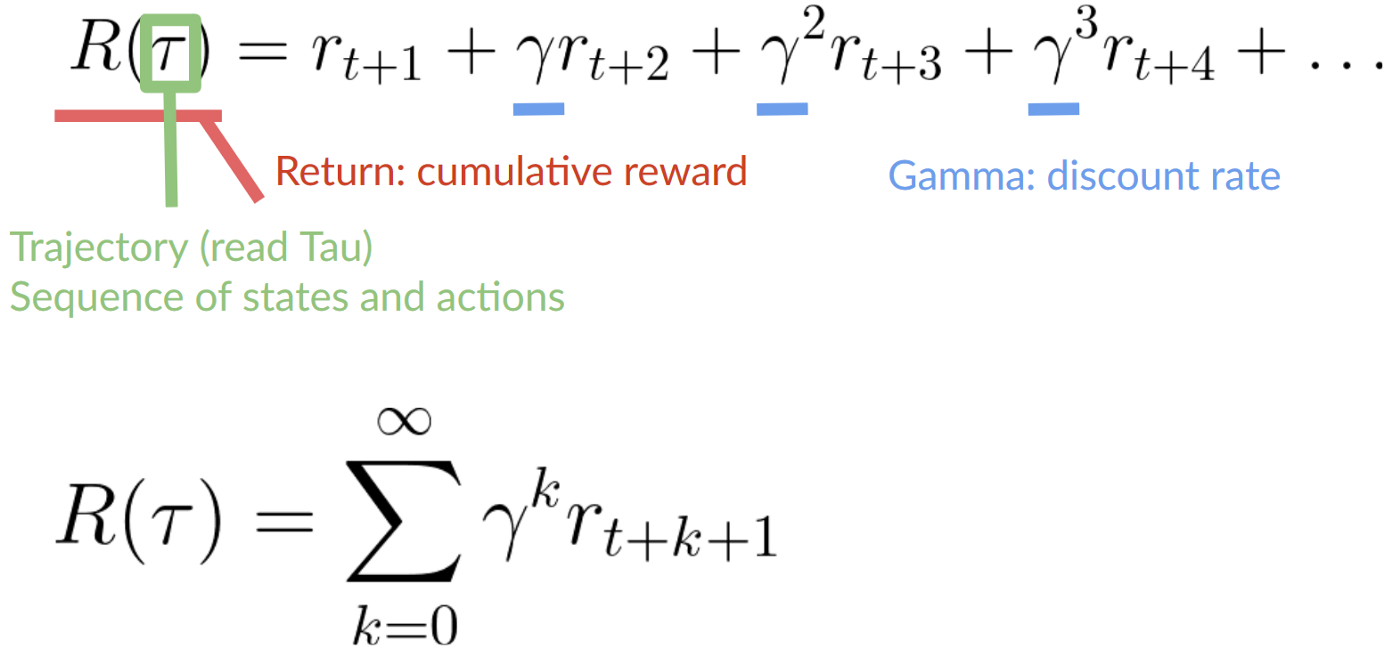

-- We have a list of state, action, rewards, next_state, **we need to calculate the return \\(G{t}\\)**

-- \\(G_t = R_{t+1} + R_{t+2} + R_{t+3} ...\\)

-- \\(G_t = R_{t+1} + R_{t+2} + R_{t+3}…\\) (for simplicity we don’t discount the rewards).

-- \\(G_t = 1 + 0 + 0 + 0+ 0 + 0 + 1 + 1 + 0 + 0\\)

-- \\(G_t= 3\\)

-- We can now update \\(V(S_0)\\):

+

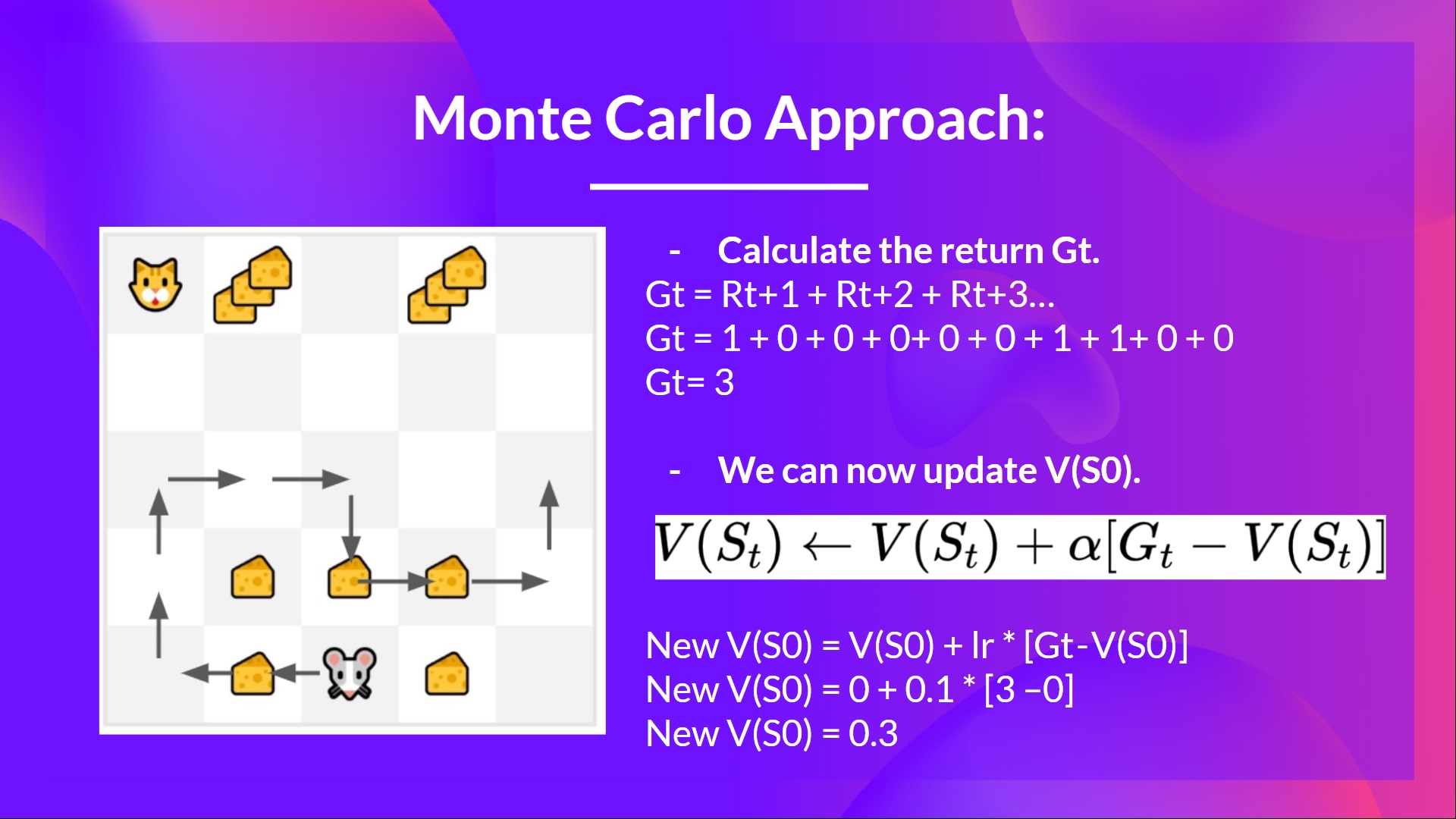

+- We have a list of state, action, rewards, next_state, **we need to calculate the return \\(G{t=0}\\)**

+

+\\(G_t = R_{t+1} + R_{t+2} + R_{t+3} ...\\) (for simplicity, we don't discount the rewards)

+

+\\(G_0 = R_{1} + R_{2} + R_{3}…\\)

+

+\\(G_0 = 1 + 0 + 0 + 0 + 0 + 0 + 1 + 1 + 0 + 0\\)

+

+\\(G_0 = 3\\)

+

+- We can now compute the **new** \\(V(S_0)\\):

-- We have a list of state, action, rewards, next_state, **we need to calculate the return \\(G{t}\\)**

-- \\(G_t = R_{t+1} + R_{t+2} + R_{t+3} ...\\)

-- \\(G_t = R_{t+1} + R_{t+2} + R_{t+3}…\\) (for simplicity we don’t discount the rewards).

-- \\(G_t = 1 + 0 + 0 + 0+ 0 + 0 + 1 + 1 + 0 + 0\\)

-- \\(G_t= 3\\)

-- We can now update \\(V(S_0)\\):

+

+- We have a list of state, action, rewards, next_state, **we need to calculate the return \\(G{t=0}\\)**

+

+\\(G_t = R_{t+1} + R_{t+2} + R_{t+3} ...\\) (for simplicity, we don't discount the rewards)

+

+\\(G_0 = R_{1} + R_{2} + R_{3}…\\)

+

+\\(G_0 = 1 + 0 + 0 + 0 + 0 + 0 + 1 + 1 + 0 + 0\\)

+

+\\(G_0 = 3\\)

+

+- We can now compute the **new** \\(V(S_0)\\):

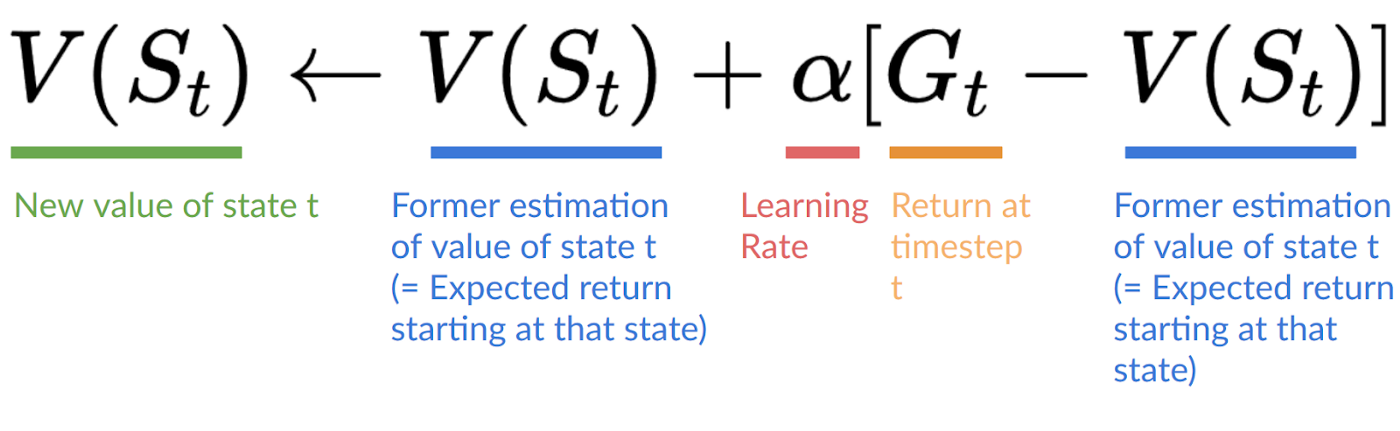

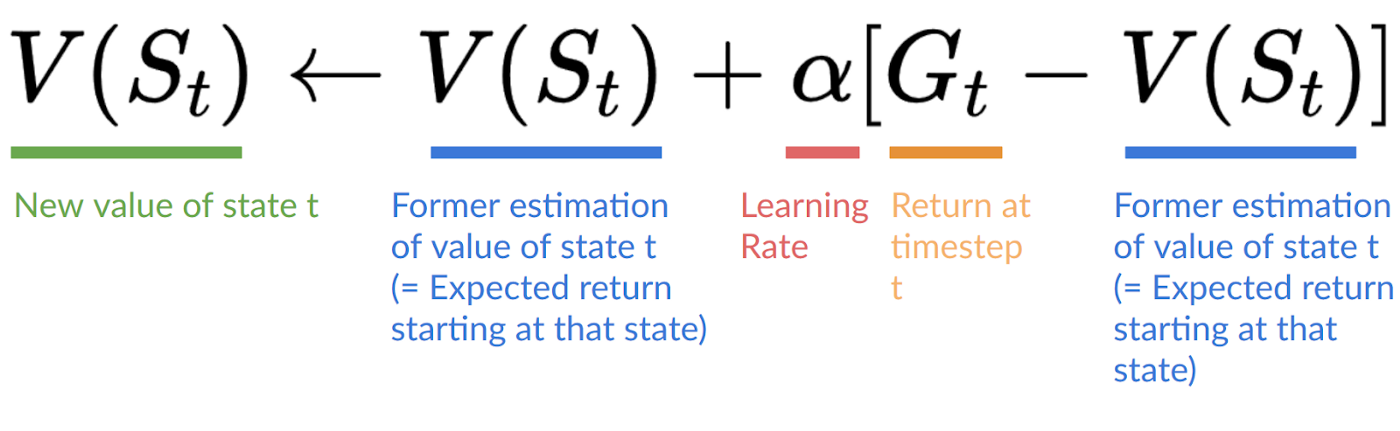

-- New \\(V(S_0) = V(S_0) + lr * [G_t — V(S_0)]\\)

-- New \\(V(S_0) = 0 + 0.1 * [3 – 0]\\)

-- New \\(V(S_0) = 0.3\\)

+\\(V(S_0) = V(S_0) + lr * [G_0 — V(S_0)]\\)

+

+\\(V(S_0) = 0 + 0.1 * [3 – 0]\\)

+

+\\(V(S_0) = 0.3\\)

-- New \\(V(S_0) = V(S_0) + lr * [G_t — V(S_0)]\\)

-- New \\(V(S_0) = 0 + 0.1 * [3 – 0]\\)

-- New \\(V(S_0) = 0.3\\)

+\\(V(S_0) = V(S_0) + lr * [G_0 — V(S_0)]\\)

+

+\\(V(S_0) = 0 + 0.1 * [3 – 0]\\)

+

+\\(V(S_0) = 0.3\\)

diff --git a/units/en/unit2/q-learning.mdx b/units/en/unit2/q-learning.mdx

index ec321720..1ff84565 100644

--- a/units/en/unit2/q-learning.mdx

+++ b/units/en/unit2/q-learning.mdx

@@ -27,7 +27,8 @@ Let's go through an example of a maze.

diff --git a/units/en/unit2/q-learning.mdx b/units/en/unit2/q-learning.mdx

index ec321720..1ff84565 100644

--- a/units/en/unit2/q-learning.mdx

+++ b/units/en/unit2/q-learning.mdx

@@ -27,7 +27,8 @@ Let's go through an example of a maze.

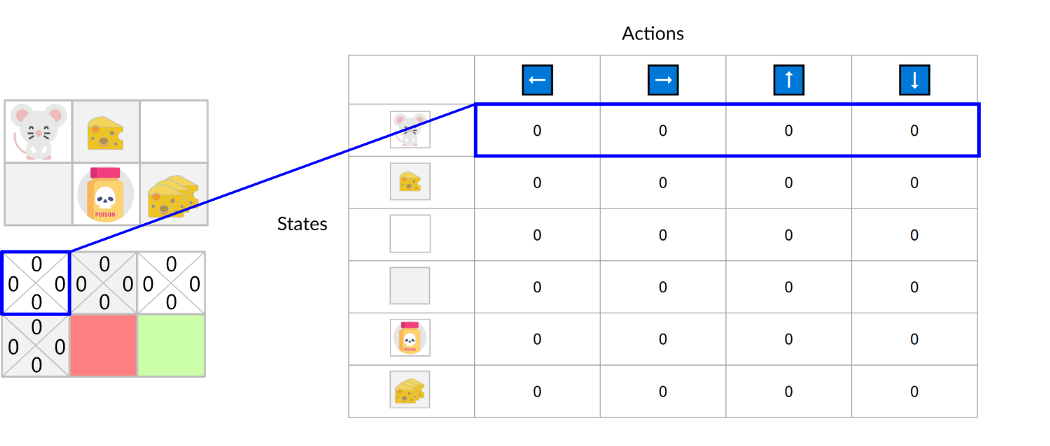

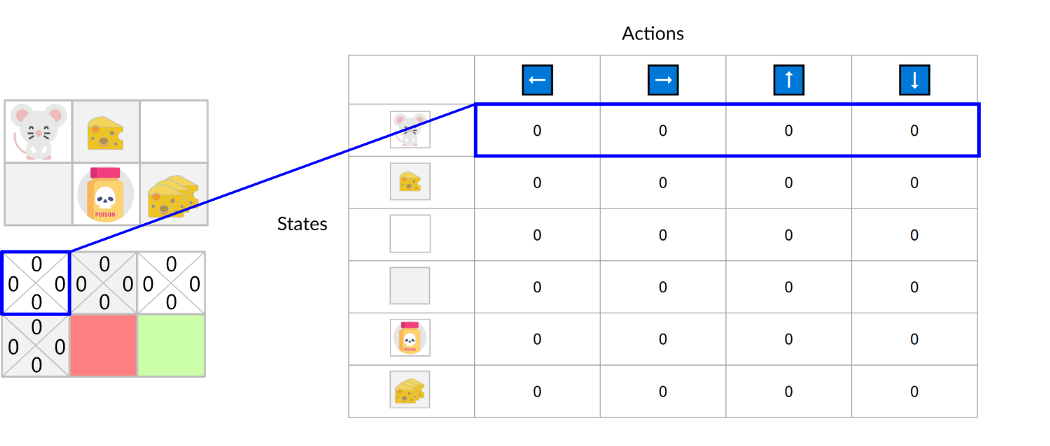

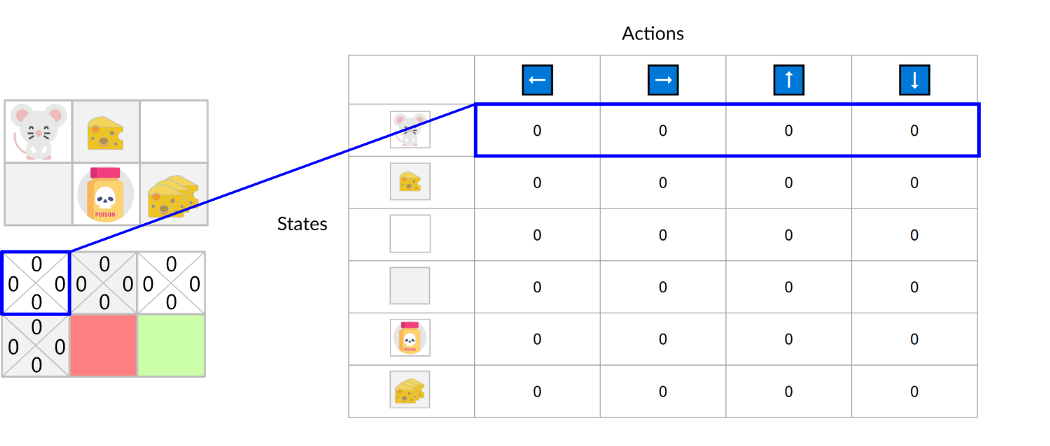

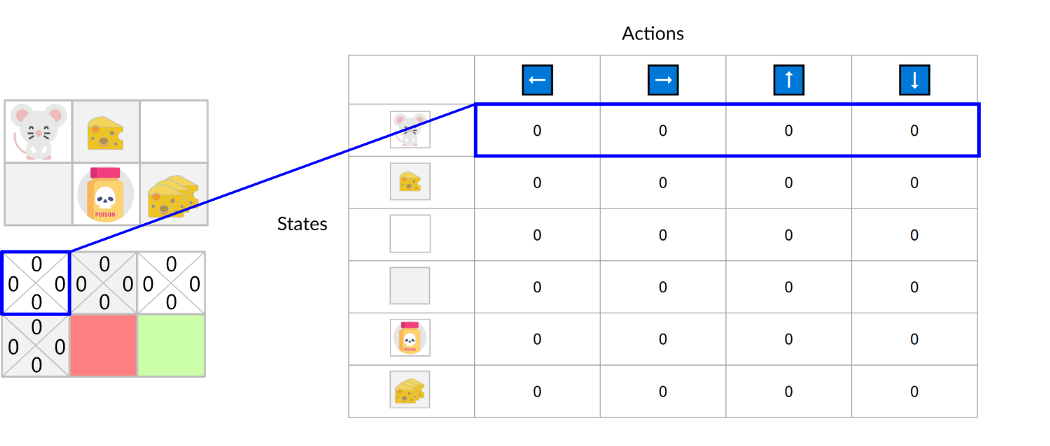

-The Q-table is initialized. That's why all values are = 0. This table **contains, for each state and action, the corresponding state-action values.**

+The Q-table is initialized. That's why all values are = 0. This table **contains, for each state and action, the corresponding state-action values.**

+For this simple example, the state is only defined by the position of the mouse. Therefore, we have 2*3 rows in our Q-table, one row for each possible position of the mouse. In more complex scenarios, the state could contain more information than the position of the actor.

-The Q-table is initialized. That's why all values are = 0. This table **contains, for each state and action, the corresponding state-action values.**

+The Q-table is initialized. That's why all values are = 0. This table **contains, for each state and action, the corresponding state-action values.**

+For this simple example, the state is only defined by the position of the mouse. Therefore, we have 2*3 rows in our Q-table, one row for each possible position of the mouse. In more complex scenarios, the state could contain more information than the position of the actor.

@@ -113,7 +114,7 @@ This means that to update our \\(Q(S_t, A_t)\\):

- To update our Q-value at a given state-action pair, we use the TD target.

How do we form the TD target?

-1. We obtain the reward after taking the action \\(R_{t+1}\\).

+1. We obtain the reward \\(R_{t+1}\\) after taking the action \\(A_t\\).

2. To get the **best state-action pair value** for the next state, we use a greedy policy to select the next best action. Note that this is not an epsilon-greedy policy, this will always take the action with the highest state-action value.

Then when the update of this Q-value is done, we start in a new state and select our action **using a epsilon-greedy policy again.**

diff --git a/units/en/unit3/deep-q-algorithm.mdx b/units/en/unit3/deep-q-algorithm.mdx

index adbe44a6..28e7fd50 100644

--- a/units/en/unit3/deep-q-algorithm.mdx

+++ b/units/en/unit3/deep-q-algorithm.mdx

@@ -40,8 +40,8 @@ Experience replay helps by **using the experiences of the training more efficien

⇒ This allows the agent to **learn from the same experiences multiple times**.

-2. **Avoid forgetting previous experiences and reduce the correlation between experiences**.

-- The problem we get if we give sequential samples of experiences to our neural network is that it tends to forget **the previous experiences as it gets new experiences.** For instance, if the agent is in the first level and then in the second, which is different, it can forget how to behave and play in the first level.

+2. **Avoid forgetting previous experiences (aka catastrophic interference, or catastrophic forgetting) and reduce the correlation between experiences**.

+- **[catastrophic forgetting](https://en.wikipedia.org/wiki/Catastrophic_interference)**: The problem we get if we give sequential samples of experiences to our neural network is that it tends to forget **the previous experiences as it gets new experiences.** For instance, if the agent is in the first level and then in the second, which is different, it can forget how to behave and play in the first level.

The solution is to create a Replay Buffer that stores experience tuples while interacting with the environment and then sample a small batch of tuples. This prevents **the network from only learning about what it has done immediately before.**

diff --git a/units/en/unit3/deep-q-network.mdx b/units/en/unit3/deep-q-network.mdx

index dc8cb13a..50cd4f2b 100644

--- a/units/en/unit3/deep-q-network.mdx

+++ b/units/en/unit3/deep-q-network.mdx

@@ -32,7 +32,7 @@ That’s why, to capture temporal information, we stack four frames together.

Then the stacked frames are processed by three convolutional layers. These layers **allow us to capture and exploit spatial relationships in images**. But also, because the frames are stacked together, **we can exploit some temporal properties across those frames**.

-If you don't know what convolutional layers are, don't worry. You can check out [Lesson 4 of this free Deep Reinforcement Learning Course by Udacity](https://www.udacity.com/course/deep-learning-pytorch--ud188)

+If you don't know what convolutional layers are, don't worry. You can check out [Lesson 4 of this free Deep Learning Course by Udacity](https://www.udacity.com/course/deep-learning-pytorch--ud188)

Finally, we have a couple of fully connected layers that output a Q-value for each possible action at that state.

diff --git a/units/en/unit3/introduction.mdx b/units/en/unit3/introduction.mdx

index de755409..b892c751 100644

--- a/units/en/unit3/introduction.mdx

+++ b/units/en/unit3/introduction.mdx

@@ -6,7 +6,7 @@

In the last unit, we learned our first reinforcement learning algorithm: Q-Learning, **implemented it from scratch**, and trained it in two environments, FrozenLake-v1 ☃️ and Taxi-v3 🚕.

-We got excellent results with this simple algorithm, but these environments were relatively simple because the **state space was discrete and small** (14 different states for FrozenLake-v1 and 500 for Taxi-v3). For comparison, the state space in Atari games can **contain \\(10^{9}\\) to \\(10^{11}\\) states**.

+We got excellent results with this simple algorithm, but these environments were relatively simple because the **state space was discrete and small** (16 different states for FrozenLake-v1 and 500 for Taxi-v3). For comparison, the state space in Atari games can **contain \\(10^{9}\\) to \\(10^{11}\\) states**.

But as we'll see, producing and updating a **Q-table can become ineffective in large state space environments.**

diff --git a/units/en/unit4/glossary.mdx b/units/en/unit4/glossary.mdx

new file mode 100644

index 00000000..e2ea67f7

--- /dev/null

+++ b/units/en/unit4/glossary.mdx

@@ -0,0 +1,25 @@

+# Glossary

+

+This is a community-created glossary. Contributions are welcome!

+

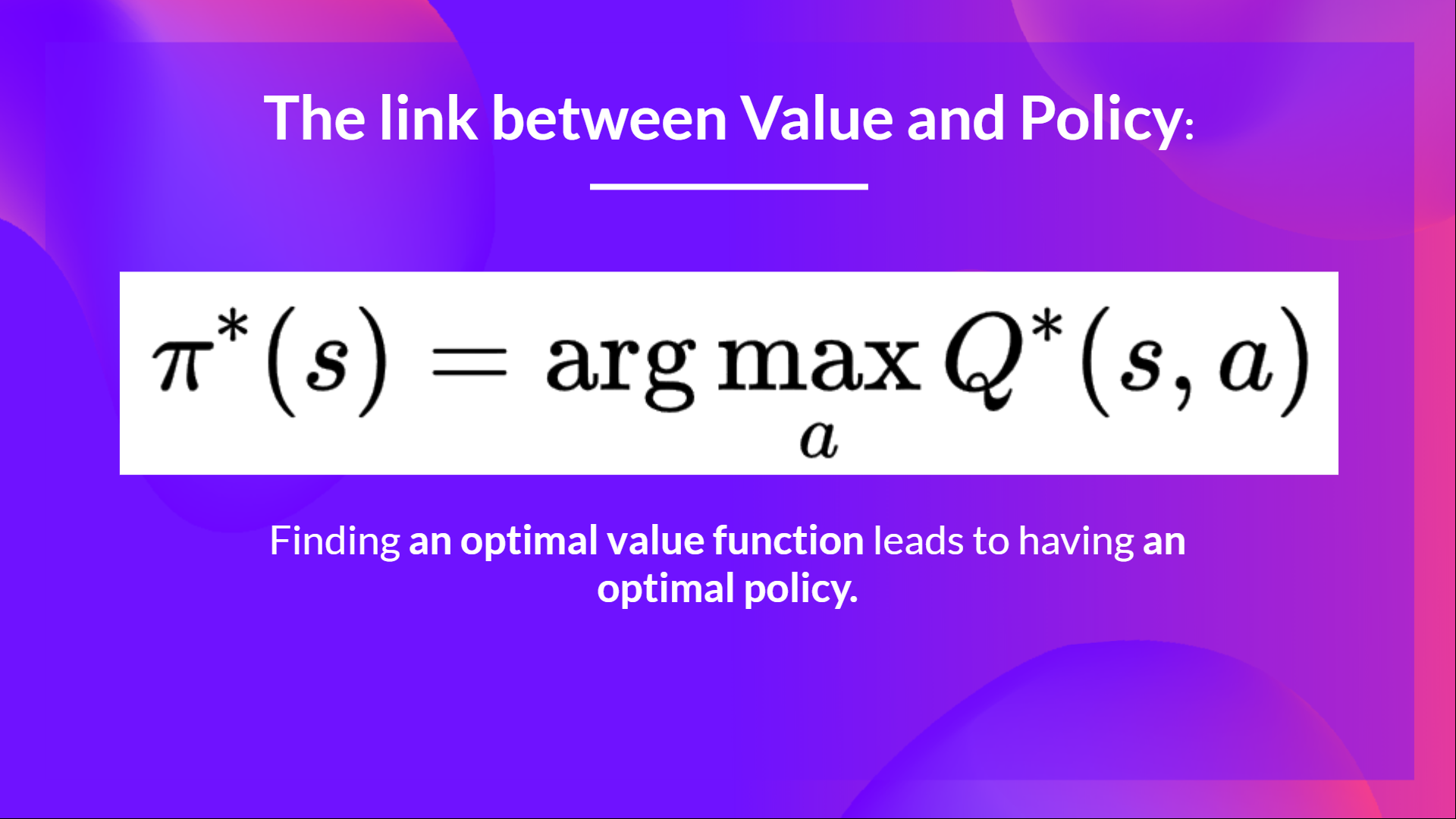

+- **Deep Q-Learning:** A value-based deep reinforcement learning algorithm that uses a deep neural network to approximate Q-values for actions in a given state. The goal of Deep Q-learning is to find the optimal policy that maximizes the expected cumulative reward by learning the action-values.

+

+- **Value-based methods:** Reinforcement Learning methods that estimate a value function as an intermediate step towards finding an optimal policy.

+

+- **Policy-based methods:** Reinforcement Learning methods that directly learn to approximate the optimal policy without learning a value function. In practice they output a probability distribution over actions.

+

+ The benefits of using policy-gradient methods over value-based methods include:

+ - simplicity of integration: no need to store action values;

+ - ability to learn a stochastic policy: the agent explores the state space without always taking the same trajectory, and avoids the problem of perceptual aliasing;

+ - effectiveness in high-dimensional and continuous action spaces; and

+ - improved convergence properties.

+

+- **Policy Gradient:** A subset of policy-based methods where the objective is to maximize the performance of a parameterized policy using gradient ascent. The goal of a policy-gradient is to control the probability distribution of actions by tuning the policy such that good actions (that maximize the return) are sampled more frequently in the future.

+

+- **Monte Carlo Reinforce:** A policy-gradient algorithm that uses an estimated return from an entire episode to update the policy parameter.

+

+If you want to improve the course, you can [open a Pull Request.](https://github.com/huggingface/deep-rl-class/pulls)

+

+This glossary was made possible thanks to:

+

+- [Diego Carpintero](https://github.com/dcarpintero)

\ No newline at end of file

diff --git a/units/en/unit5/hands-on.mdx b/units/en/unit5/hands-on.mdx

index adc02c6c..95fe5aa5 100644

--- a/units/en/unit5/hands-on.mdx

+++ b/units/en/unit5/hands-on.mdx

@@ -11,11 +11,11 @@ We learned what ML-Agents is and how it works. We also studied the two environme

@@ -113,7 +114,7 @@ This means that to update our \\(Q(S_t, A_t)\\):

- To update our Q-value at a given state-action pair, we use the TD target.

How do we form the TD target?

-1. We obtain the reward after taking the action \\(R_{t+1}\\).

+1. We obtain the reward \\(R_{t+1}\\) after taking the action \\(A_t\\).

2. To get the **best state-action pair value** for the next state, we use a greedy policy to select the next best action. Note that this is not an epsilon-greedy policy, this will always take the action with the highest state-action value.

Then when the update of this Q-value is done, we start in a new state and select our action **using a epsilon-greedy policy again.**

diff --git a/units/en/unit3/deep-q-algorithm.mdx b/units/en/unit3/deep-q-algorithm.mdx

index adbe44a6..28e7fd50 100644

--- a/units/en/unit3/deep-q-algorithm.mdx

+++ b/units/en/unit3/deep-q-algorithm.mdx

@@ -40,8 +40,8 @@ Experience replay helps by **using the experiences of the training more efficien

⇒ This allows the agent to **learn from the same experiences multiple times**.

-2. **Avoid forgetting previous experiences and reduce the correlation between experiences**.

-- The problem we get if we give sequential samples of experiences to our neural network is that it tends to forget **the previous experiences as it gets new experiences.** For instance, if the agent is in the first level and then in the second, which is different, it can forget how to behave and play in the first level.

+2. **Avoid forgetting previous experiences (aka catastrophic interference, or catastrophic forgetting) and reduce the correlation between experiences**.

+- **[catastrophic forgetting](https://en.wikipedia.org/wiki/Catastrophic_interference)**: The problem we get if we give sequential samples of experiences to our neural network is that it tends to forget **the previous experiences as it gets new experiences.** For instance, if the agent is in the first level and then in the second, which is different, it can forget how to behave and play in the first level.

The solution is to create a Replay Buffer that stores experience tuples while interacting with the environment and then sample a small batch of tuples. This prevents **the network from only learning about what it has done immediately before.**

diff --git a/units/en/unit3/deep-q-network.mdx b/units/en/unit3/deep-q-network.mdx

index dc8cb13a..50cd4f2b 100644

--- a/units/en/unit3/deep-q-network.mdx

+++ b/units/en/unit3/deep-q-network.mdx

@@ -32,7 +32,7 @@ That’s why, to capture temporal information, we stack four frames together.

Then the stacked frames are processed by three convolutional layers. These layers **allow us to capture and exploit spatial relationships in images**. But also, because the frames are stacked together, **we can exploit some temporal properties across those frames**.

-If you don't know what convolutional layers are, don't worry. You can check out [Lesson 4 of this free Deep Reinforcement Learning Course by Udacity](https://www.udacity.com/course/deep-learning-pytorch--ud188)

+If you don't know what convolutional layers are, don't worry. You can check out [Lesson 4 of this free Deep Learning Course by Udacity](https://www.udacity.com/course/deep-learning-pytorch--ud188)

Finally, we have a couple of fully connected layers that output a Q-value for each possible action at that state.

diff --git a/units/en/unit3/introduction.mdx b/units/en/unit3/introduction.mdx

index de755409..b892c751 100644

--- a/units/en/unit3/introduction.mdx

+++ b/units/en/unit3/introduction.mdx

@@ -6,7 +6,7 @@

In the last unit, we learned our first reinforcement learning algorithm: Q-Learning, **implemented it from scratch**, and trained it in two environments, FrozenLake-v1 ☃️ and Taxi-v3 🚕.

-We got excellent results with this simple algorithm, but these environments were relatively simple because the **state space was discrete and small** (14 different states for FrozenLake-v1 and 500 for Taxi-v3). For comparison, the state space in Atari games can **contain \\(10^{9}\\) to \\(10^{11}\\) states**.

+We got excellent results with this simple algorithm, but these environments were relatively simple because the **state space was discrete and small** (16 different states for FrozenLake-v1 and 500 for Taxi-v3). For comparison, the state space in Atari games can **contain \\(10^{9}\\) to \\(10^{11}\\) states**.

But as we'll see, producing and updating a **Q-table can become ineffective in large state space environments.**

diff --git a/units/en/unit4/glossary.mdx b/units/en/unit4/glossary.mdx

new file mode 100644

index 00000000..e2ea67f7

--- /dev/null

+++ b/units/en/unit4/glossary.mdx

@@ -0,0 +1,25 @@

+# Glossary

+

+This is a community-created glossary. Contributions are welcome!

+

+- **Deep Q-Learning:** A value-based deep reinforcement learning algorithm that uses a deep neural network to approximate Q-values for actions in a given state. The goal of Deep Q-learning is to find the optimal policy that maximizes the expected cumulative reward by learning the action-values.

+

+- **Value-based methods:** Reinforcement Learning methods that estimate a value function as an intermediate step towards finding an optimal policy.

+

+- **Policy-based methods:** Reinforcement Learning methods that directly learn to approximate the optimal policy without learning a value function. In practice they output a probability distribution over actions.

+

+ The benefits of using policy-gradient methods over value-based methods include:

+ - simplicity of integration: no need to store action values;

+ - ability to learn a stochastic policy: the agent explores the state space without always taking the same trajectory, and avoids the problem of perceptual aliasing;

+ - effectiveness in high-dimensional and continuous action spaces; and

+ - improved convergence properties.

+

+- **Policy Gradient:** A subset of policy-based methods where the objective is to maximize the performance of a parameterized policy using gradient ascent. The goal of a policy-gradient is to control the probability distribution of actions by tuning the policy such that good actions (that maximize the return) are sampled more frequently in the future.

+

+- **Monte Carlo Reinforce:** A policy-gradient algorithm that uses an estimated return from an entire episode to update the policy parameter.

+

+If you want to improve the course, you can [open a Pull Request.](https://github.com/huggingface/deep-rl-class/pulls)

+

+This glossary was made possible thanks to:

+

+- [Diego Carpintero](https://github.com/dcarpintero)

\ No newline at end of file

diff --git a/units/en/unit5/hands-on.mdx b/units/en/unit5/hands-on.mdx

index adc02c6c..95fe5aa5 100644

--- a/units/en/unit5/hands-on.mdx

+++ b/units/en/unit5/hands-on.mdx

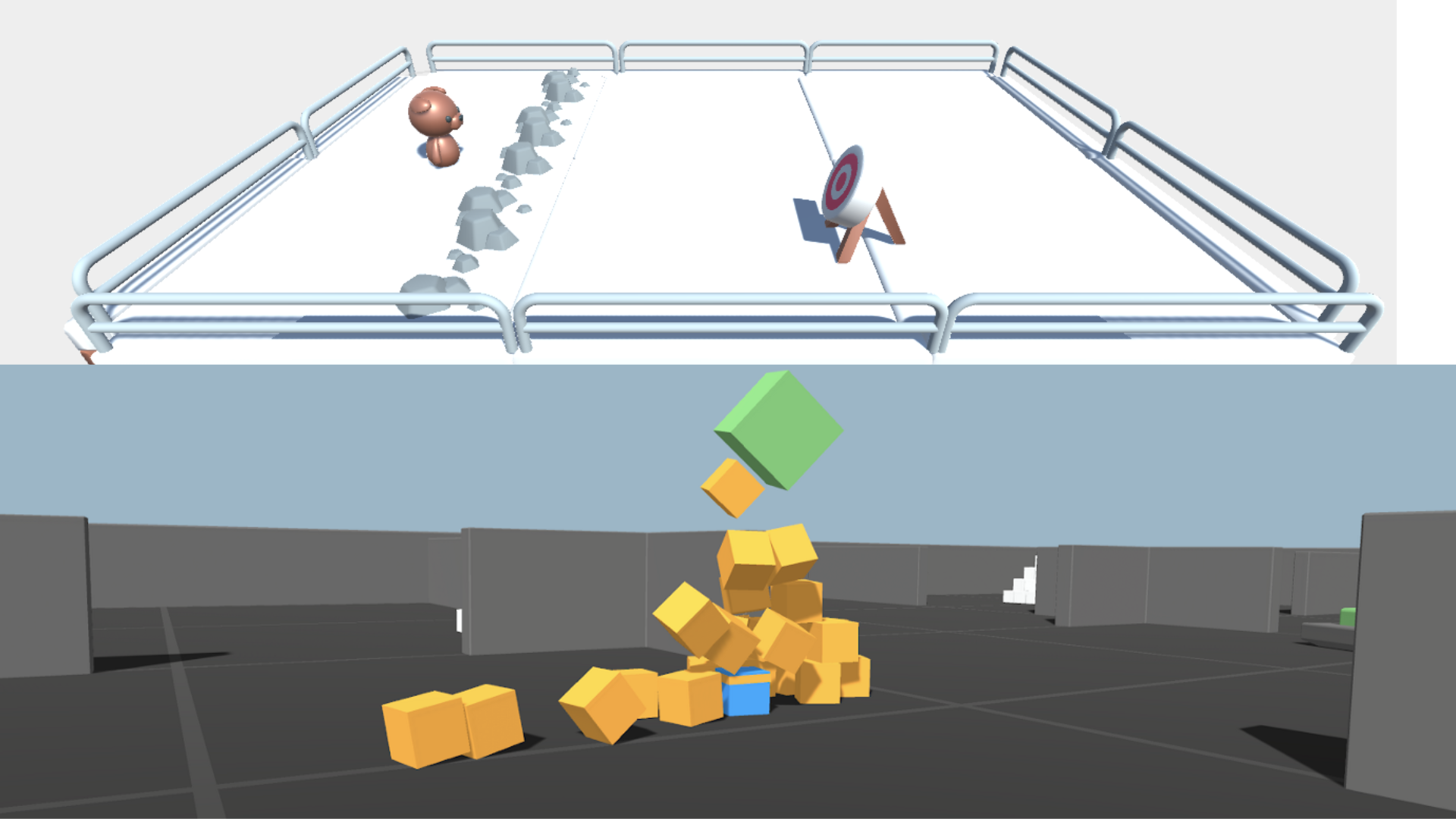

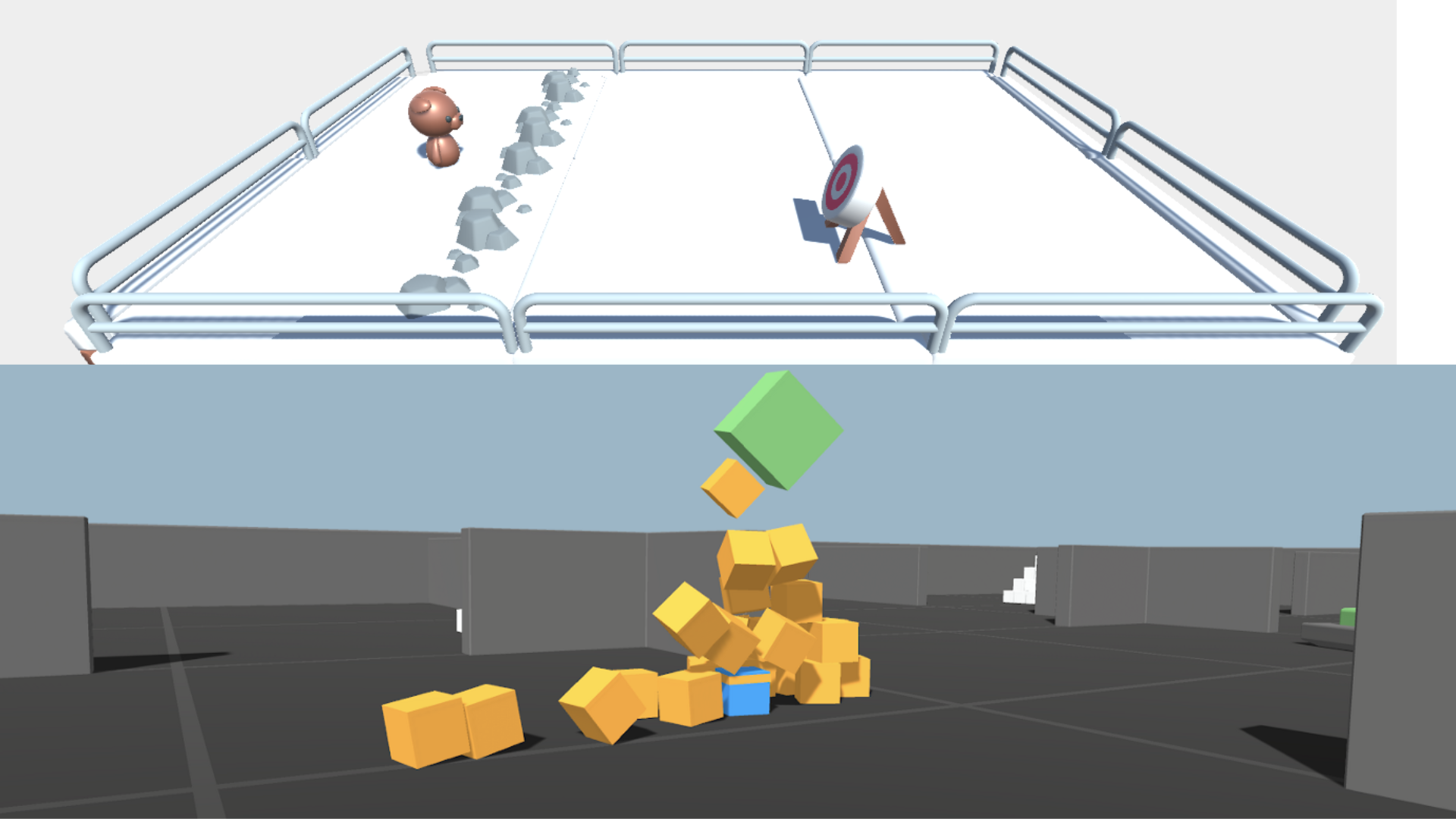

@@ -11,11 +11,11 @@ We learned what ML-Agents is and how it works. We also studied the two environme

-The ML-Agents integration on the Hub **is still experimental**. Some features will be added in the future. But, for now, to validate this hands-on for the certification process, you just need to push your trained models to the Hub.

-There are no minimum results to attain in order to validate this Hands On. But if you want to get nice results, you can try to reach the following:

+To validate this hands-on for the certification process, you **just need to push your trained models to the Hub.**

+There are **no minimum results to attain** in order to validate this Hands On. But if you want to get nice results, you can try to reach the following:

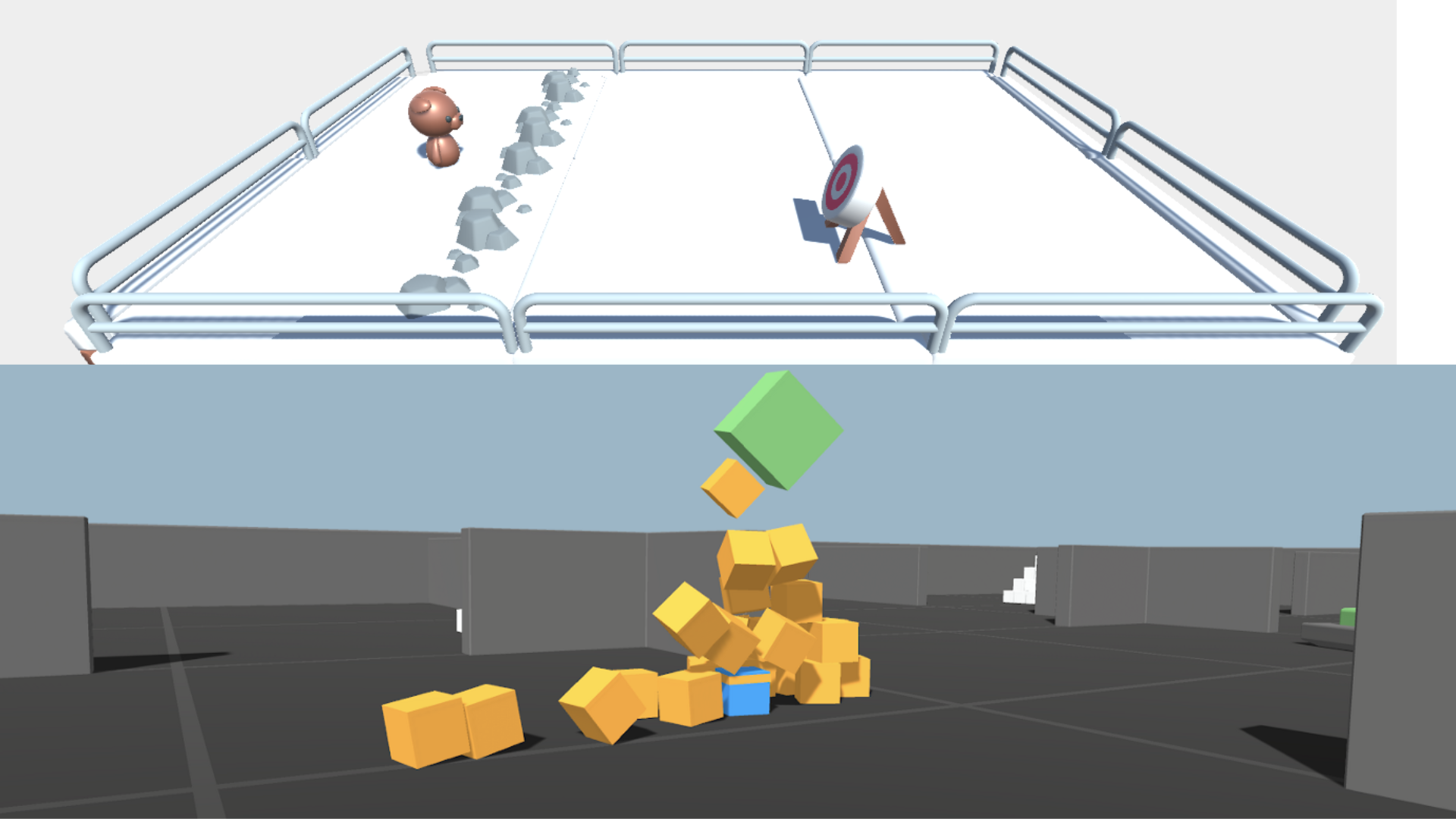

-- For [Pyramids](https://singularite.itch.io/pyramids): Mean Reward = 1.75

-- For [SnowballTarget](https://singularite.itch.io/snowballtarget): Mean Reward = 15 or 30 targets shoot in an episode.

+- For [Pyramids](https://huggingface.co/spaces/unity/ML-Agents-Pyramids): Mean Reward = 1.75

+- For [SnowballTarget](https://huggingface.co/spaces/ThomasSimonini/ML-Agents-SnowballTarget): Mean Reward = 15 or 30 targets shoot in an episode.

For more information about the certification process, check this section 👉 https://huggingface.co/deep-rl-course/en/unit0/introduction#certification-process

@@ -53,9 +53,7 @@ For more information about the certification process, check this section 👉 ht

### 📚 RL-Library:

-- [ML-Agents (HuggingFace Experimental Version)](https://github.com/huggingface/ml-agents)

-

-⚠ We're going to use an experimental version of ML-Agents where you can push to Hub and load from Hub Unity ML-Agents Models **you need to install the same version**

+- [ML-Agents](https://github.com/Unity-Technologies/ml-agents)

We're constantly trying to improve our tutorials, so **if you find some issues in this notebook**, please [open an issue on the GitHub Repo](https://github.com/huggingface/deep-rl-class/issues).

@@ -86,18 +84,16 @@ Before diving into the notebook, you need to:

## Clone the repository and install the dependencies 🔽

- We need to clone the repository that **contains the experimental version of the library that allows you to push your trained agent to the Hub.**

-```python

-%%capture

+```bash

# Clone the repository

-!git clone --depth 1 --branch hf-integration-save https://github.com/huggingface/ml-agents

+git clone --depth 1 https://github.com/Unity-Technologies/ml-agents

```

-```python

-%%capture

+```bash

# Go inside the repository and install the package

-%cd ml-agents

-!pip3 install -e ./ml-agents-envs

-!pip3 install -e ./ml-agents

+cd ml-agents

+pip install -e ./ml-agents-envs

+pip install -e ./ml-agents

```

## SnowballTarget ⛄

@@ -106,35 +102,35 @@ If you need a refresher on how this environment works check this section 👉

https://huggingface.co/deep-rl-course/unit5/snowball-target

### Download and move the environment zip file in `./training-envs-executables/linux/`

+

- Our environment executable is in a zip file.

- We need to download it and place it to `./training-envs-executables/linux/`

- We use a linux executable because we use colab, and colab machines OS is Ubuntu (linux)

-```python

+```bash

# Here, we create training-envs-executables and linux

-!mkdir ./training-envs-executables

-!mkdir ./training-envs-executables/linux

+mkdir ./training-envs-executables

+mkdir ./training-envs-executables/linux

```

Download the file SnowballTarget.zip from https://drive.google.com/file/d/1YHHLjyj6gaZ3Gemx1hQgqrPgSS2ZhmB5 using `wget`.

Check out the full solution to download large files from GDrive [here](https://bcrf.biochem.wisc.edu/2021/02/05/download-google-drive-files-using-wget/)

-```python

-!wget --load-cookies /tmp/cookies.txt "https://docs.google.com/uc?export=download&confirm=$(wget --quiet --save-cookies /tmp/cookies.txt --keep-session-cookies --no-check-certificate 'https://docs.google.com/uc?export=download&id=1YHHLjyj6gaZ3Gemx1hQgqrPgSS2ZhmB5' -O- | sed -rn 's/.*confirm=([0-9A-Za-z_]+).*/\1\n/p')&id=1YHHLjyj6gaZ3Gemx1hQgqrPgSS2ZhmB5" -O ./training-envs-executables/linux/SnowballTarget.zip && rm -rf /tmp/cookies.txt

+```bash

+wget --load-cookies /tmp/cookies.txt "https://docs.google.com/uc?export=download&confirm=$(wget --quiet --save-cookies /tmp/cookies.txt --keep-session-cookies --no-check-certificate 'https://docs.google.com/uc?export=download&id=1YHHLjyj6gaZ3Gemx1hQgqrPgSS2ZhmB5' -O- | sed -rn 's/.*confirm=([0-9A-Za-z_]+).*/\1\n/p')&id=1YHHLjyj6gaZ3Gemx1hQgqrPgSS2ZhmB5" -O ./training-envs-executables/linux/SnowballTarget.zip && rm -rf /tmp/cookies.txt

```

We unzip the executable.zip file

-```python

-%%capture

-!unzip -d ./training-envs-executables/linux/ ./training-envs-executables/linux/SnowballTarget.zip

+```bash

+unzip -d ./training-envs-executables/linux/ ./training-envs-executables/linux/SnowballTarget.zip

```

Make sure your file is accessible

-```python

-!chmod -R 755 ./training-envs-executables/linux/SnowballTarget

+```bash

+chmod -R 755 ./training-envs-executables/linux/SnowballTarget

```

### Define the SnowballTarget config file

@@ -204,7 +200,7 @@ Train the model and use the `--resume` flag to continue training in case of inte

The training will take 10 to 35min depending on your config. Go take a ☕️ you deserve it 🤗.

```bash

-!mlagents-learn ./config/ppo/SnowballTarget.yaml --env=./training-envs-executables/linux/SnowballTarget/SnowballTarget --run-id="SnowballTarget1" --no-graphics

+mlagents-learn ./config/ppo/SnowballTarget.yaml --env=./training-envs-executables/linux/SnowballTarget/SnowballTarget --run-id="SnowballTarget1" --no-graphics

```

### Push the agent to the Hugging Face Hub

@@ -245,10 +241,10 @@ If the repo does not exist **it will be created automatically**

For instance:

-`!mlagents-push-to-hf --run-id="SnowballTarget1" --local-dir="./results/SnowballTarget1" --repo-id="ThomasSimonini/ppo-SnowballTarget" --commit-message="First Push"`

+`mlagents-push-to-hf --run-id="SnowballTarget1" --local-dir="./results/SnowballTarget1" --repo-id="ThomasSimonini/ppo-SnowballTarget" --commit-message="First Push"`

```python

-!mlagents-push-to-hf --run-id= # Add your run id --local-dir= # Your local dir --repo-id= # Your repo id --commit-message= # Your commit message

+mlagents-push-to-hf --run-id= # Add your run id --local-dir= # Your local dir --repo-id= # Your repo id --commit-message= # Your commit message

```

If everything worked you should see this at the end of the process (but with a different url 😆) :

@@ -269,7 +265,7 @@ This step it's simple:

1. Remember your repo-id

-2. Go here: https://singularite.itch.io/snowballtarget

+2. Go here: https://huggingface.co/spaces/ThomasSimonini/ML-Agents-SnowballTarget

3. Launch the game and put it in full screen by clicking on the bottom right button

@@ -309,11 +305,12 @@ Unzip it

Make sure your file is accessible

-```python

-!chmod -R 755 ./training-envs-executables/linux/Pyramids/Pyramids

+```bash

+chmod -R 755 ./training-envs-executables/linux/Pyramids/Pyramids

```

### Modify the PyramidsRND config file

+

- Contrary to the first environment, which was a custom one, **Pyramids was made by the Unity team**.

- So the PyramidsRND config file already exists and is in ./content/ml-agents/config/ppo/PyramidsRND.yaml

- You might ask why "RND" is in PyramidsRND. RND stands for *random network distillation* it's a way to generate curiosity rewards. If you want to know more about that, we wrote an article explaining this technique: https://medium.com/data-from-the-trenches/curiosity-driven-learning-through-random-network-distillation-488ffd8e5938

@@ -333,37 +330,36 @@ We’re now ready to train our agent 🔥.

The training will take 30 to 45min depending on your machine, go take a ☕️ you deserve it 🤗.

```python

-!mlagents-learn ./config/ppo/PyramidsRND.yaml --env=./training-envs-executables/linux/Pyramids/Pyramids --run-id="Pyramids Training" --no-graphics

+mlagents-learn ./config/ppo/PyramidsRND.yaml --env=./training-envs-executables/linux/Pyramids/Pyramids --run-id="Pyramids Training" --no-graphics

```

### Push the agent to the Hugging Face Hub

- Now that we trained our agent, we’re **ready to push it to the Hub to be able to visualize it playing on your browser🔥.**

-```bash

-!mlagents-push-to-hf --run-id= # Add your run id --local-dir= # Your local dir --repo-id= # Your repo id --commit-message= # Your commit message

+```python

+mlagents-push-to-hf --run-id= # Add your run id --local-dir= # Your local dir --repo-id= # Your repo id --commit-message= # Your commit message

```

### Watch your agent playing 👀

-The temporary link for the Pyramids demo is: https://singularite.itch.io/pyramids

-

+👉 https://huggingface.co/spaces/unity/ML-Agents-Pyramids

+

### 🎁 Bonus: Why not train on another environment?

+

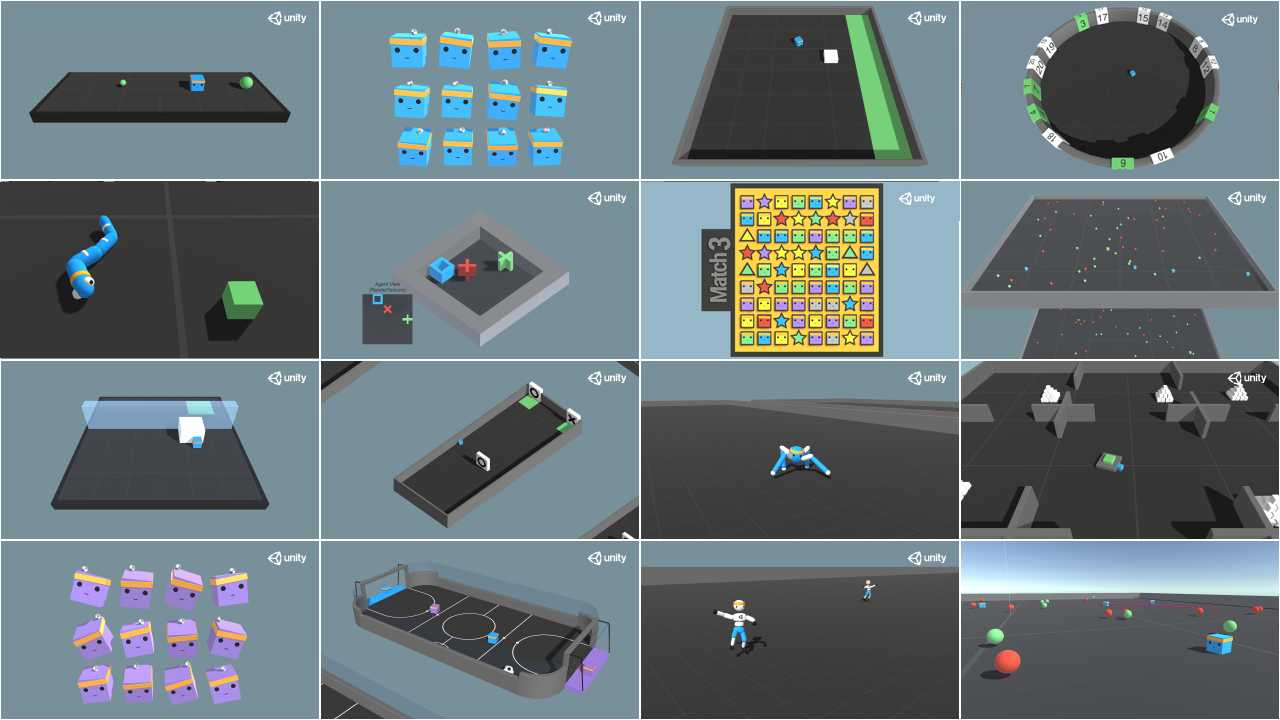

Now that you know how to train an agent using MLAgents, **why not try another environment?**

-MLAgents provides 18 different environments and we’re building some custom ones. The best way to learn is to try things on your own, have fun.

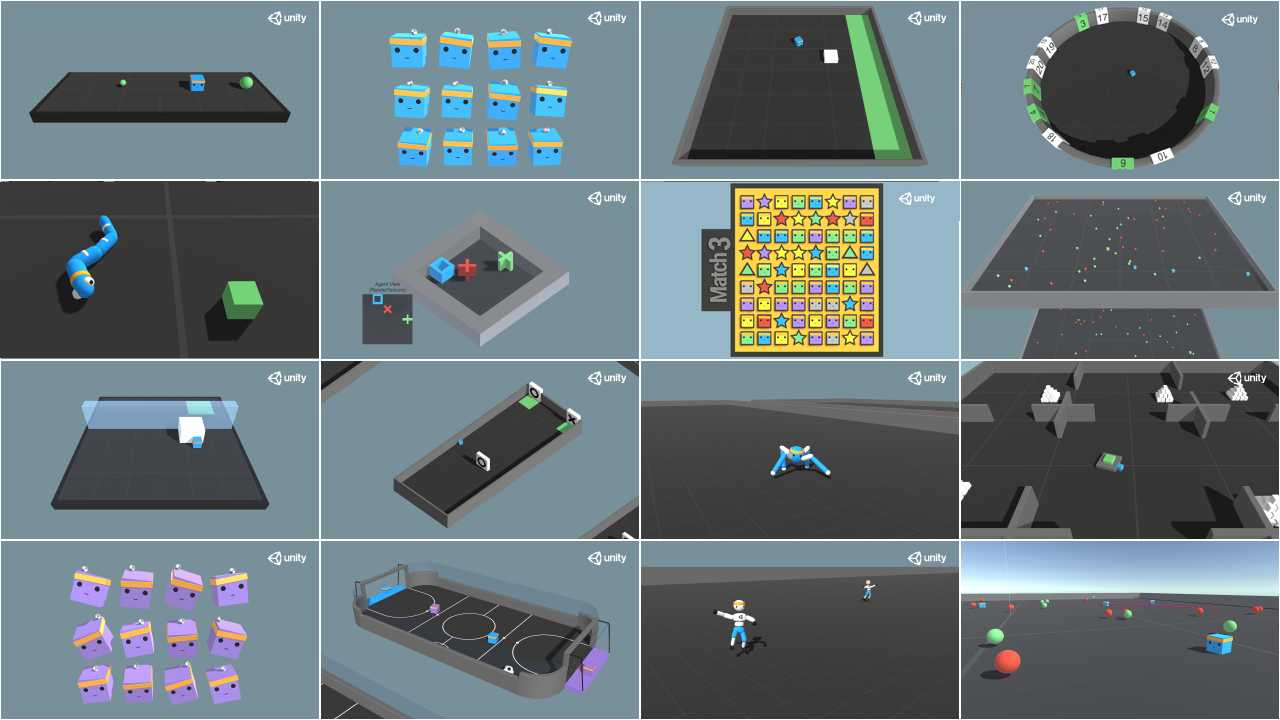

+MLAgents provides 17 different environments and we’re building some custom ones. The best way to learn is to try things on your own, have fun.

You have the full list of the one currently available environments on Hugging Face here 👉 https://github.com/huggingface/ml-agents#the-environments

-For the demos to visualize your agent, the temporary link is: https://singularite.itch.io (temporary because we'll also put the demos on Hugging Face Spaces)

-

-For now we have integrated:

-- [Worm](https://singularite.itch.io/worm) demo where you teach a **worm to crawl**.

-- [Walker](https://singularite.itch.io/walker) demo where you teach an agent **to walk towards a goal**.

+For the demos to visualize your agent 👉 https://huggingface.co/unity

-If you want new demos to be added, please open an issue: https://github.com/huggingface/deep-rl-class 🤗

+For now we have integrated:

+- [Worm](https://huggingface.co/spaces/unity/ML-Agents-Worm) demo where you teach a **worm to crawl**.

+- [Walker](https://huggingface.co/spaces/unity/ML-Agents-Walker) demo where you teach an agent **to walk towards a goal**.

That’s all for today. Congrats on finishing this tutorial!

diff --git a/units/en/unit5/how-mlagents-works.mdx b/units/en/unit5/how-mlagents-works.mdx

index 12acede7..f92054f2 100644

--- a/units/en/unit5/how-mlagents-works.mdx

+++ b/units/en/unit5/how-mlagents-works.mdx

@@ -31,7 +31,7 @@ With Unity ML-Agents, you have six essential components:

## Inside the Learning Component [[inside-learning-component]]

-Inside the Learning Component, we have **three important elements**:

+Inside the Learning Component, we have **two important elements**:

- The first is the *agent component*, the actor of the scene. We’ll **train the agent by optimizing its policy** (which will tell us what action to take in each state). The policy is called the *Brain*.

- Finally, there is the *Academy*. This component **orchestrates agents and their decision-making processes**. Think of this Academy as a teacher who handles Python API requests.

diff --git a/units/en/unit5/quiz.mdx b/units/en/unit5/quiz.mdx

new file mode 100644

index 00000000..7b9ec0c8

--- /dev/null

+++ b/units/en/unit5/quiz.mdx

@@ -0,0 +1,130 @@

+# Quiz

+

+The best way to learn and [to avoid the illusion of competence](https://www.coursera.org/lecture/learning-how-to-learn/illusions-of-competence-BuFzf) **is to test yourself.** This will help you to find **where you need to reinforce your knowledge**.

+

+### Q1: Which of the following tools are specifically designed for video games development?

+

+

+

+### Q2: What of the following statements are true about Unity ML-Agents?

+

+

+

+### Q3: Fill the missing letters

+

+- In Unity ML-Agents, the Policy of an Agent is called a b _ _ _ n

+- The component in charge of orchestrating the agents is called the _ c _ _ _ m _

+

+

-The ML-Agents integration on the Hub **is still experimental**. Some features will be added in the future. But, for now, to validate this hands-on for the certification process, you just need to push your trained models to the Hub.

-There are no minimum results to attain in order to validate this Hands On. But if you want to get nice results, you can try to reach the following:

+To validate this hands-on for the certification process, you **just need to push your trained models to the Hub.**

+There are **no minimum results to attain** in order to validate this Hands On. But if you want to get nice results, you can try to reach the following:

-- For [Pyramids](https://singularite.itch.io/pyramids): Mean Reward = 1.75

-- For [SnowballTarget](https://singularite.itch.io/snowballtarget): Mean Reward = 15 or 30 targets shoot in an episode.

+- For [Pyramids](https://huggingface.co/spaces/unity/ML-Agents-Pyramids): Mean Reward = 1.75

+- For [SnowballTarget](https://huggingface.co/spaces/ThomasSimonini/ML-Agents-SnowballTarget): Mean Reward = 15 or 30 targets shoot in an episode.

For more information about the certification process, check this section 👉 https://huggingface.co/deep-rl-course/en/unit0/introduction#certification-process

@@ -53,9 +53,7 @@ For more information about the certification process, check this section 👉 ht

### 📚 RL-Library:

-- [ML-Agents (HuggingFace Experimental Version)](https://github.com/huggingface/ml-agents)

-

-⚠ We're going to use an experimental version of ML-Agents where you can push to Hub and load from Hub Unity ML-Agents Models **you need to install the same version**

+- [ML-Agents](https://github.com/Unity-Technologies/ml-agents)

We're constantly trying to improve our tutorials, so **if you find some issues in this notebook**, please [open an issue on the GitHub Repo](https://github.com/huggingface/deep-rl-class/issues).

@@ -86,18 +84,16 @@ Before diving into the notebook, you need to:

## Clone the repository and install the dependencies 🔽

- We need to clone the repository that **contains the experimental version of the library that allows you to push your trained agent to the Hub.**

-```python

-%%capture

+```bash

# Clone the repository

-!git clone --depth 1 --branch hf-integration-save https://github.com/huggingface/ml-agents

+git clone --depth 1 https://github.com/Unity-Technologies/ml-agents

```

-```python

-%%capture

+```bash

# Go inside the repository and install the package

-%cd ml-agents

-!pip3 install -e ./ml-agents-envs

-!pip3 install -e ./ml-agents

+cd ml-agents

+pip install -e ./ml-agents-envs

+pip install -e ./ml-agents

```

## SnowballTarget ⛄

@@ -106,35 +102,35 @@ If you need a refresher on how this environment works check this section 👉

https://huggingface.co/deep-rl-course/unit5/snowball-target

### Download and move the environment zip file in `./training-envs-executables/linux/`

+

- Our environment executable is in a zip file.

- We need to download it and place it to `./training-envs-executables/linux/`

- We use a linux executable because we use colab, and colab machines OS is Ubuntu (linux)

-```python

+```bash

# Here, we create training-envs-executables and linux

-!mkdir ./training-envs-executables

-!mkdir ./training-envs-executables/linux

+mkdir ./training-envs-executables

+mkdir ./training-envs-executables/linux

```

Download the file SnowballTarget.zip from https://drive.google.com/file/d/1YHHLjyj6gaZ3Gemx1hQgqrPgSS2ZhmB5 using `wget`.

Check out the full solution to download large files from GDrive [here](https://bcrf.biochem.wisc.edu/2021/02/05/download-google-drive-files-using-wget/)

-```python

-!wget --load-cookies /tmp/cookies.txt "https://docs.google.com/uc?export=download&confirm=$(wget --quiet --save-cookies /tmp/cookies.txt --keep-session-cookies --no-check-certificate 'https://docs.google.com/uc?export=download&id=1YHHLjyj6gaZ3Gemx1hQgqrPgSS2ZhmB5' -O- | sed -rn 's/.*confirm=([0-9A-Za-z_]+).*/\1\n/p')&id=1YHHLjyj6gaZ3Gemx1hQgqrPgSS2ZhmB5" -O ./training-envs-executables/linux/SnowballTarget.zip && rm -rf /tmp/cookies.txt

+```bash

+wget --load-cookies /tmp/cookies.txt "https://docs.google.com/uc?export=download&confirm=$(wget --quiet --save-cookies /tmp/cookies.txt --keep-session-cookies --no-check-certificate 'https://docs.google.com/uc?export=download&id=1YHHLjyj6gaZ3Gemx1hQgqrPgSS2ZhmB5' -O- | sed -rn 's/.*confirm=([0-9A-Za-z_]+).*/\1\n/p')&id=1YHHLjyj6gaZ3Gemx1hQgqrPgSS2ZhmB5" -O ./training-envs-executables/linux/SnowballTarget.zip && rm -rf /tmp/cookies.txt

```

We unzip the executable.zip file

-```python

-%%capture

-!unzip -d ./training-envs-executables/linux/ ./training-envs-executables/linux/SnowballTarget.zip

+```bash

+unzip -d ./training-envs-executables/linux/ ./training-envs-executables/linux/SnowballTarget.zip

```

Make sure your file is accessible

-```python

-!chmod -R 755 ./training-envs-executables/linux/SnowballTarget

+```bash

+chmod -R 755 ./training-envs-executables/linux/SnowballTarget

```

### Define the SnowballTarget config file

@@ -204,7 +200,7 @@ Train the model and use the `--resume` flag to continue training in case of inte

The training will take 10 to 35min depending on your config. Go take a ☕️ you deserve it 🤗.

```bash

-!mlagents-learn ./config/ppo/SnowballTarget.yaml --env=./training-envs-executables/linux/SnowballTarget/SnowballTarget --run-id="SnowballTarget1" --no-graphics

+mlagents-learn ./config/ppo/SnowballTarget.yaml --env=./training-envs-executables/linux/SnowballTarget/SnowballTarget --run-id="SnowballTarget1" --no-graphics

```

### Push the agent to the Hugging Face Hub

@@ -245,10 +241,10 @@ If the repo does not exist **it will be created automatically**

For instance:

-`!mlagents-push-to-hf --run-id="SnowballTarget1" --local-dir="./results/SnowballTarget1" --repo-id="ThomasSimonini/ppo-SnowballTarget" --commit-message="First Push"`

+`mlagents-push-to-hf --run-id="SnowballTarget1" --local-dir="./results/SnowballTarget1" --repo-id="ThomasSimonini/ppo-SnowballTarget" --commit-message="First Push"`

```python

-!mlagents-push-to-hf --run-id= # Add your run id --local-dir= # Your local dir --repo-id= # Your repo id --commit-message= # Your commit message

+mlagents-push-to-hf --run-id= # Add your run id --local-dir= # Your local dir --repo-id= # Your repo id --commit-message= # Your commit message

```

If everything worked you should see this at the end of the process (but with a different url 😆) :

@@ -269,7 +265,7 @@ This step it's simple:

1. Remember your repo-id

-2. Go here: https://singularite.itch.io/snowballtarget

+2. Go here: https://huggingface.co/spaces/ThomasSimonini/ML-Agents-SnowballTarget

3. Launch the game and put it in full screen by clicking on the bottom right button

@@ -309,11 +305,12 @@ Unzip it

Make sure your file is accessible

-```python

-!chmod -R 755 ./training-envs-executables/linux/Pyramids/Pyramids

+```bash

+chmod -R 755 ./training-envs-executables/linux/Pyramids/Pyramids

```

### Modify the PyramidsRND config file

+

- Contrary to the first environment, which was a custom one, **Pyramids was made by the Unity team**.

- So the PyramidsRND config file already exists and is in ./content/ml-agents/config/ppo/PyramidsRND.yaml

- You might ask why "RND" is in PyramidsRND. RND stands for *random network distillation* it's a way to generate curiosity rewards. If you want to know more about that, we wrote an article explaining this technique: https://medium.com/data-from-the-trenches/curiosity-driven-learning-through-random-network-distillation-488ffd8e5938

@@ -333,37 +330,36 @@ We’re now ready to train our agent 🔥.

The training will take 30 to 45min depending on your machine, go take a ☕️ you deserve it 🤗.

```python

-!mlagents-learn ./config/ppo/PyramidsRND.yaml --env=./training-envs-executables/linux/Pyramids/Pyramids --run-id="Pyramids Training" --no-graphics

+mlagents-learn ./config/ppo/PyramidsRND.yaml --env=./training-envs-executables/linux/Pyramids/Pyramids --run-id="Pyramids Training" --no-graphics

```

### Push the agent to the Hugging Face Hub

- Now that we trained our agent, we’re **ready to push it to the Hub to be able to visualize it playing on your browser🔥.**

-```bash

-!mlagents-push-to-hf --run-id= # Add your run id --local-dir= # Your local dir --repo-id= # Your repo id --commit-message= # Your commit message

+```python

+mlagents-push-to-hf --run-id= # Add your run id --local-dir= # Your local dir --repo-id= # Your repo id --commit-message= # Your commit message

```

### Watch your agent playing 👀

-The temporary link for the Pyramids demo is: https://singularite.itch.io/pyramids

-

+👉 https://huggingface.co/spaces/unity/ML-Agents-Pyramids

+

### 🎁 Bonus: Why not train on another environment?

+

Now that you know how to train an agent using MLAgents, **why not try another environment?**

-MLAgents provides 18 different environments and we’re building some custom ones. The best way to learn is to try things on your own, have fun.

+MLAgents provides 17 different environments and we’re building some custom ones. The best way to learn is to try things on your own, have fun.

You have the full list of the one currently available environments on Hugging Face here 👉 https://github.com/huggingface/ml-agents#the-environments

-For the demos to visualize your agent, the temporary link is: https://singularite.itch.io (temporary because we'll also put the demos on Hugging Face Spaces)

-

-For now we have integrated:

-- [Worm](https://singularite.itch.io/worm) demo where you teach a **worm to crawl**.

-- [Walker](https://singularite.itch.io/walker) demo where you teach an agent **to walk towards a goal**.

+For the demos to visualize your agent 👉 https://huggingface.co/unity

-If you want new demos to be added, please open an issue: https://github.com/huggingface/deep-rl-class 🤗

+For now we have integrated:

+- [Worm](https://huggingface.co/spaces/unity/ML-Agents-Worm) demo where you teach a **worm to crawl**.

+- [Walker](https://huggingface.co/spaces/unity/ML-Agents-Walker) demo where you teach an agent **to walk towards a goal**.

That’s all for today. Congrats on finishing this tutorial!

diff --git a/units/en/unit5/how-mlagents-works.mdx b/units/en/unit5/how-mlagents-works.mdx

index 12acede7..f92054f2 100644

--- a/units/en/unit5/how-mlagents-works.mdx

+++ b/units/en/unit5/how-mlagents-works.mdx

@@ -31,7 +31,7 @@ With Unity ML-Agents, you have six essential components:

## Inside the Learning Component [[inside-learning-component]]

-Inside the Learning Component, we have **three important elements**:

+Inside the Learning Component, we have **two important elements**:

- The first is the *agent component*, the actor of the scene. We’ll **train the agent by optimizing its policy** (which will tell us what action to take in each state). The policy is called the *Brain*.

- Finally, there is the *Academy*. This component **orchestrates agents and their decision-making processes**. Think of this Academy as a teacher who handles Python API requests.

diff --git a/units/en/unit5/quiz.mdx b/units/en/unit5/quiz.mdx

new file mode 100644

index 00000000..7b9ec0c8

--- /dev/null

+++ b/units/en/unit5/quiz.mdx

@@ -0,0 +1,130 @@

+# Quiz

+

+The best way to learn and [to avoid the illusion of competence](https://www.coursera.org/lecture/learning-how-to-learn/illusions-of-competence-BuFzf) **is to test yourself.** This will help you to find **where you need to reinforce your knowledge**.

+

+### Q1: Which of the following tools are specifically designed for video games development?

+

+

+

+### Q2: What of the following statements are true about Unity ML-Agents?

+

+

+

+### Q3: Fill the missing letters

+

+- In Unity ML-Agents, the Policy of an Agent is called a b _ _ _ n

+- The component in charge of orchestrating the agents is called the _ c _ _ _ m _

+

+

+Solution

+- b r a i n

+- a c a d e m y

+

+

+### Q4: Define with your own words what is a `raycast`

+

+

+Solution

+A raycast is (most of the times) a linear projection, as a `laser` which aims to detect collisions through objects.

+

+

+### Q5: Which are the differences between capturing the environment using `frames` or `raycasts`?

+

+

+

+

+### Q6: Name several environment and agent input variables used to train the agent in the Snowball or Pyramid environments

+

+

+Solution

+- Collisions of the raycasts spawned from the agent detecting blocks, (invisible) walls, stones, our target, switches, etc.

+- Traditional inputs describing agent features, as its speed

+- Boolean vars, as the switch (on/off) in Pyramids or the `can I shoot?` in the SnowballTarget.

+

+

+

+Congrats on finishing this Quiz 🥳, if you missed some elements, take time to read the chapter again to reinforce (😏) your knowledge.

diff --git a/units/en/unit6/hands-on.mdx b/units/en/unit6/hands-on.mdx

index 9d34e59f..5bc8e75f 100644

--- a/units/en/unit6/hands-on.mdx

+++ b/units/en/unit6/hands-on.mdx

@@ -1,4 +1,4 @@

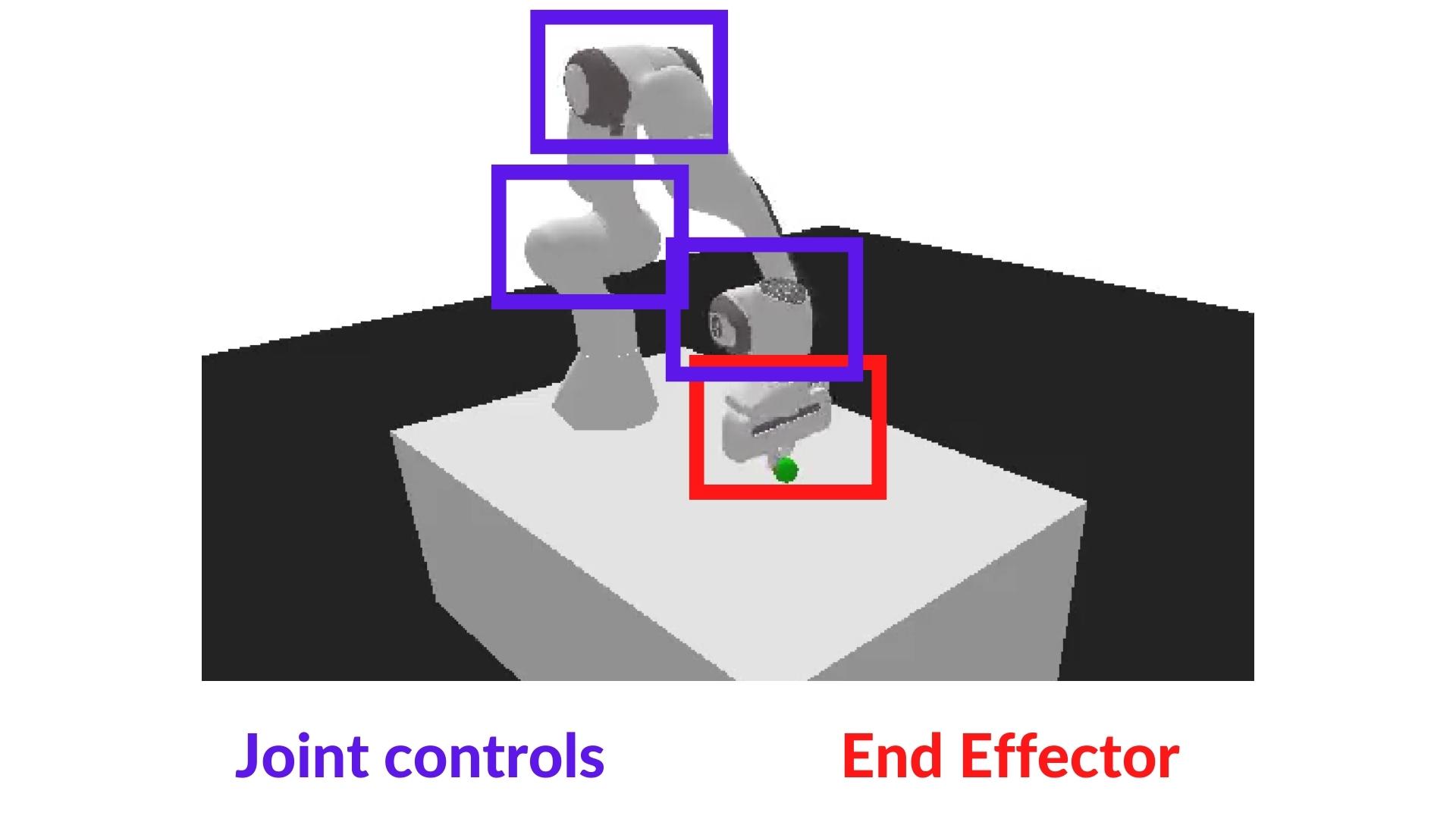

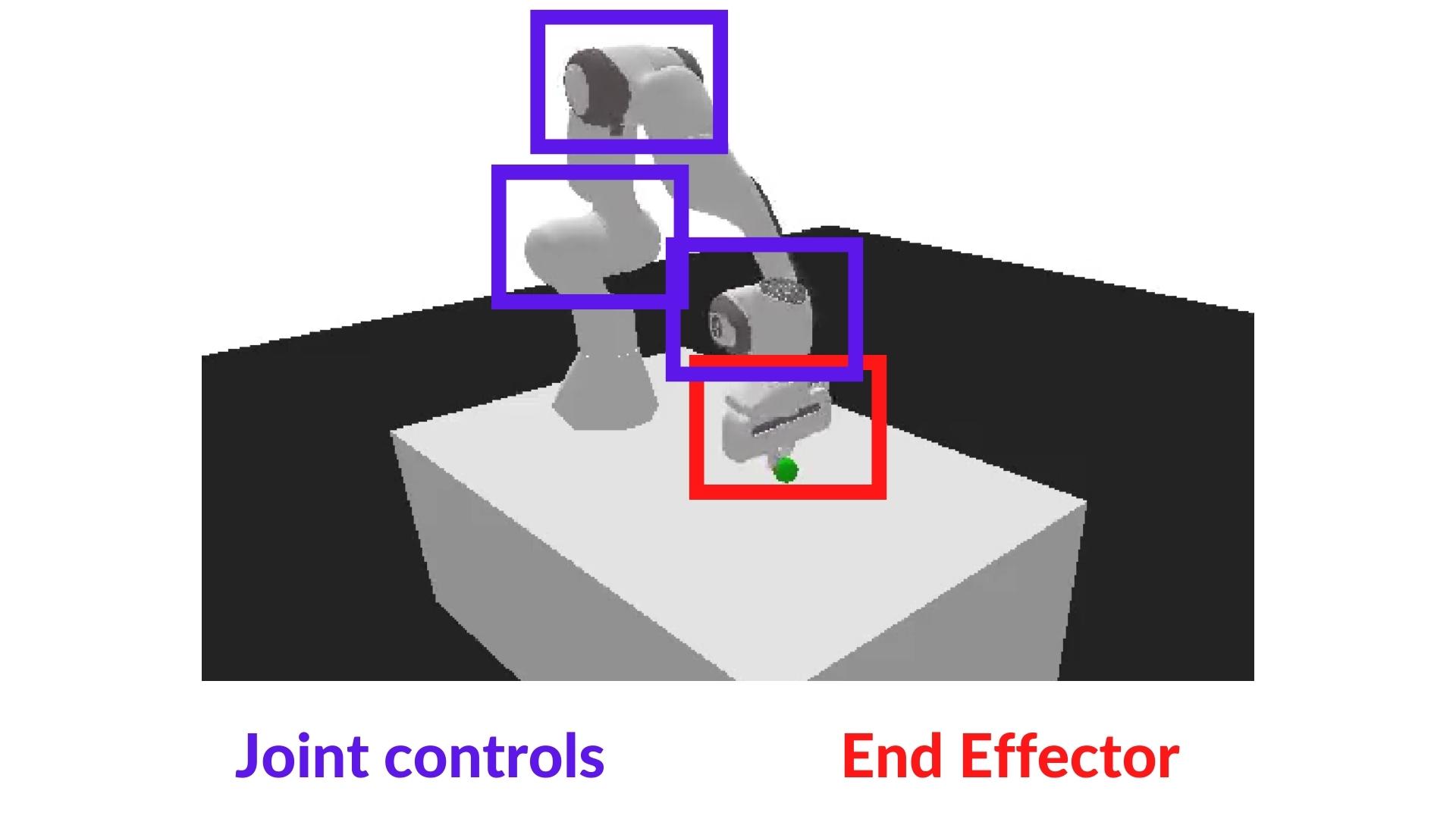

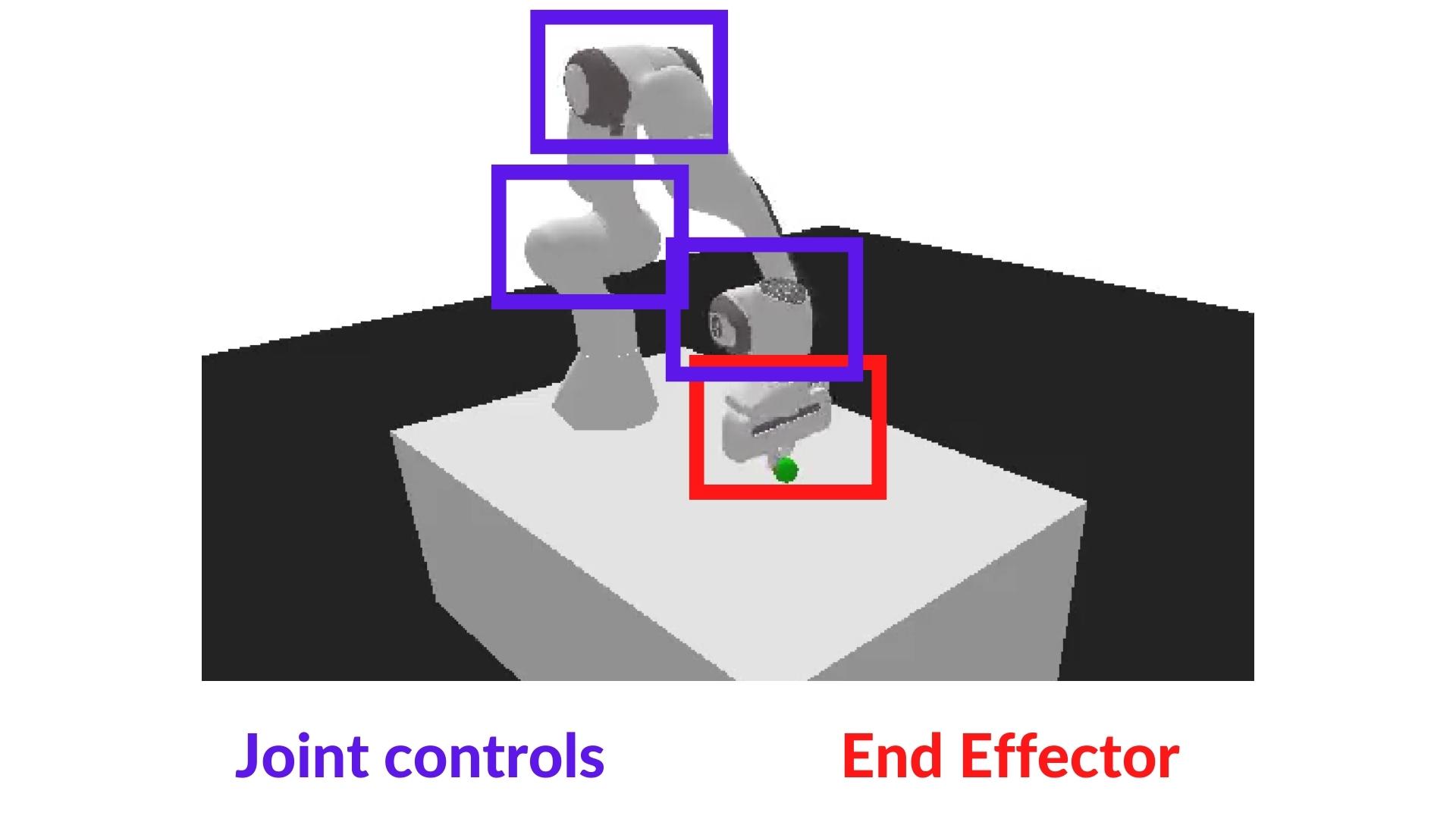

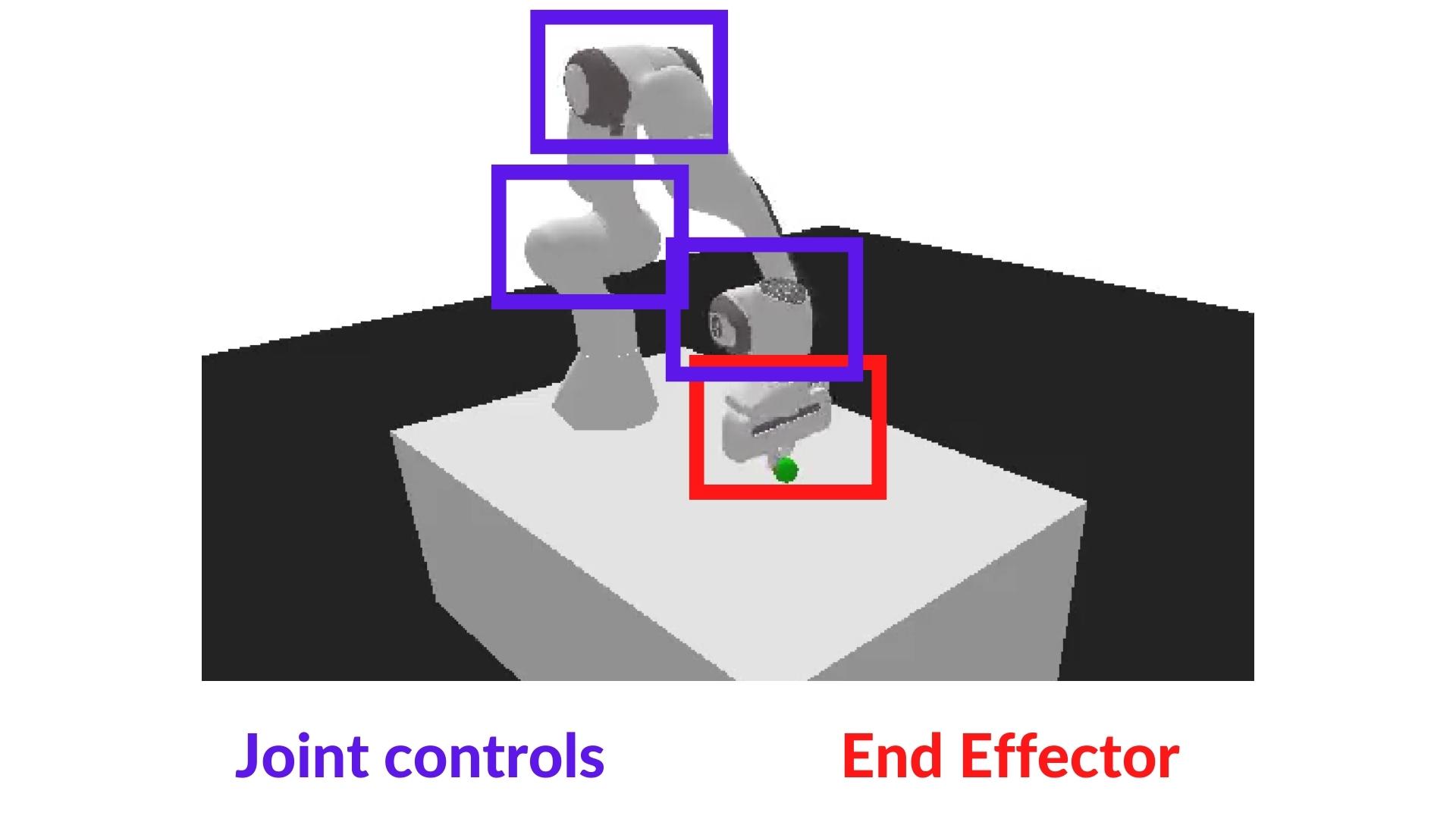

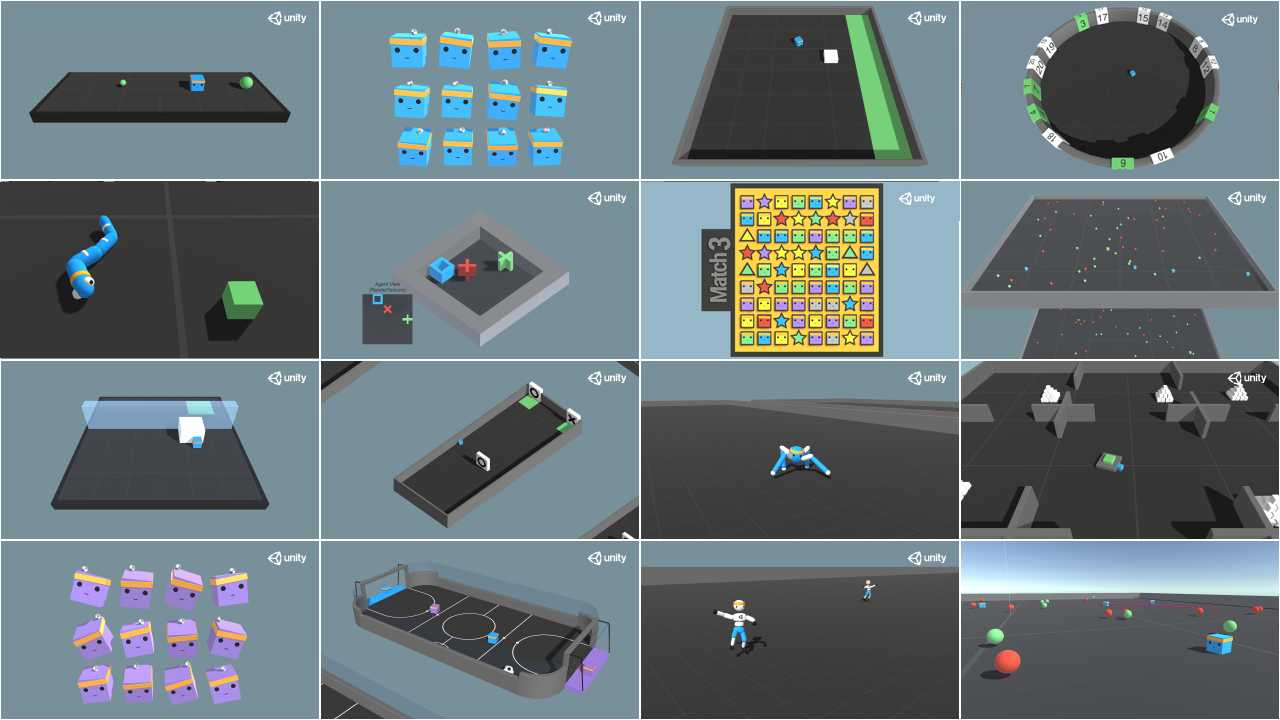

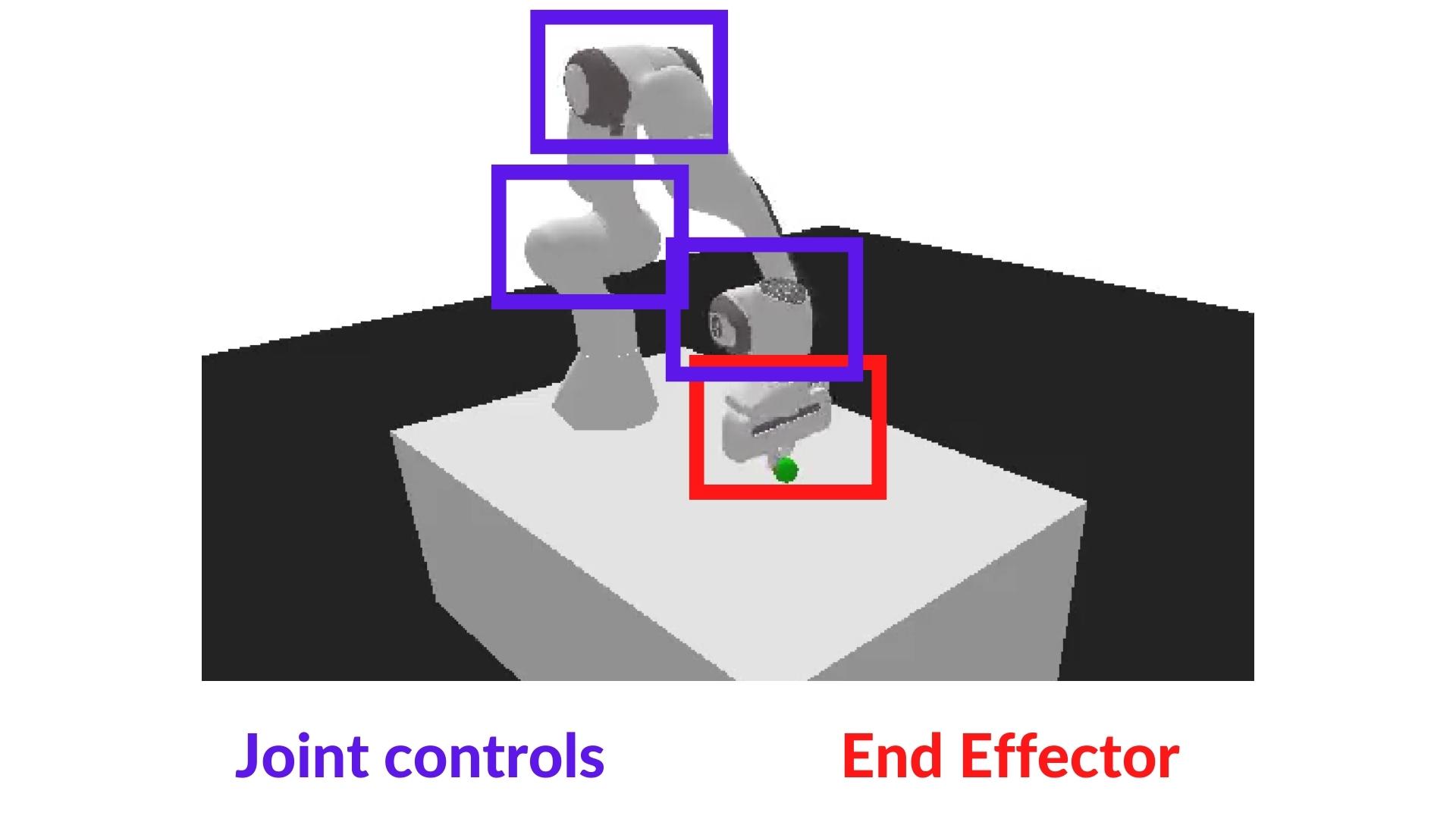

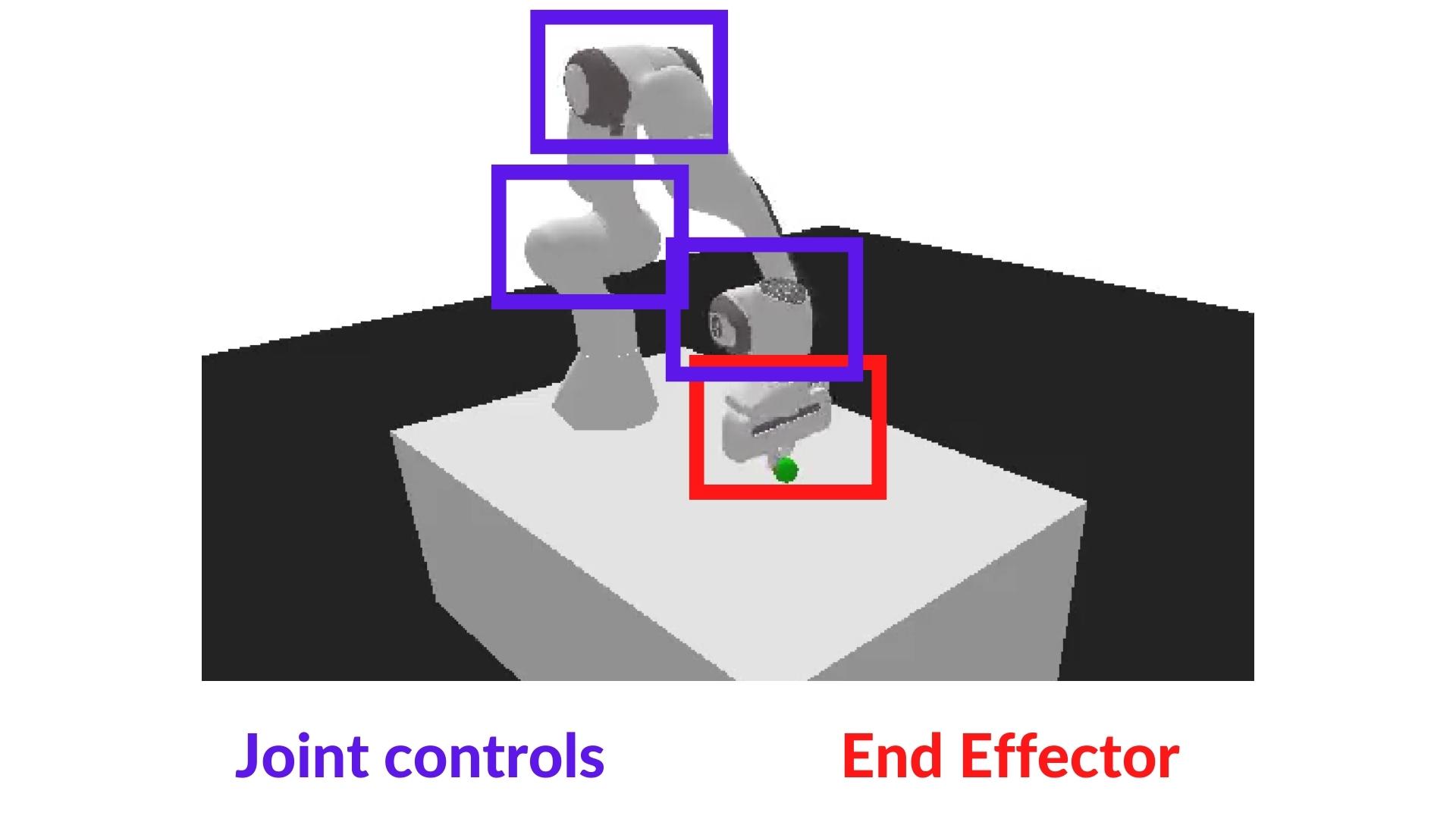

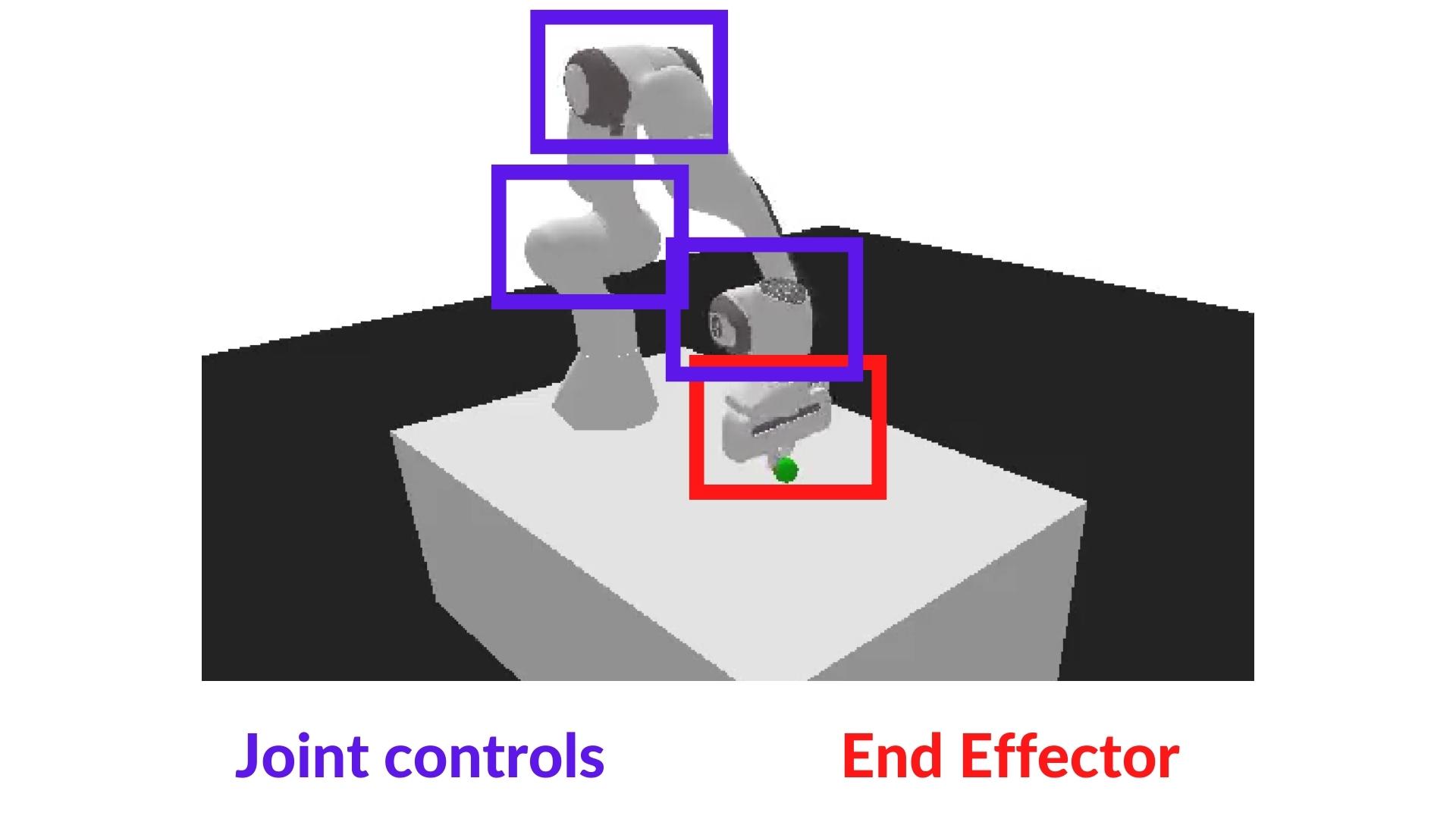

-# Advantage Actor Critic (A2C) using Robotics Simulations with PyBullet and Panda-Gym 🤖 [[hands-on]]

+# Advantage Actor Critic (A2C) using Robotics Simulations with Panda-Gym 🤖 [[hands-on]]

-Now that you've studied the theory behind Advantage Actor Critic (A2C), **you're ready to train your A2C agent** using Stable-Baselines3 in robotic environments. And train two robots:

-

-- A spider 🕷️ to learn to move.

+Now that you've studied the theory behind Advantage Actor Critic (A2C), **you're ready to train your A2C agent** using Stable-Baselines3 in a robotic environment. And train a:

- A robotic arm 🦾 to move to the correct position.

-We're going to use two Robotics environments:

-

-- [PyBullet](https://github.com/bulletphysics/bullet3)

+We're going to use

- [panda-gym](https://github.com/qgallouedec/panda-gym)

- -

-

To validate this hands-on for the certification process, you need to push your two trained models to the Hub and get the following results:

-- `AntBulletEnv-v0` get a result of >= 650.

-- `PandaReachDense-v2` get a result of >= -3.5.

+- `PandaReachDense-v3` get a result of >= -3.5.

To find your result, [go to the leaderboard](https://huggingface.co/spaces/huggingface-projects/Deep-Reinforcement-Learning-Leaderboard) and find your model, **the result = mean_reward - std of reward**

-**If you don't find your model, go to the bottom of the page and click on the refresh button.**

-

For more information about the certification process, check this section 👉 https://huggingface.co/deep-rl-course/en/unit0/introduction#certification-process

**To start the hands-on click on Open In Colab button** 👇 :

@@ -37,11 +27,10 @@ For more information about the certification process, check this section 👉 ht