diff --git a/src/pages/docs/addons/update.md b/src/pages/docs/addons/update.md

index 2c605ce3..c0a7d48a 100644

--- a/src/pages/docs/addons/update.md

+++ b/src/pages/docs/addons/update.md

@@ -36,12 +36,18 @@ Updating an addon in Testsigma keeps your tools up-to-date and functional. First

## **Updating Addon**

1. Modify the addon’s code to fix issues, add features, or adjust settings. Compress the file into **Zipped** Format.

-2. In Testsigma Application, Click on the **Addons** icon from the left navigation bar. Go to the **My Addons** page in Testsigma and select the addon you want to update.

-3. Select **Update** from the dropdown and In **Upload Zip File** Overlay, Browse and select the zipped file, then click **Update**.

-4. After uploading the code, click on **Publish** from the dropdown menu. Then, choose your publish options:

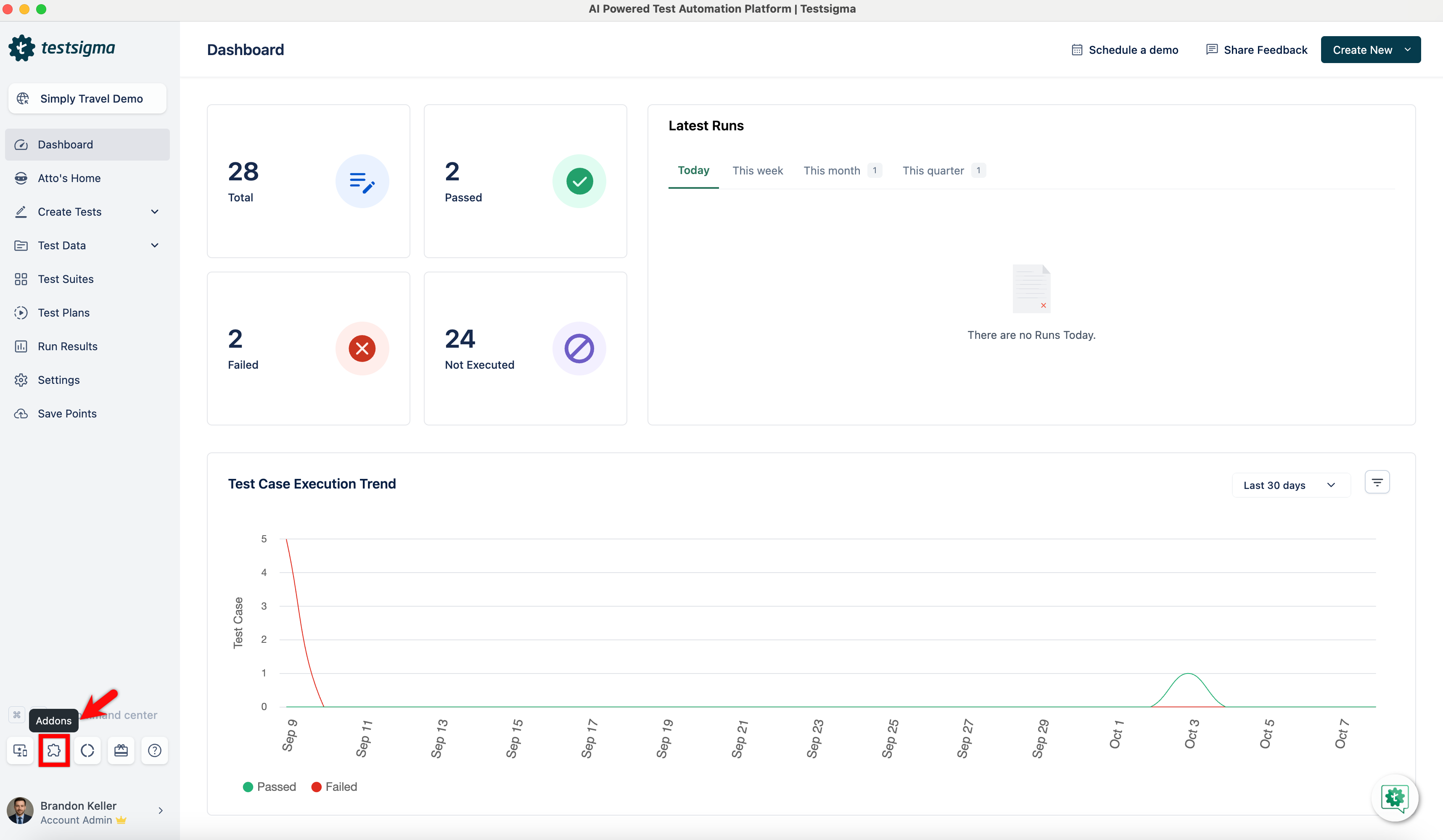

+2. In Testsigma Application, click on the **Addons** icon from the left navigation bar.

+

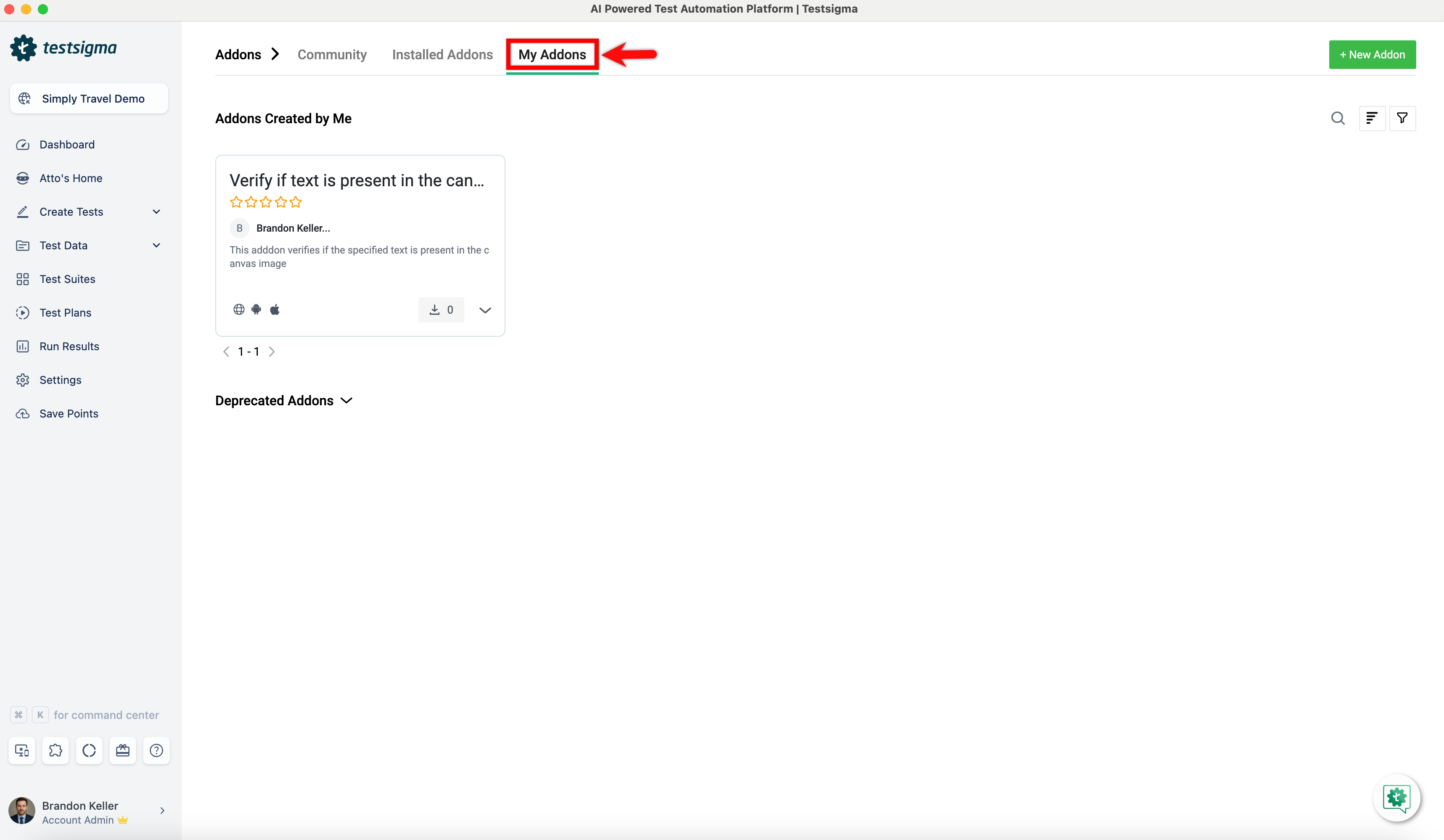

+3. Go to the **My Addons** page in Testsigma and select the addon you want to update.

+

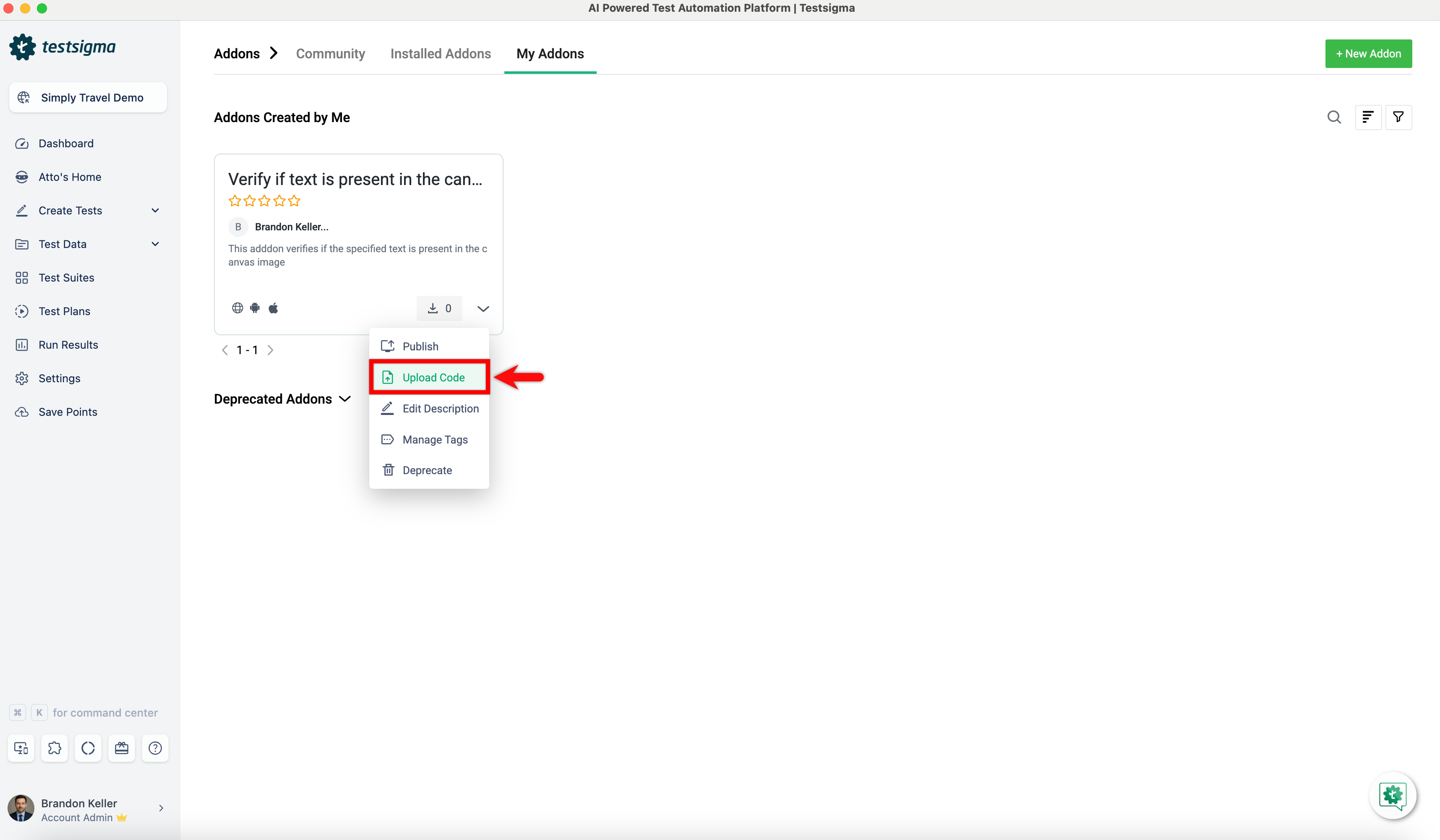

+4. Select **Upload Code** from the dropdown.

+

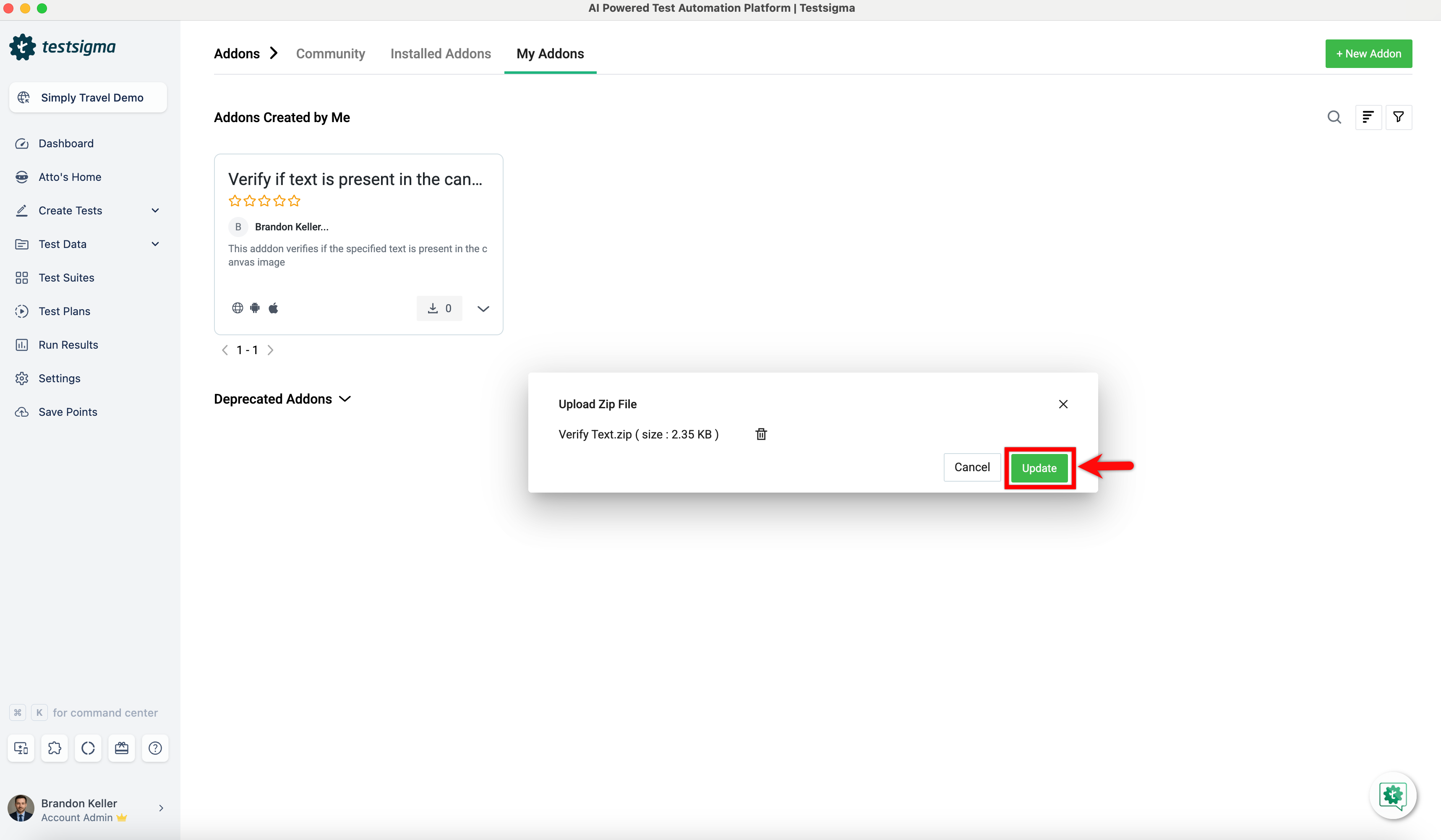

+5. In the **Upload Zip File** overlay, browse to and select the zipped file, then click **Update**.

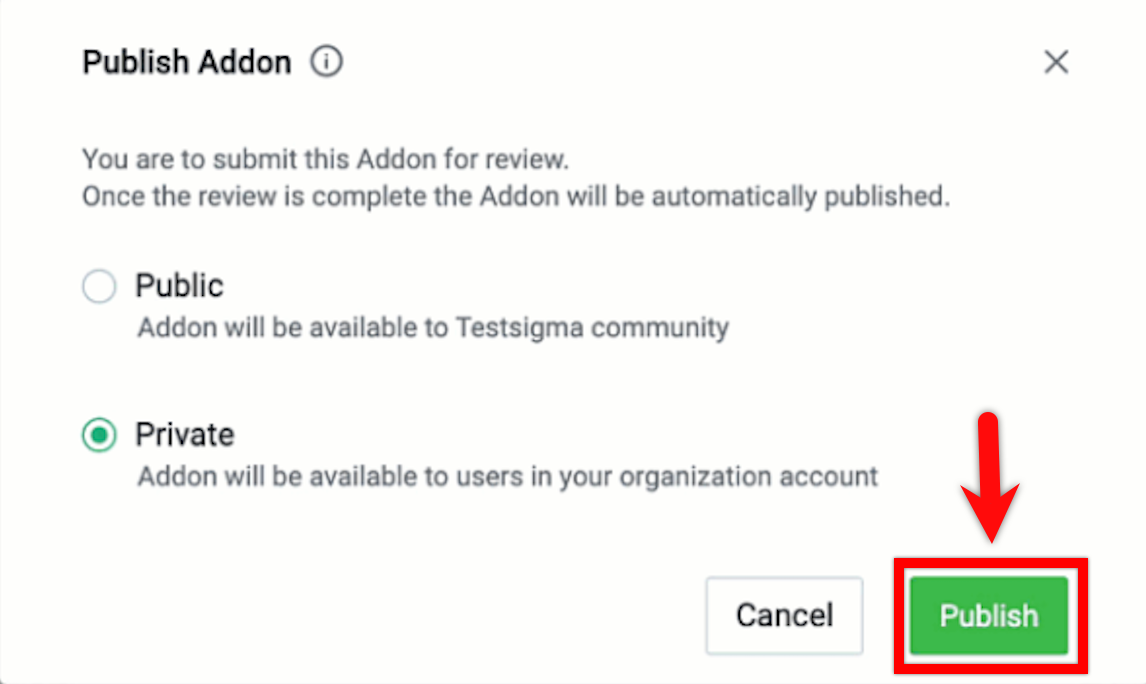

+6. After uploading the code, click on **Publish** from the dropdown menu. Then, choose your publish options:

- **Public**: Make the addon available to the entire Testsigma community.

- **Private**: Restrict availability to users within your organization.

-5. An automatic security check will be done. If it passes, the addon will be published. If it fails, you will get an email notification.

+

+7. An automatic security check will be done. If it passes, the addon will be published. If it fails, you will get an email notification.

---

diff --git a/src/pages/docs/configuration/api-keys.md b/src/pages/docs/configuration/api-keys.md

index 1a25d3f1..bde91b10 100644

--- a/src/pages/docs/configuration/api-keys.md

+++ b/src/pages/docs/configuration/api-keys.md

@@ -25,12 +25,18 @@ You can access and control it programmatically once you generate the API key. Te

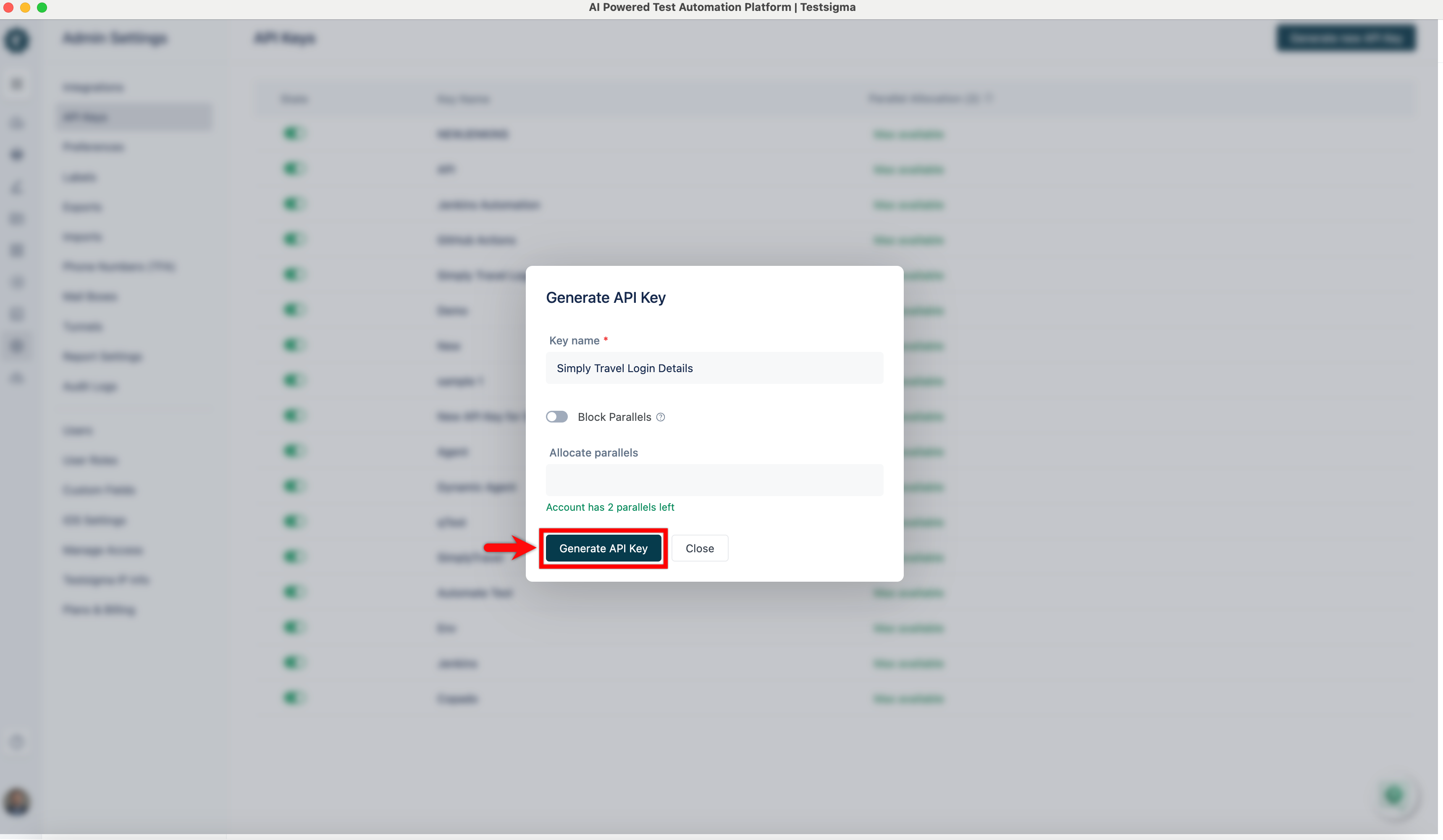

Follow the below steps to generate an API key in Testsigma:

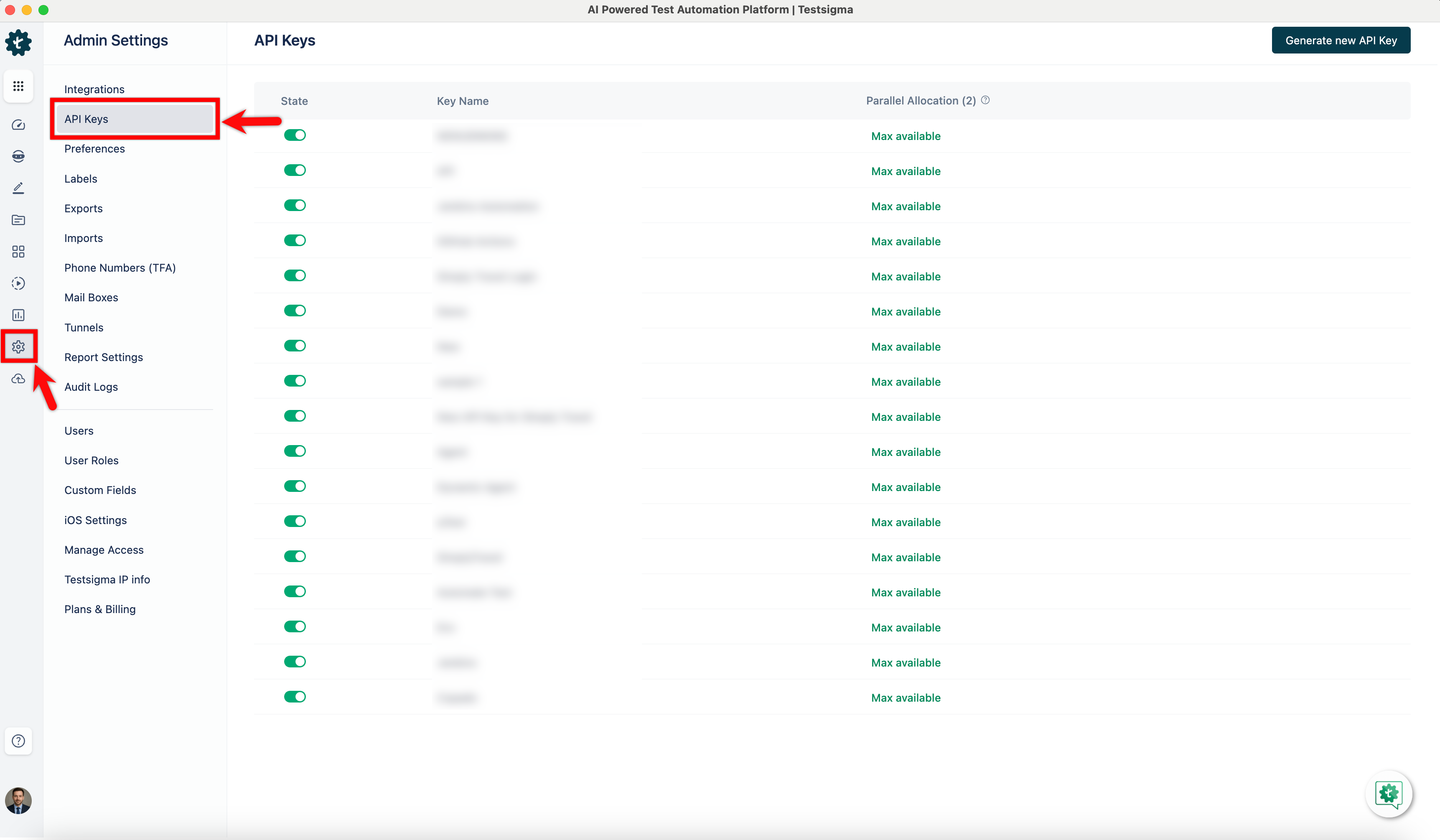

1. Navigate to **Settings** > **API Keys** in the left-side navbar.

+

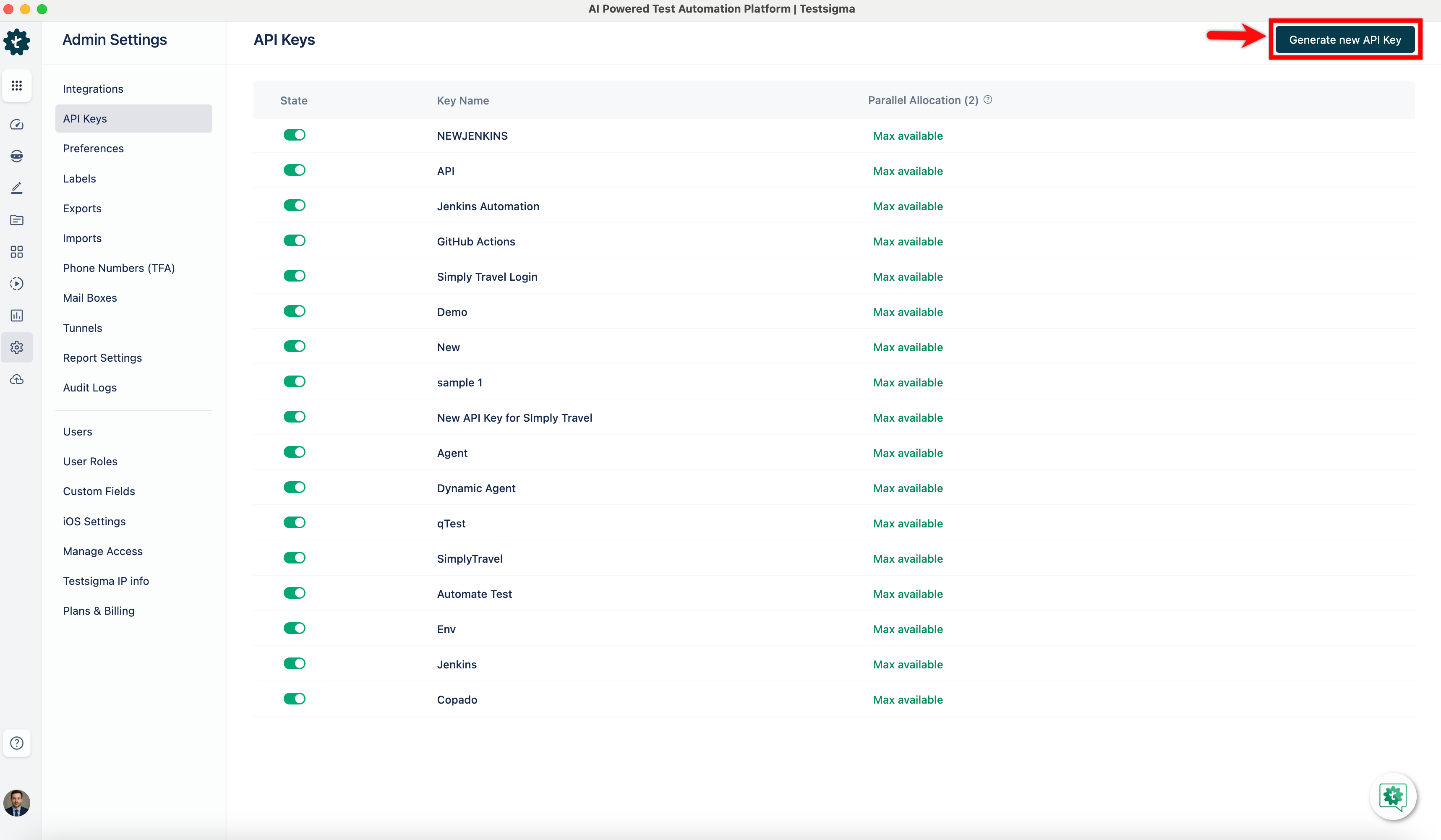

2. Click the **Generate new API Key** button in the top right corner of the screen on the **API Keys** page, and a **Generate API Key** pop-up overlay will appear.

+

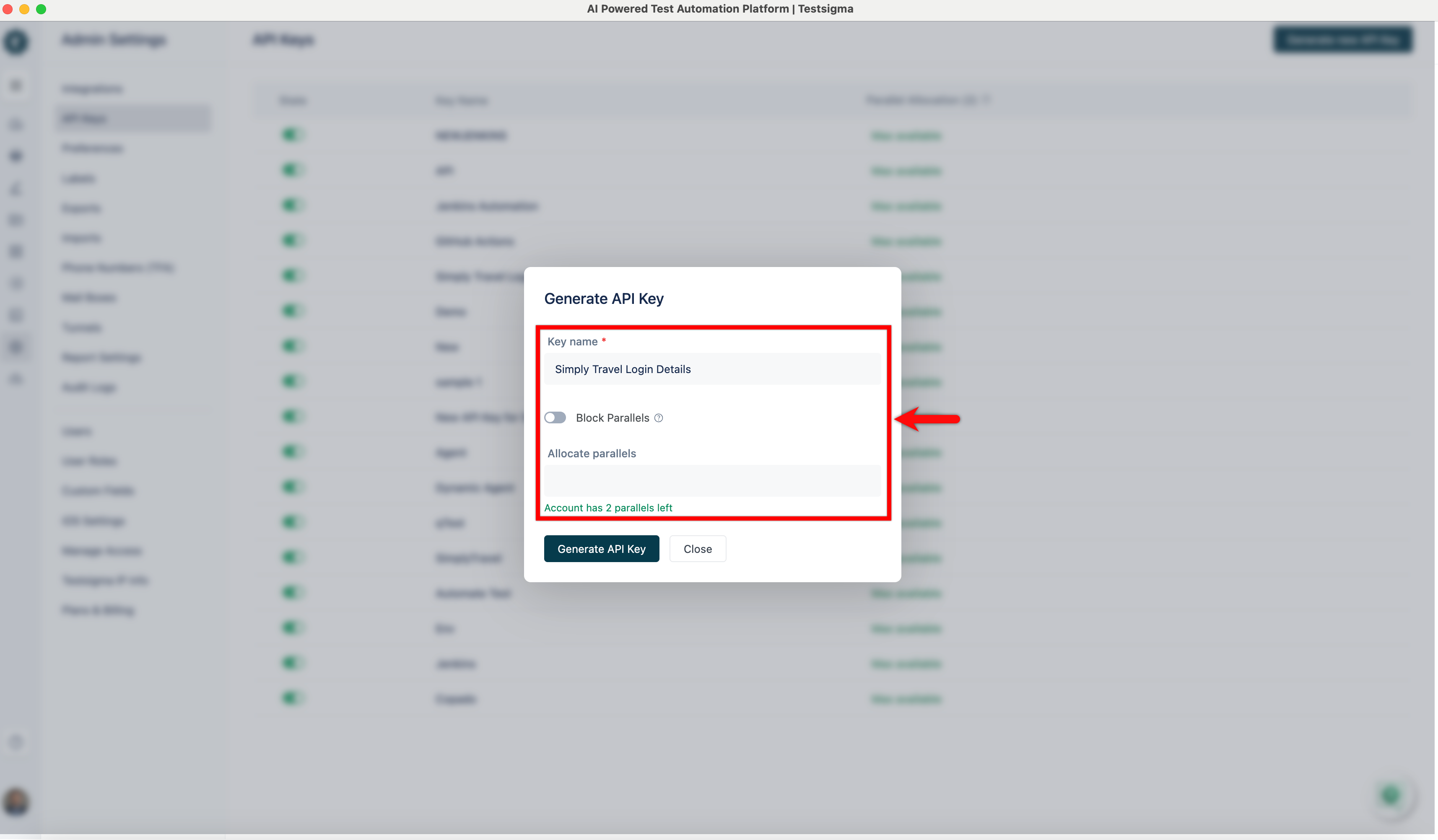

3. In the pop-up overlay for **Generate API Key**, you can enter a **Name** for your **API Key** and specify the **number of parallel test** executions for this key. The overlay provides a toggle for restricting parallel test execution for this key.

+

-4. After entering a name and configuring parallel settings, click the **Generate Key** button. The API key will be displayed on the screen. Click the **Copy API Key** button to copy it to your clipboard for use in your applications or scripts.

+4. After entering a name and configuring parallel settings, click the **Generate Key** button.

+

+

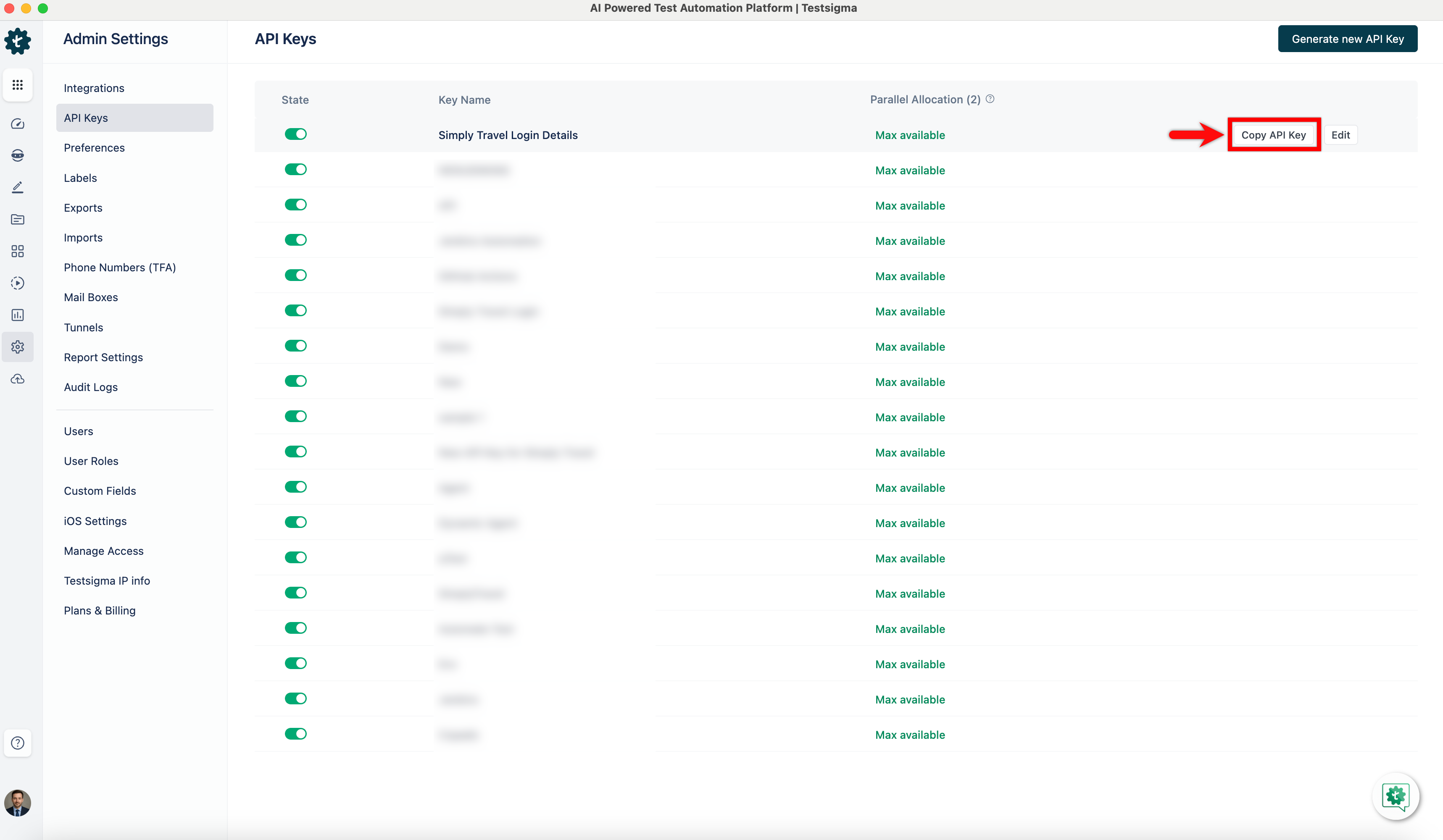

+5. The API key will be displayed on the screen. Click the **Copy API Key** button to copy it to your clipboard for use in your applications or scripts.

[[info | **NOTE**:]]

| Only users with **Admin** or **Super Admin** roles can generate API keys in Testsigma.
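
As a rough illustration of using the copied key in a script, the sketch below builds (but does not send) an HTTP request that carries the key. The endpoint URL, the `TESTSIGMA_API_KEY` environment variable name, and the `Bearer` authorization scheme are assumptions made for this example; check the Testsigma API reference for the actual endpoints and header format.

```python
import os
import urllib.request

# Hypothetical placeholder endpoint, not a documented Testsigma URL.
API_URL = "https://example.invalid/api/v1/resource"


def build_request(api_key: str, url: str = API_URL) -> urllib.request.Request:
    """Return a request object that carries the API key as a Bearer token."""
    if not api_key:
        raise ValueError("API key is empty; generate one under Settings > API Keys")
    request = urllib.request.Request(url, method="GET")
    # Assumption: the key is sent in the Authorization header as a Bearer token.
    request.add_header("Authorization", f"Bearer {api_key}")
    request.add_header("Accept", "application/json")
    return request


# Read the key from the environment instead of hard-coding it in the script.
api_key = os.environ.get("TESTSIGMA_API_KEY", "demo-key-for-illustration")
request = build_request(api_key)
print(request.get_header("Authorization"))
```

Keeping the key in an environment variable rather than in the source file makes it easier to rotate and keeps it out of version control.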

diff --git a/src/pages/docs/desired-capabilities/network-logs.md b/src/pages/docs/desired-capabilities/network-logs.md

index 413500ef..728f93b5 100644

--- a/src/pages/docs/desired-capabilities/network-logs.md

+++ b/src/pages/docs/desired-capabilities/network-logs.md

@@ -50,15 +50,27 @@ This documentation will guide you on how to enable the network log in Test Case

## **Enable Network Logs in Test Case**

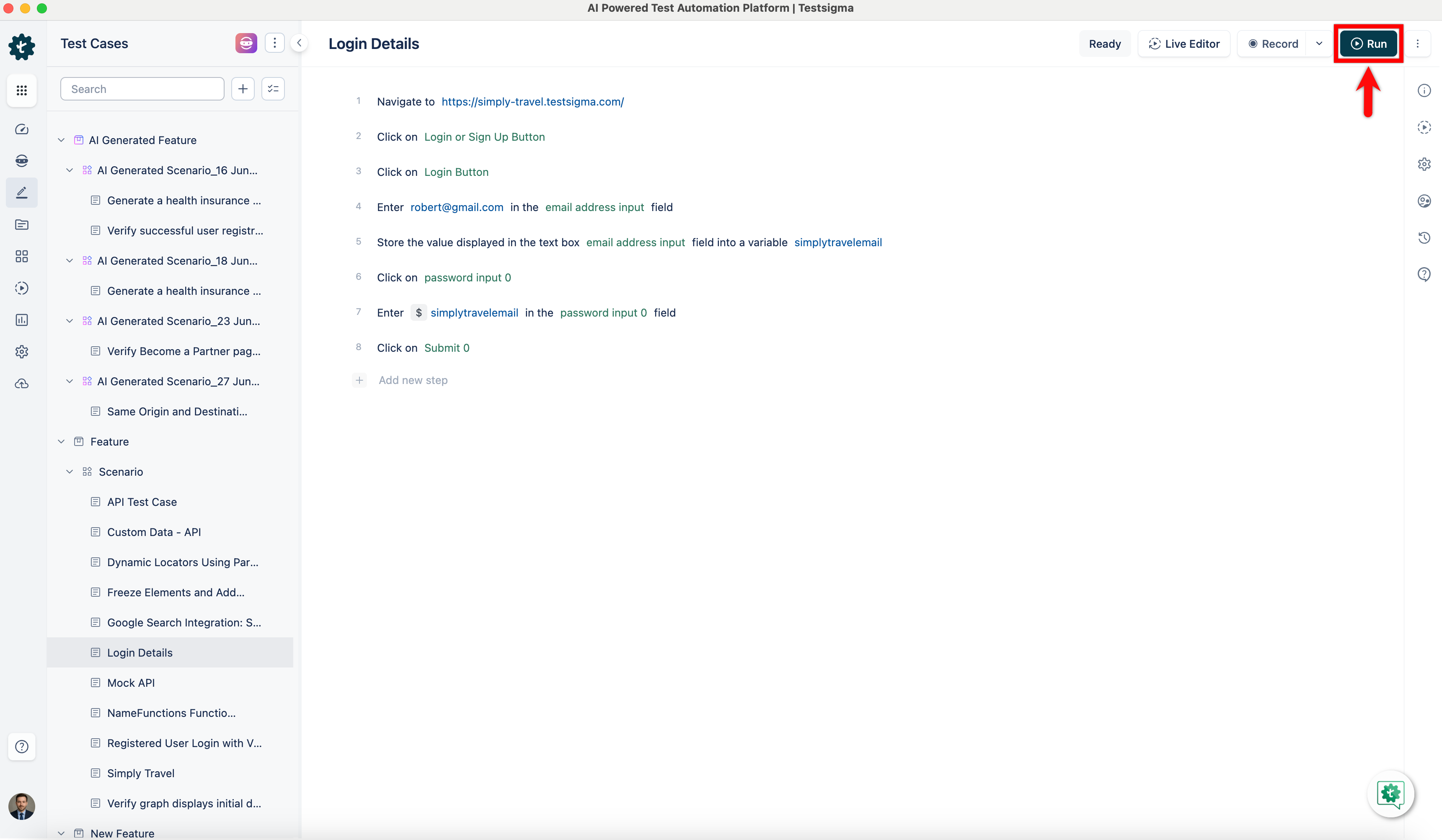

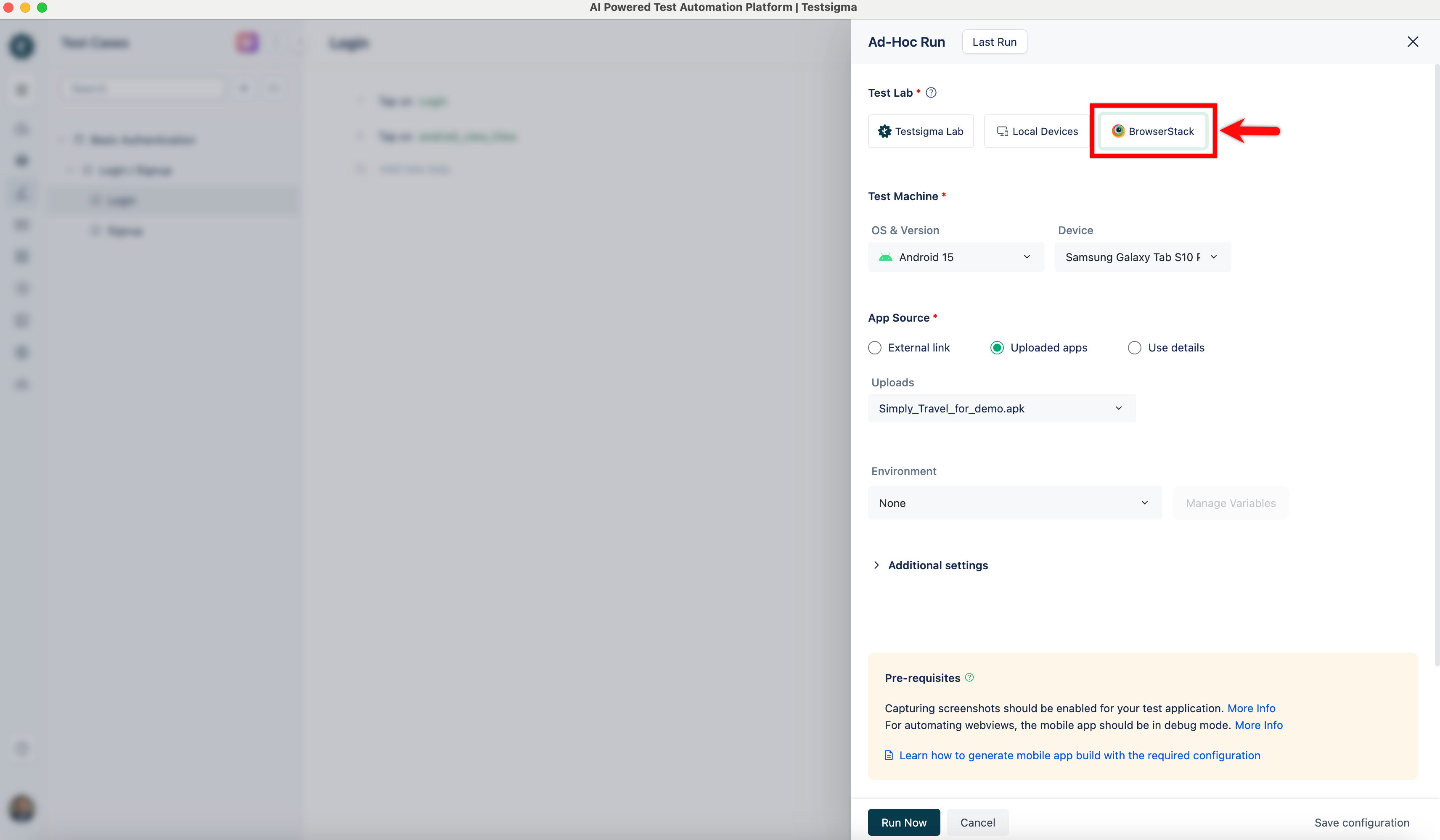

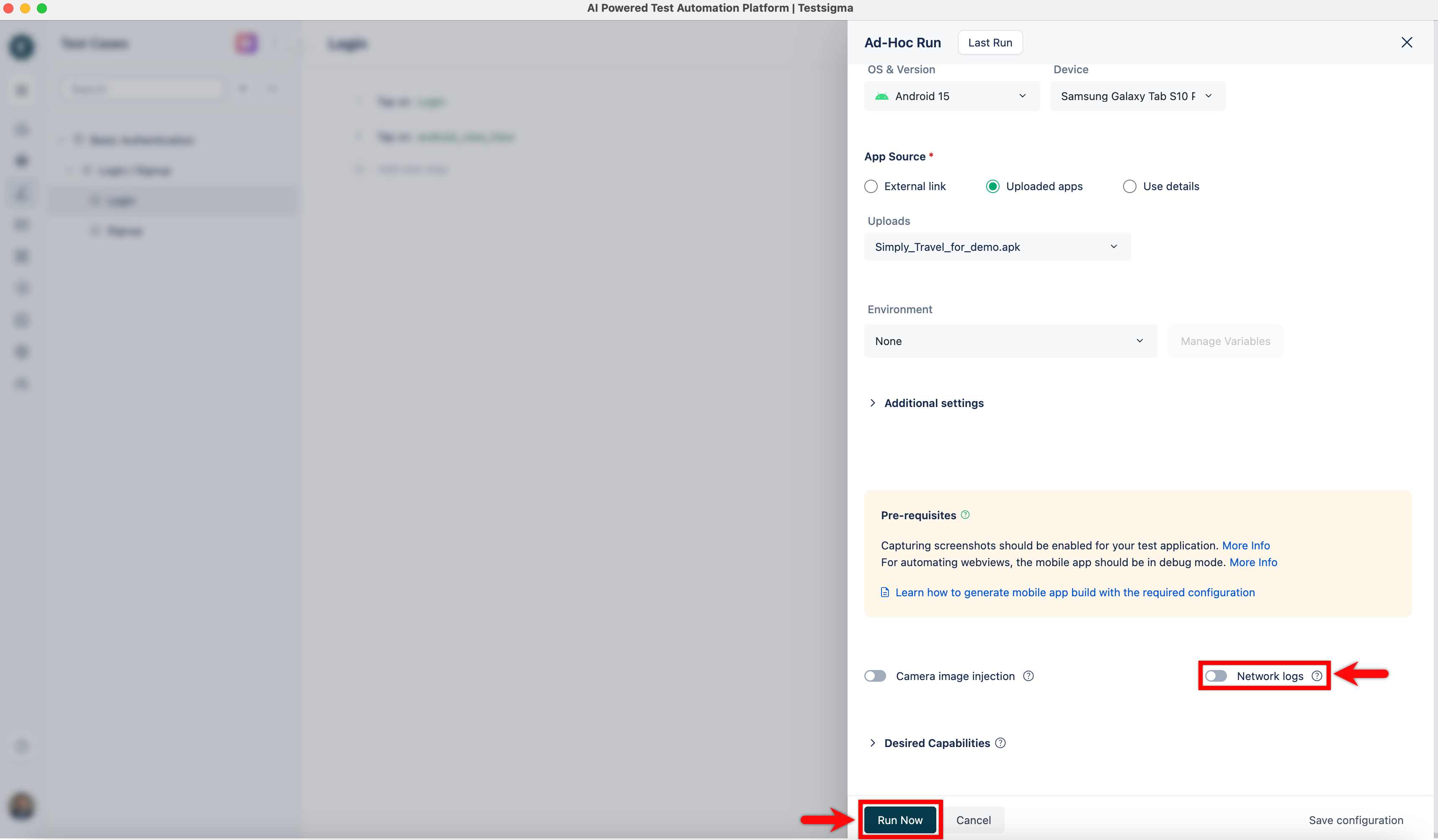

1. Click **Run** in the top right corner of the screen on the Test Case details page.

-2. Select **BrowserStack** as a Test Lab on the **Ad-hoc Ru**n page on the right side of the screen, enable the **Network Log** toggle, and click **Run Now** to start Test Execution.

+

+

+2. Select **BrowserStack** as a Test Lab on the **Ad-hoc Run** overlay.

+

+

+3. Enable the **Network Log** toggle, and click **Run Now** to start test execution.

+

---

## **Enable Network Logs in Test Plan**

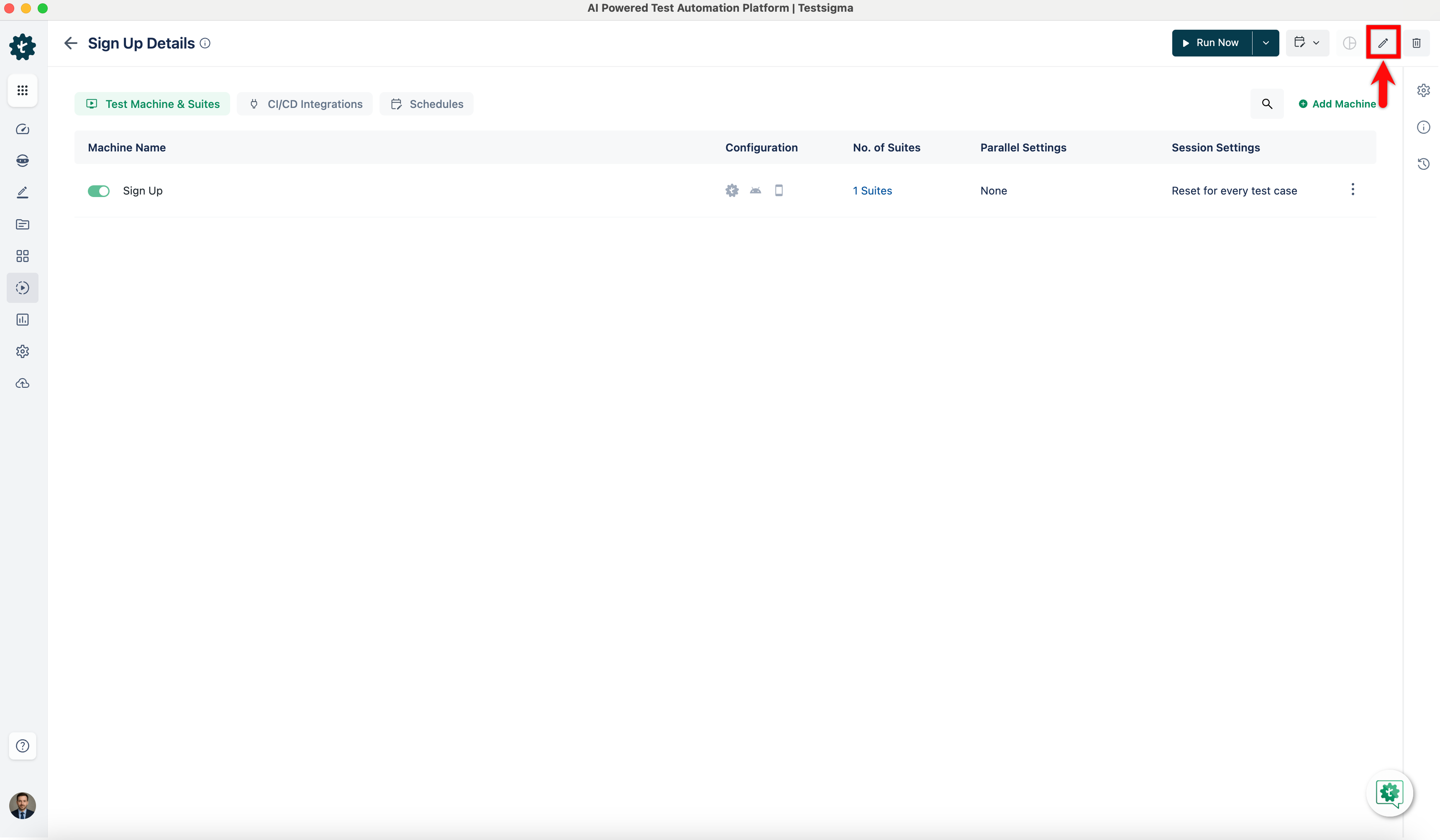

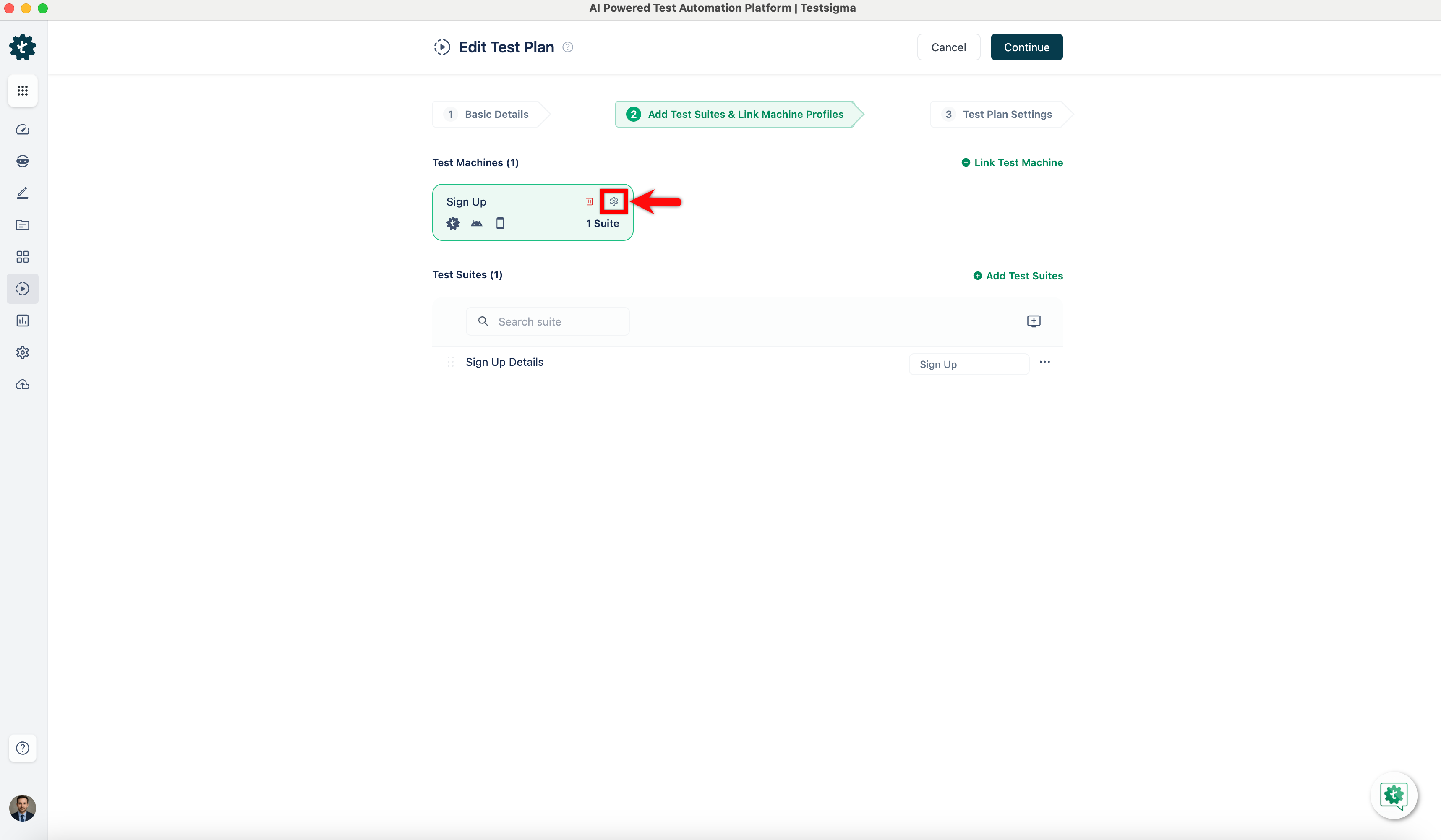

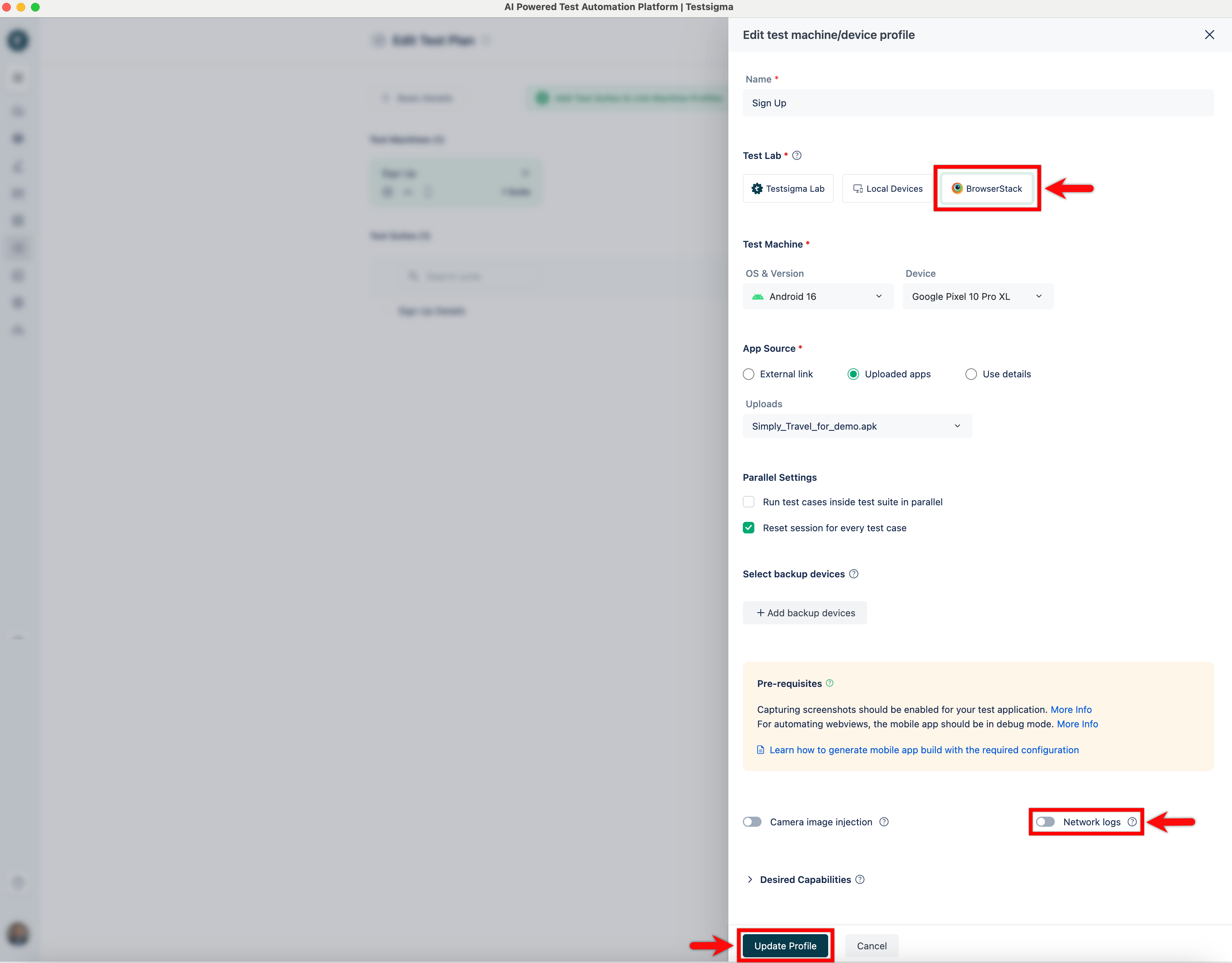

1. Navigate to the **Add Test Suites & Link Machine Profiles** tab on the **Create** or **Edit Test Plan** page.

+

+

2. Click the **Test Machine Settings** icon, and the **Adding** or **Editing Test Machine/Device Profiles** overlay will appear.

-3. On the **Add** or **Edit Machine/Device Profile** overlay, select Test Lab Type as **BrowserStack**, enable the **Network Log** toggle, and click **Create/Update**.

+

+

+3. On the **Add** or **Edit Machine/Device Profile** overlay, select Test Lab Type as **BrowserStack**, enable the **Network Log** toggle, and click **Create/Update**.

+

+

---

diff --git a/src/pages/docs/elements/dynamic-elements/with-parameter-test-data.md b/src/pages/docs/elements/dynamic-elements/with-parameter-test-data.md

index bd0e6f96..5fcd053e 100644

--- a/src/pages/docs/elements/dynamic-elements/with-parameter-test-data.md

+++ b/src/pages/docs/elements/dynamic-elements/with-parameter-test-data.md

@@ -50,9 +50,16 @@ Dynamic locators are necessary for data-driven testing because they help adjust

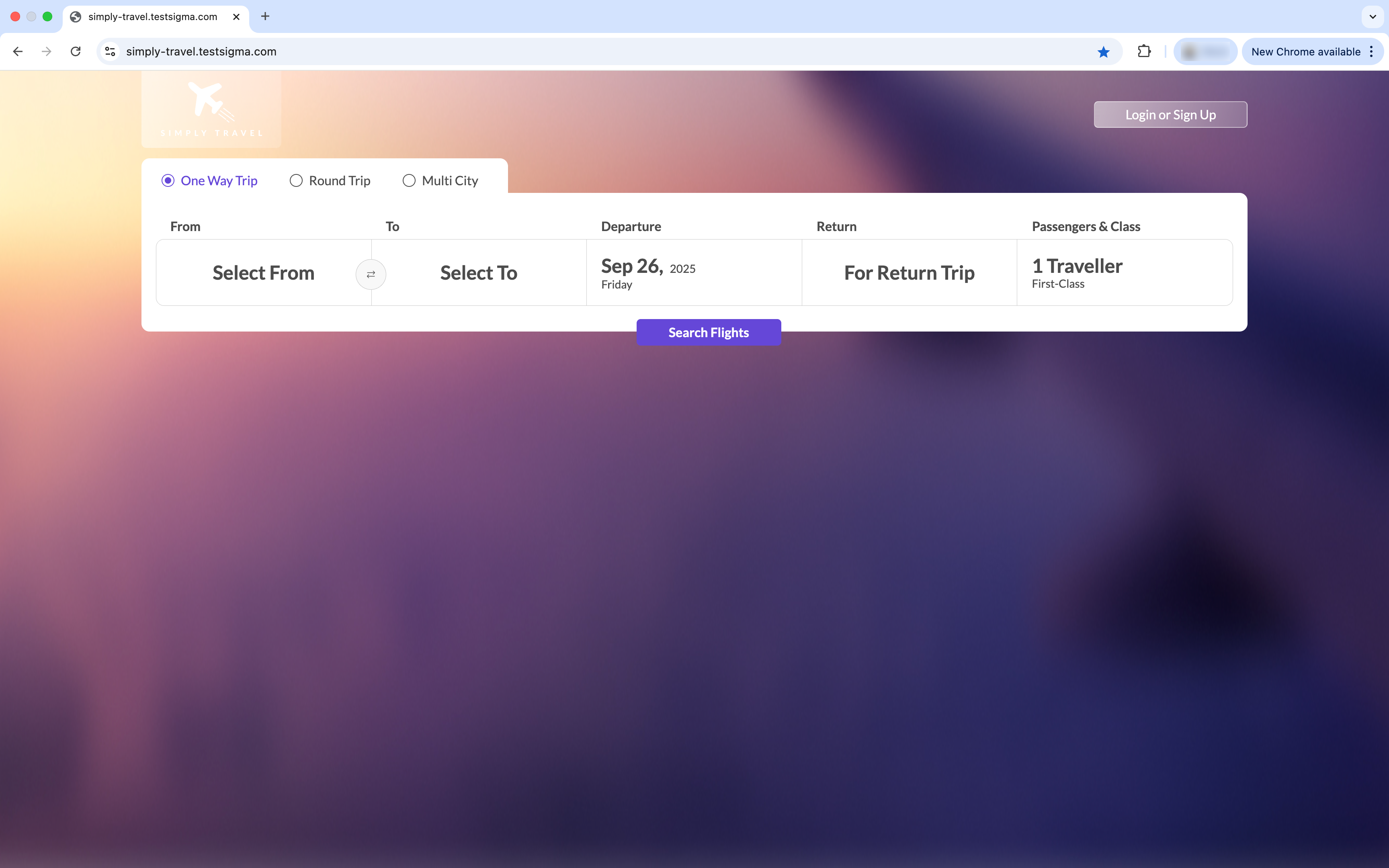

We are using the Simply Travel Flight website as an example and sorting the city names and the variable part of the element's ID attribute in the test data profile.

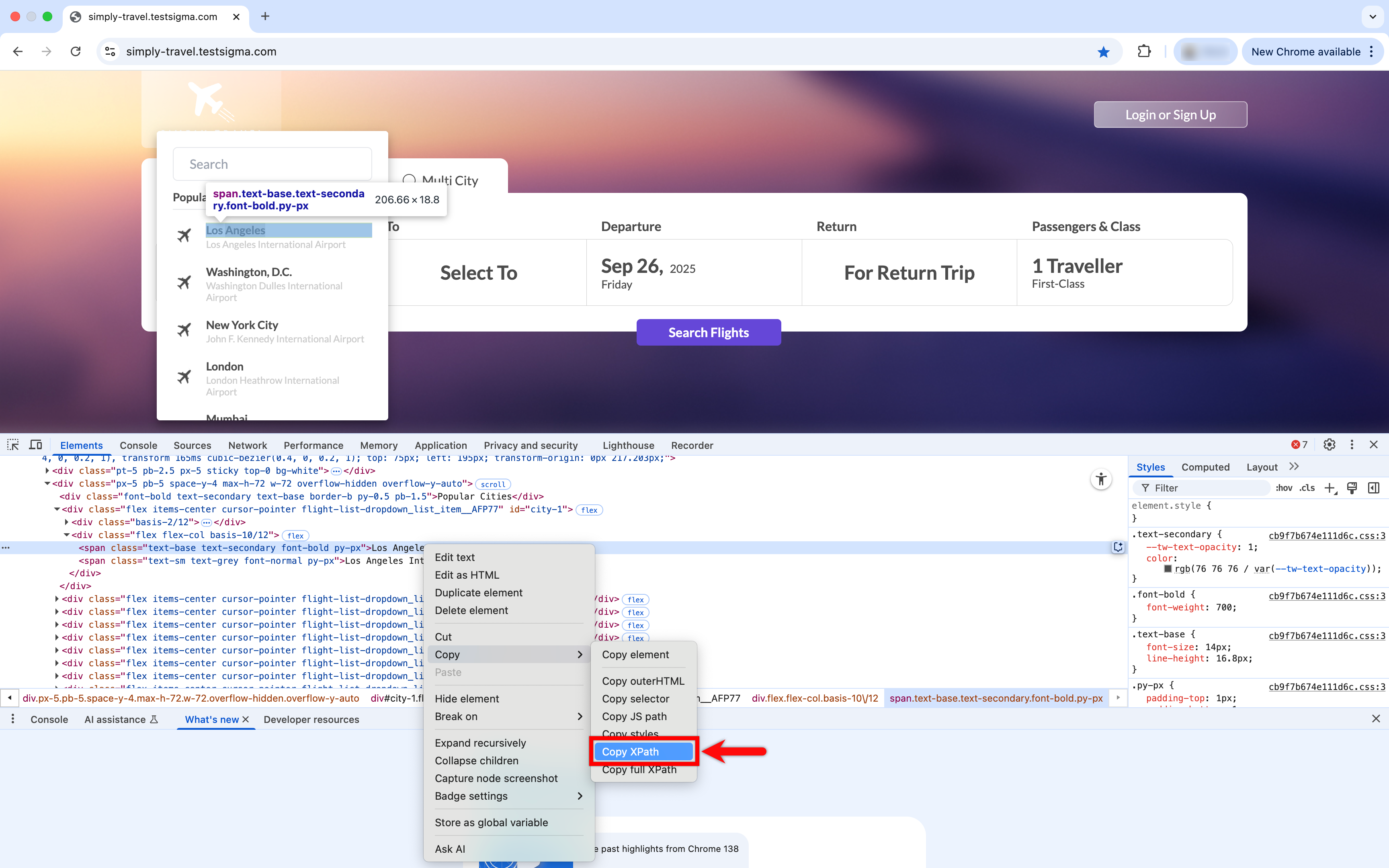

1. Navigate to the website containing the elements whose **XPath** you want to copy.

-2. **Right-click** on the desired and select **Inspect** to open **Chrome Developer Tools**.

-3. Use the **Select Element** icon to locate and inspect the element, then right-click the HTML tag and copy the **XPath**.

-4. We will use the Simply Travel Flight website as an example of the above test steps. Click **Select from Cities** and copy the **XPath** values for the cities.

+

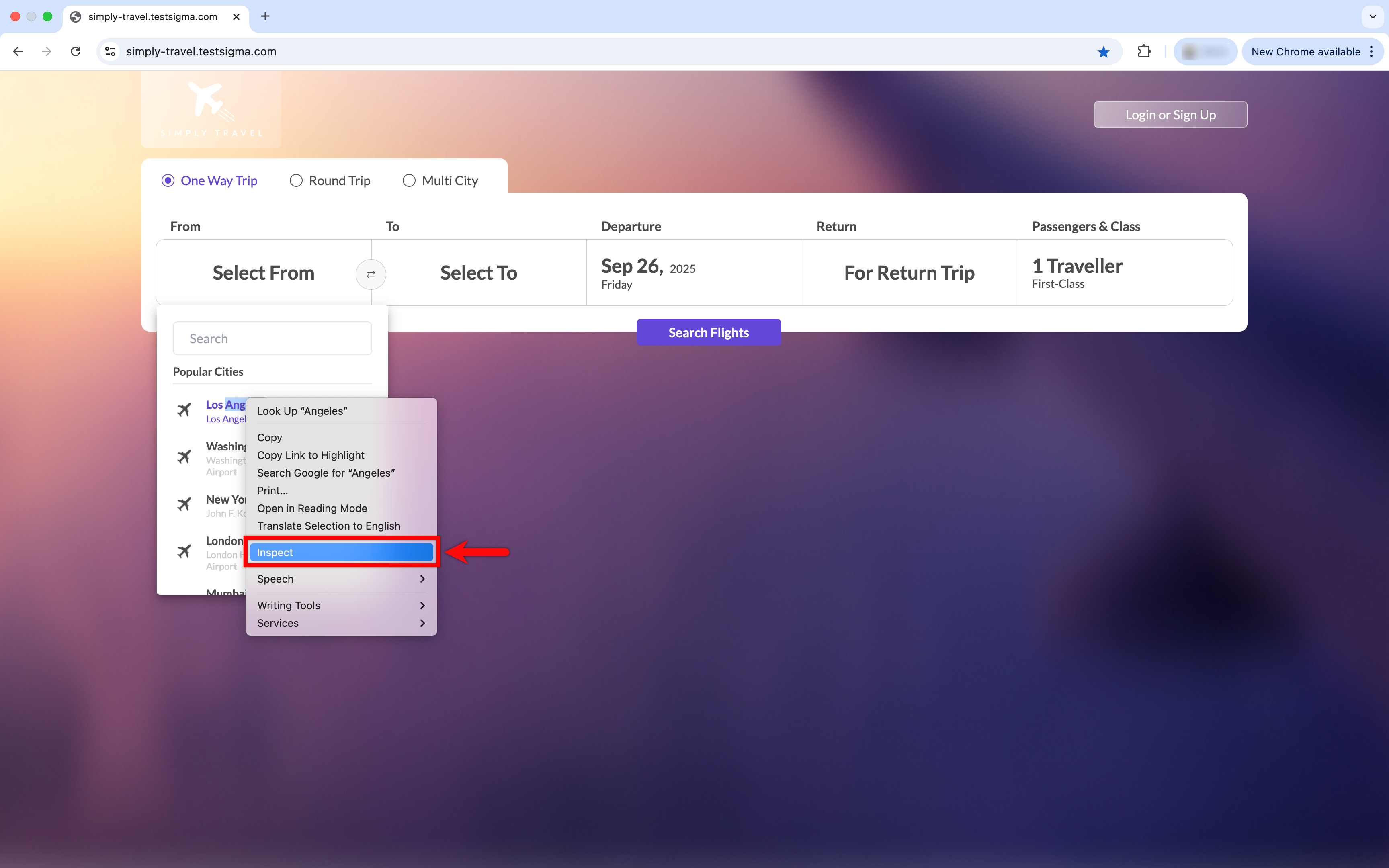

+2. We will use the Simply Travel Flight website as an example of the above test steps. Click **Select from Cities** and copy the **XPath** values for the cities.

+

+

+3. **Right-click** on the desired element and select **Inspect** to open **Chrome Developer Tools**.

+

+

+4. Use the **Select Element** icon to locate and inspect the element, then right-click the HTML tag and copy the **XPath**.

+

+

5. The table below lists the city names and their corresponding XPath values.

@@ -78,37 +85,57 @@ We are using the Simply Travel Flight website as an example and sorting the city

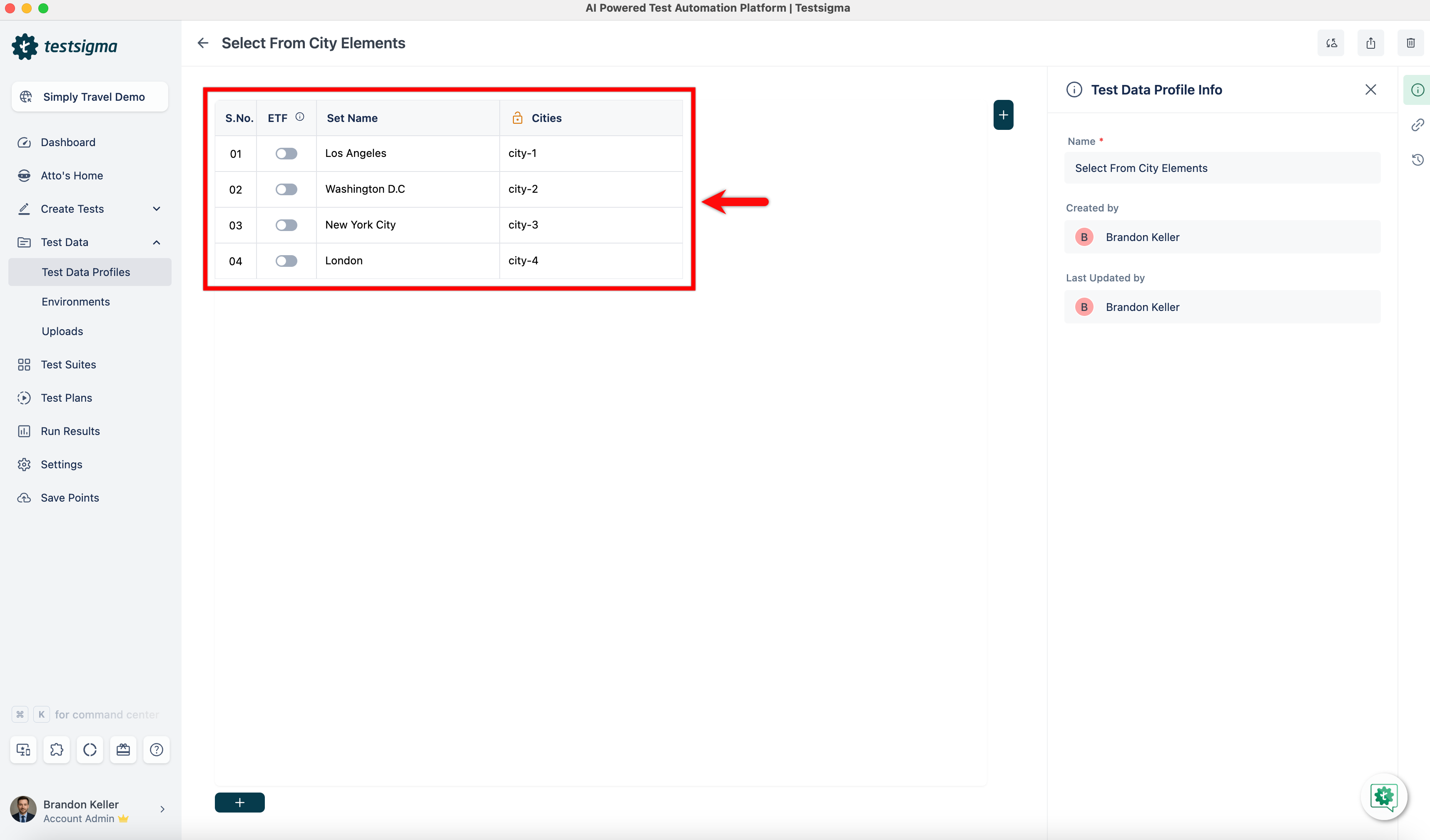

6. In the provided XPath examples, the IDs of the cities have a common portion, **city-**, followed by a variable digit from **1** to **4**. We will parameterise this by storing the city and the variable portion using a Test Data profile.

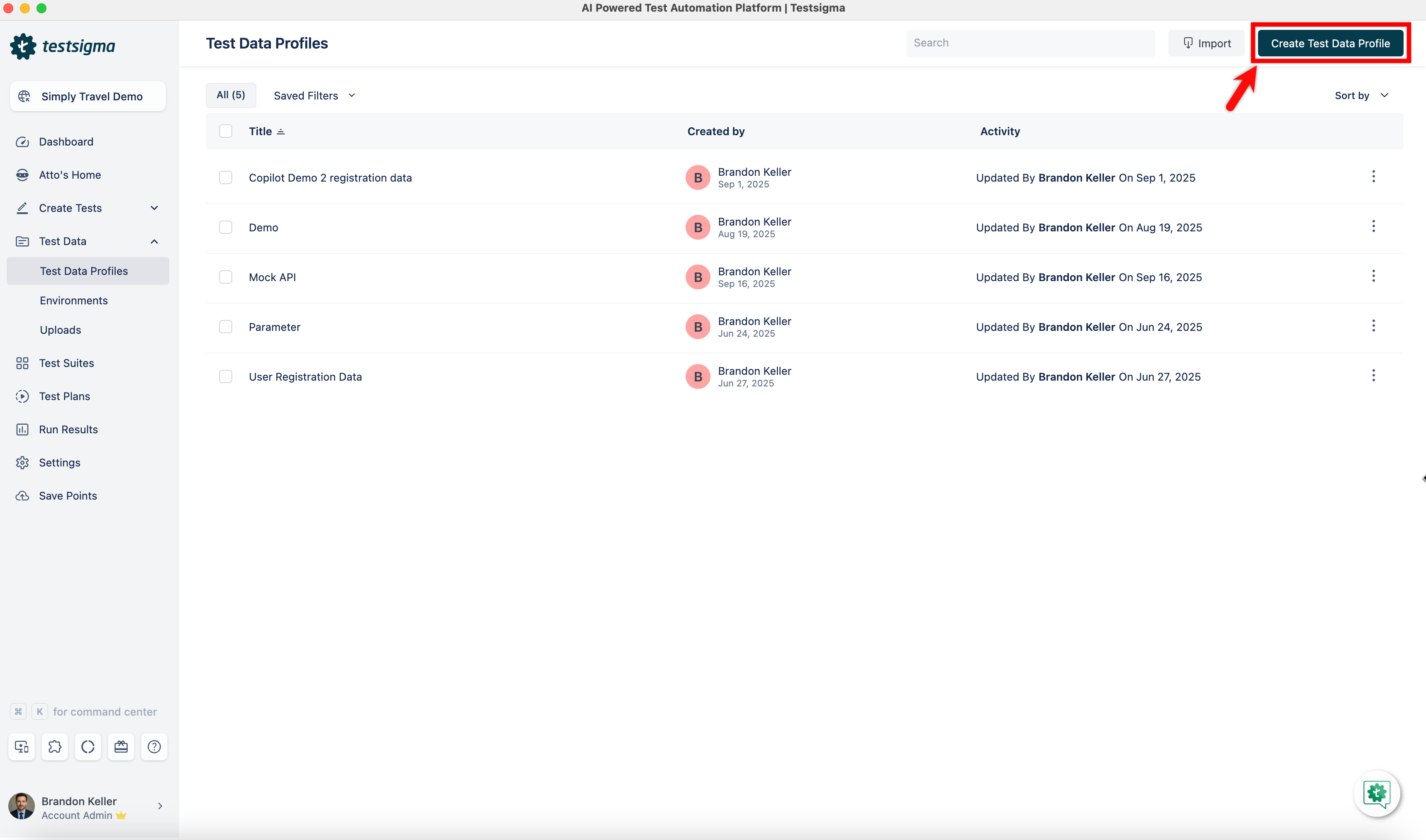

-7. Navigate to **Test Data** > **Test Data Profile**. Click on **Create Test Data Profile**.

-8. Below is the created Test Data profile for storing element ID attributes:

+7. Navigate to **Test Data** > **Test Data Profile**. Click on **Create Test Data Profile**.

+

+

+8. Below is the created Test Data profile for storing element ID attributes:

+

---

## **Creating Dynamic Elements using Parameter**

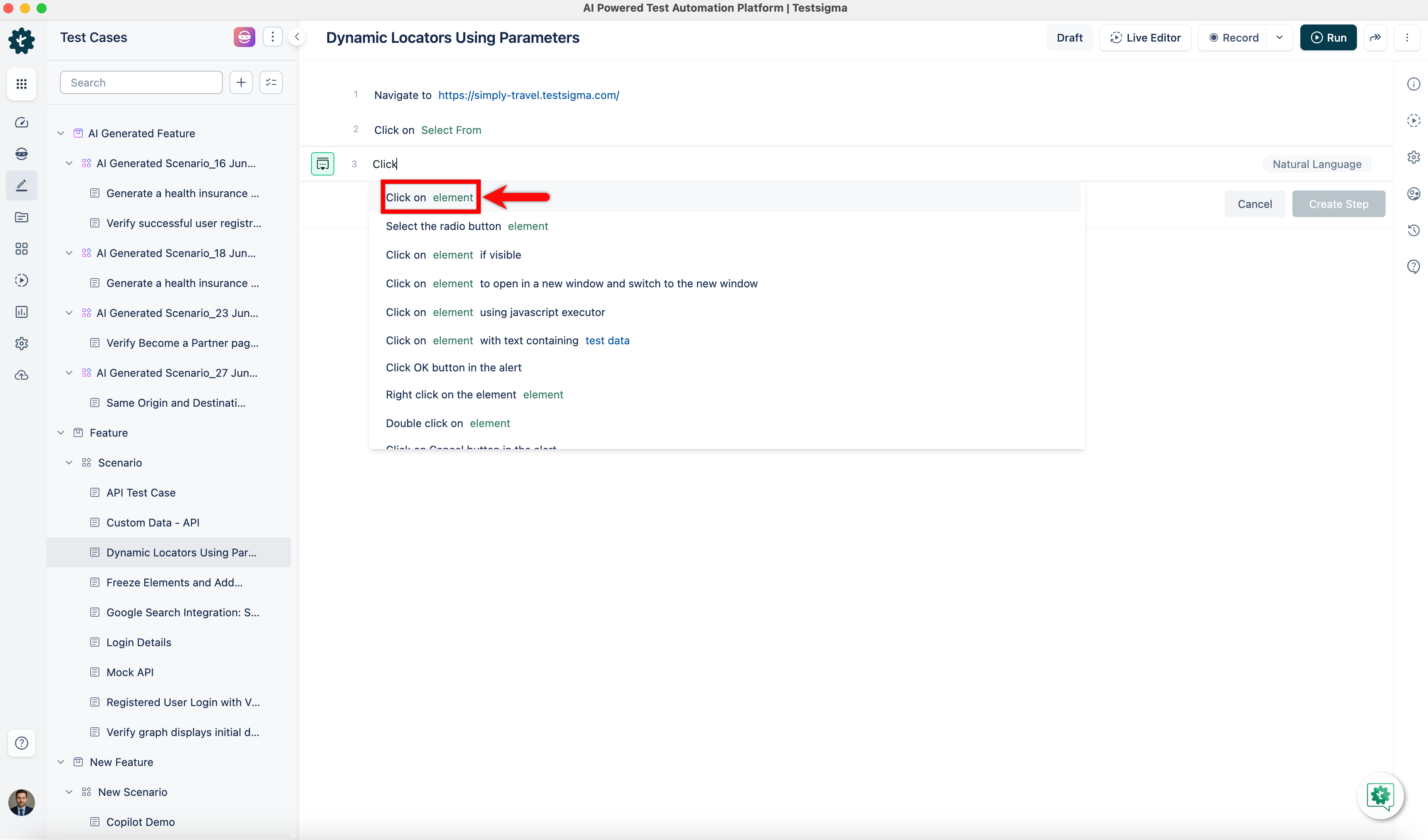

-1. Use NLP to create a new step in the Test Case and include a placeholder for the element.

+1. Use NLP to create a new step in the Test Case and include a placeholder for the element.

+

+

[[info | Example:]]

| Utilise NLP "Click on **Element**" to click an element with a given UI Identifier.

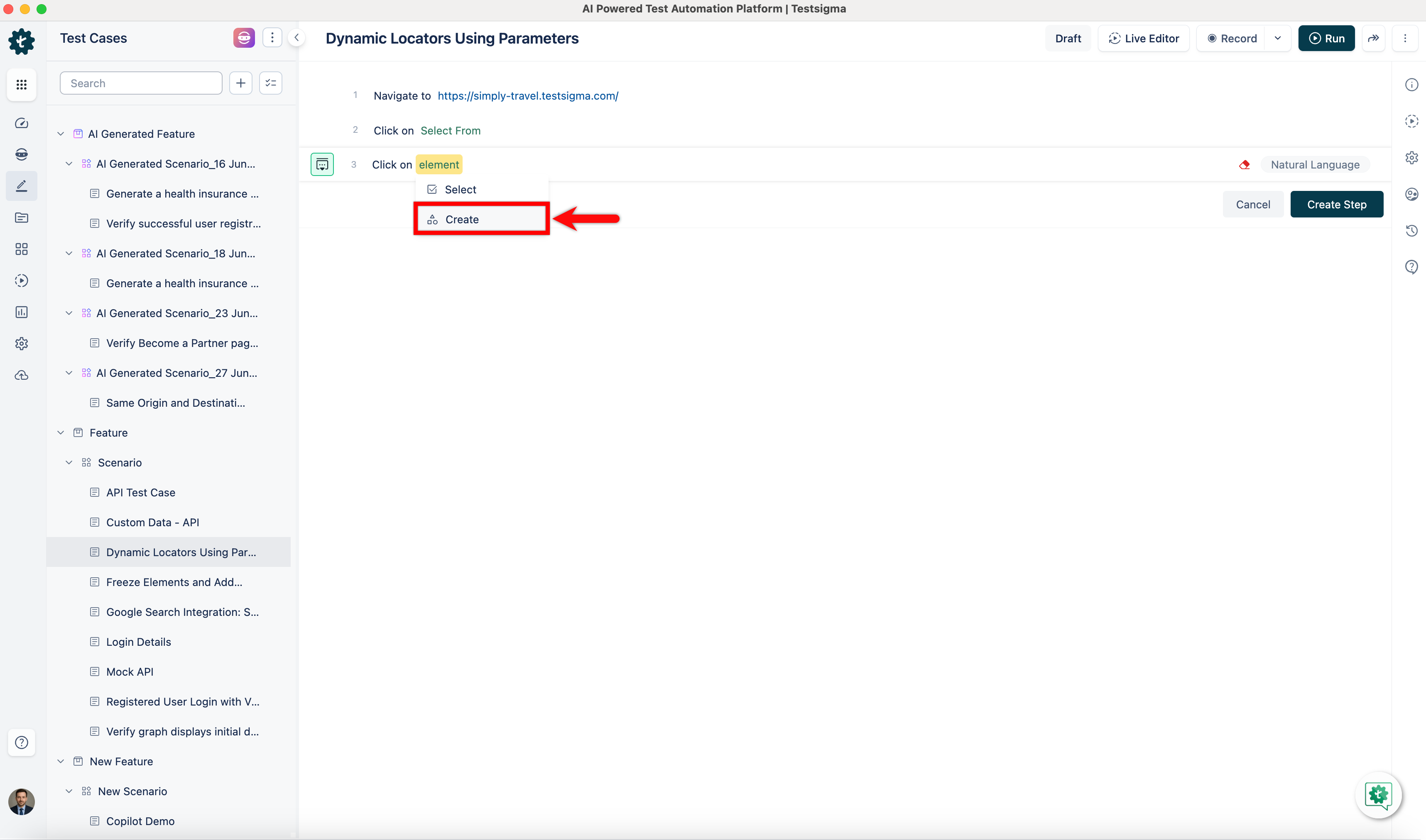

2. Click on the **Element**, then select **Create Element** from the dropdown list.

+

+

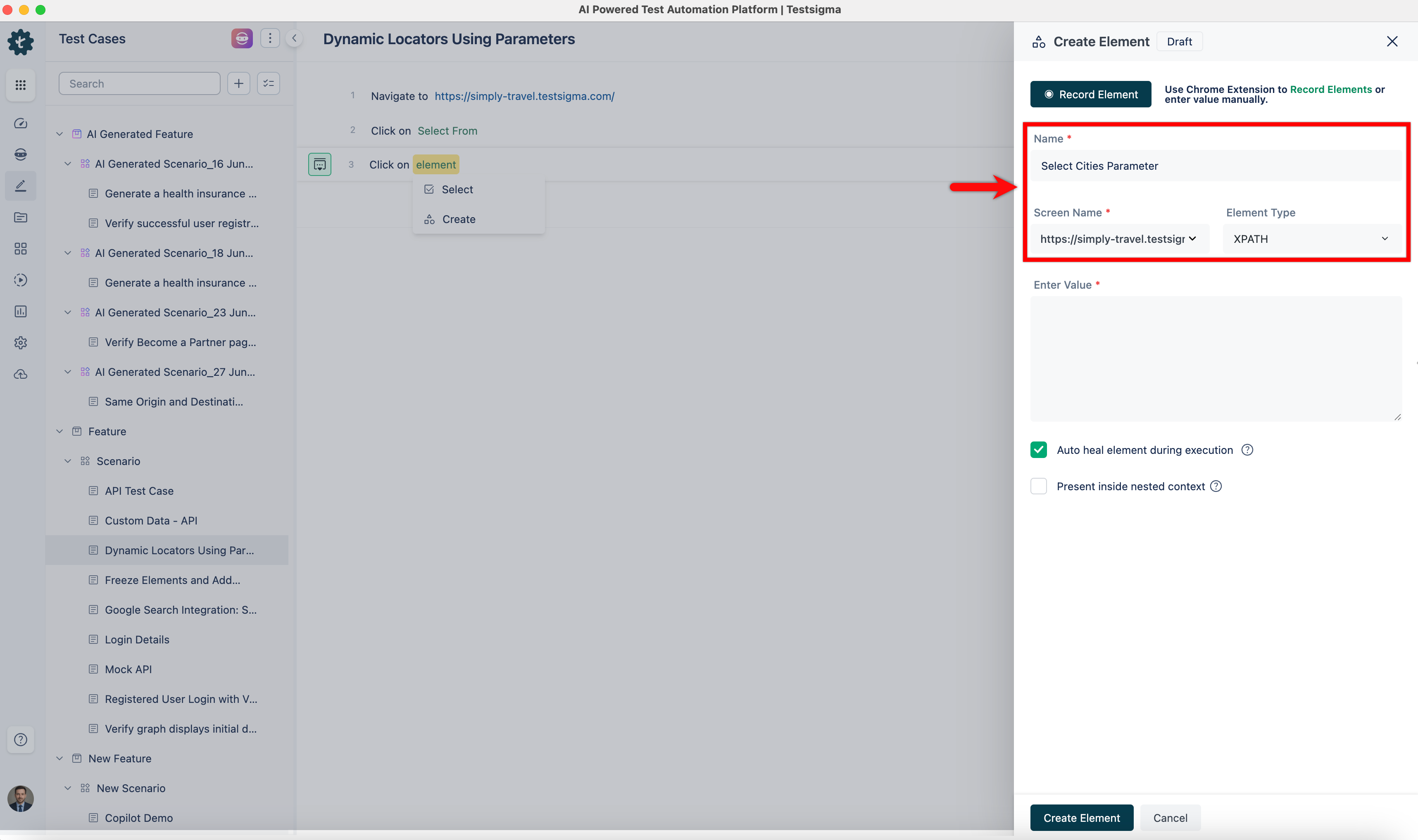

3. Manually enter the **Element Name**, **Screen Name**, and **Element Type** in the **Create Element** overlay screen.

+

+

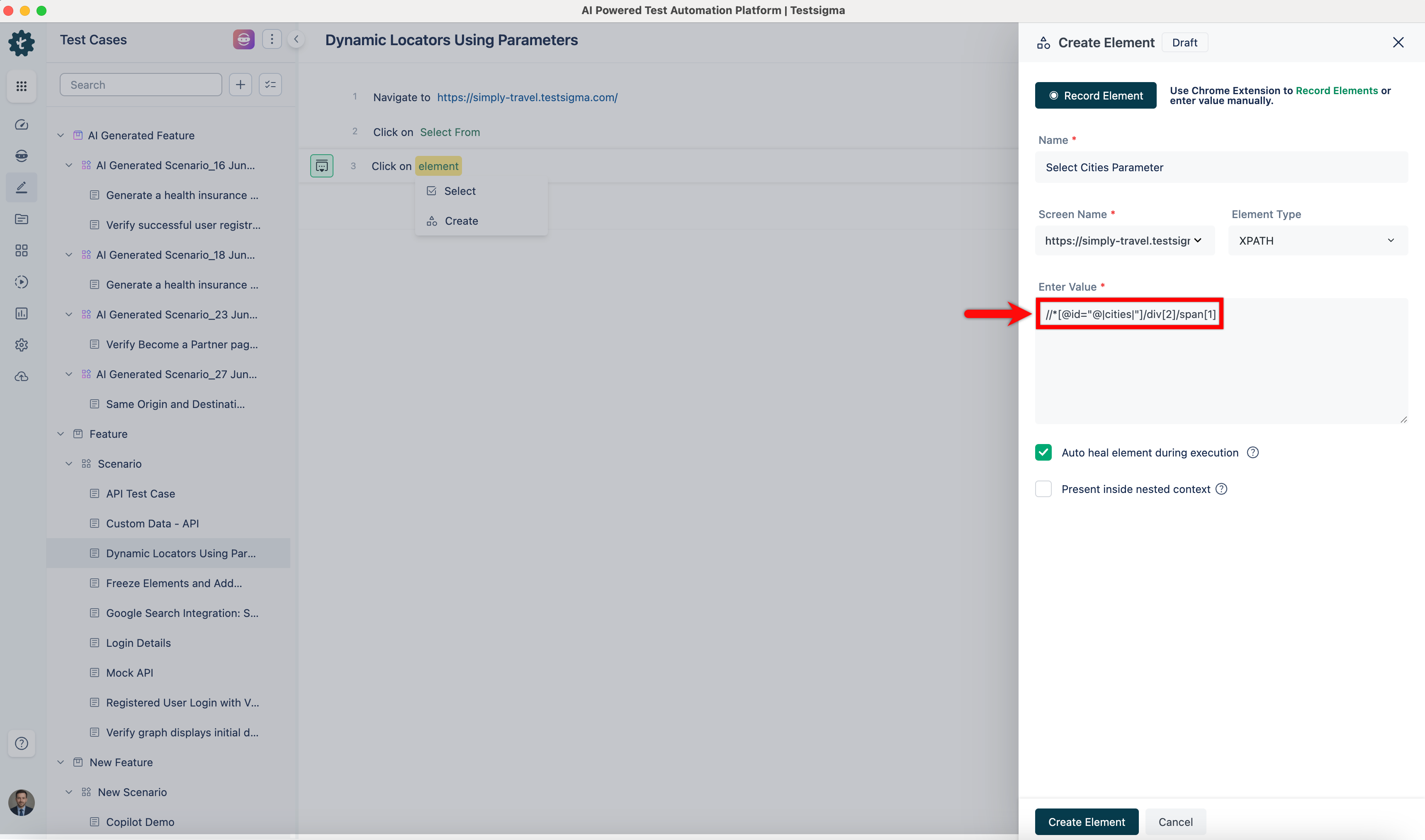

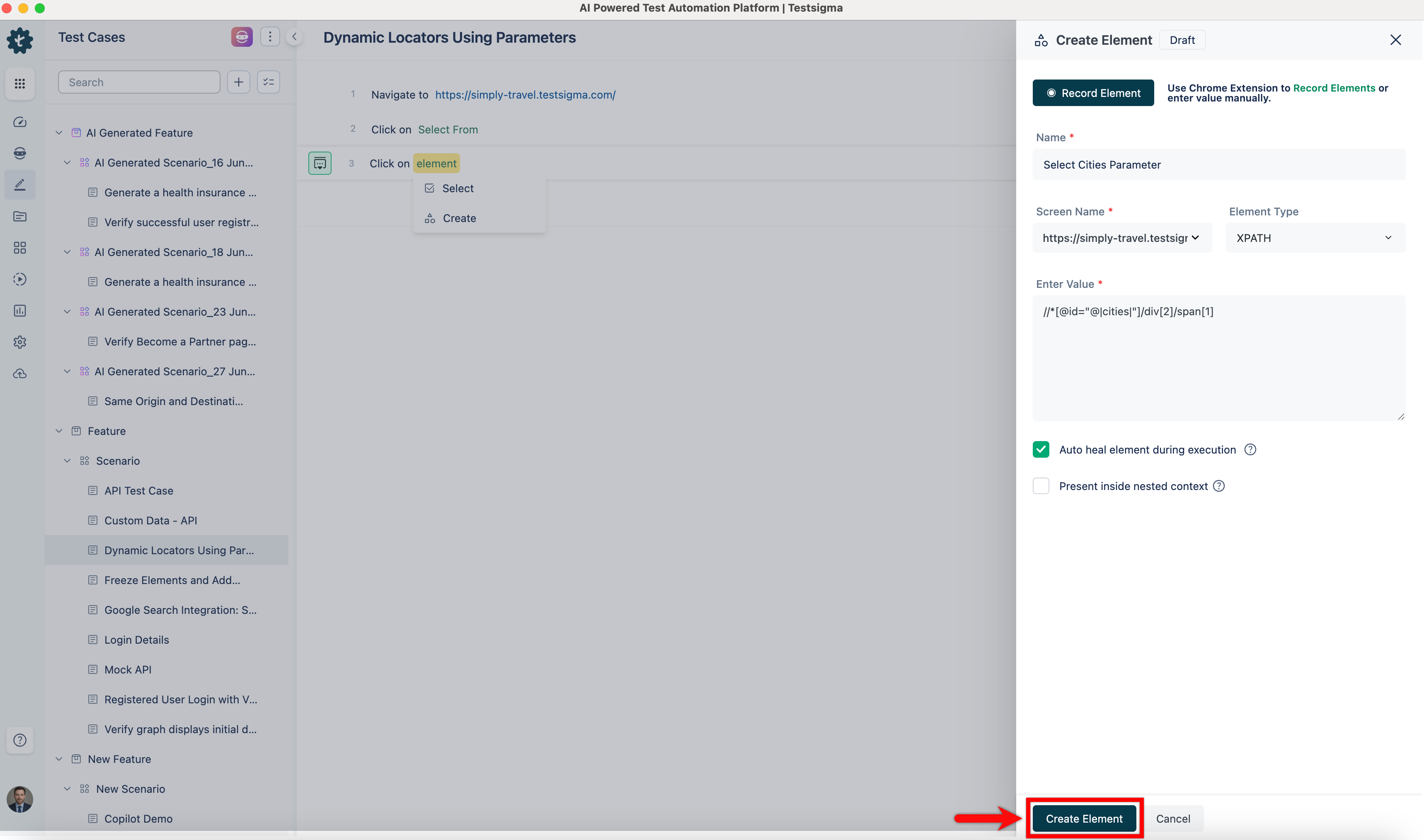

4. Provide the dynamic element value **//*[@id="@|cities|"]/div[2]/span[1]**, where **cities** is the parameter name containing dynamic data.

-5. Click **Create Element** to complete element creation and then **Create Step**.

+

+

+5. Click **Create Element** to complete element creation and then **Create Step**.
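
The parameterised locator above can be read as a template that is filled in once per row of the Test Data profile. The sketch below mimics that substitution outside Testsigma, assuming the `@|name|` placeholder syntax shown in the element value and the `city-1` to `city-4` IDs from the example. The function name and the row layout are hypothetical and do not describe Testsigma's internal implementation.

```python
import re

# Matches a parameter placeholder of the form @|name|.
PLACEHOLDER = re.compile(r"@\|([^|]+)\|")


def resolve_locator(template: str, row: dict) -> str:
    """Replace every @|name| placeholder with the value stored in the data row."""
    def substitute(match: re.Match) -> str:
        parameter_name = match.group(1)
        return row[parameter_name]  # raises KeyError if the row lacks the parameter
    return PLACEHOLDER.sub(substitute, template)


TEMPLATE = '//*[@id="@|cities|"]/div[2]/span[1]'

# Hypothetical data rows: one per Test Data Set, holding the variable ID portion.
rows = [{"cities": f"city-{number}"} for number in range(1, 5)]

locators = [resolve_locator(TEMPLATE, row) for row in rows]
for locator in locators:
    print(locator)
```

Each data row yields one concrete XPath, which is why a data-driven run with four rows performs four iterations.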

+

---

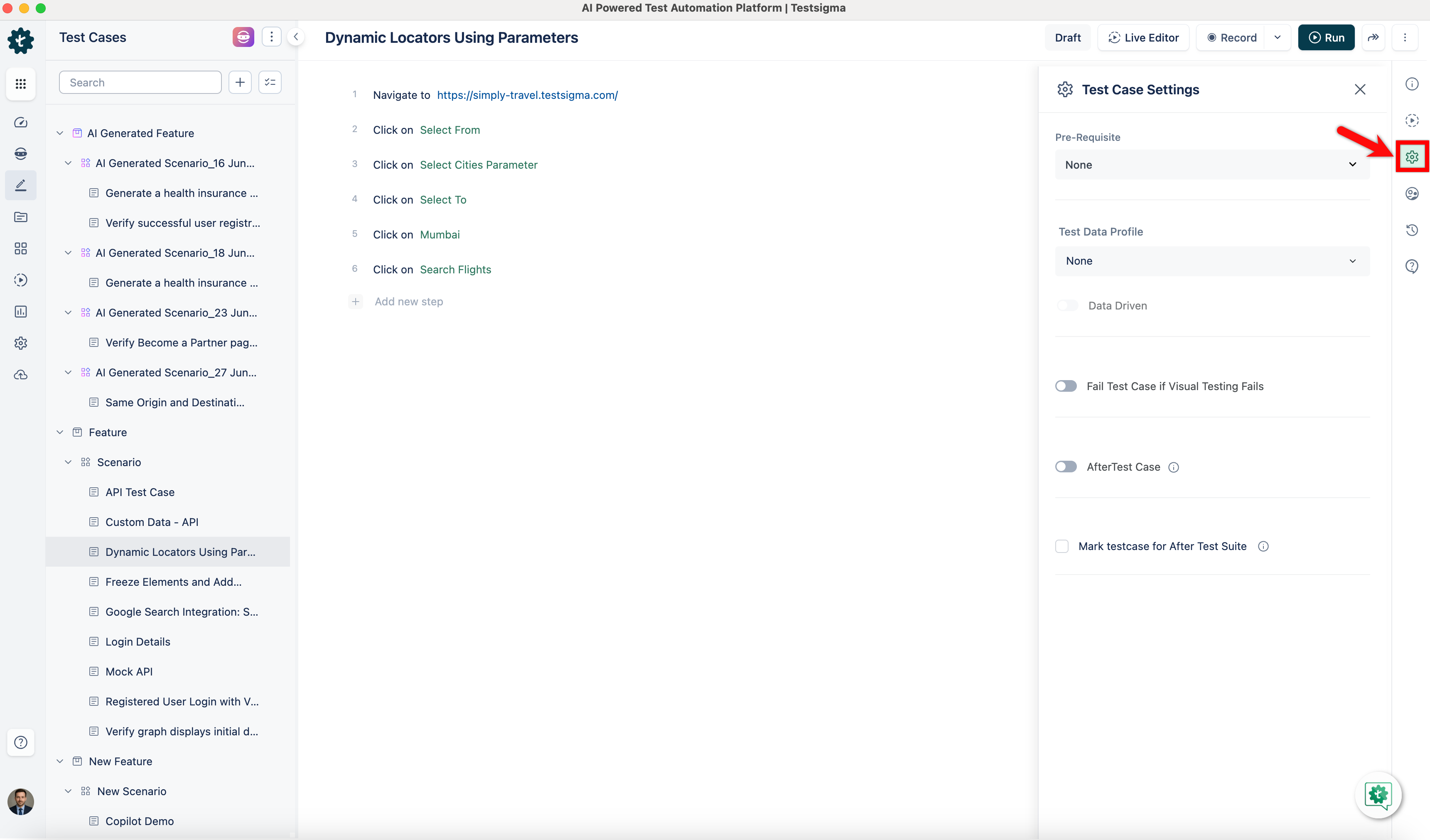

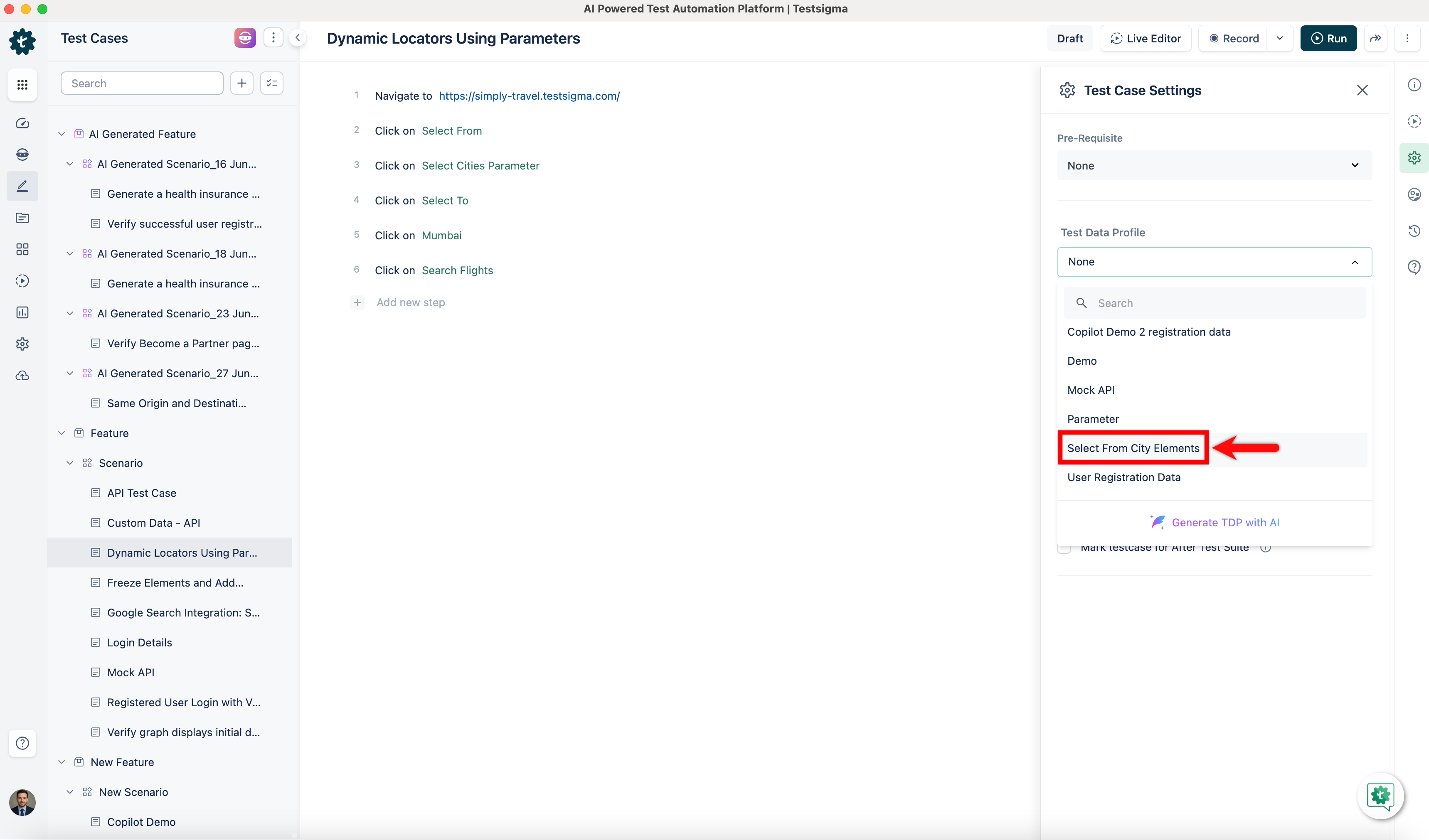

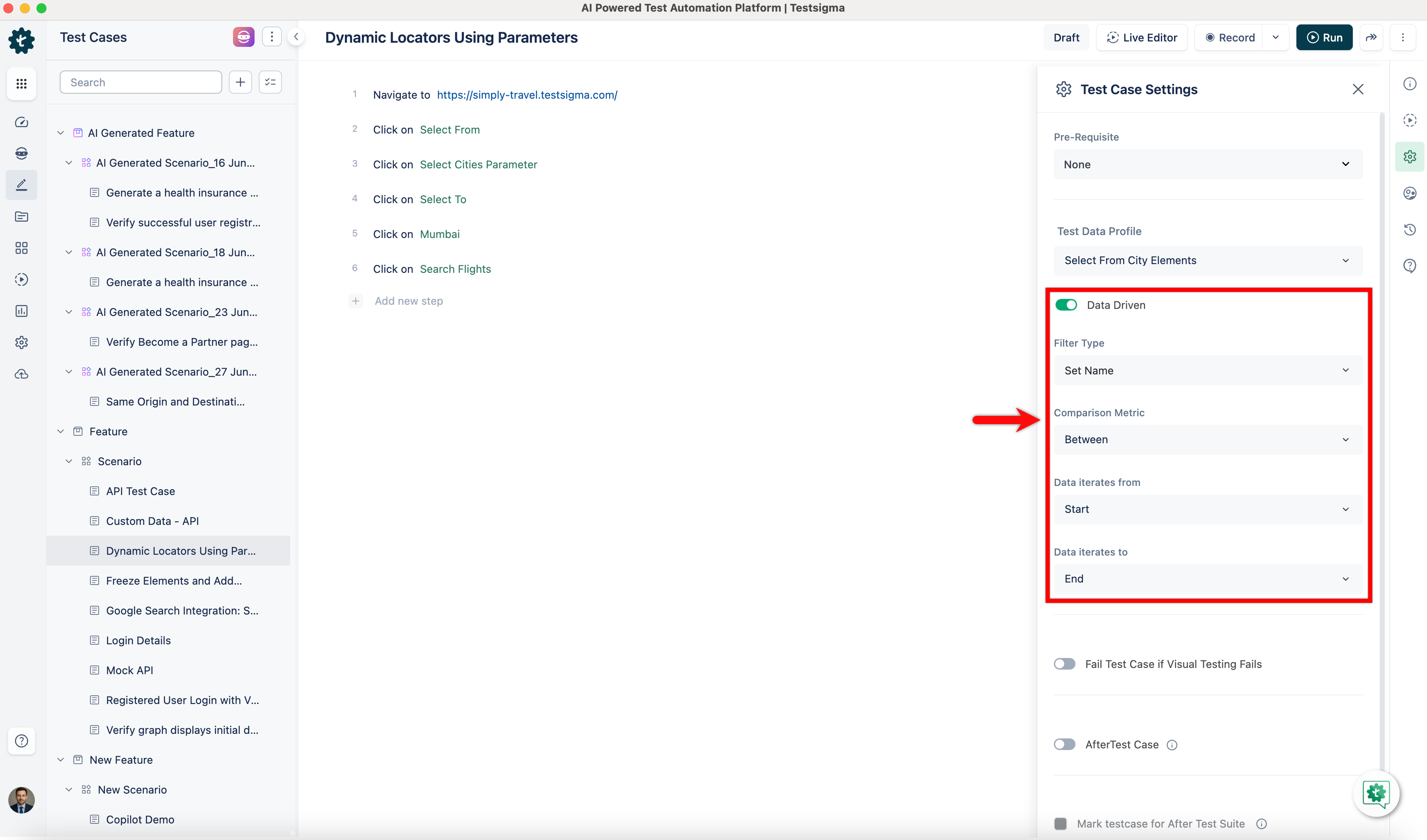

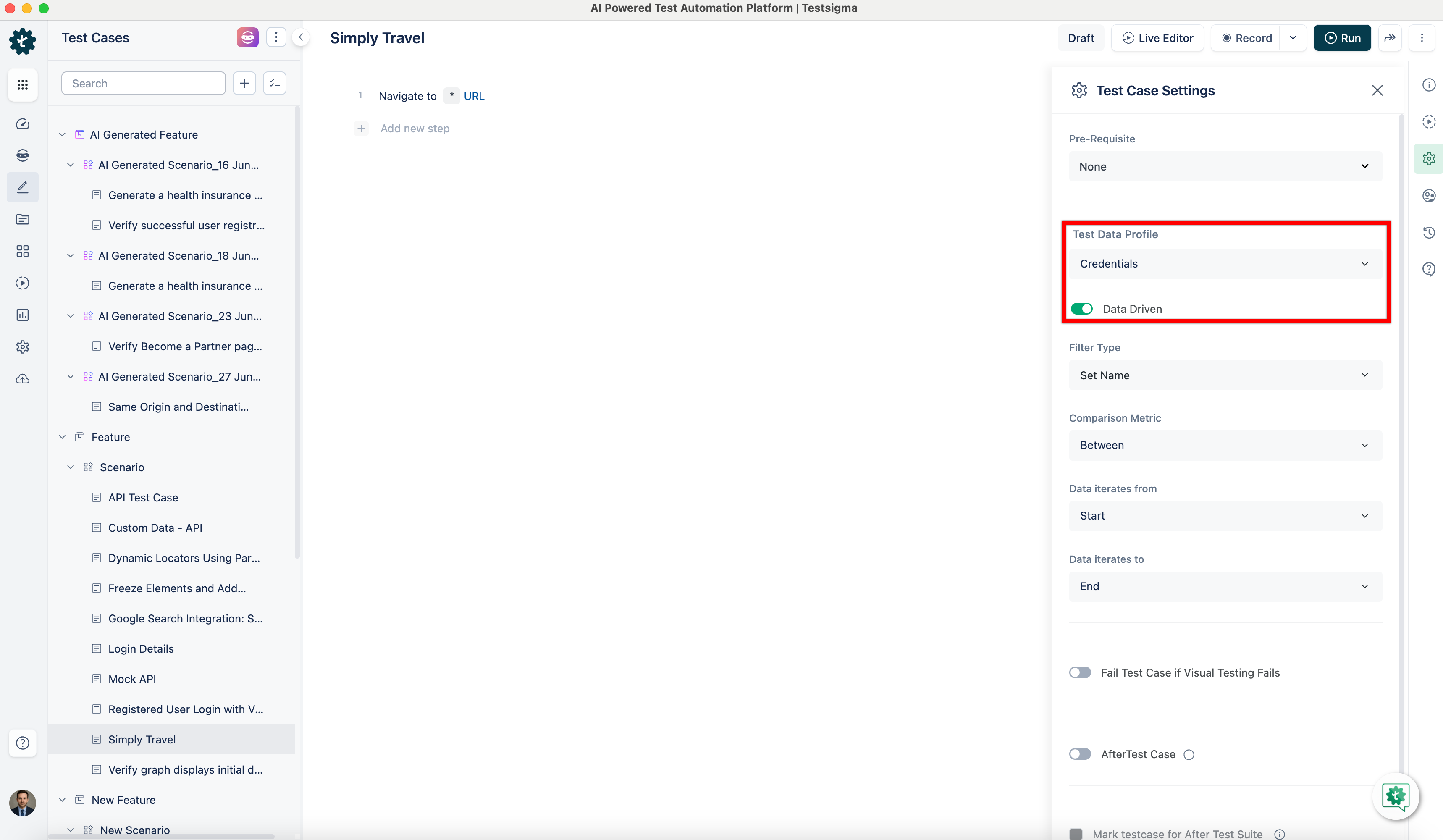

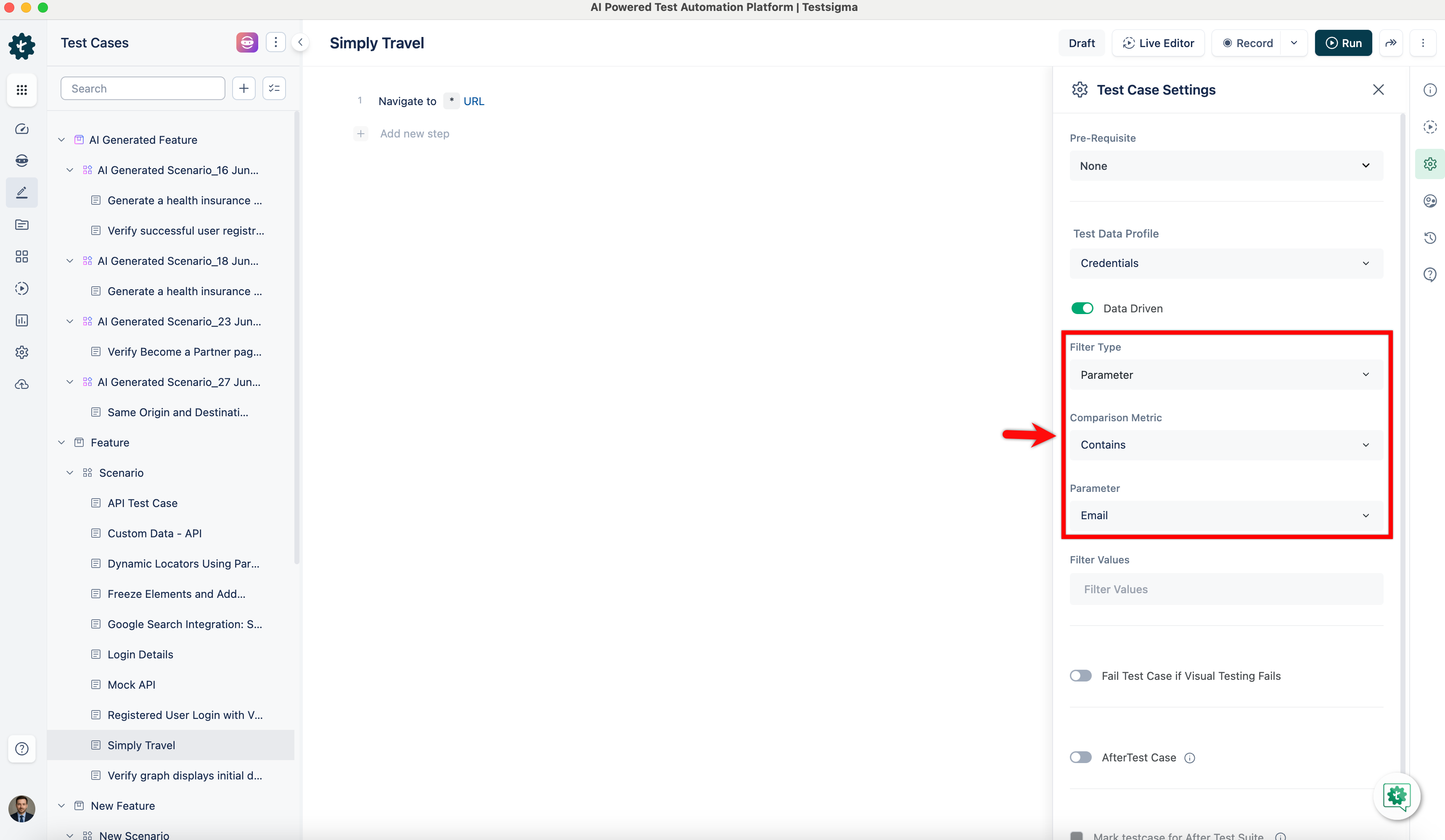

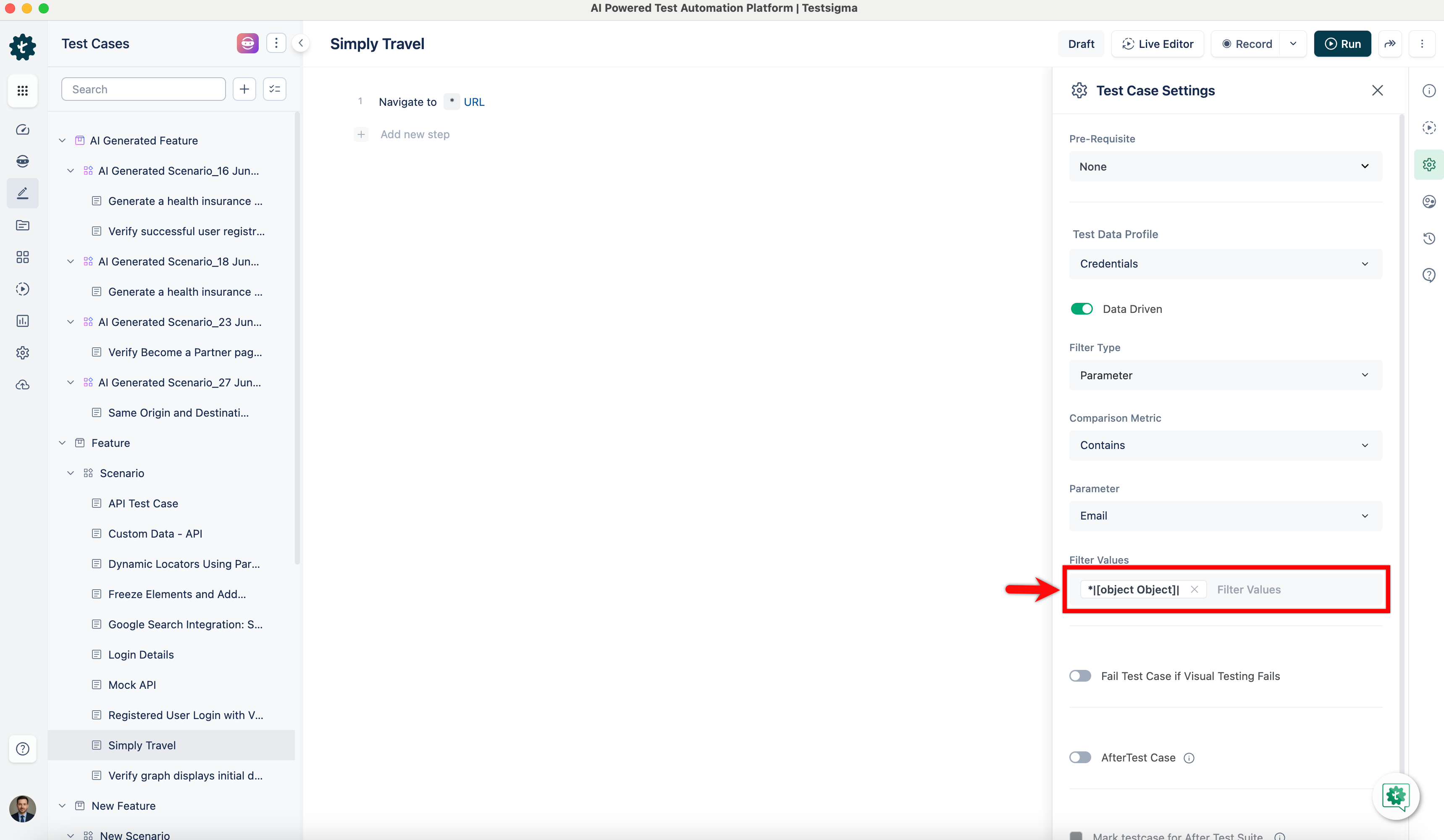

## **Configuring Test Data Profile in Test Case**

1. In Test Case details page, click **Test Case Settings** in the right-side navbar.

+

+

2. Choose the associated **Test Data Profile**.

-3. Enable the **Data-Driven** toggle and select **Filter Type**, **Comparison Metric**, and **Data Iteration**.

+

+

+3. Enable the **Data-Driven** toggle and select **Filter Type**, **Comparison Metric**, and **Data Iteration**.

+

---

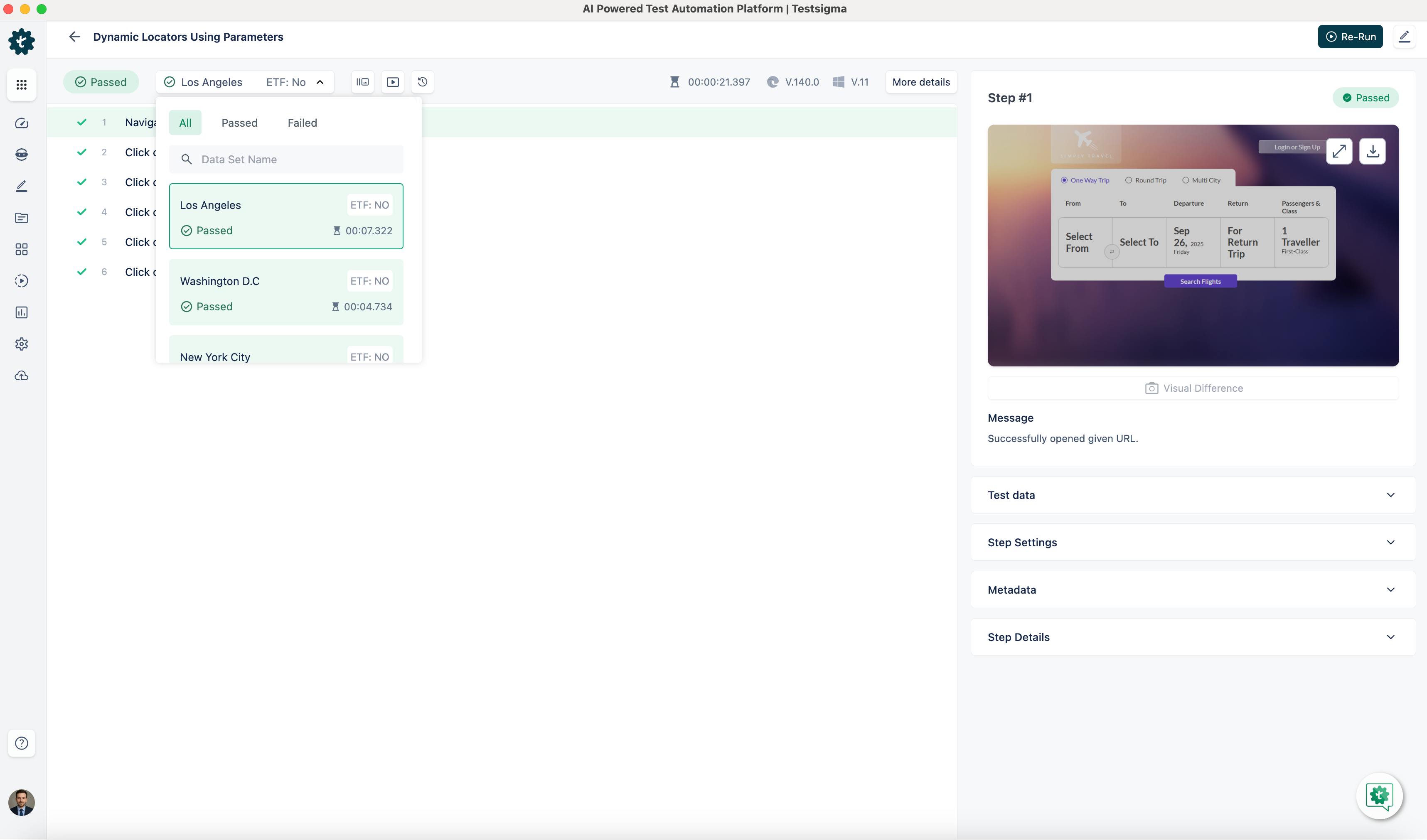

## **Run Results for Dynamic Elements using Parameter**

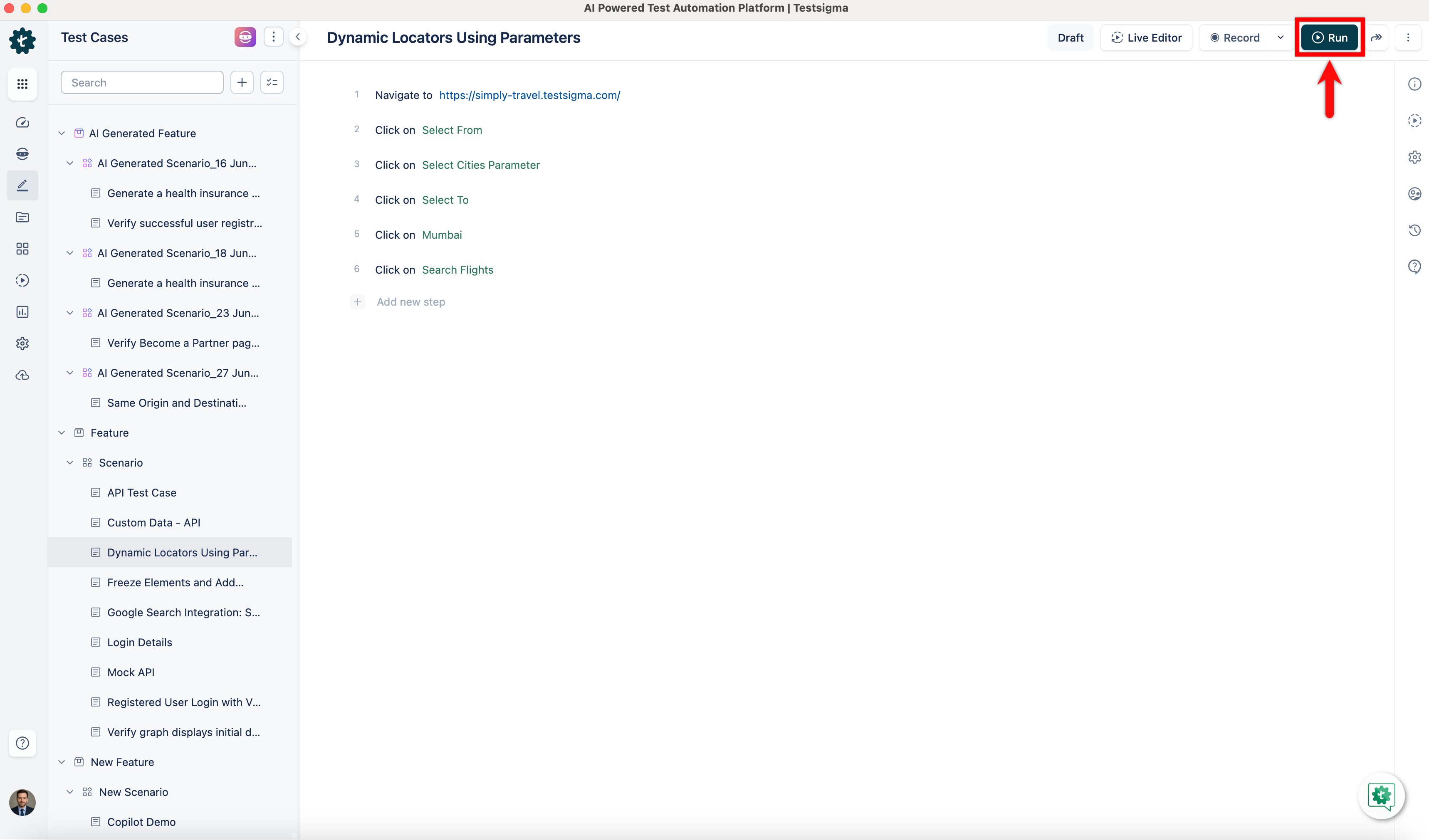

1. Once the test step is created with the dynamic element, execute the Test Case.

-2. Wait until the Test Case execution is completed.

-3. The Test Case will iterate as many times as there are **Test Data Sets (rows)** in the Test Data Profile, resulting in four iterations based on the provided example.

+

----

+2. Wait until the Test Case execution is completed.

+

+3. The Test Case will iterate as many times as there are **Test Data Sets (rows)** in the Test Data Profile, resulting in four iterations based on the provided example.

+

+---

\ No newline at end of file

diff --git a/src/pages/docs/runs/adhoc-runs.md b/src/pages/docs/runs/adhoc-runs.md

index b96cf35c..7d1dd717 100644

--- a/src/pages/docs/runs/adhoc-runs.md

+++ b/src/pages/docs/runs/adhoc-runs.md

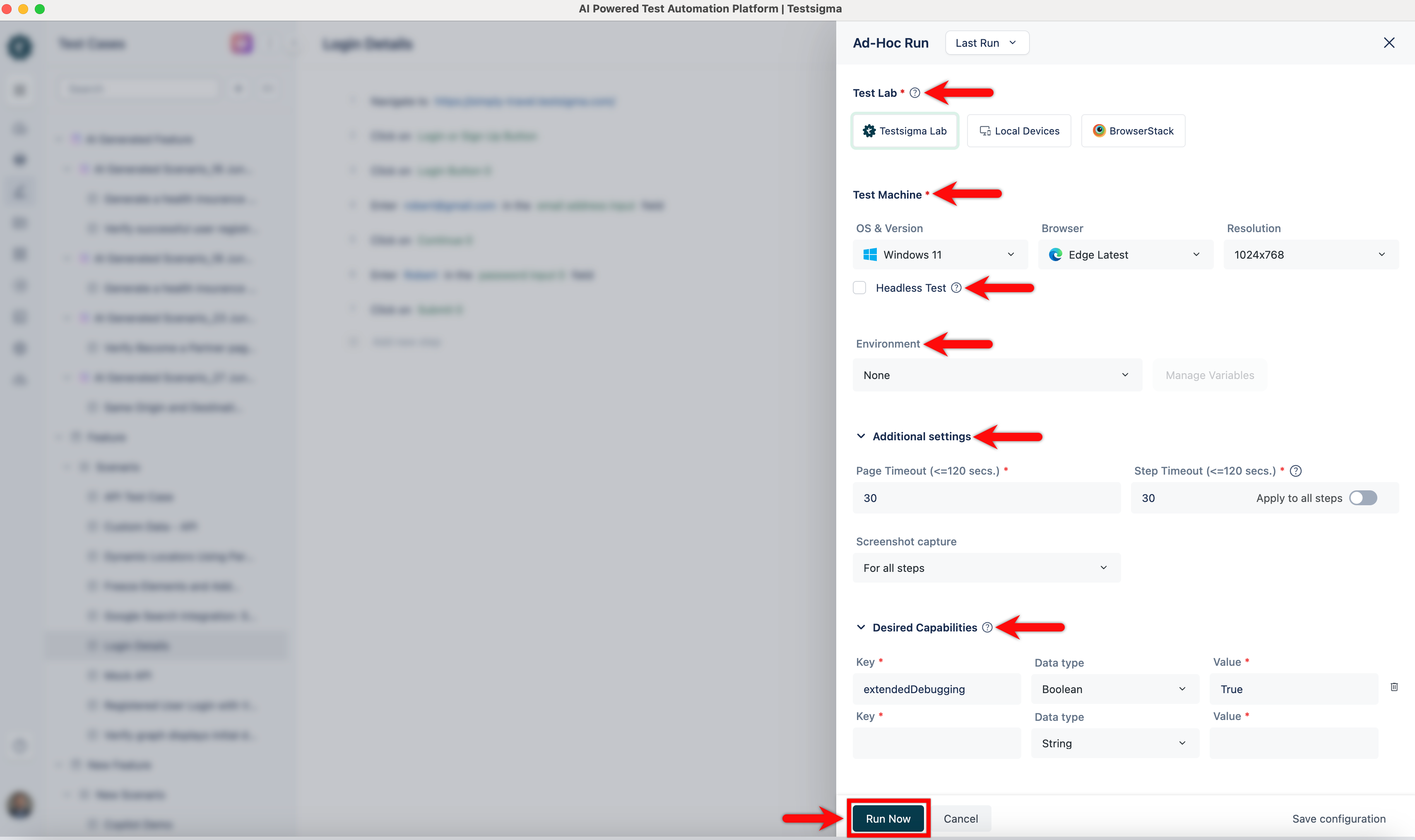

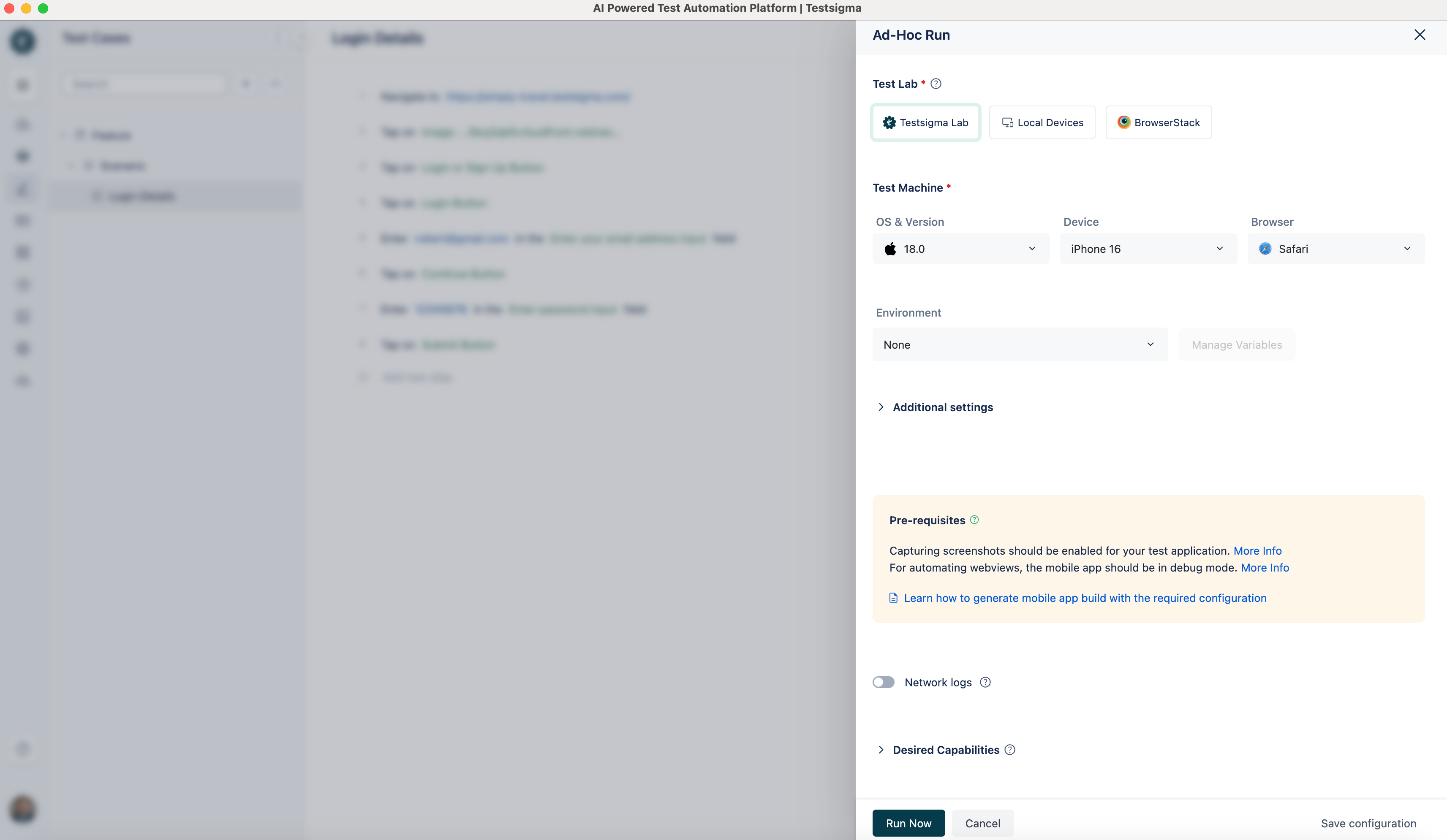

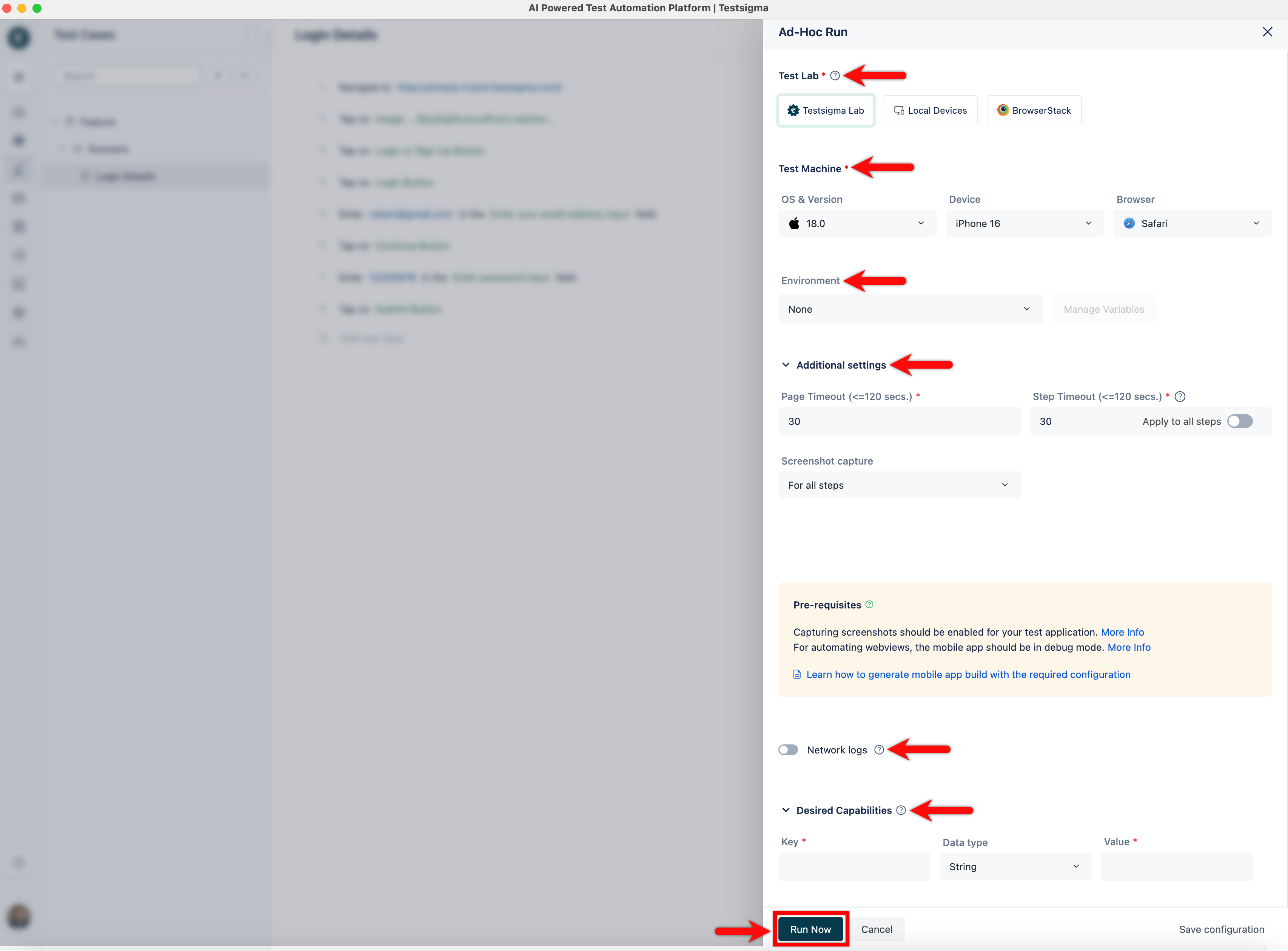

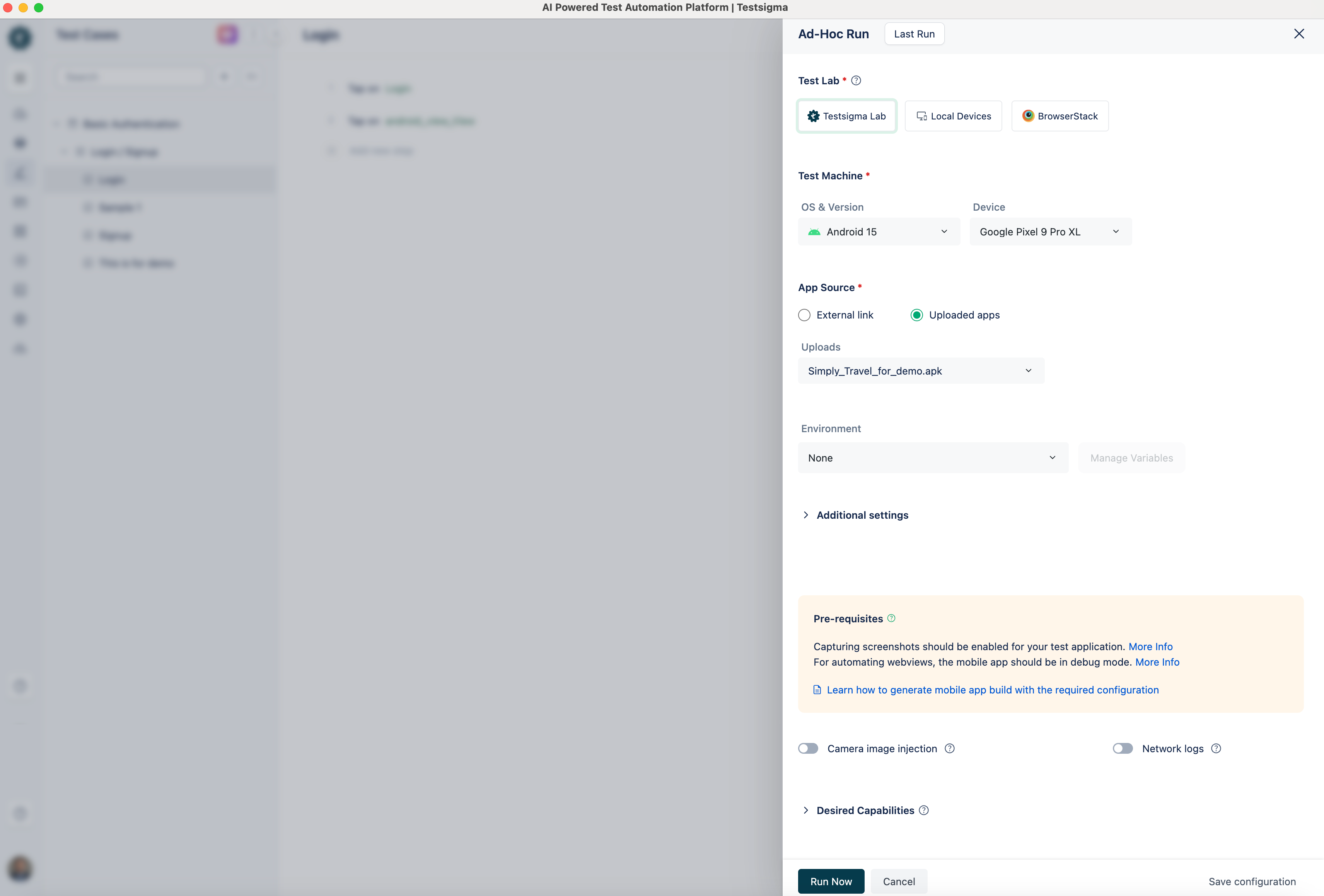

@@ -70,7 +70,7 @@ This documentation will guide you through setting up Test Labs and Test Machines

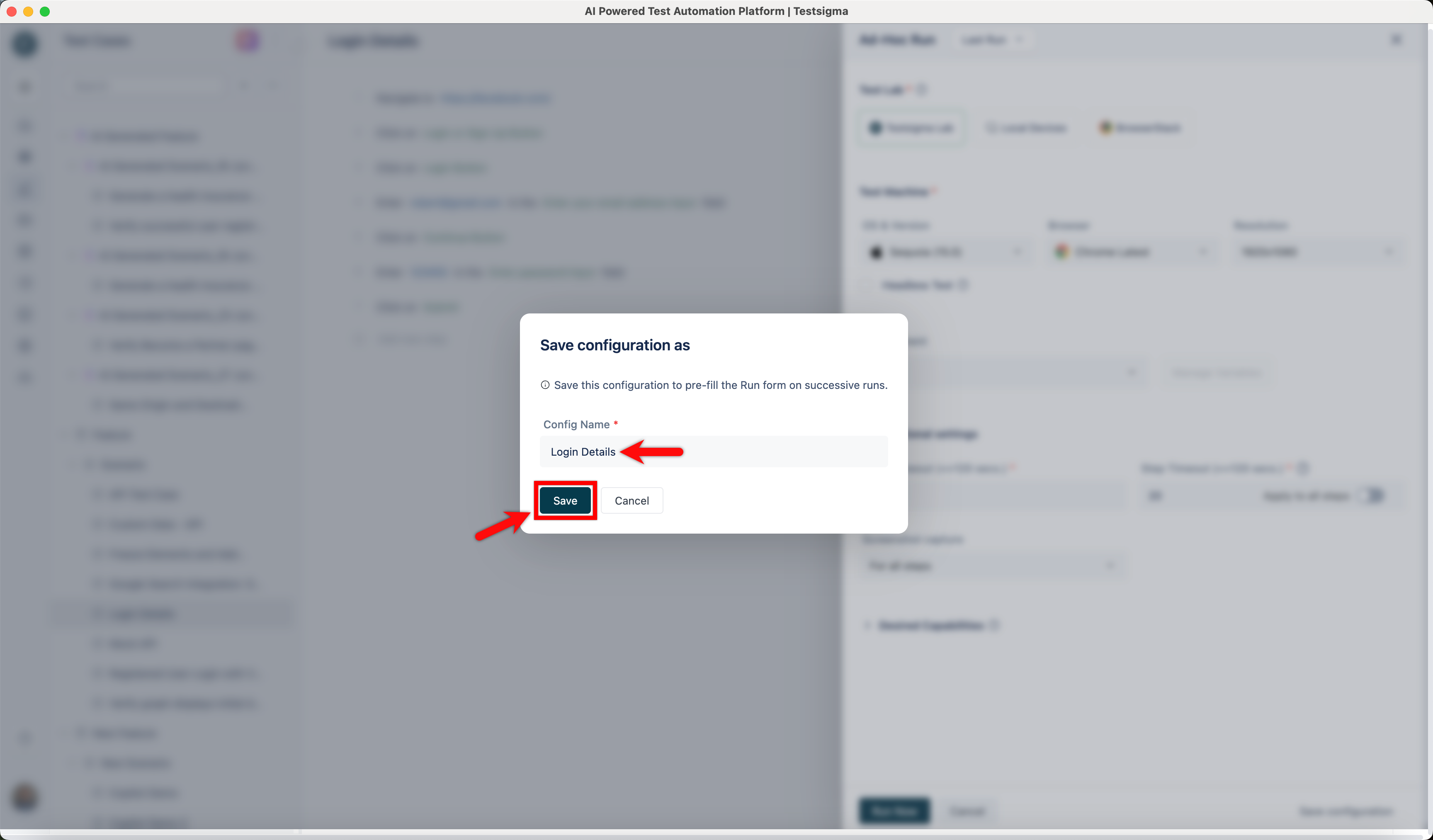

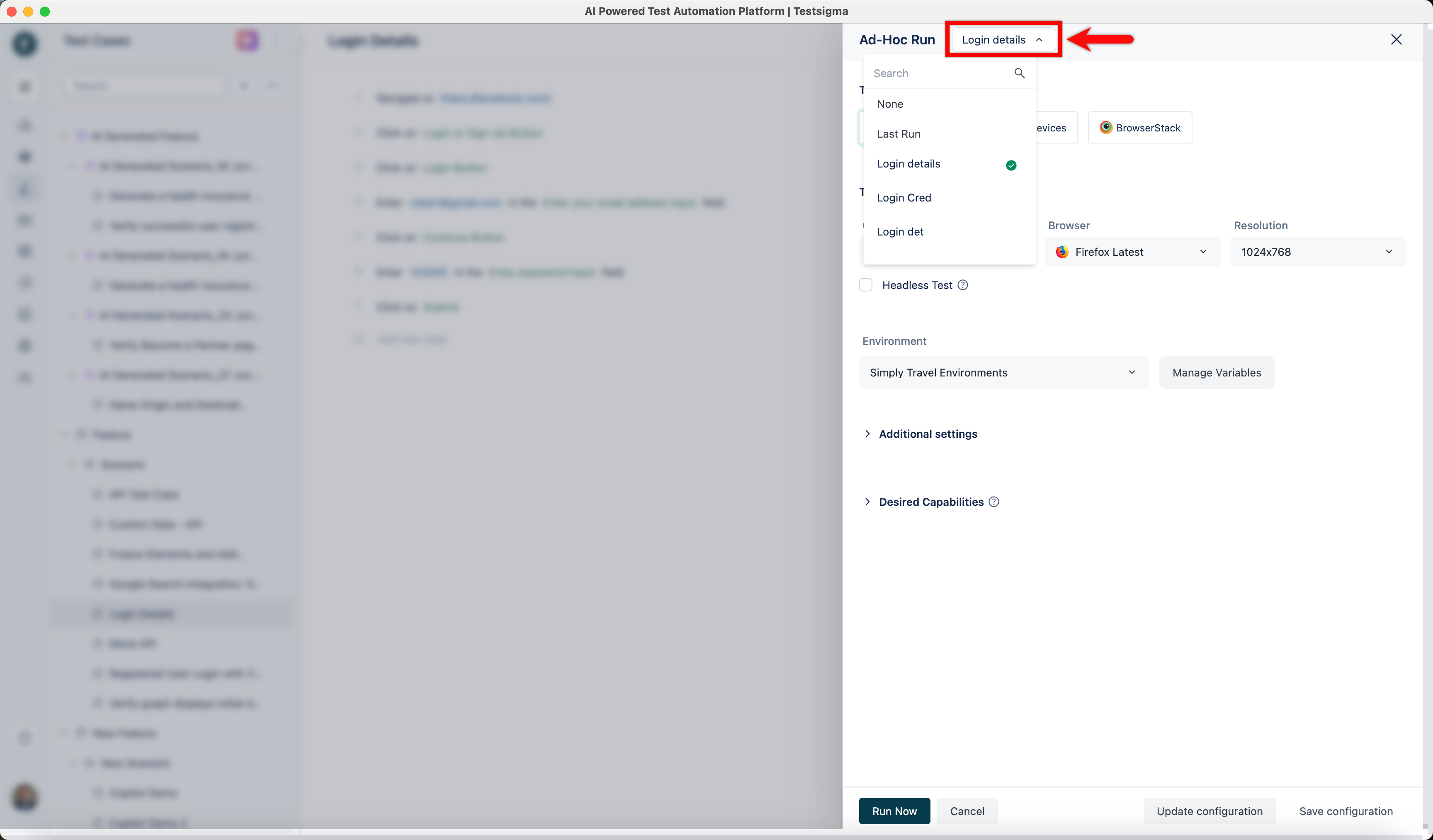

5. Click on **Saved Configs** at the top of the screen to reuse the saved configuration, and a drop-down list will appear. Select the Saved Config from this list.

6. The Ad-hoc Run overlay will be automatically pre-filled with the saved configuration for your next runs when selected.

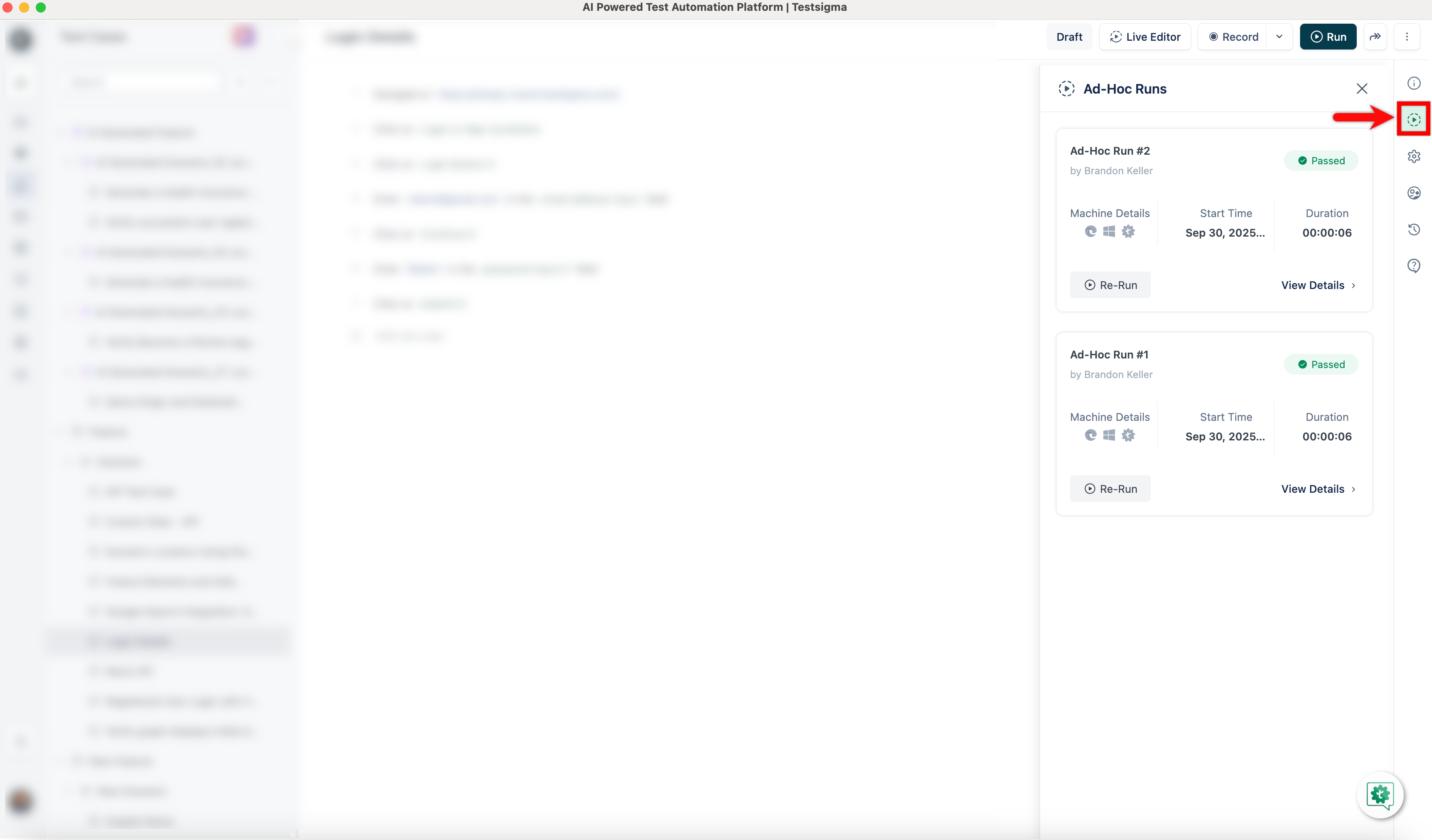

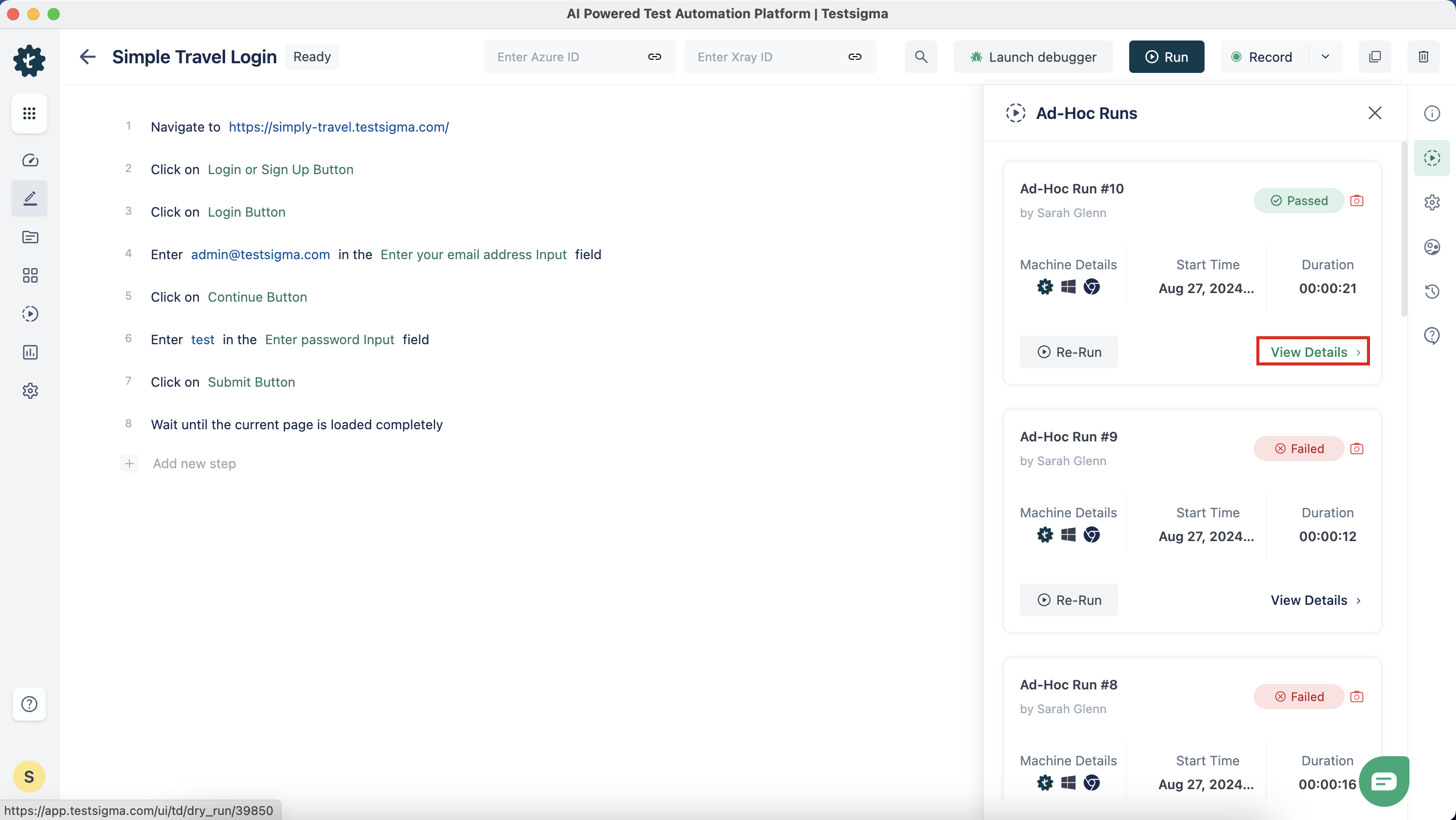

-7. In the **Test Case Details** page, click **Ad-Hoc Runs** in the right Navigation Bar to open the right sidebar. This will enable you to view the history and details of Ad-Hoc Run results for the Test Case.

+7. In the **Test Case Details** page, click **Ad-Hoc Runs** in the right Navigation Bar to open the right sidebar. This will enable you to view the history and details of Ad-Hoc Run results for the Test Case.

[[info | **NOTE**:]]

@@ -93,7 +93,7 @@ In the Ad-hoc run layover, provide the following configuration settings for a we

3. **Page Timeout**: Duration for which the test should wait for the page to load.

4. **Element Timeout**: Duration for which the test should wait for the element to load.

5. **Desired Capabilities**: Provide **Key**, **Data Type** and **Value** under Desired Capabilities.

-6. Click on **Run Now**.

+6. Click on **Run Now**.
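
Each Desired Capabilities entry is a **Key**, **Data Type**, and **Value** triple. A minimal sketch of how such triples could be turned into a typed capabilities dictionary is shown below. The data type names (`String`, `Integer`, `Boolean`) and the capability keys are assumptions chosen for illustration, not a list of what Testsigma supports.

```python
def convert_value(data_type: str, raw_value: str):
    """Convert the text entered in the Value field to the chosen data type."""
    if data_type == "String":
        return raw_value
    if data_type == "Integer":
        return int(raw_value)
    if data_type == "Boolean":
        normalized = raw_value.strip().lower()
        if normalized not in ("true", "false"):
            raise ValueError(f"not a boolean: {raw_value!r}")
        return normalized == "true"
    raise ValueError(f"unsupported data type: {data_type!r}")


def build_capabilities(entries):
    """Build a dictionary from (key, data type, value) triples."""
    return {key: convert_value(data_type, value) for key, data_type, value in entries}


# Hypothetical capability keys, used only to show the conversion.
entries = [
    ("exampleName", "String", "smoke-run"),
    ("exampleTimeout", "Integer", "30"),
    ("exampleFlag", "Boolean", "true"),
]
capabilities = build_capabilities(entries)
print(capabilities)
```

Choosing the right data type matters because a value such as `30` entered as a string is not the same as the integer 30 once it reaches the driver.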

---

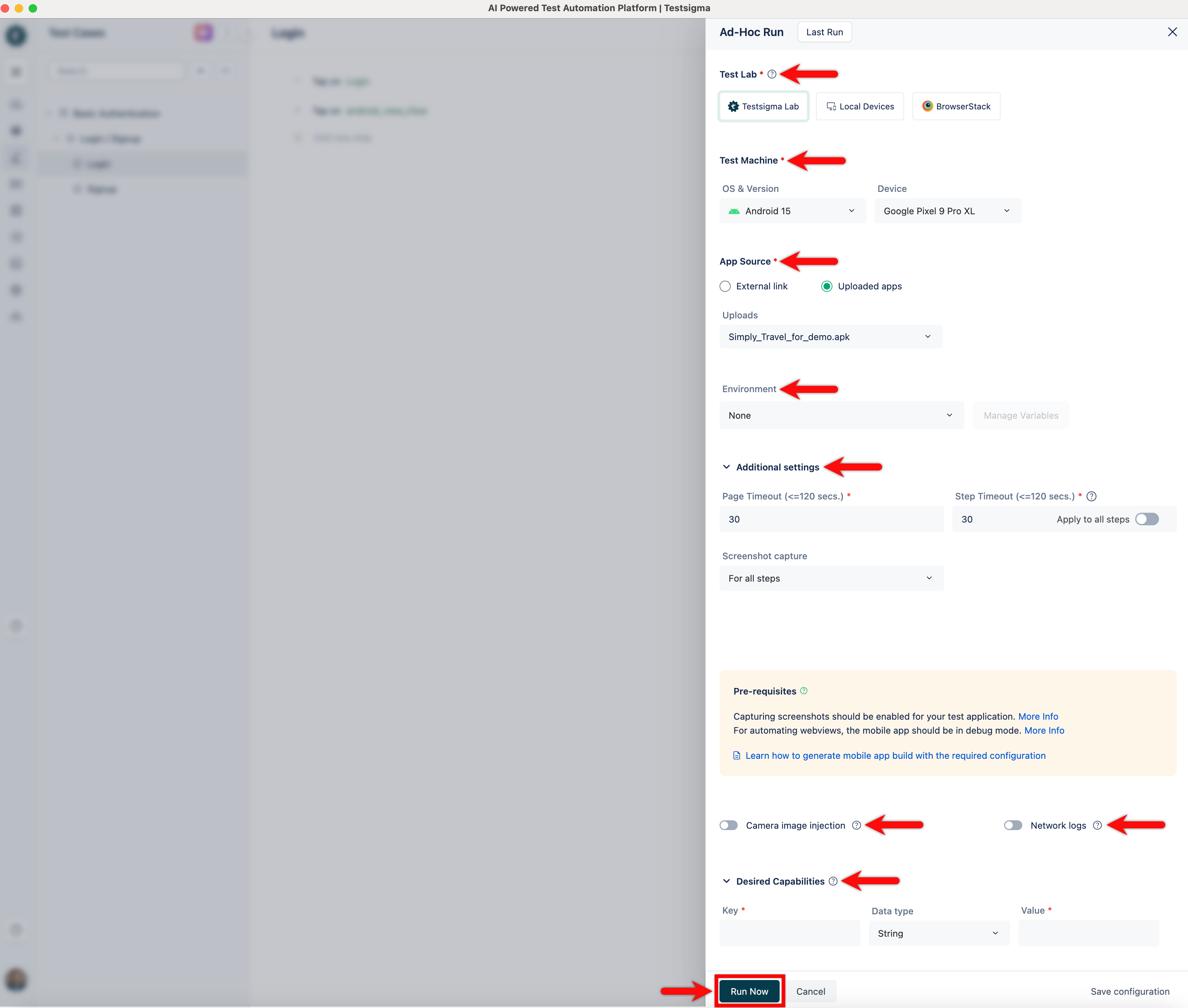

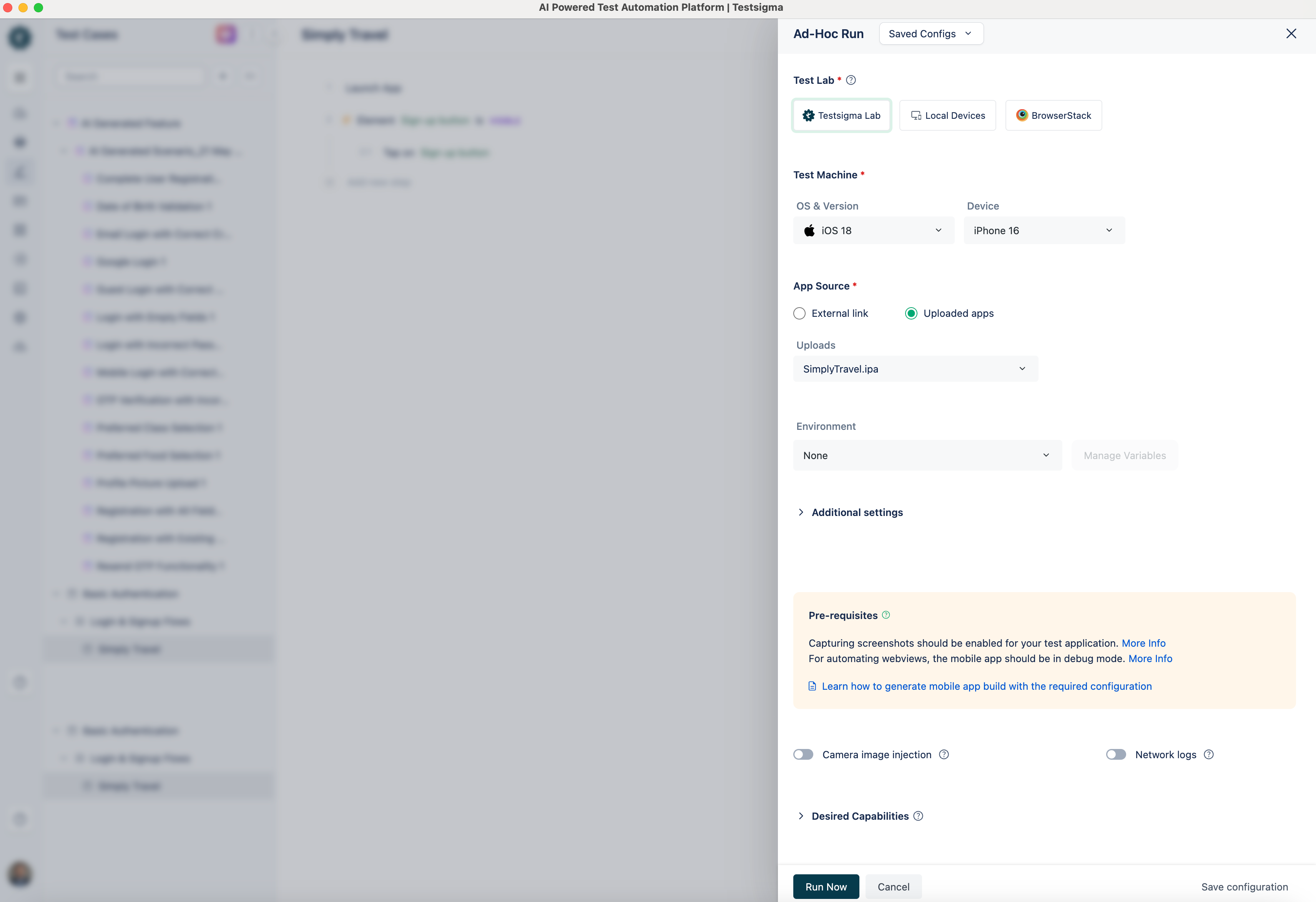

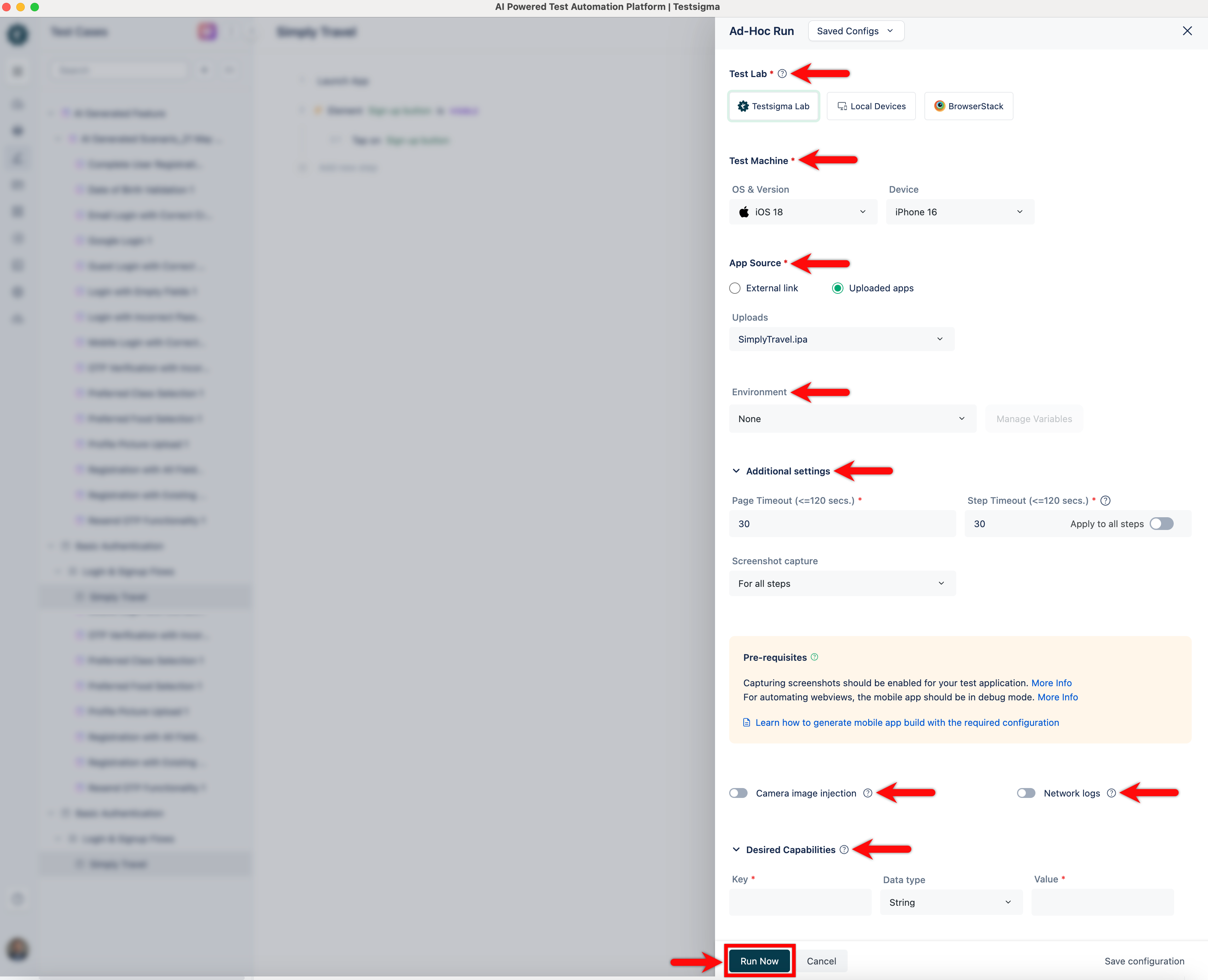

@@ -113,7 +113,7 @@ In the Ad-hoc run layover, provide the following configuration settings for a mo

4. **Camera Image Injection**: Click the toggle button for **Camera Image Injection** to enable camera image injection for a test case.

5. **Network Logs**: Click the toggle button for **Network Logs** to enable network logs for a test case.

6. **Desired Capabilities**: Provide **Key**, **Data Type** and **Value** under Desired Capabilities.

-7. Click on **Run Now**.

+7. Click on **Run Now**.

---

@@ -135,7 +135,7 @@ In the Ad-hoc run layover, provide the following configuration settings for an A

5. **Camera Image Injection**: Click the toggle button for **Camera Image Injection** to enable camera image injection for a test case.

6. **Network Logs**: Click the toggle button for **Network Logs** to enable network logs for a test case.

7. **Desired Capabilities**: Provide **Key**, **Data Type** and **Value** under Desired Capabilities.

-8. Click on **Run Now**.

+8. Click on **Run Now**.

---

@@ -158,7 +158,7 @@ In the Ad-hoc run layover, provide the following configuration settings for an i

5. **Camera Image Injection**: Click the toggle button for **Camera Image Injection** to enable camera image injection for a test case.

6. **Network Logs**: Click the toggle button for **Network Logs** to enable network logs for a test case.

7. **Desired Capabilities**: Provide **Key**, **Data Type** and **Value** under Desired Capabilities.

-8. Click on **Run Now**.

+8. Click on **Run Now**.

---

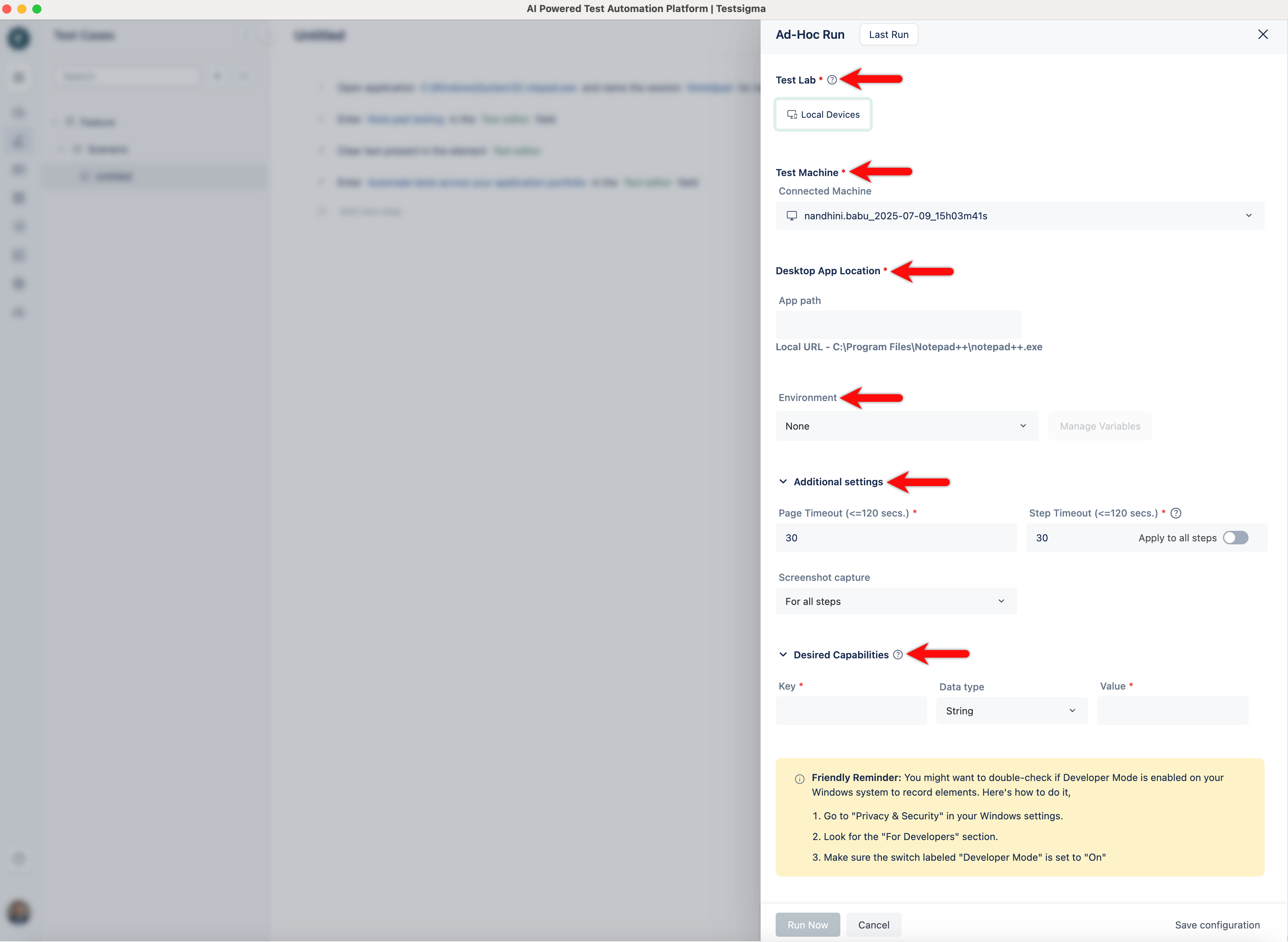

@@ -170,7 +170,7 @@ In the Ad-hoc run layover, provide the following configuration settings for a De

3. **Desktop App Location**: Provide the Local URL for the application path.

4. **Environment**: Select the test environment.

5. **Desired Capabilities**: Provide **Key**, **Data Type** and **Value** under Desired Capabilities.

-6. Click on **Run Now**.

+6. Click on **Run Now**.

---

@@ -180,7 +180,7 @@ In the Ad-hoc run layover, provide the following configuration settings for a Re

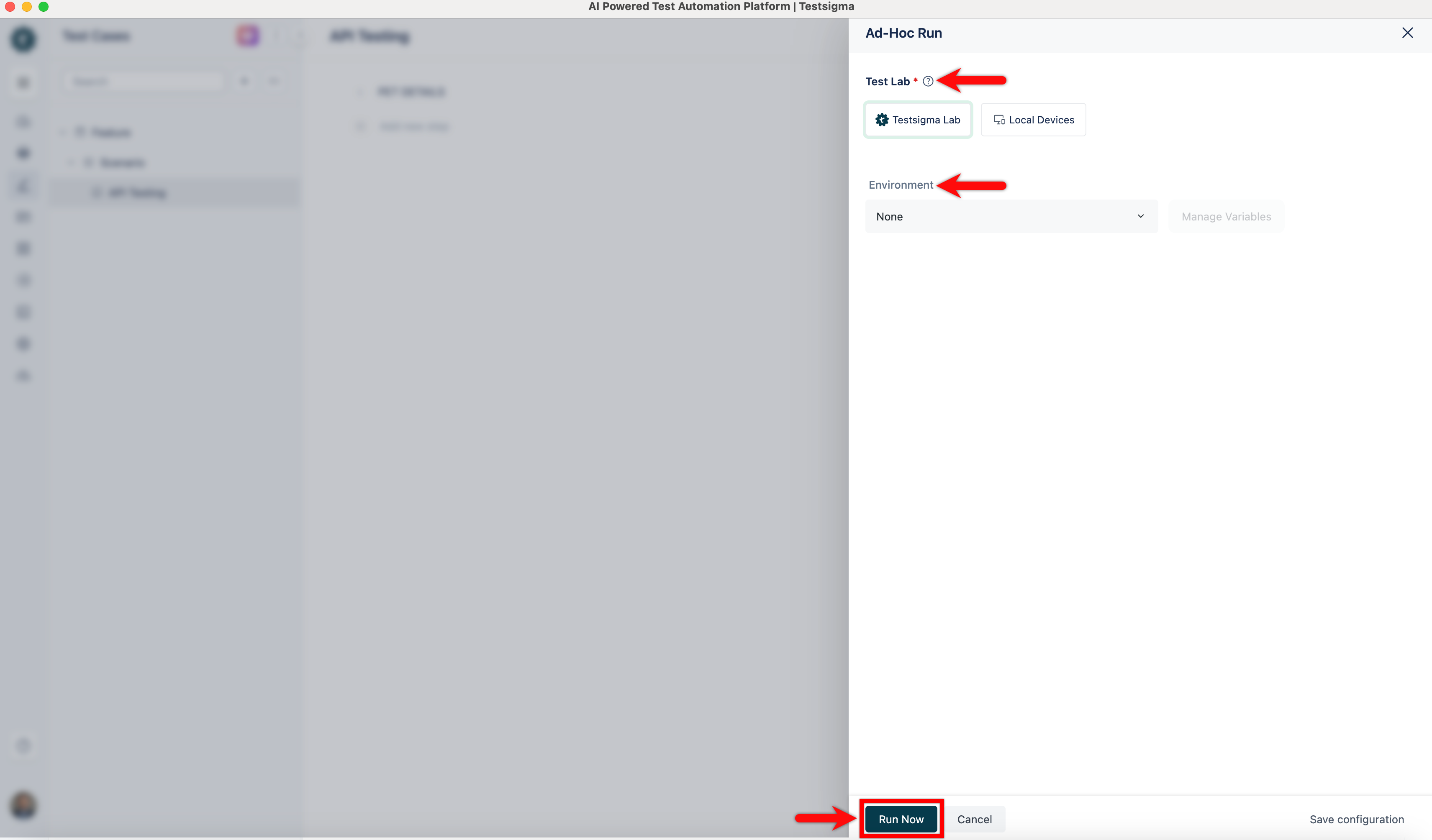

1. **Test Lab**: Select the test lab, either **Testsigma Cloud Lab** or **Local Devices**, to run the test case.

2. **Environment**: Select the test environment.

3. **Desired Capabilities**: Provide **Key**, **Data Type** and **Value** under Desired Capabilities.

-4. Click on **Run Now**.

+4. Click on **Run Now**.

---

@@ -189,10 +189,10 @@ In the Ad-hoc run layover, provide the following configuration settings for a Re

You can add a prerequisite test case for any test case while executing the test case as dry runs. Follow the steps below to add a prerequisite test case for dry runs.

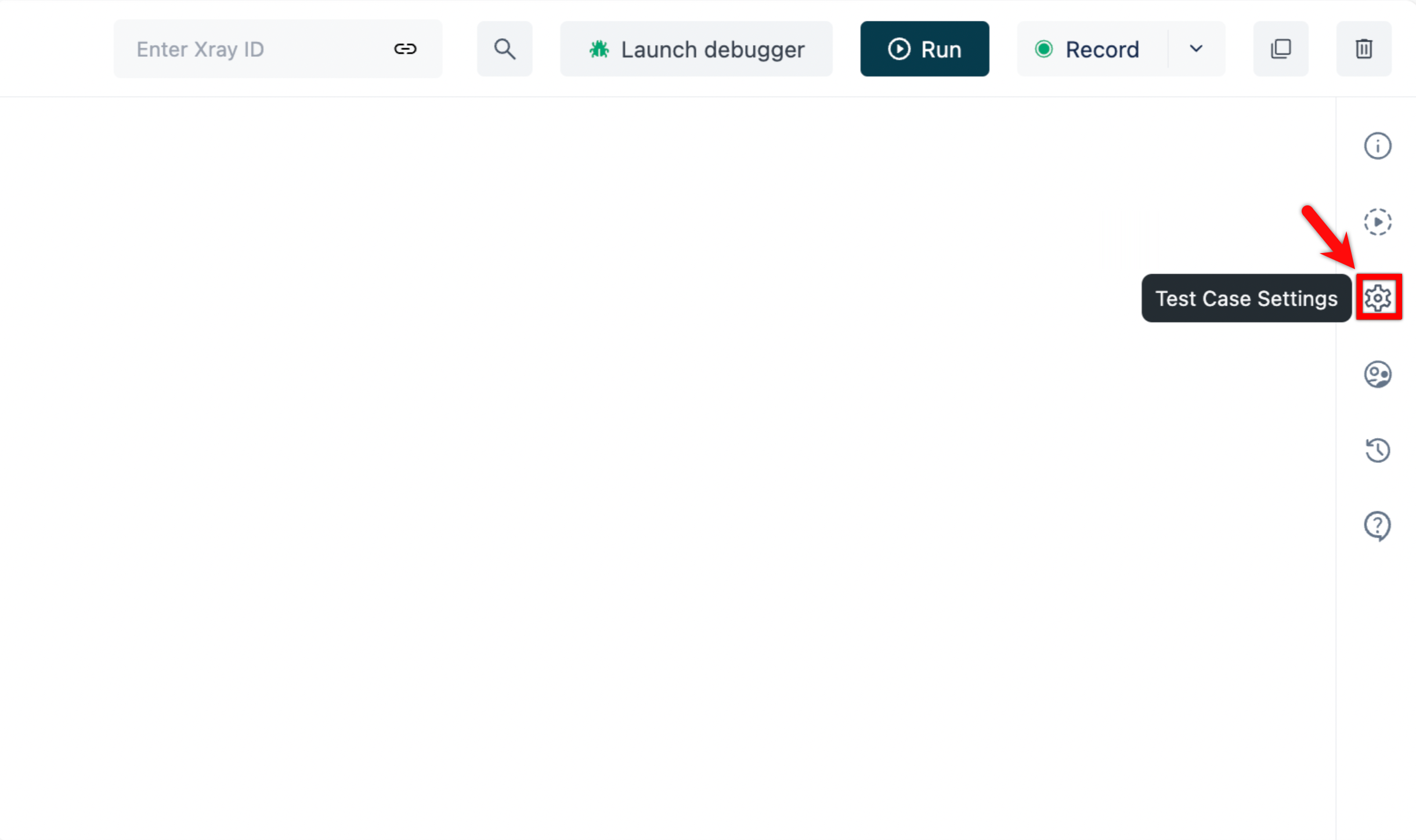

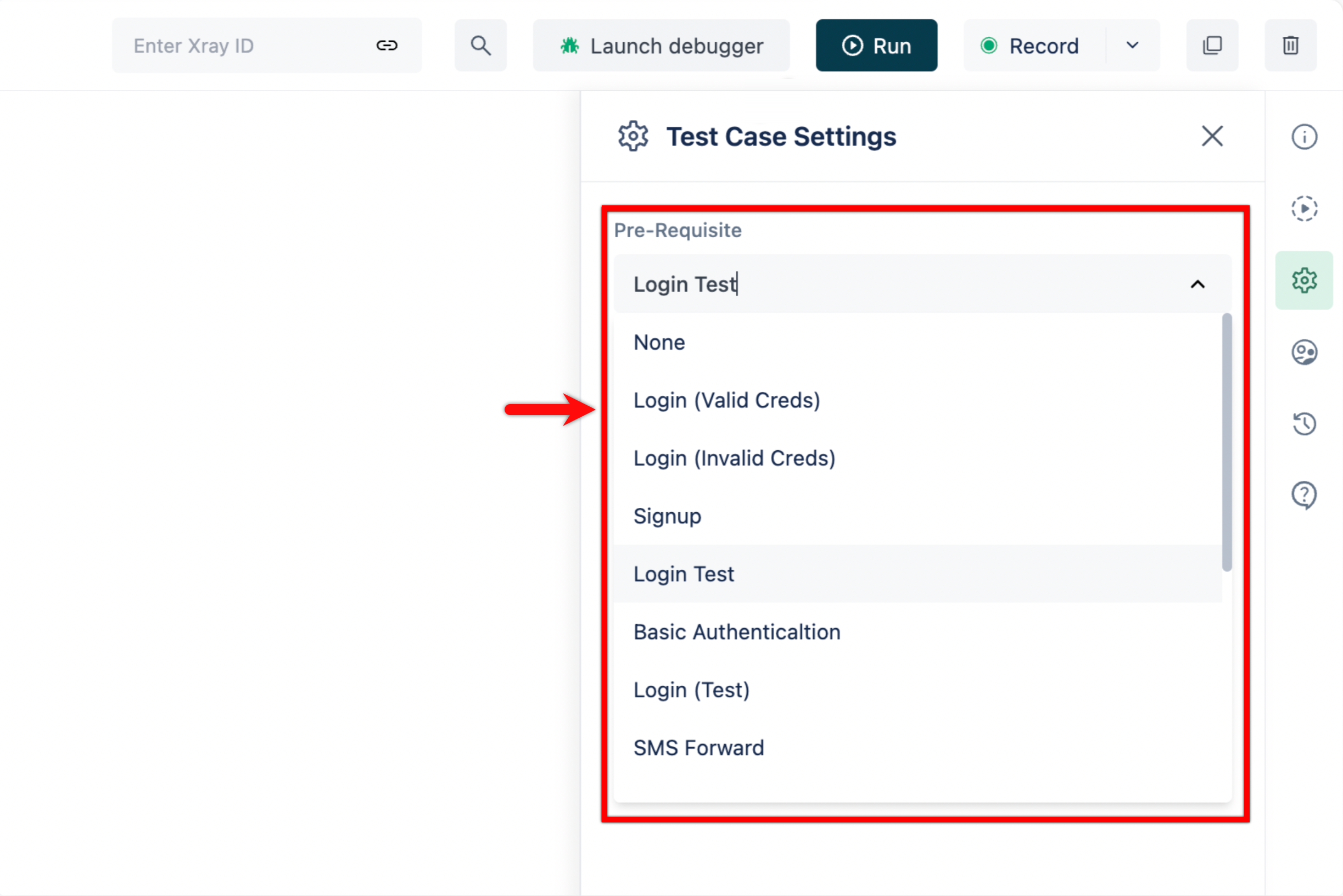

1. Navigate to **Create Tests > Test Cases**, select a test case for which you want to add prerequisites and click on **Test Case Settings**.

-

+

2. Select the prerequisite test case from the dropdown.

-

+

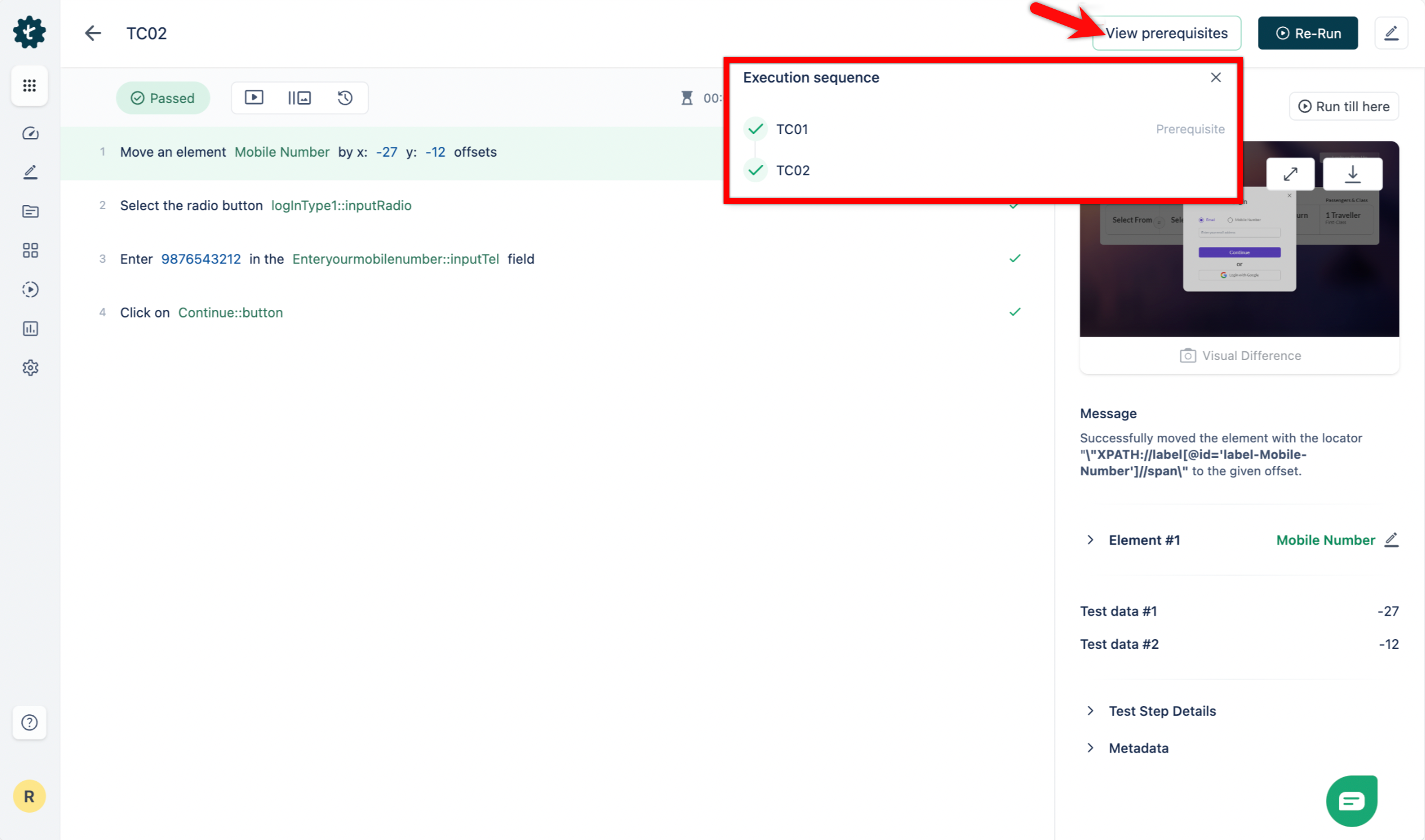

3. For **Dry Runs**, the test case will be executed after the prerequisite test case. You can check the **Execution sequence** on the test case results page.

-

\ No newline at end of file

+

\ No newline at end of file

diff --git a/src/pages/docs/runs/test-plan-executions.md b/src/pages/docs/runs/test-plan-executions.md

index 73aeada9..e4db4ae7 100644

--- a/src/pages/docs/runs/test-plan-executions.md

+++ b/src/pages/docs/runs/test-plan-executions.md

@@ -47,18 +47,13 @@ This article guides the user to prepare and execute test plans in Testsigma plat

## **Steps to Create and Execute Test Plan**

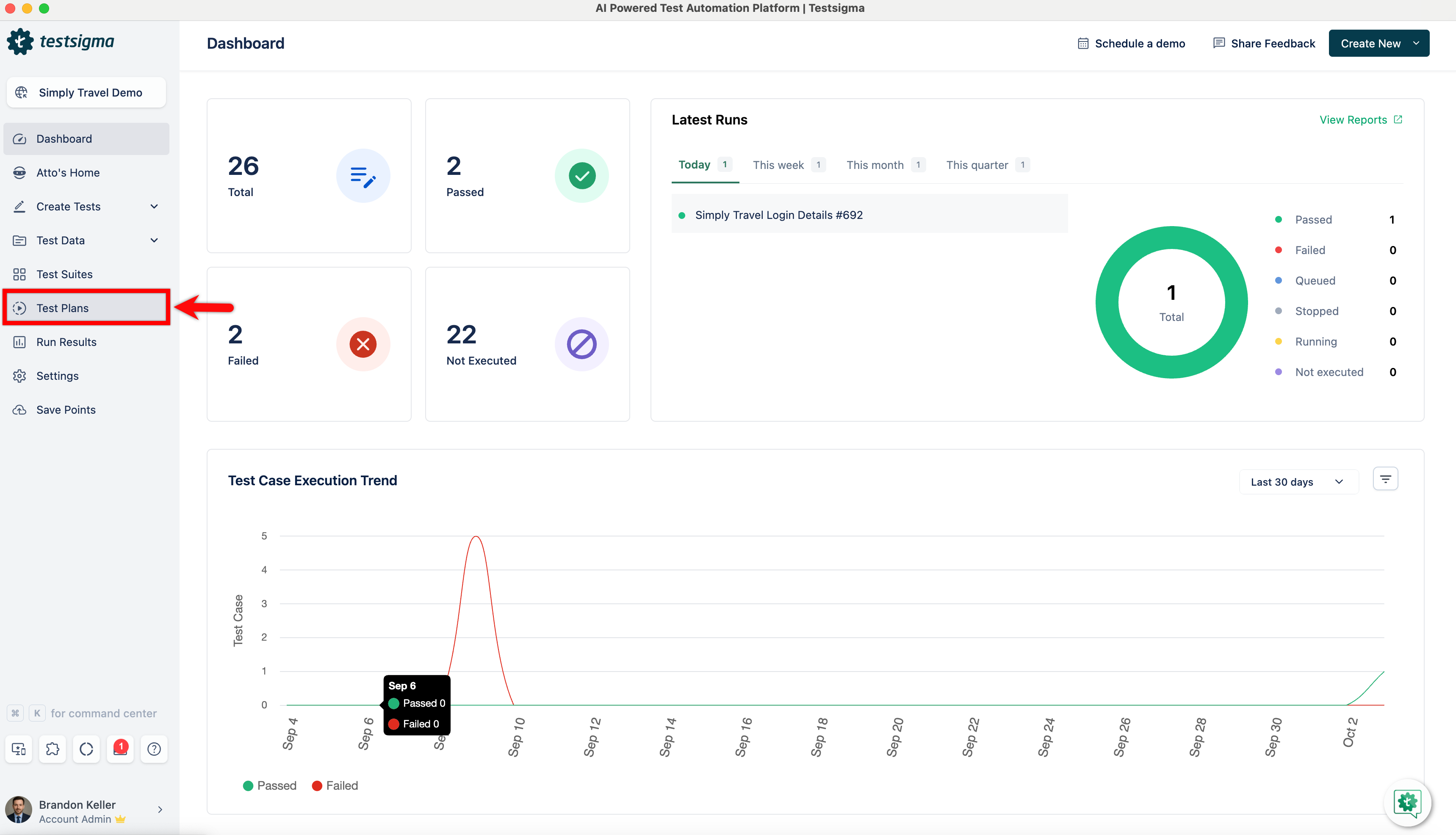

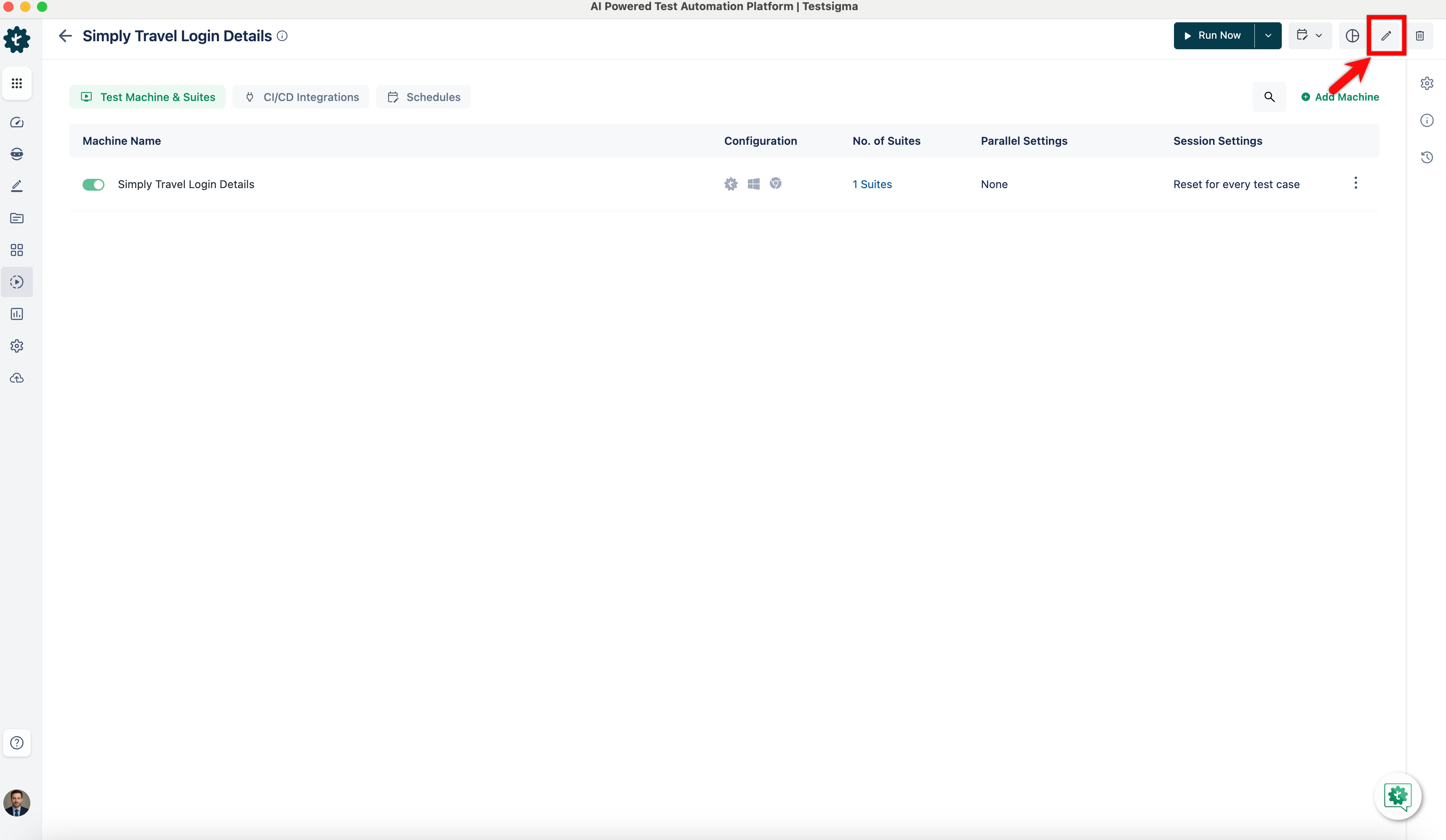

1. Create a test case with all the necessary steps.

-

2. Create a test suite and add the test case created in step 1 to this test suite.

-

-

3. Create a test plan and add the test suite we just created to this test plan.

-

-

-1. Click on the **Run Now** to start the test plan execution.

-

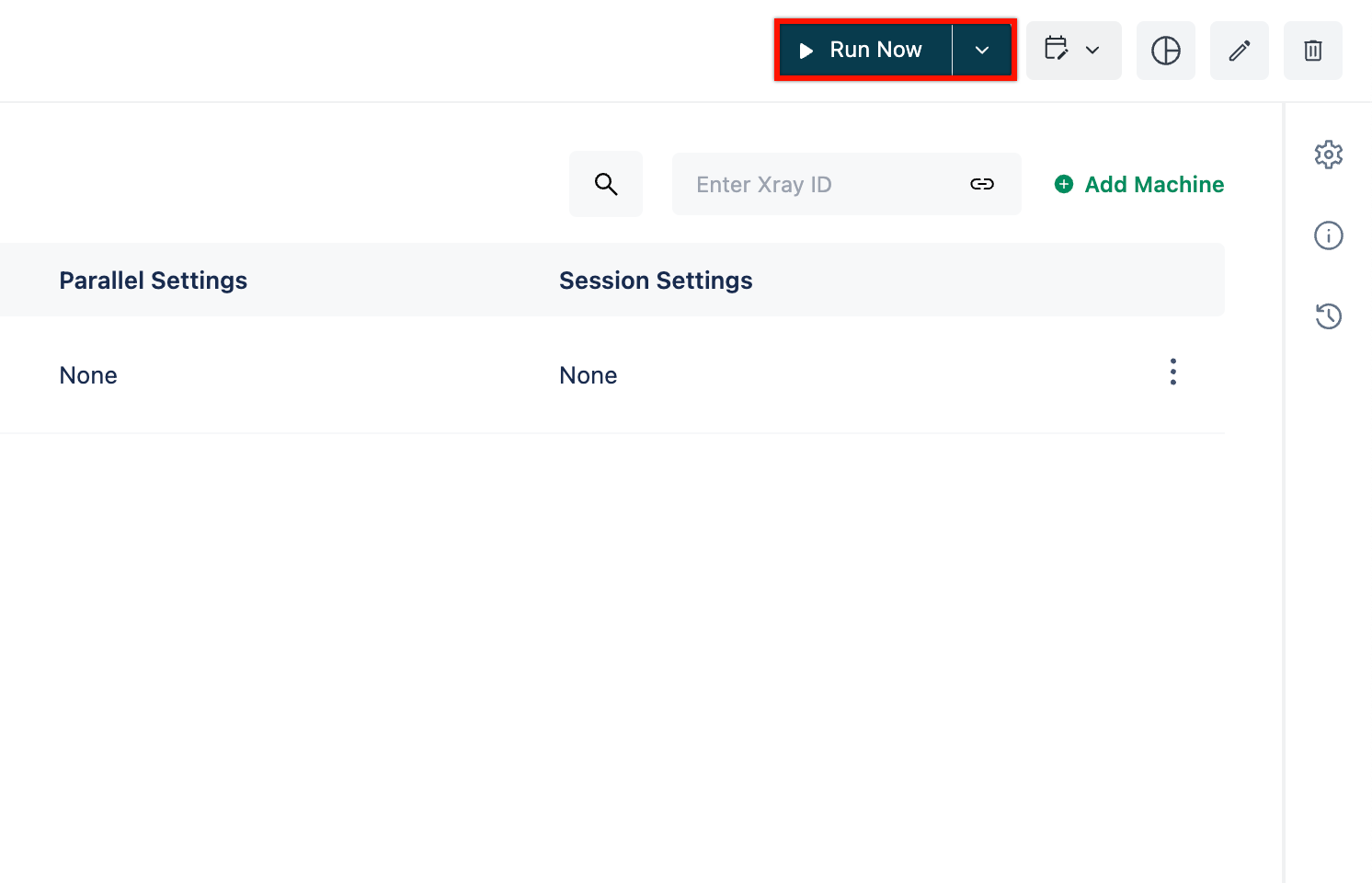

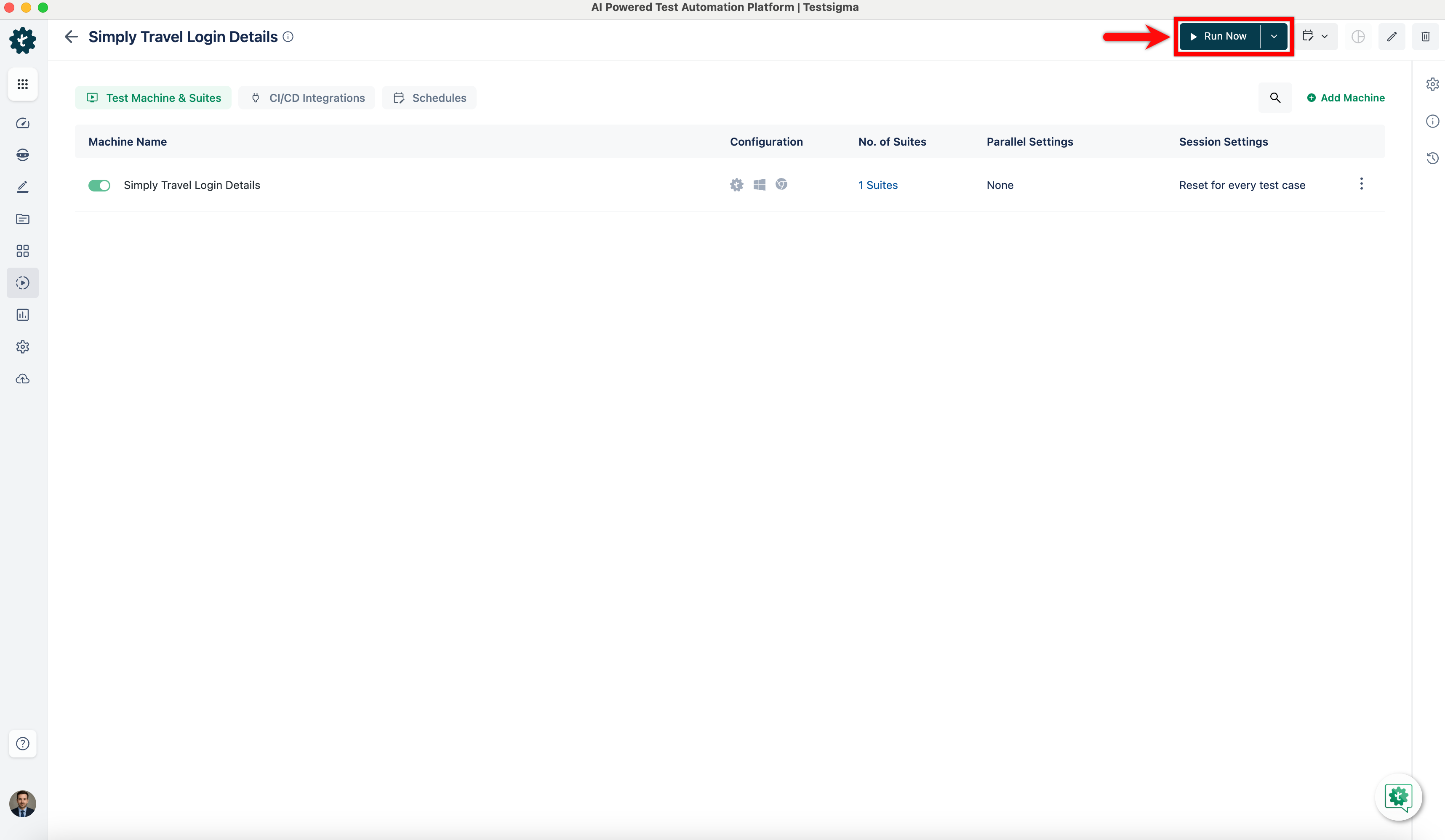

+4. Click **Run Now** to start the test plan execution.

+

[[info | **NOTE**:]]

| Whitelisting of Testsigma IP addresses is necessary to ensure that the Testsigma servers and lab environments can securely interact with your applications and systems for testing activities. For more information on whitelisting Testsigma IP addresses, refer to the [documentation on whitelisting Testsigma IP addresses](https://testsigma.com/docs/runs/test-locally-hosted-applications/#whitelisting-testsigma-ip-addresses)

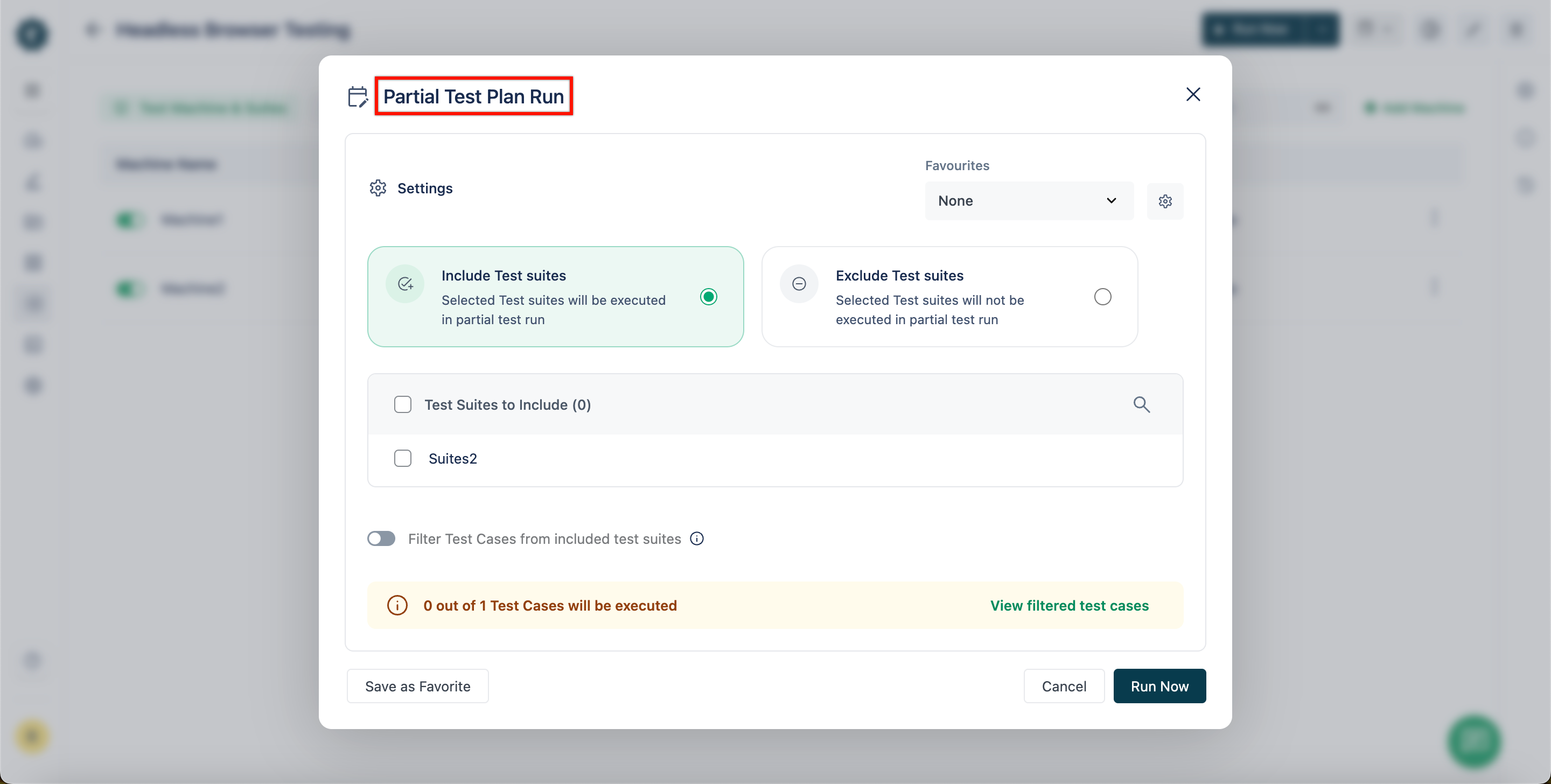

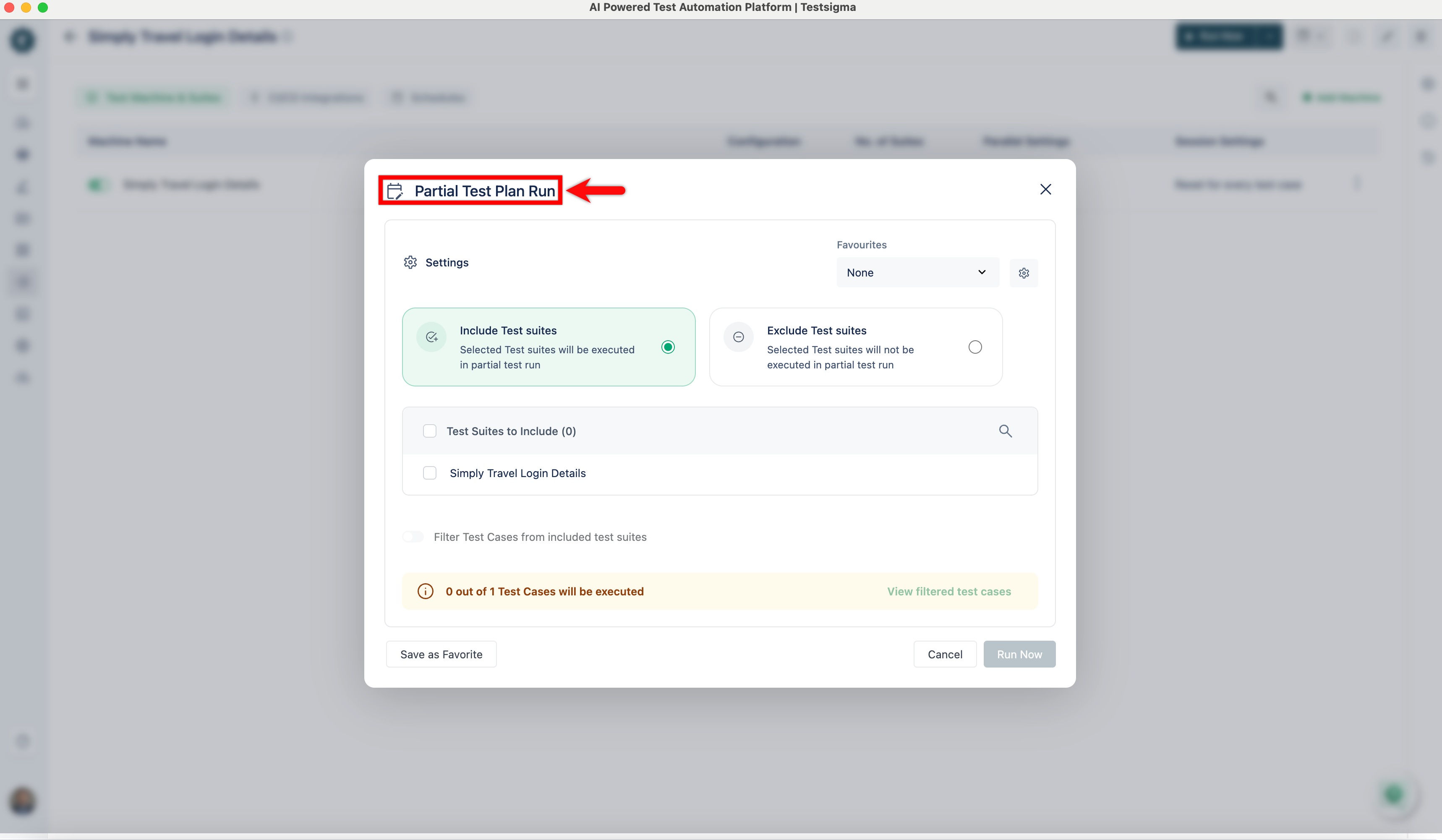

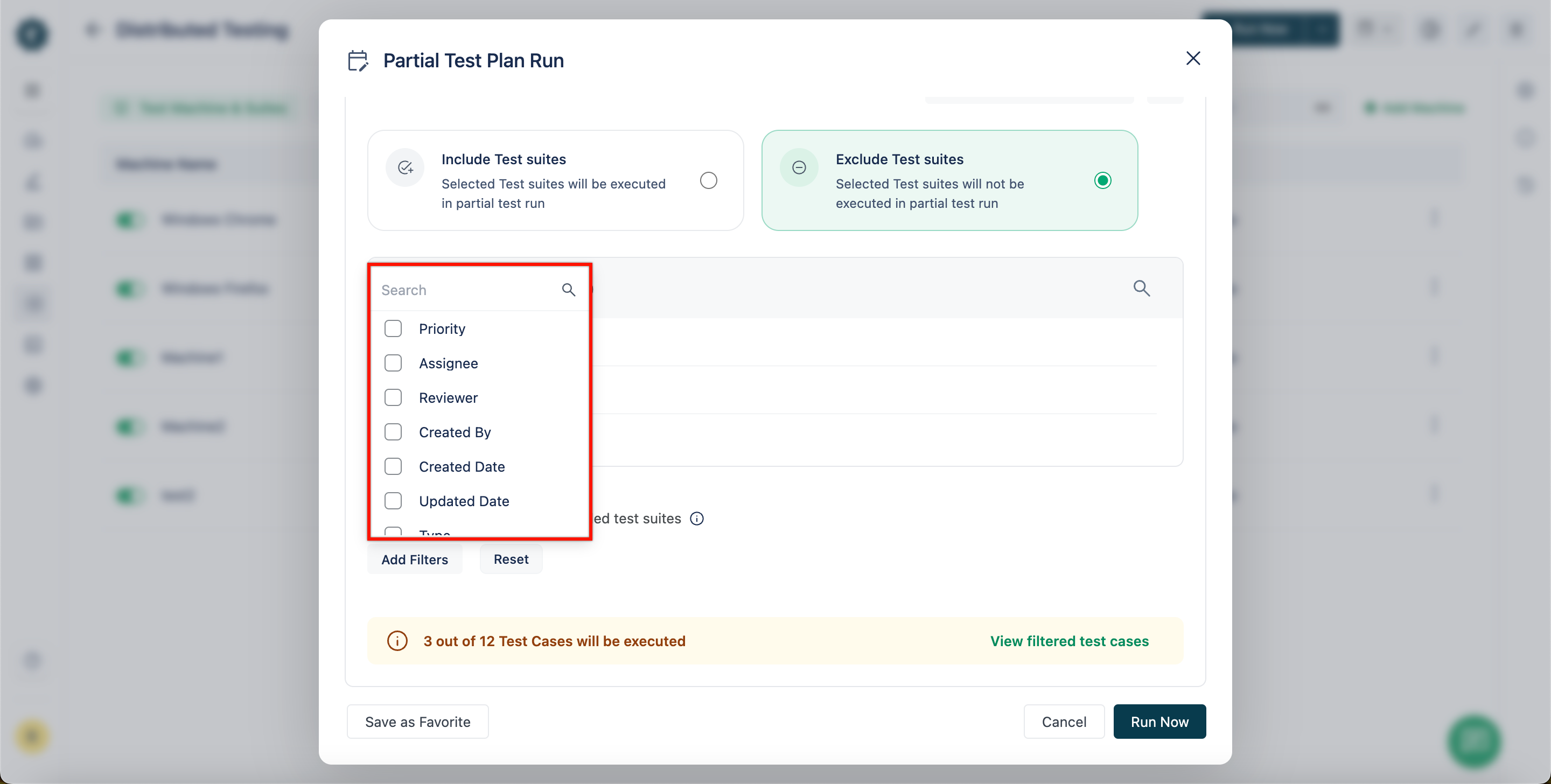

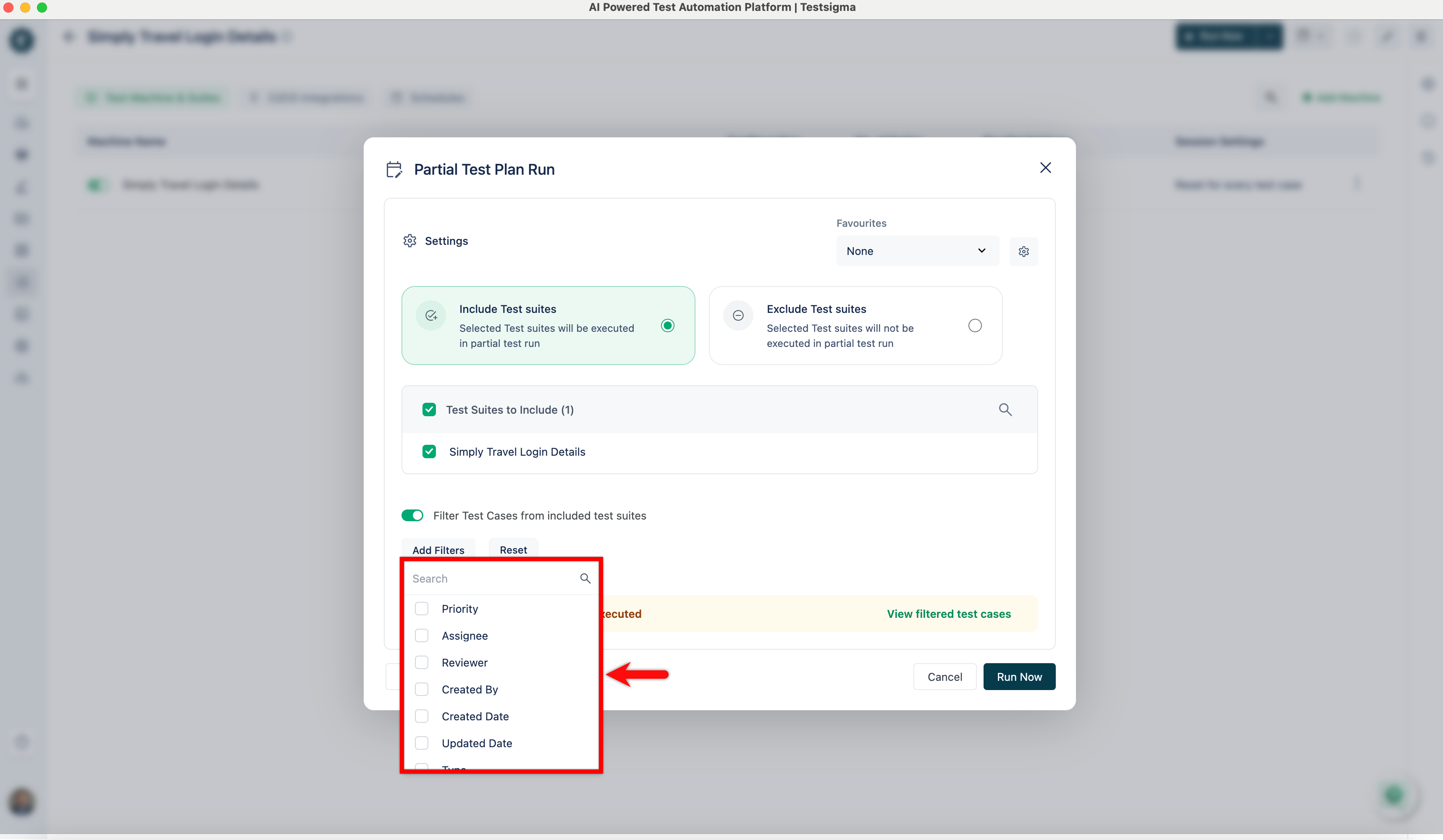

@@ -72,11 +67,11 @@ There are instances when you need to execute your test plan only partially inste

**Let's see how we can do that:**

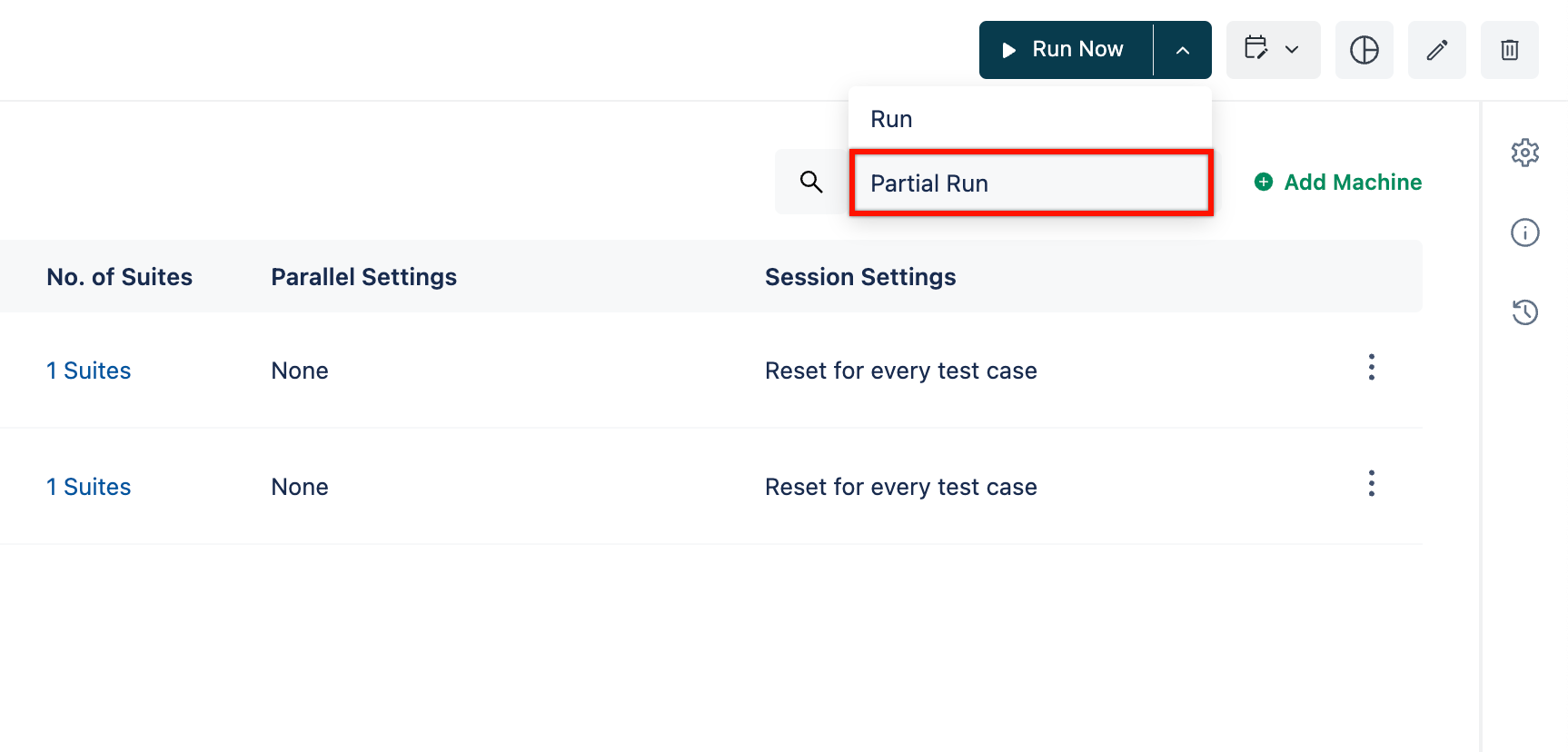

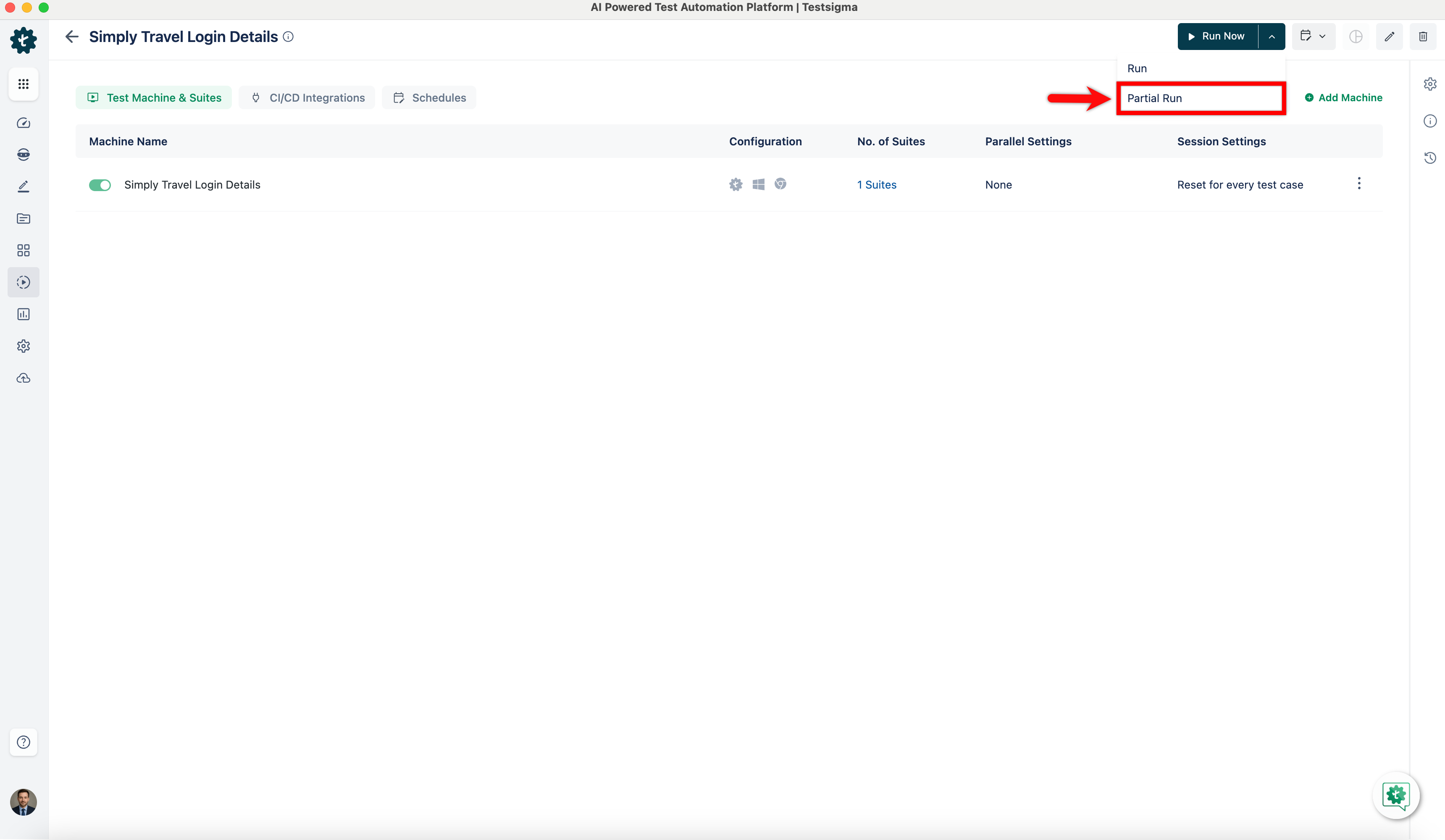

1. Go to the test plan that you want to execute partially and open the dropdown and click on **Partial Run**.

-

+

1. On **Partial Test Plan Run** overlay, configure the options according to how you want to select the test cases for your partial test run:

-

+

**Below are the options you see:**

@@ -85,7 +80,7 @@ There are instances when you need to execute your test plan only partially inste

3. **Test Case Filters**: You can identify the test cases to be included in the test suites using the below filters:

-

+

- **Labels**: Filter the test cases according to the labels assigned to them.

- **Type**: Filter the test cases according to the type of test cases there are.

- **Requirement**: Filter the test cases according to the requirements assigned to them.

@@ -117,10 +112,10 @@ Once you have applied the filters, you can:

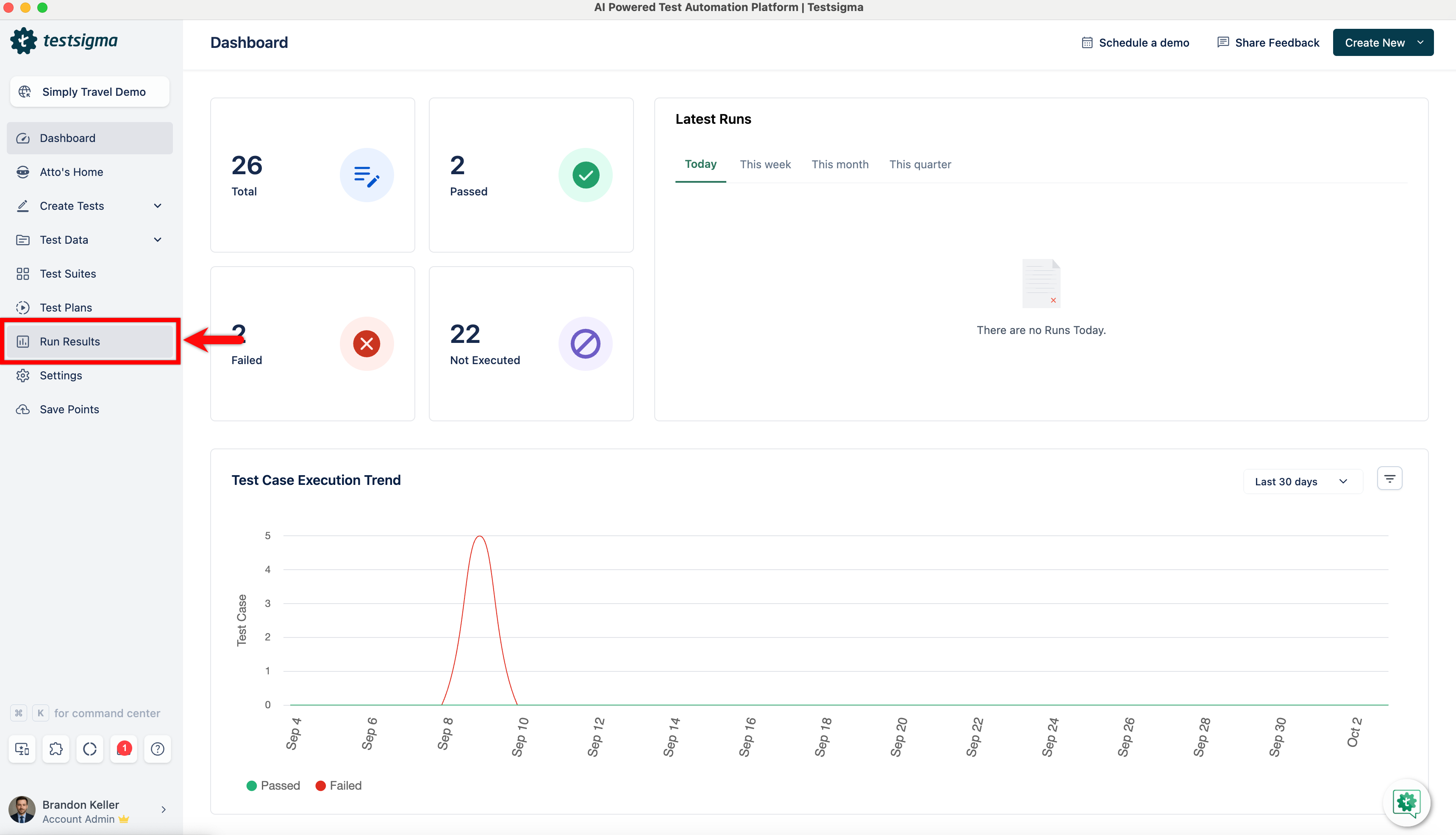

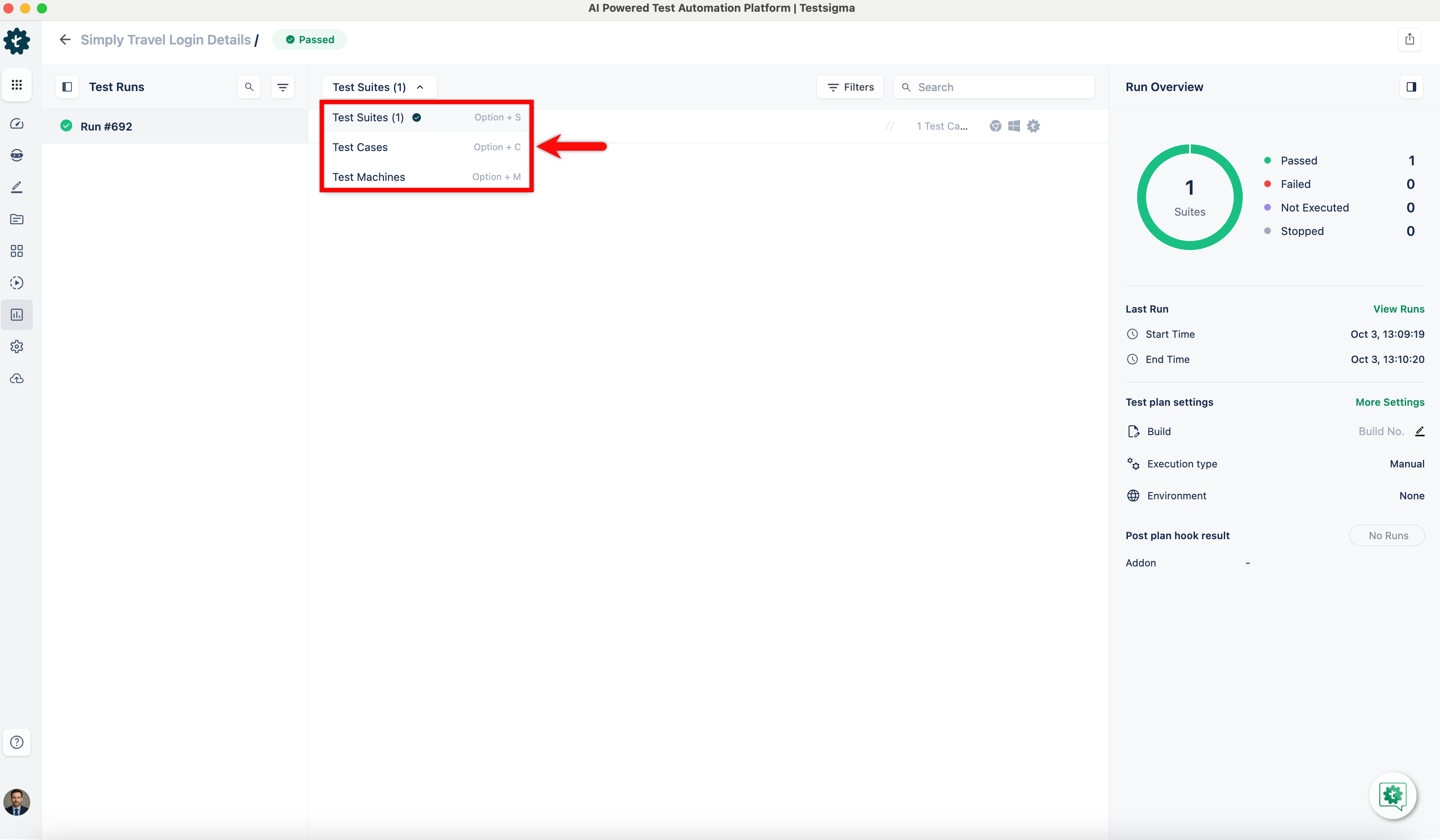

1. From the left navigation bar, go to **Run Results** and click on the test plan for which you want to check the results.

-

+

2. By default, you’ll see the results at test suite level.

-

+

[[info | **NOTE**:]]

| You can also view reports at test suite and test machine level by clicking on **Test Suite** and **Test Machine**.

diff --git a/src/pages/docs/salesforce-testing/special-nlps.md b/src/pages/docs/salesforce-testing/special-nlps.md

index 8c3b0692..af9bd588 100644

--- a/src/pages/docs/salesforce-testing/special-nlps.md

+++ b/src/pages/docs/salesforce-testing/special-nlps.md

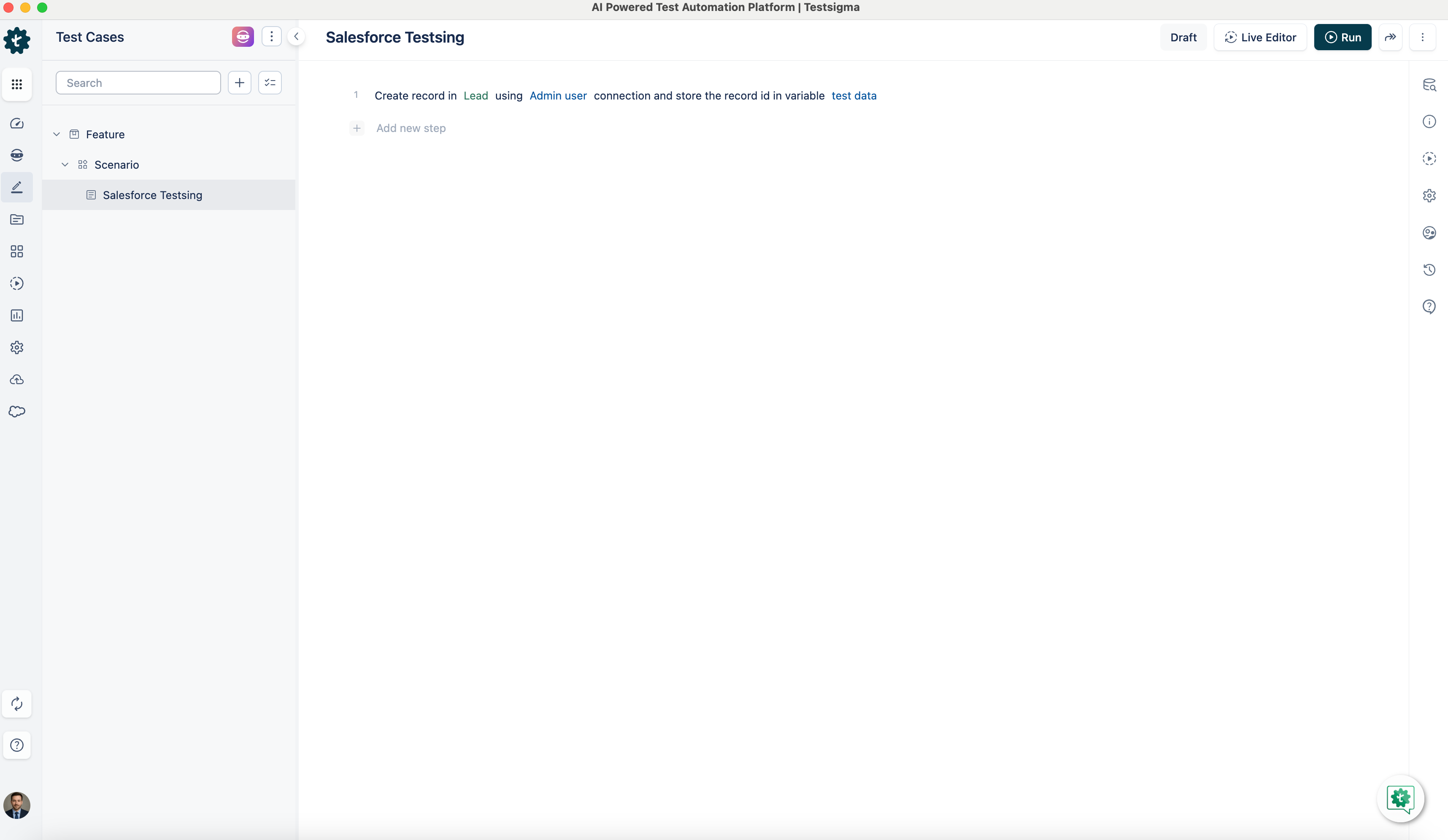

@@ -134,7 +134,7 @@ This NLP creates a new record for the selected **Salesforce object**.

See the GIF below demonstrating how to use this NLP.

-

+

[[info | **NOTE**:]]

| You can also select the **Record Type** when entering details in the API step. However, ensure you only enter details relevant to that specific **Record Type**, or the test case will fail.

@@ -150,7 +150,7 @@ This NLP opens edit form of the selected **Salesforce object** using **Record ID

See the GIF below demonstrating how to use this NLP.

-

+

---

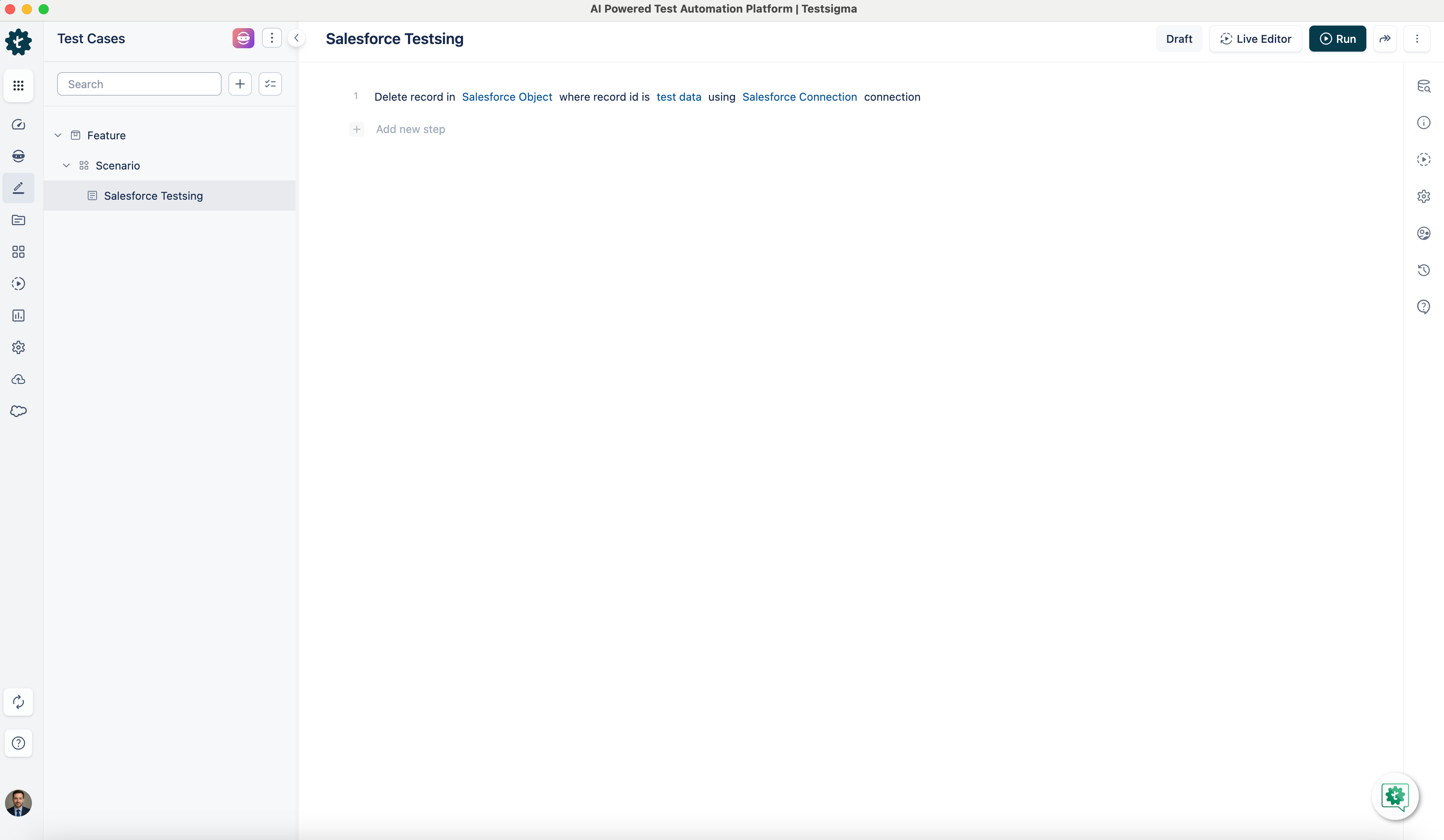

@@ -162,7 +162,7 @@ This NLP deletes a record for the selected **Salesforce object** using **Record

See the GIF below demonstrating how to use this NLP.

-

+

---

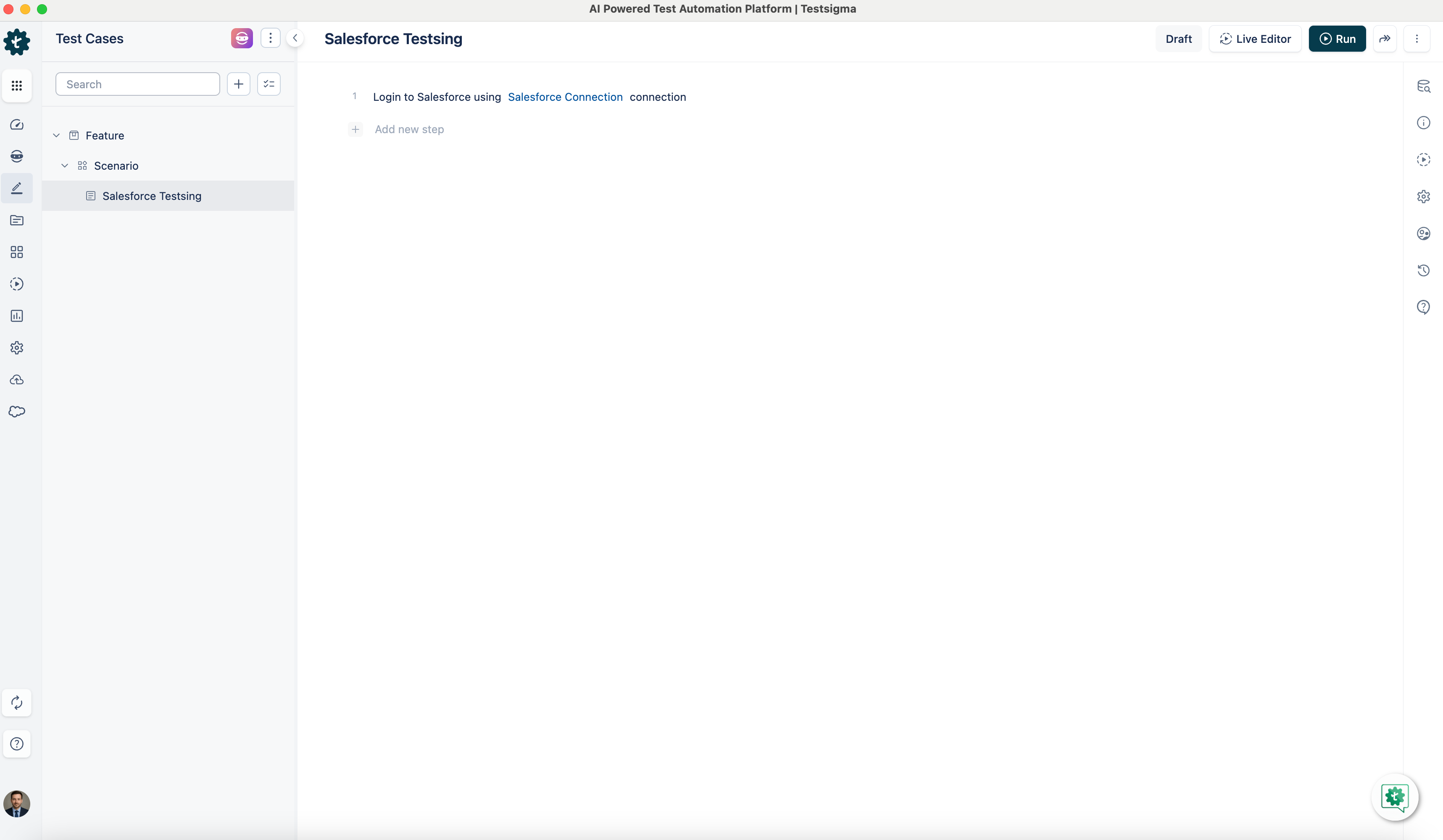

@@ -175,7 +175,7 @@ This NLP enables users to log in to Salesforce with a selected user connection.

See the GIF below demonstrating how to use this NLP.

-

+

---

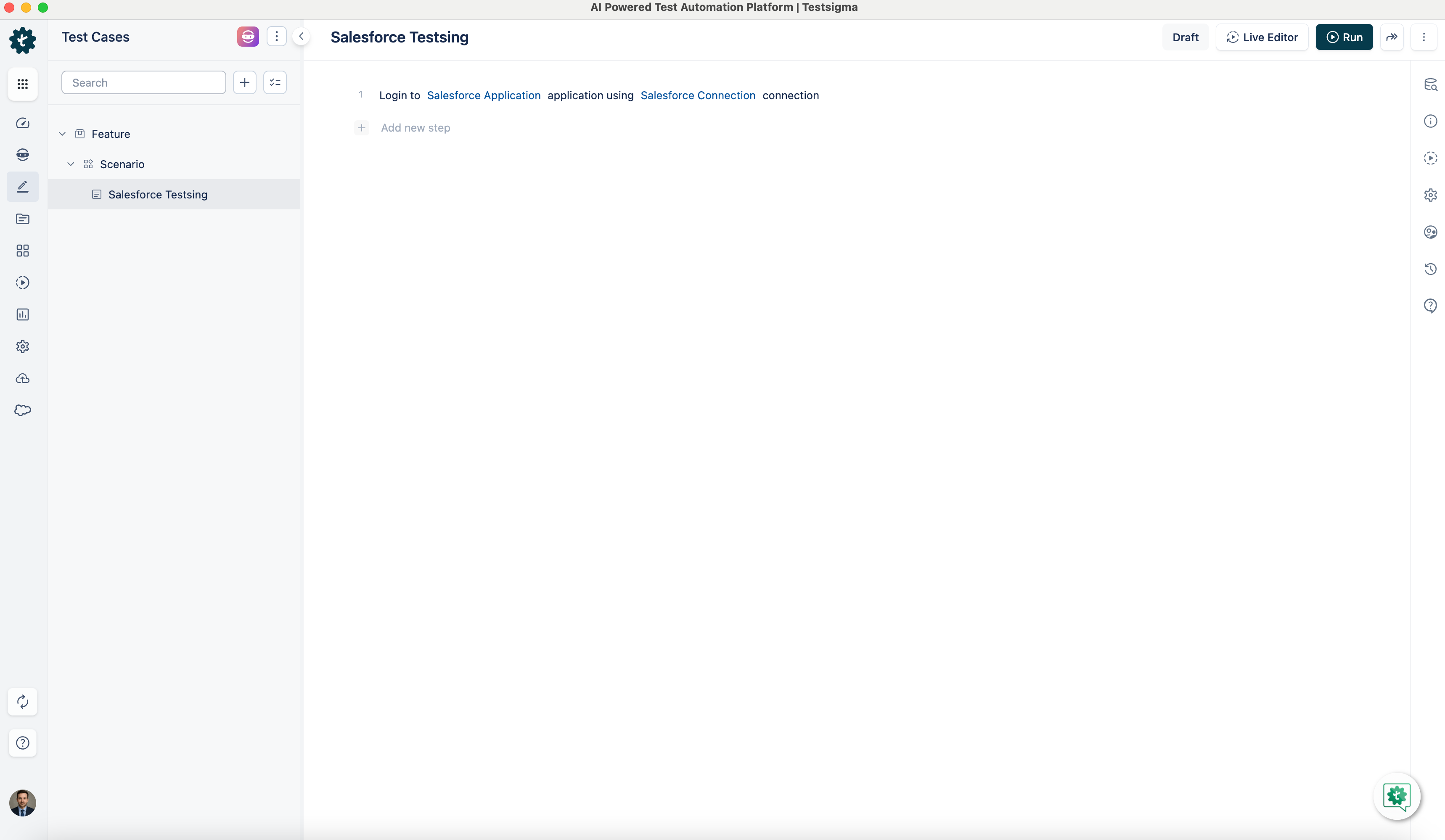

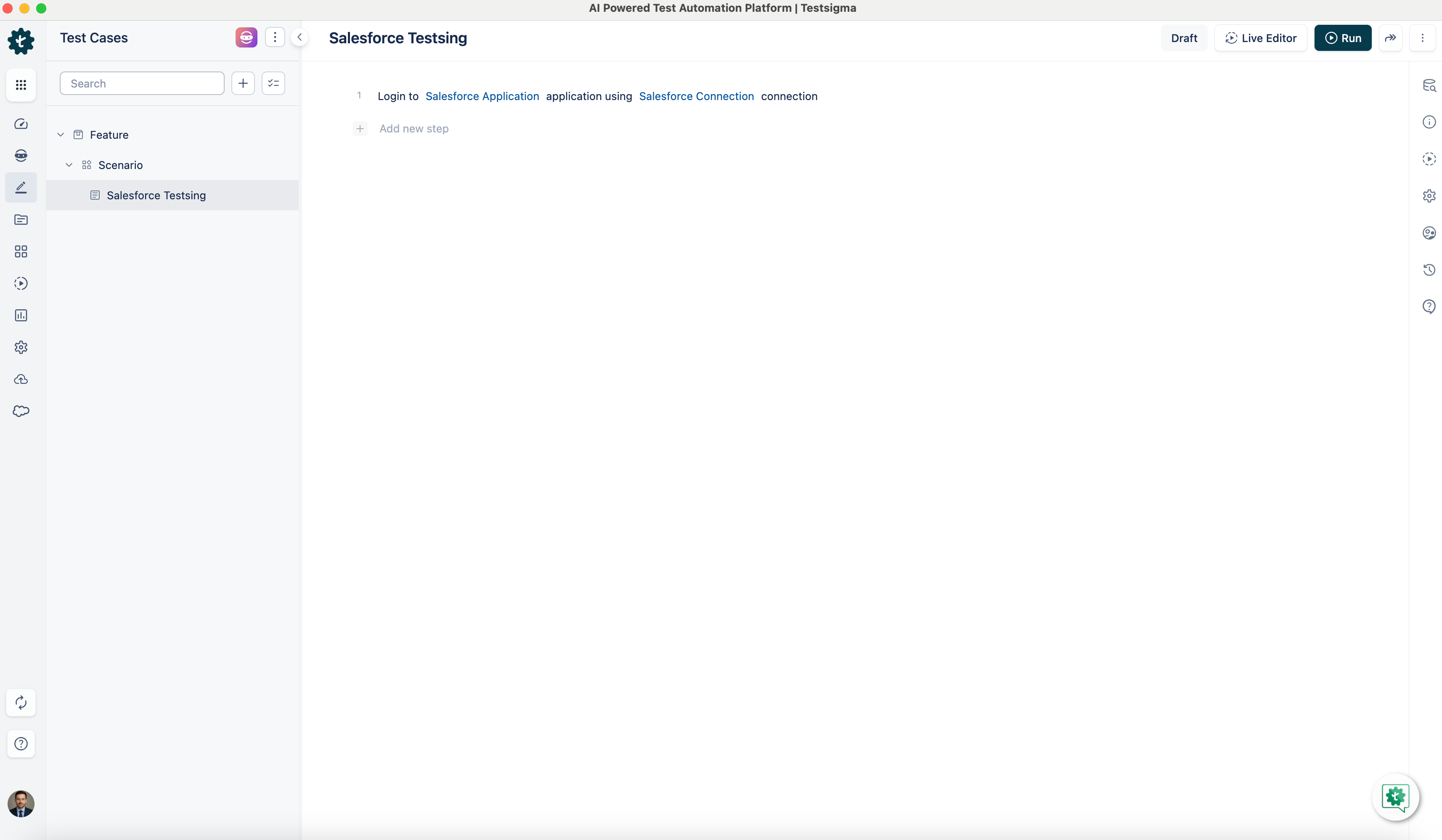

@@ -189,7 +189,7 @@ For example, select **Sales** from the dropdown to log in to **Sales CRM**.

See the GIF below demonstrating how to use this NLP.

-

+

---

@@ -203,7 +203,7 @@ For example, select the **HubSpot** application from the dropdown to switch from

See the GIF below demonstrating how to use this NLP.

-

+

---

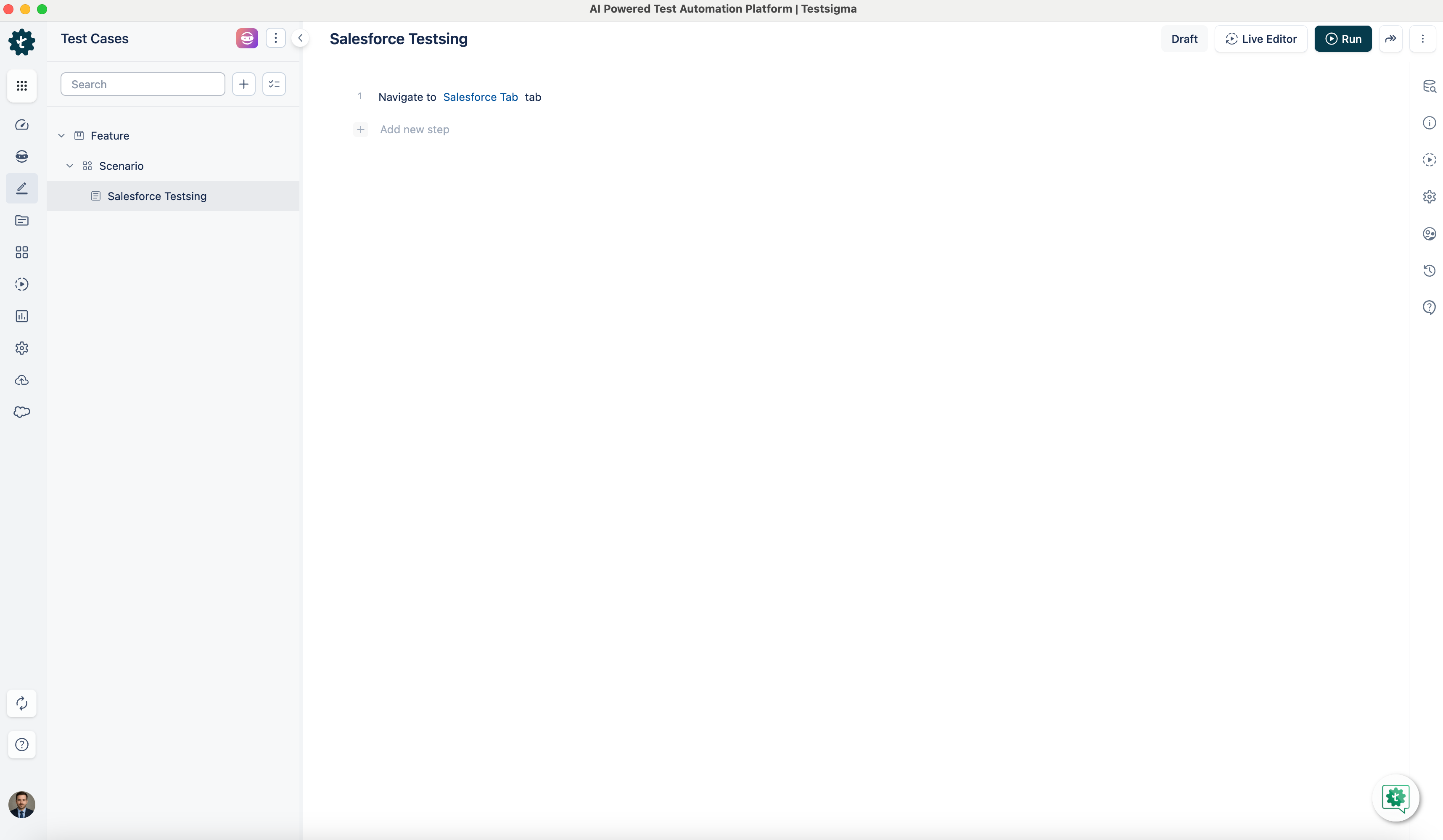

@@ -215,7 +215,7 @@ This NLP navigates users to any standard tabs on the **Salesforce** platform.

See the GIF below demonstrating how to use this NLP.

-

+

---

@@ -229,7 +229,7 @@ For example, to open the record details page of a specific lead, use this NLP.

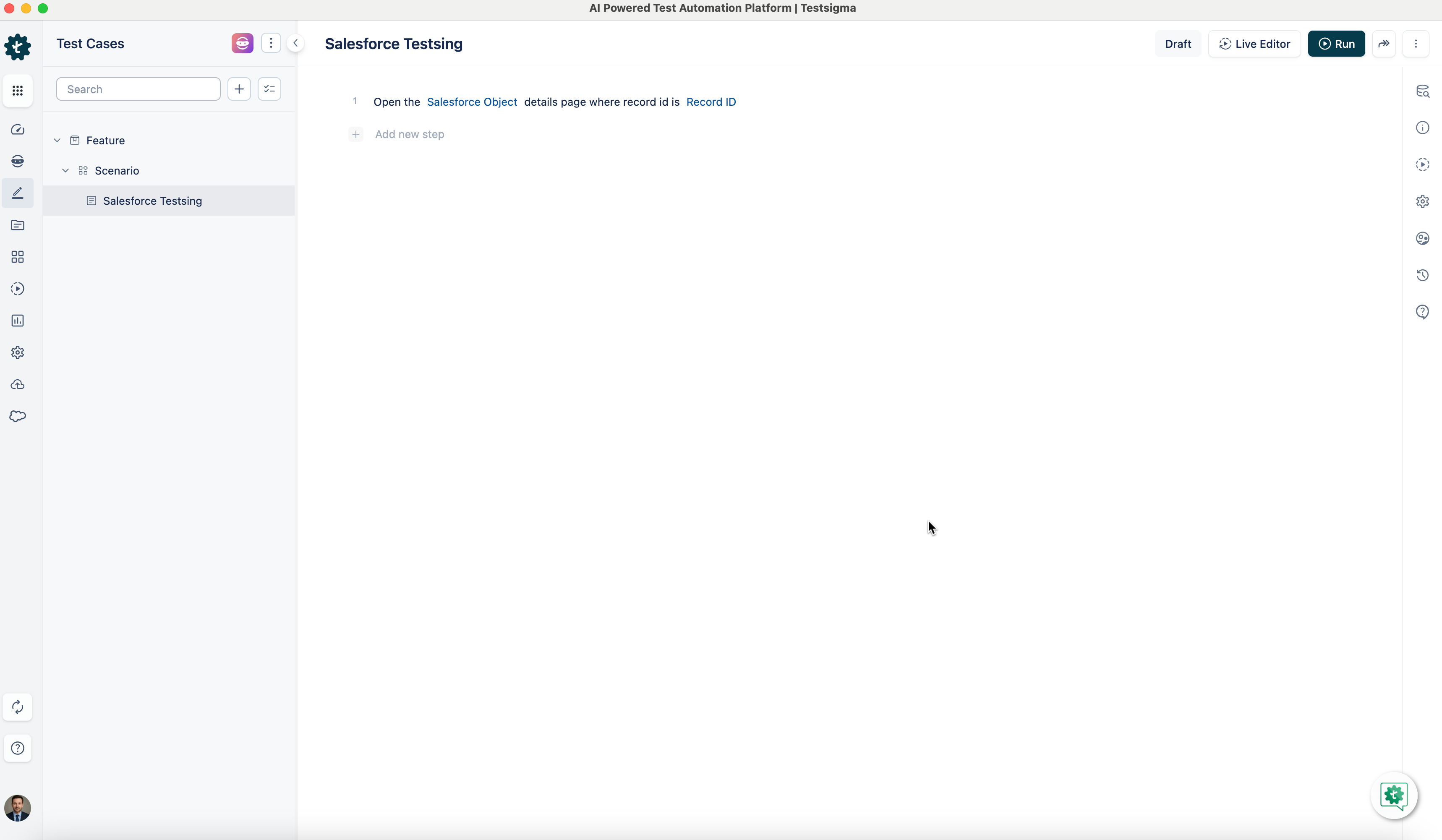

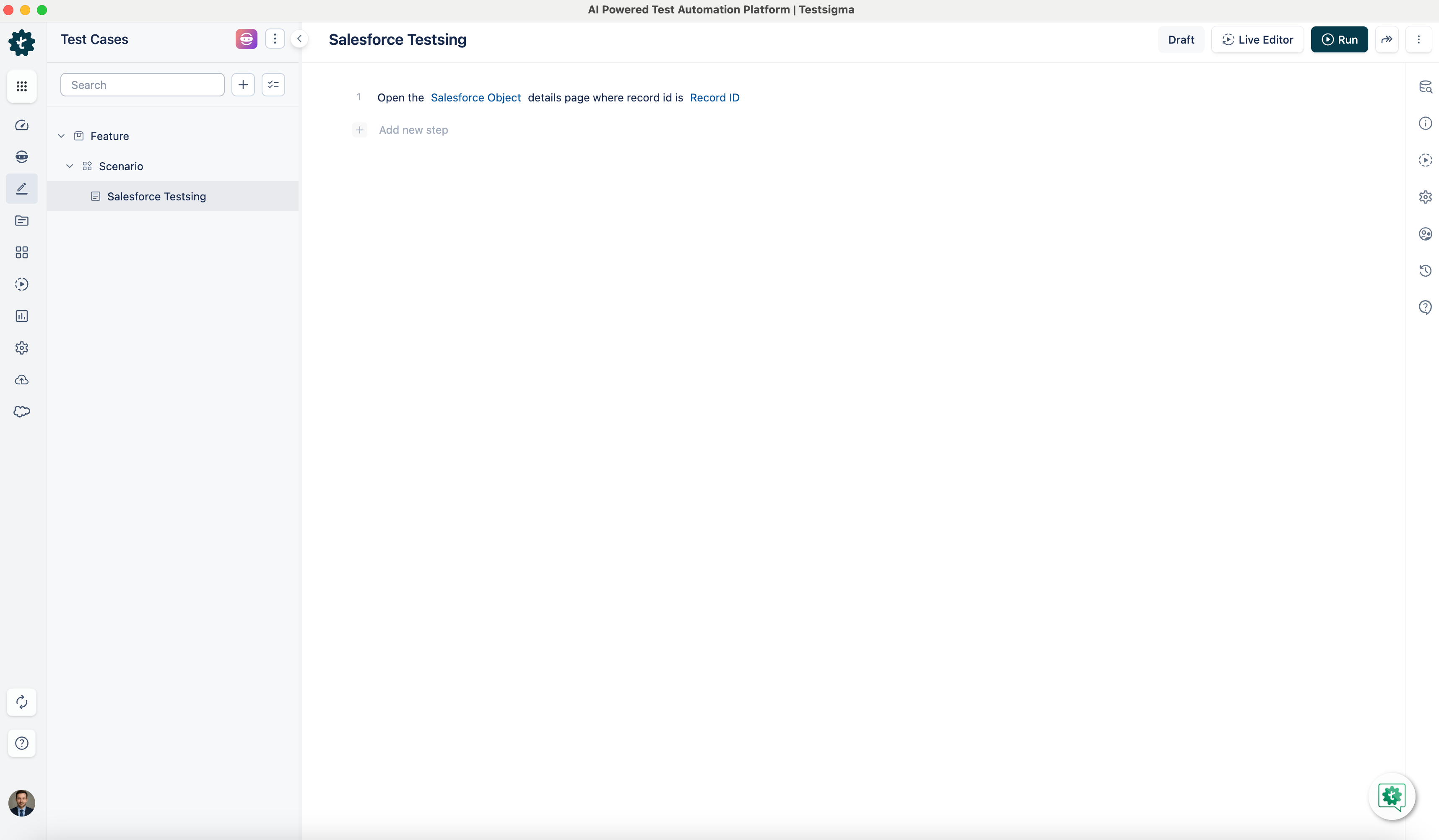

See the GIF below demonstrating how to use this NLP.

-

+

---

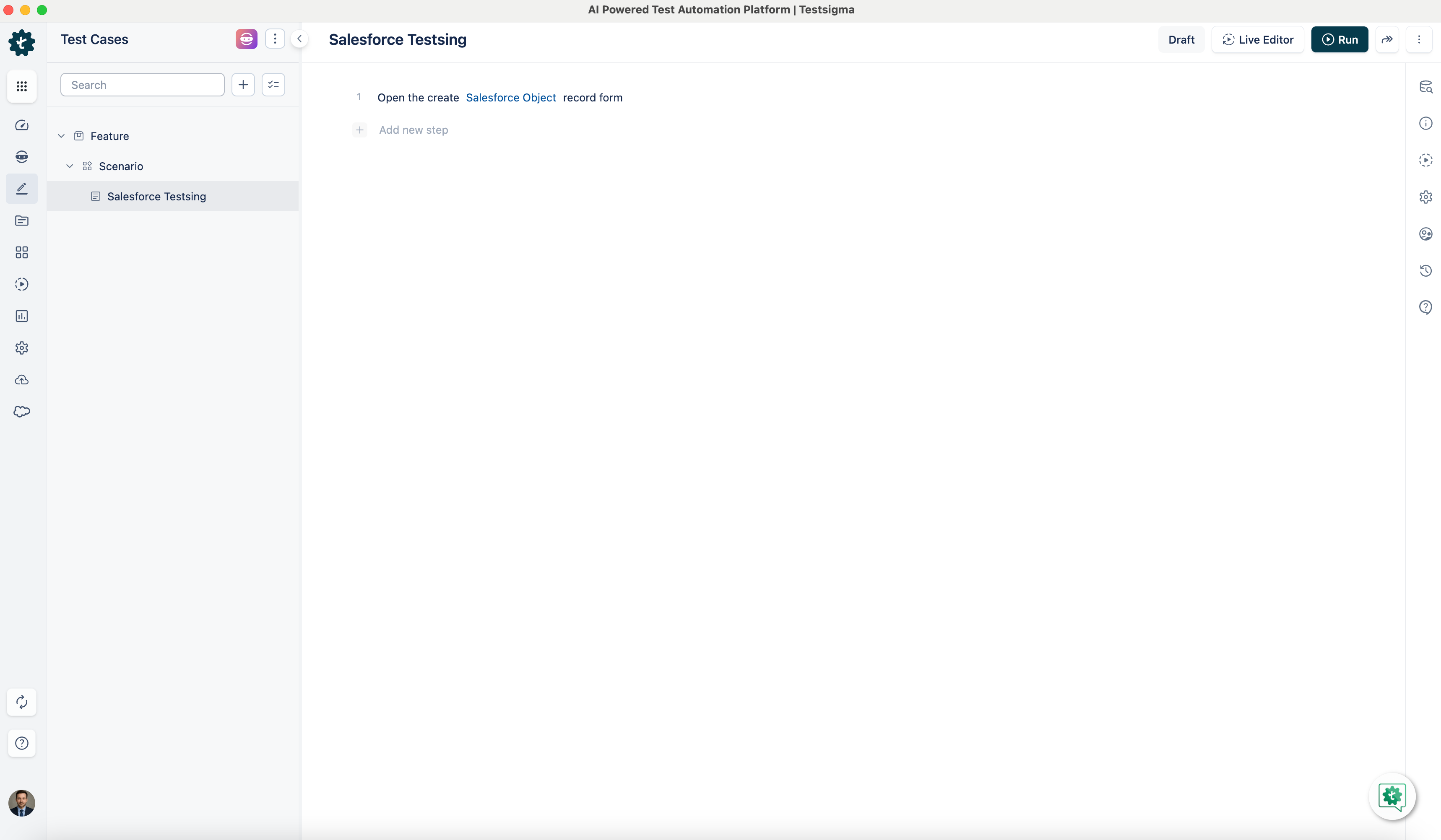

@@ -243,7 +243,7 @@ For example, to create a new lead, use this NLP.

See the GIF below demonstrating how to use this NLP.

-

+

---

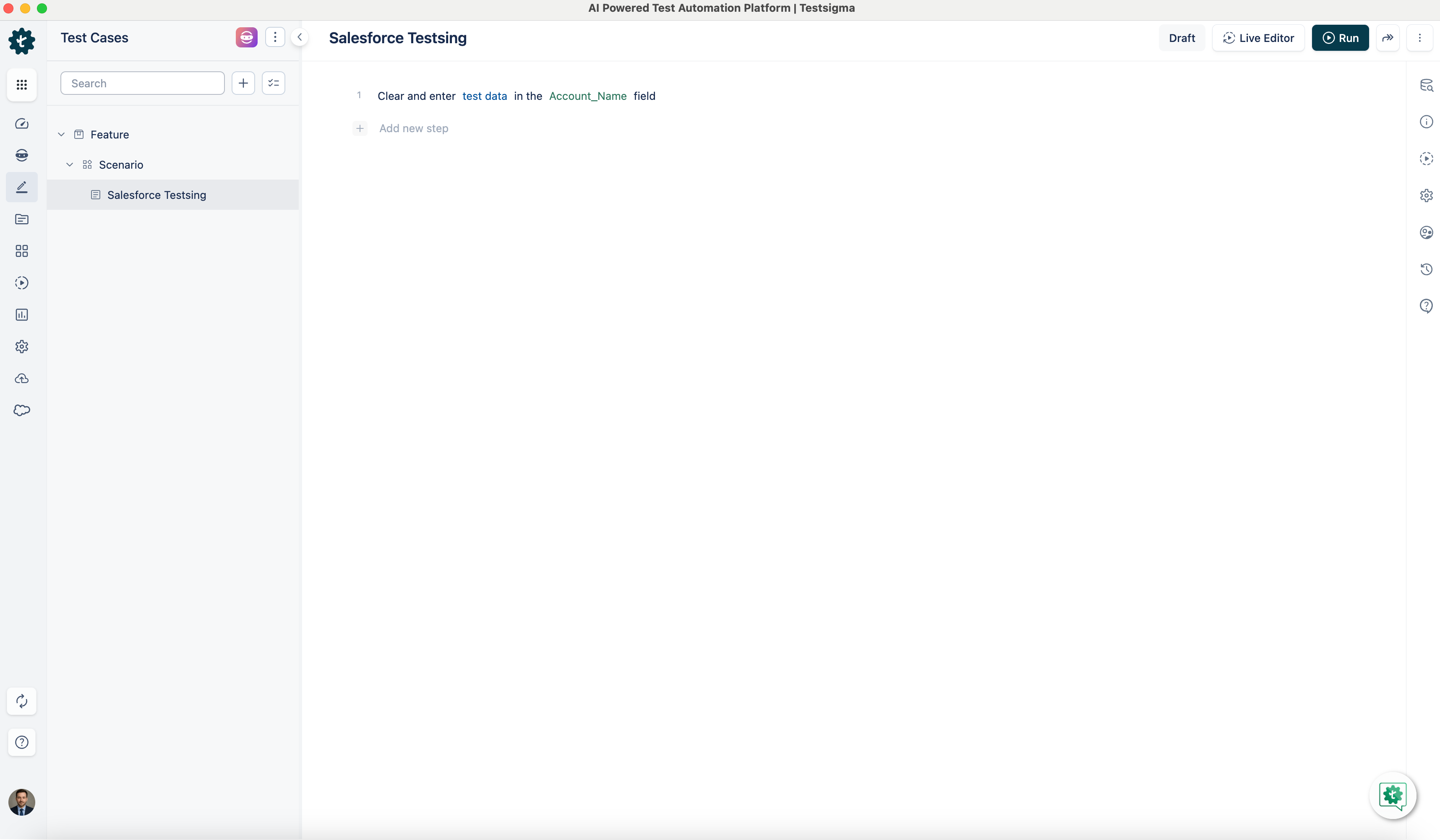

@@ -257,7 +257,7 @@ For example, to replace a URL in the website field of the lead details form, use

See the GIF below demonstrating how to use this NLP.

-

+

---

@@ -271,7 +271,7 @@ For example, to replace a URL with a previous one in the website field of the le

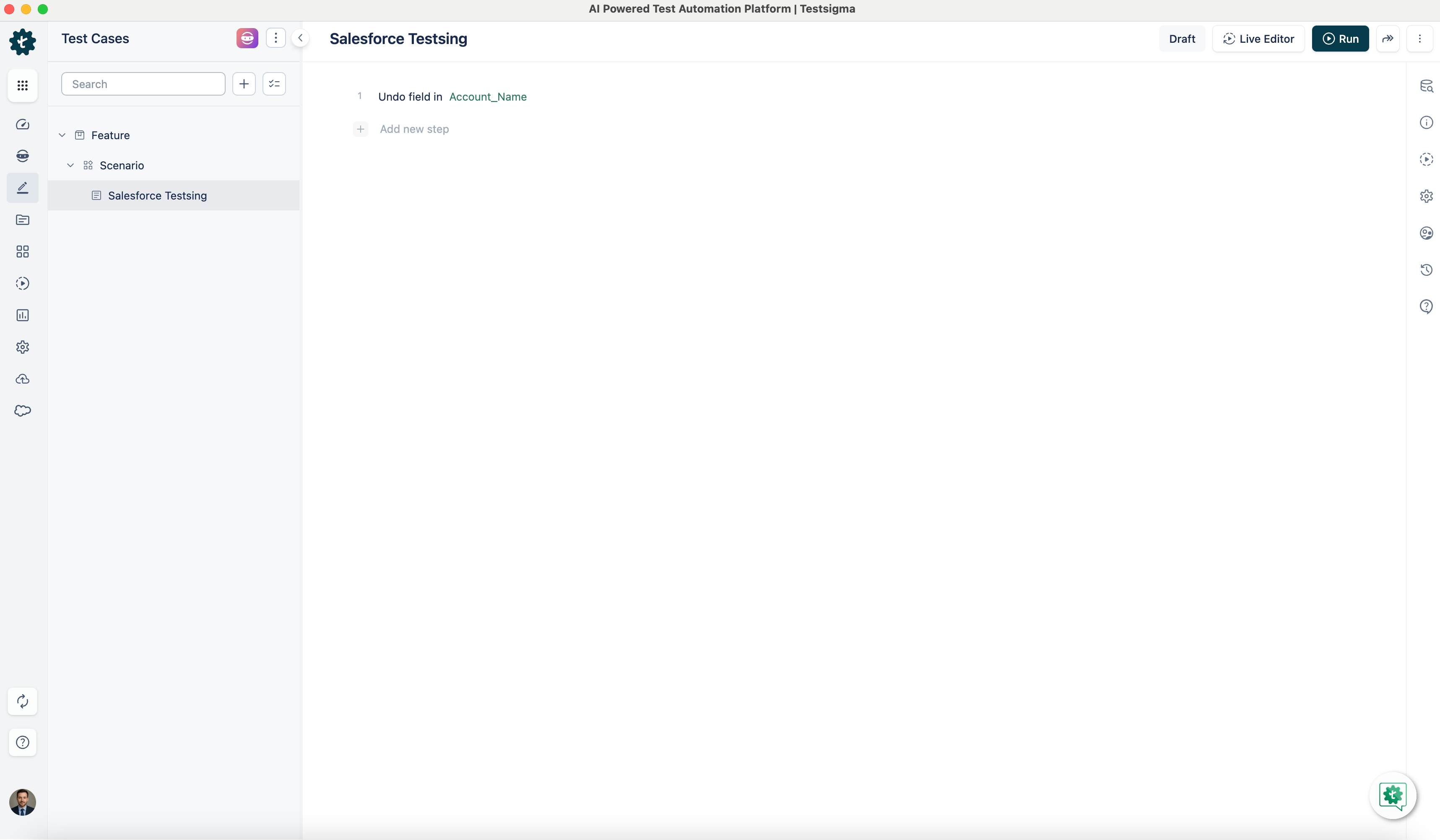

See the GIF below demonstrating how to use this NLP.

-

+

---

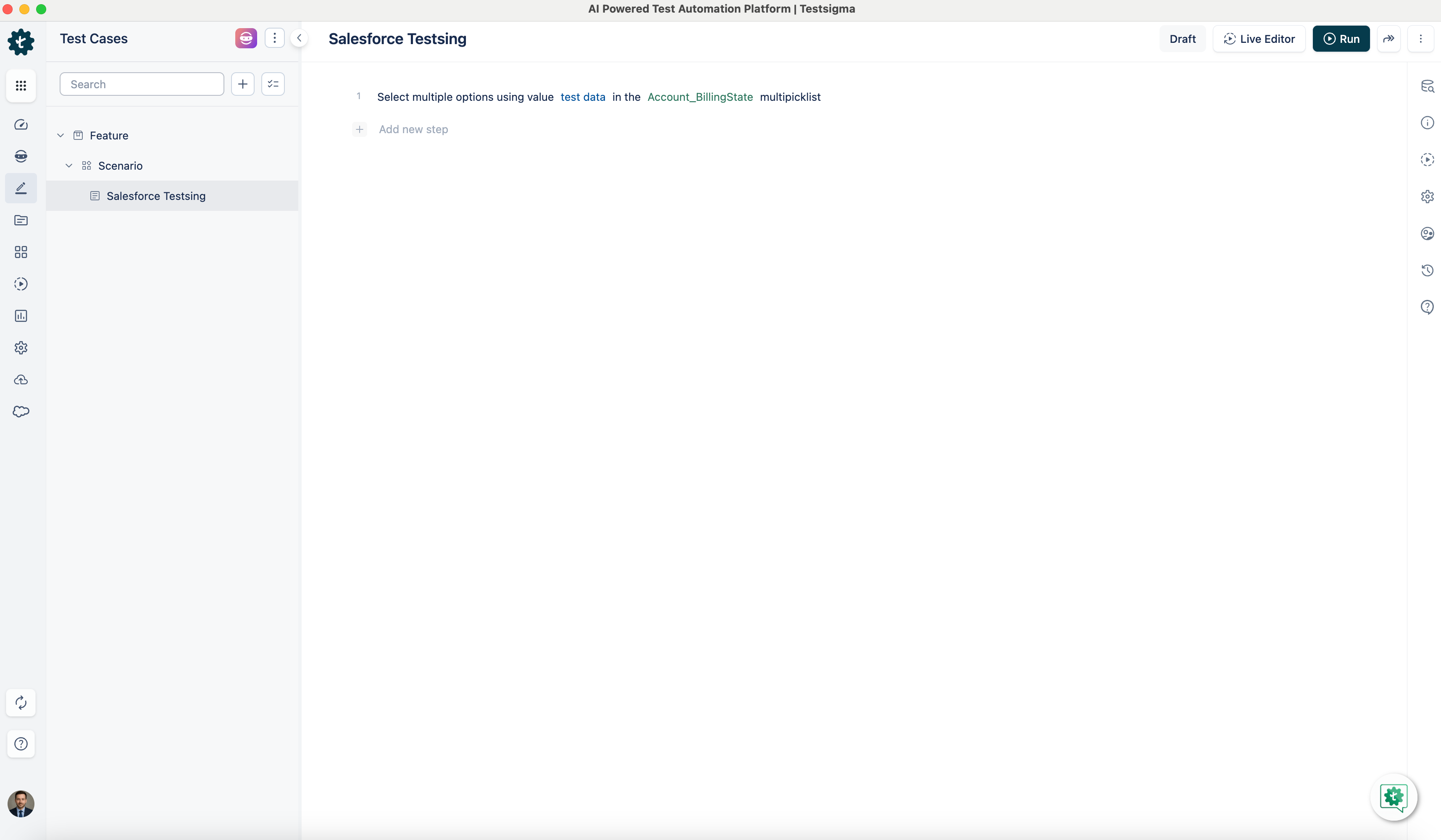

@@ -283,7 +283,7 @@ Use this NLP to select values in **multipicklist** into an **element**.

See the GIF below demonstrating how to use this NLP.

-

+

---

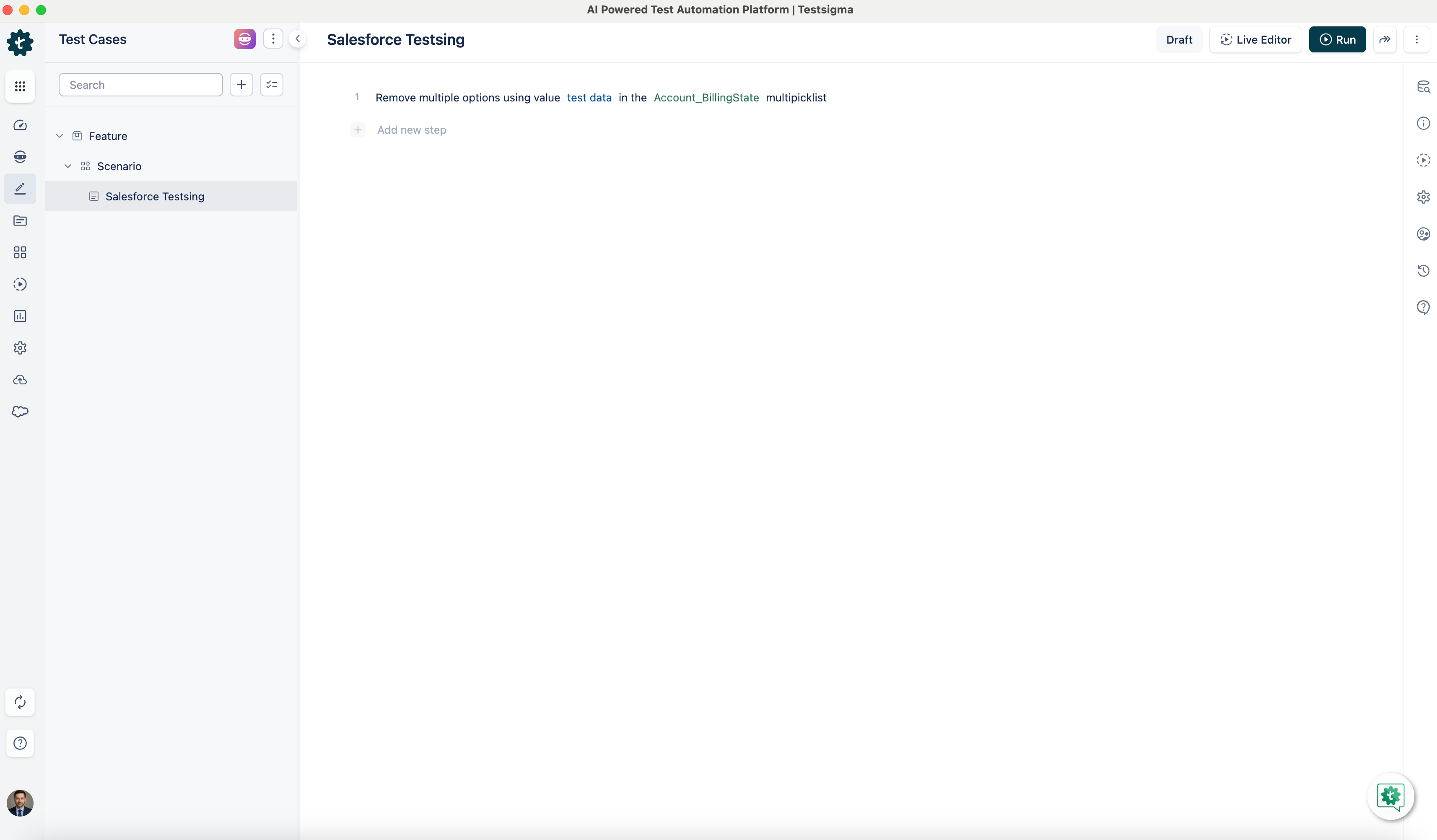

@@ -295,7 +295,7 @@ Use this NLP to remove multiple options from a **multipicklist** into an **eleme

See the GIF below demonstrating how to use this NLP.

-

+

---

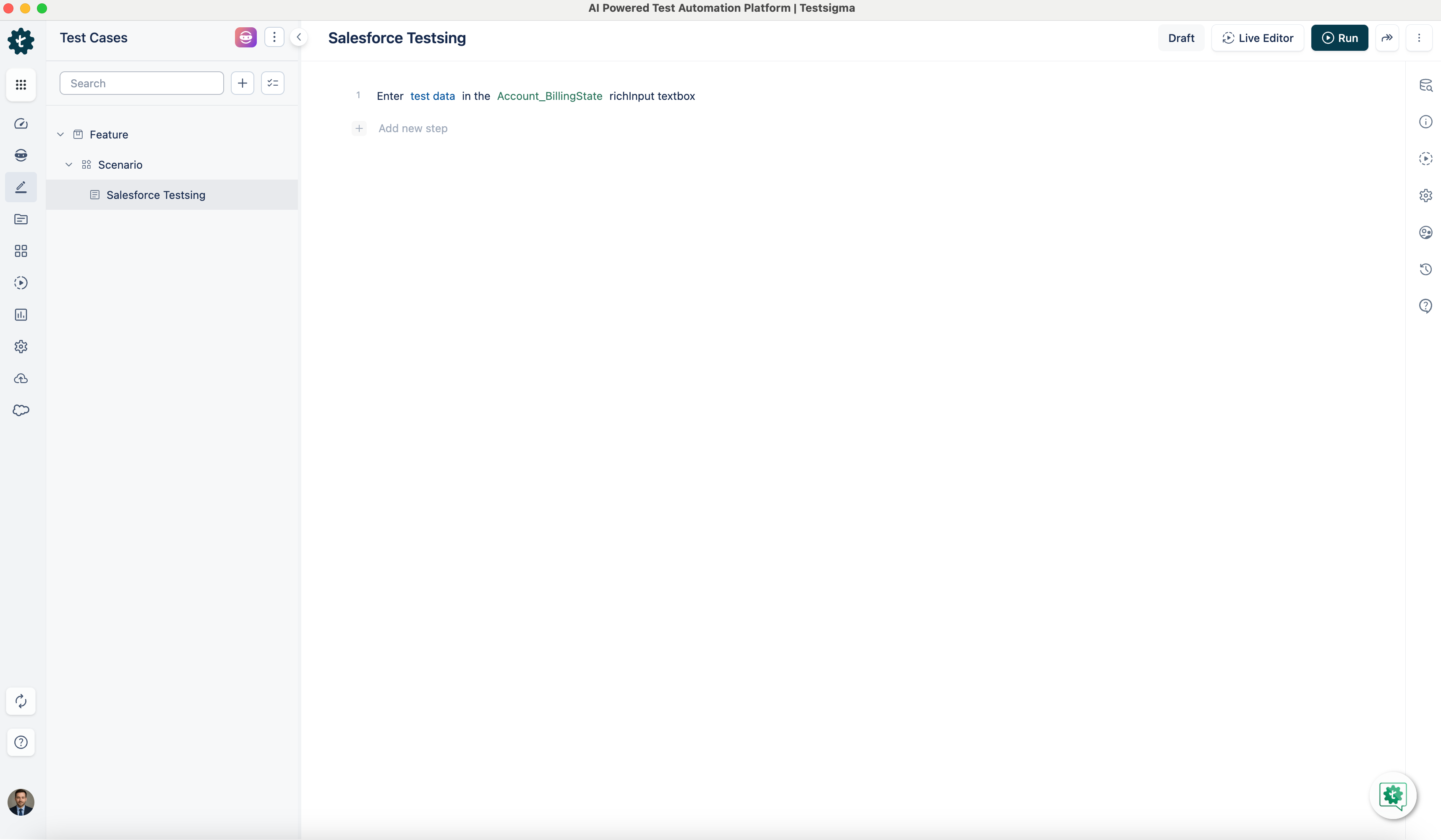

@@ -307,7 +307,7 @@ Use this NLP to enter data in a **RichInput text box**.

See the GIF below demonstrating how to use this NLP.

-

+

---

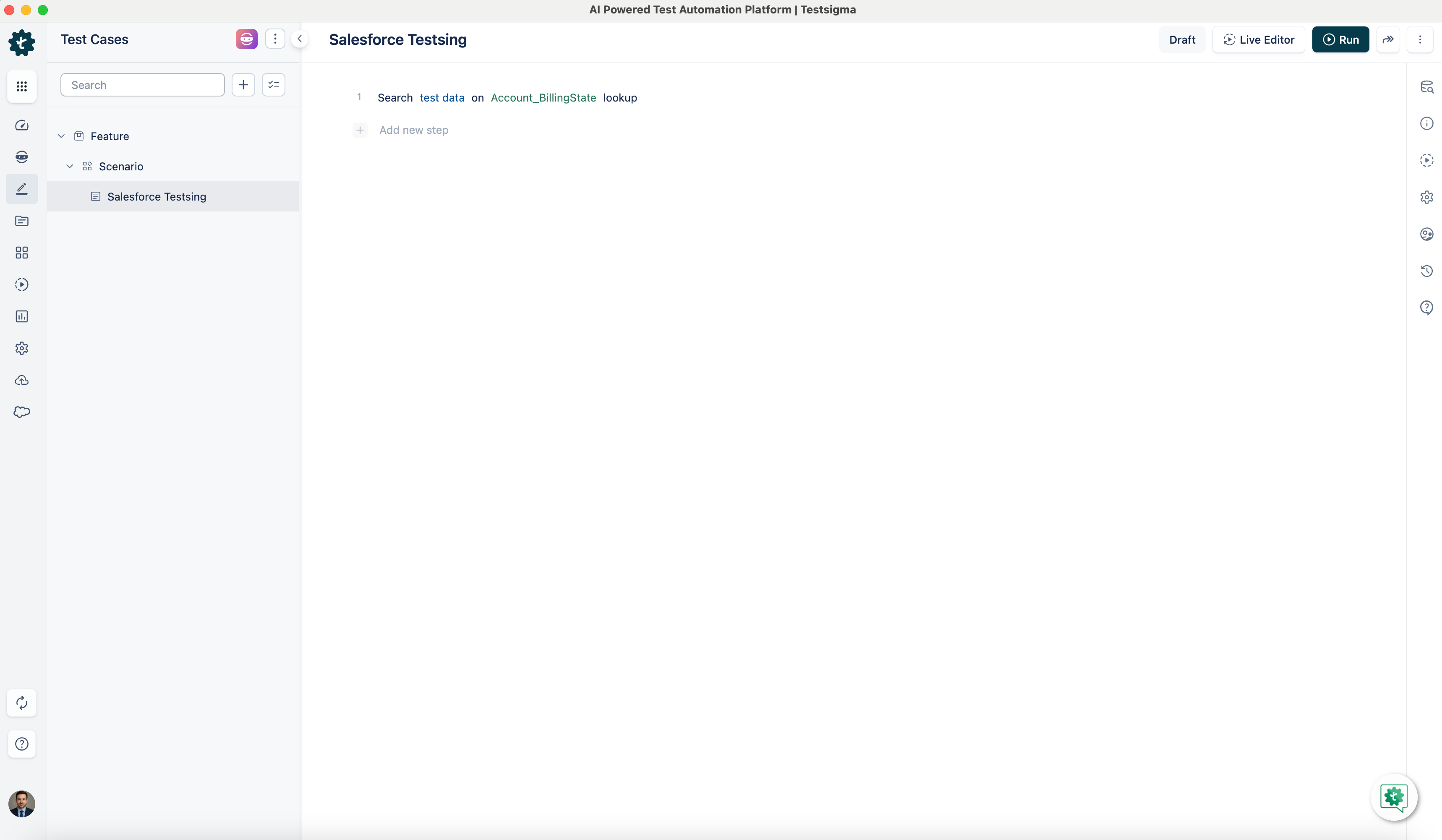

@@ -319,7 +319,7 @@ Use this NLP to select or record in a lookup field.

See the GIF below demonstrating how to use this NLP.

-

+

---

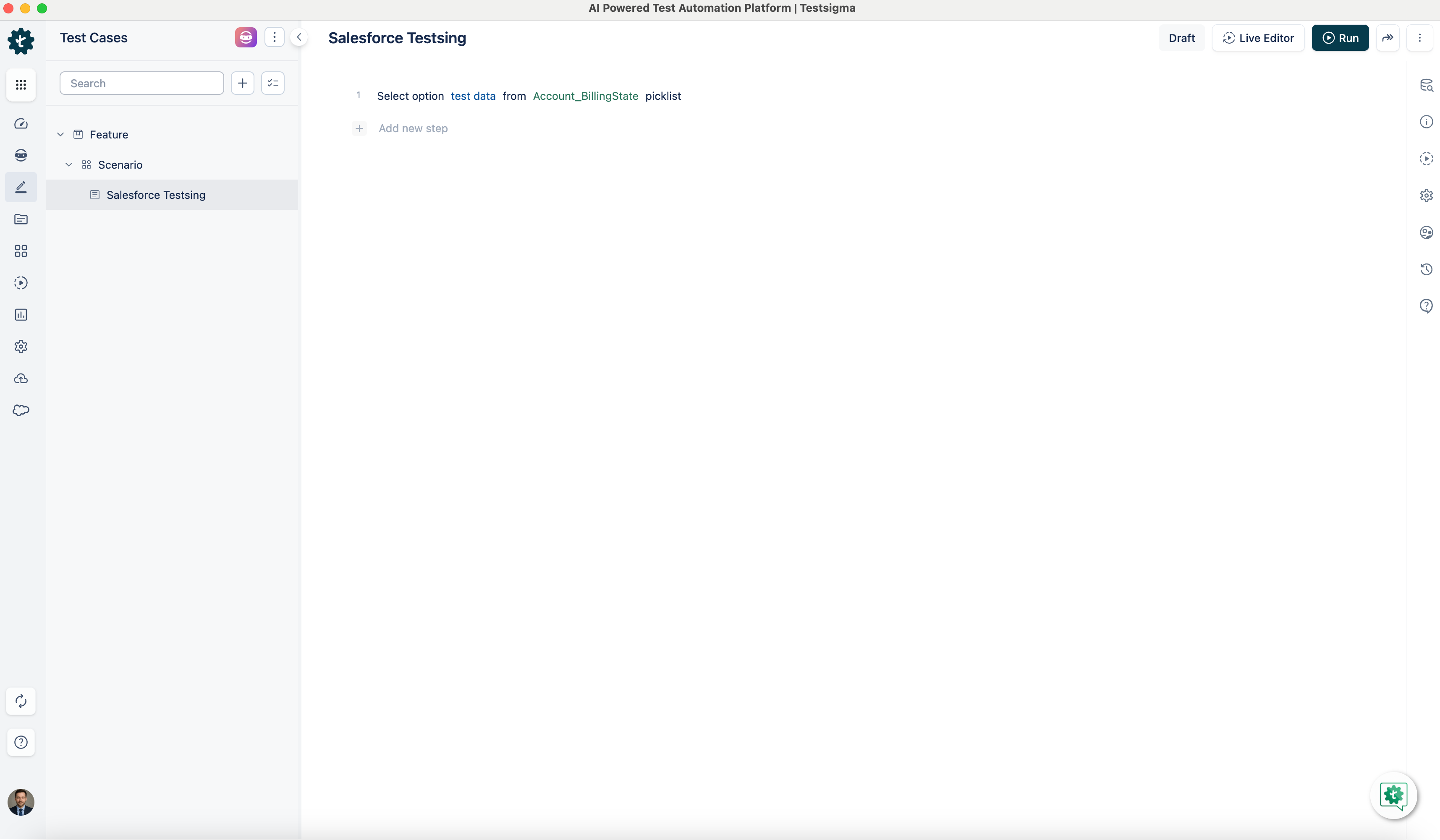

@@ -331,7 +331,7 @@ Use this NLP to select an option from a picklist element.

See the GIF below demonstrating how to use this NLP.

-

+

---

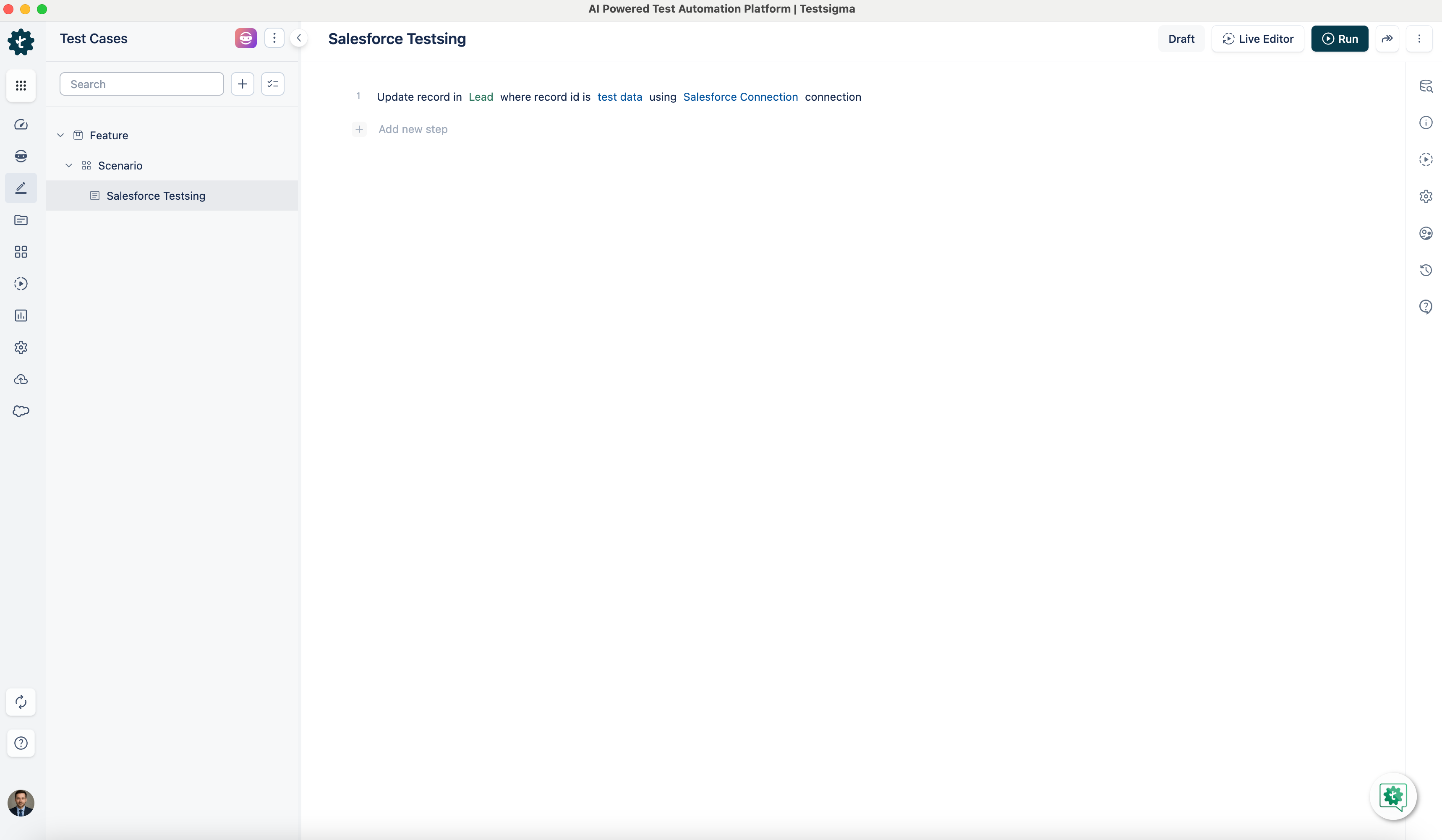

@@ -343,7 +343,7 @@ This NLP feature updates a Salesforce record with the details you enter in the A

See the GIF below demonstrating how to use this NLP.

-

+

---

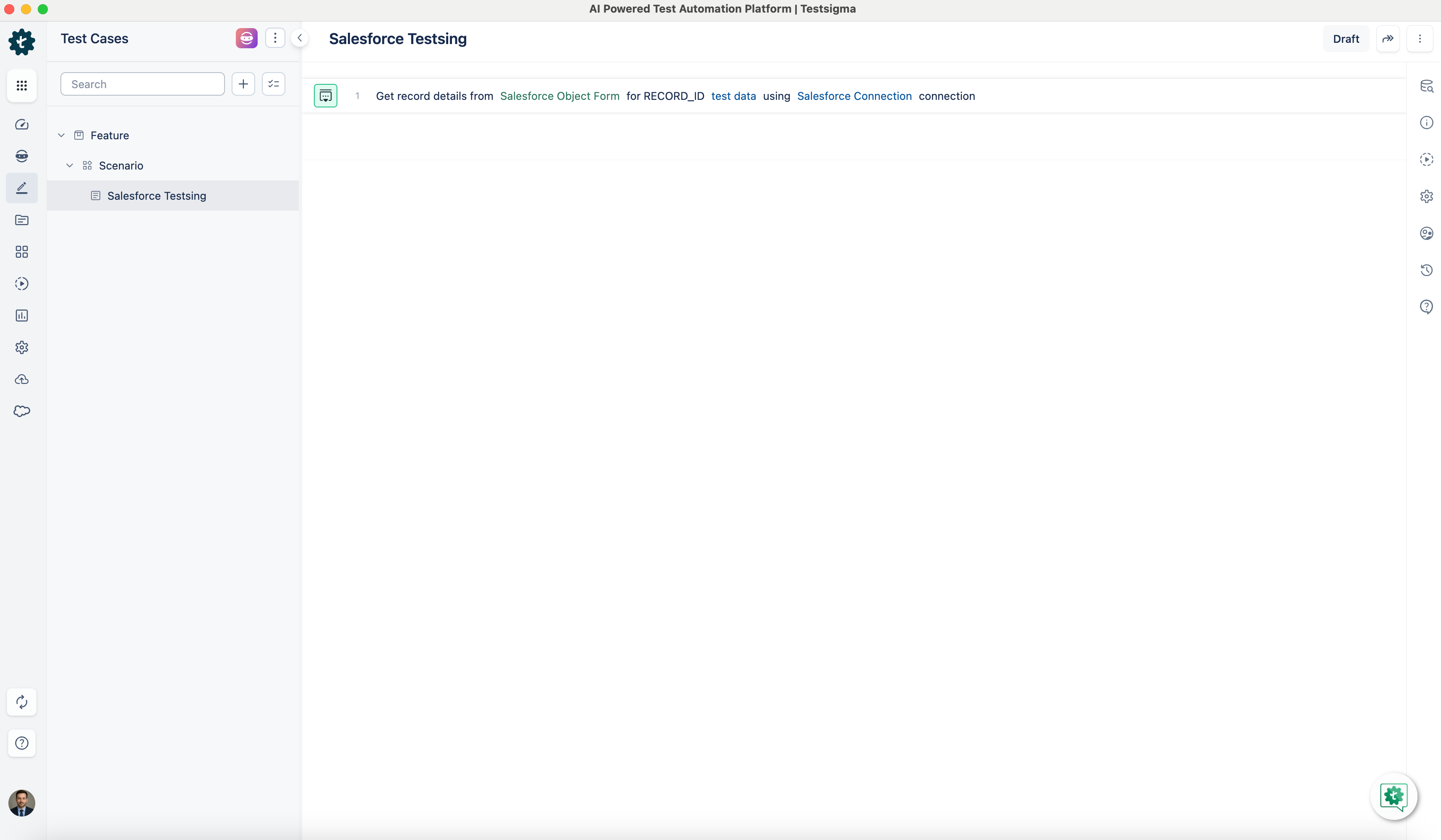

@@ -372,7 +372,7 @@ See the GIF below demonstrating how to use this NLP.

See the GIF below demonstrating how to use this NLP.

-

+

## **NLP 19:**

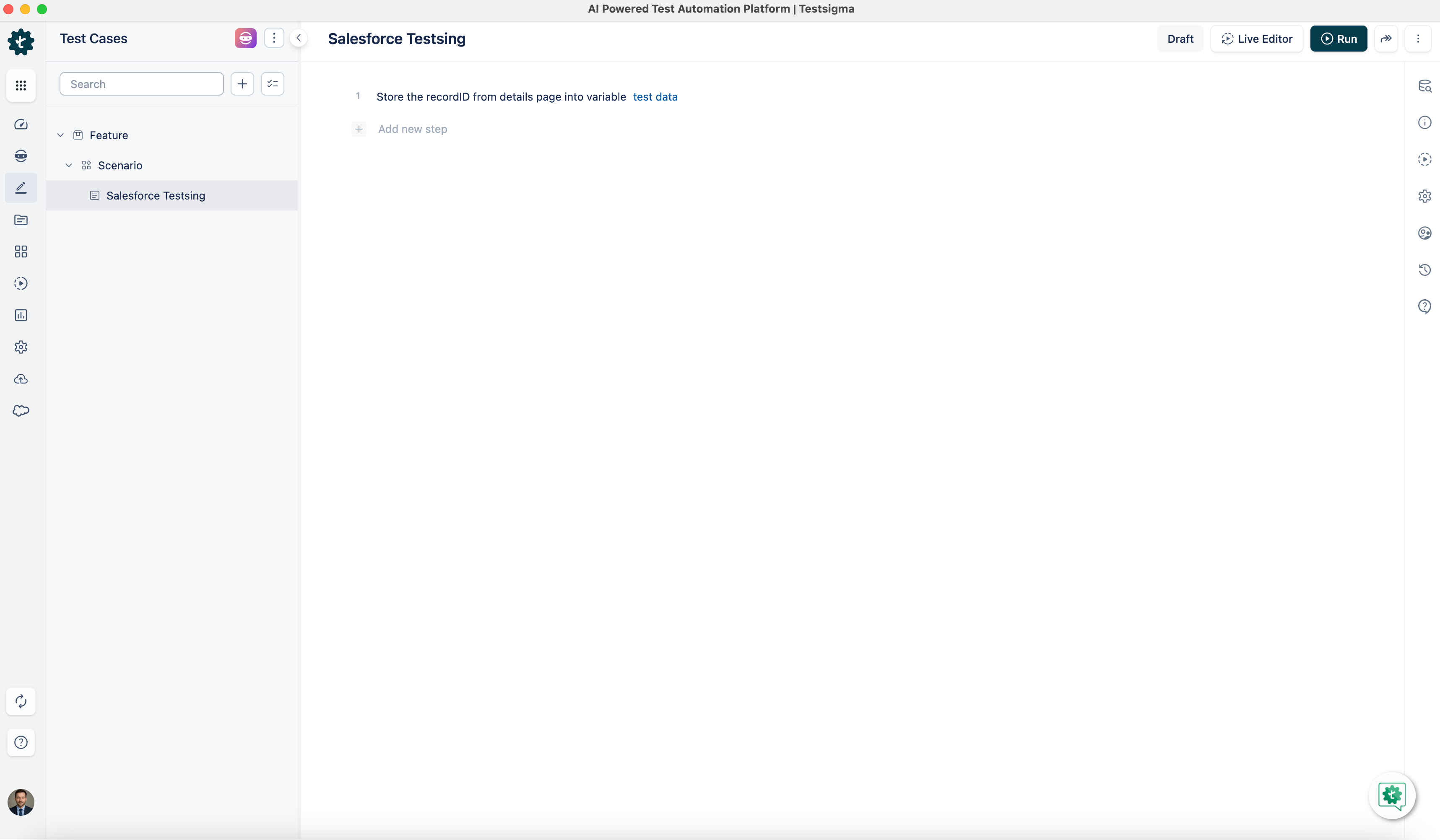

@@ -382,6 +382,6 @@ Use this NLP to automatically capture the record ID from the currently displayed

See the GIF below demonstrating how to use this NLP.

-

+

---

diff --git a/src/pages/docs/test-cases/create-test-steps/actions-and-options-manual/step-settings.md b/src/pages/docs/test-cases/create-test-steps/actions-and-options-manual/step-settings.md

index 8cdf9eb5..4aef6c10 100644

--- a/src/pages/docs/test-cases/create-test-steps/actions-and-options-manual/step-settings.md

+++ b/src/pages/docs/test-cases/create-test-steps/actions-and-options-manual/step-settings.md

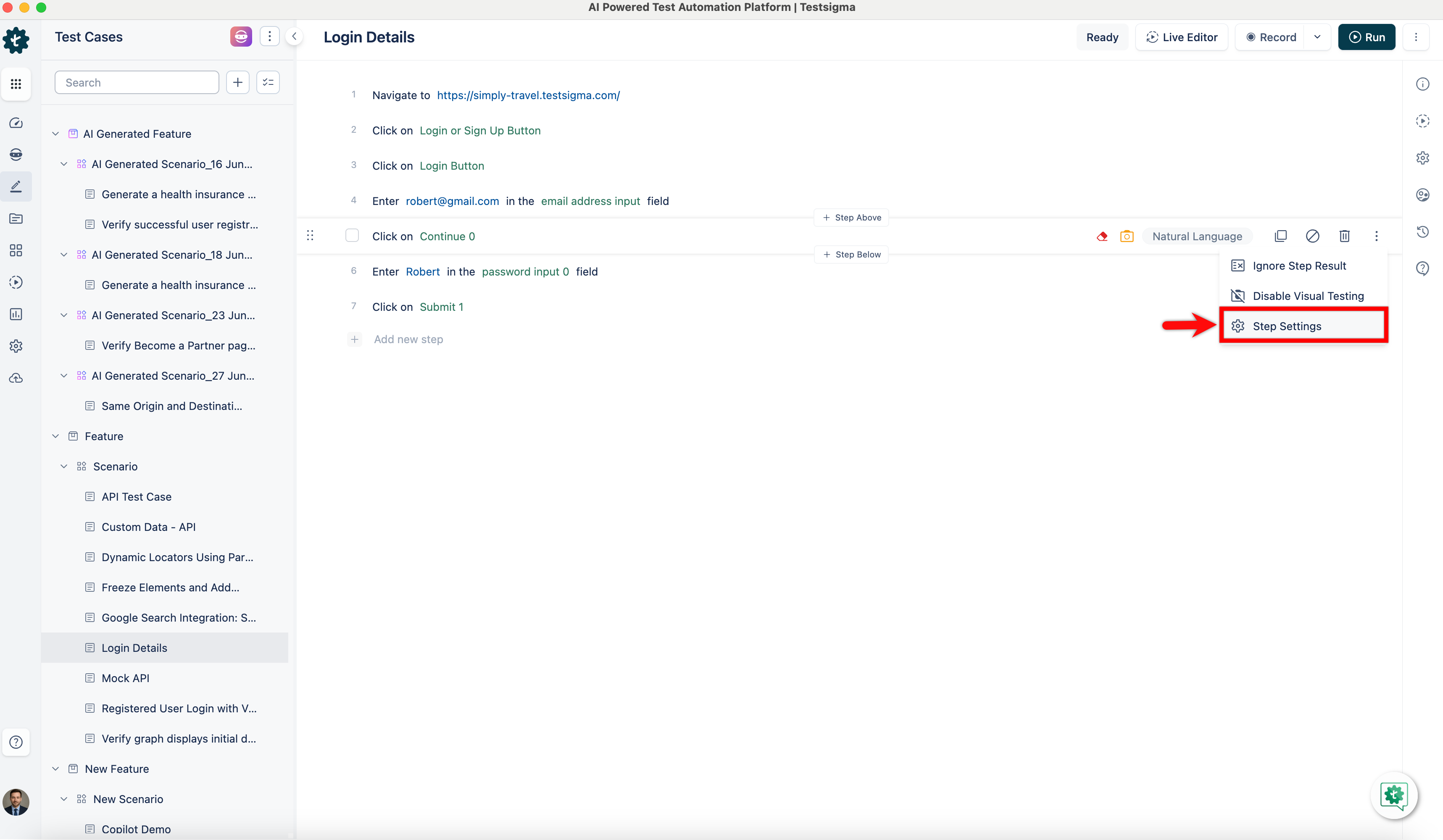

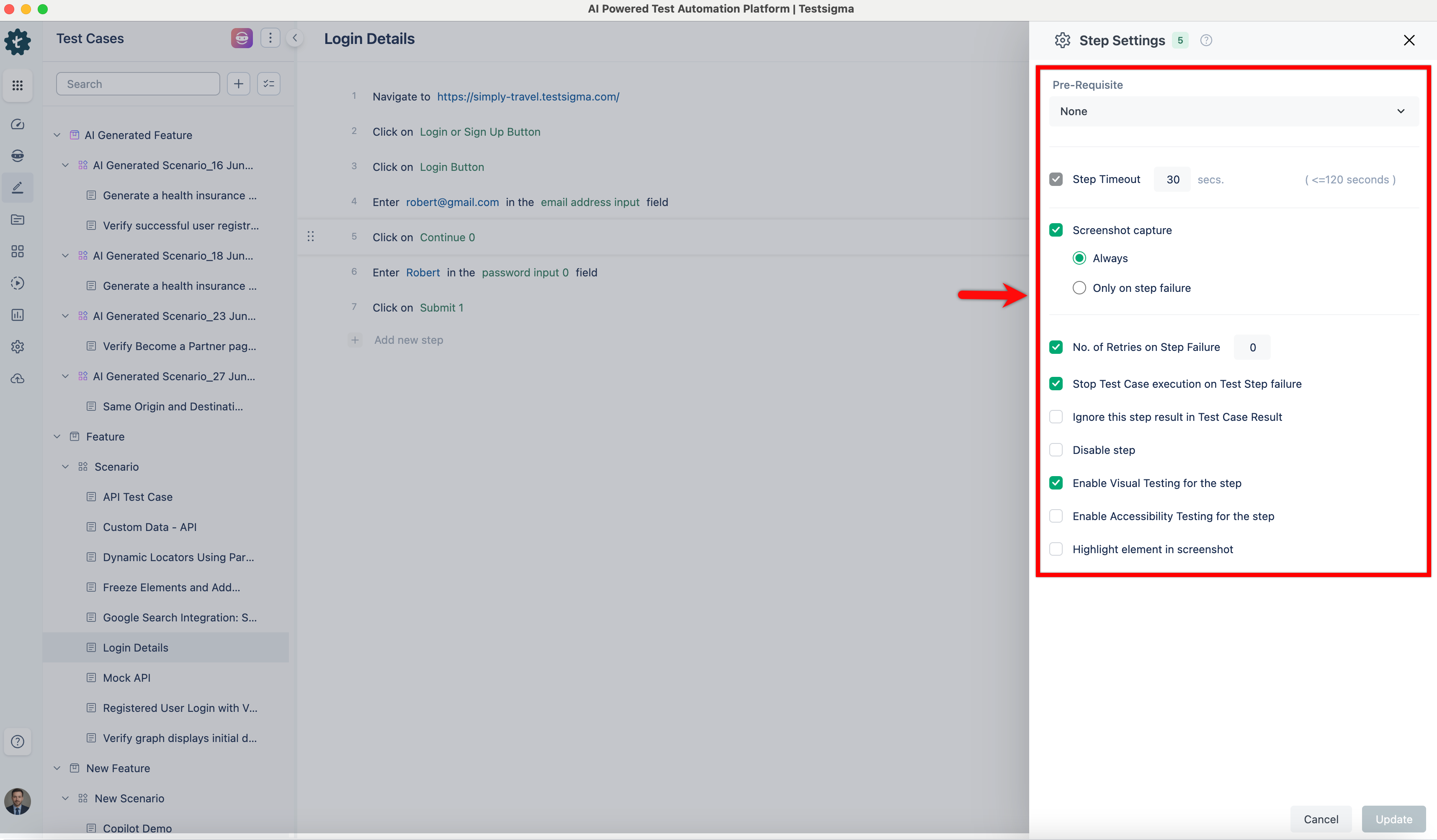

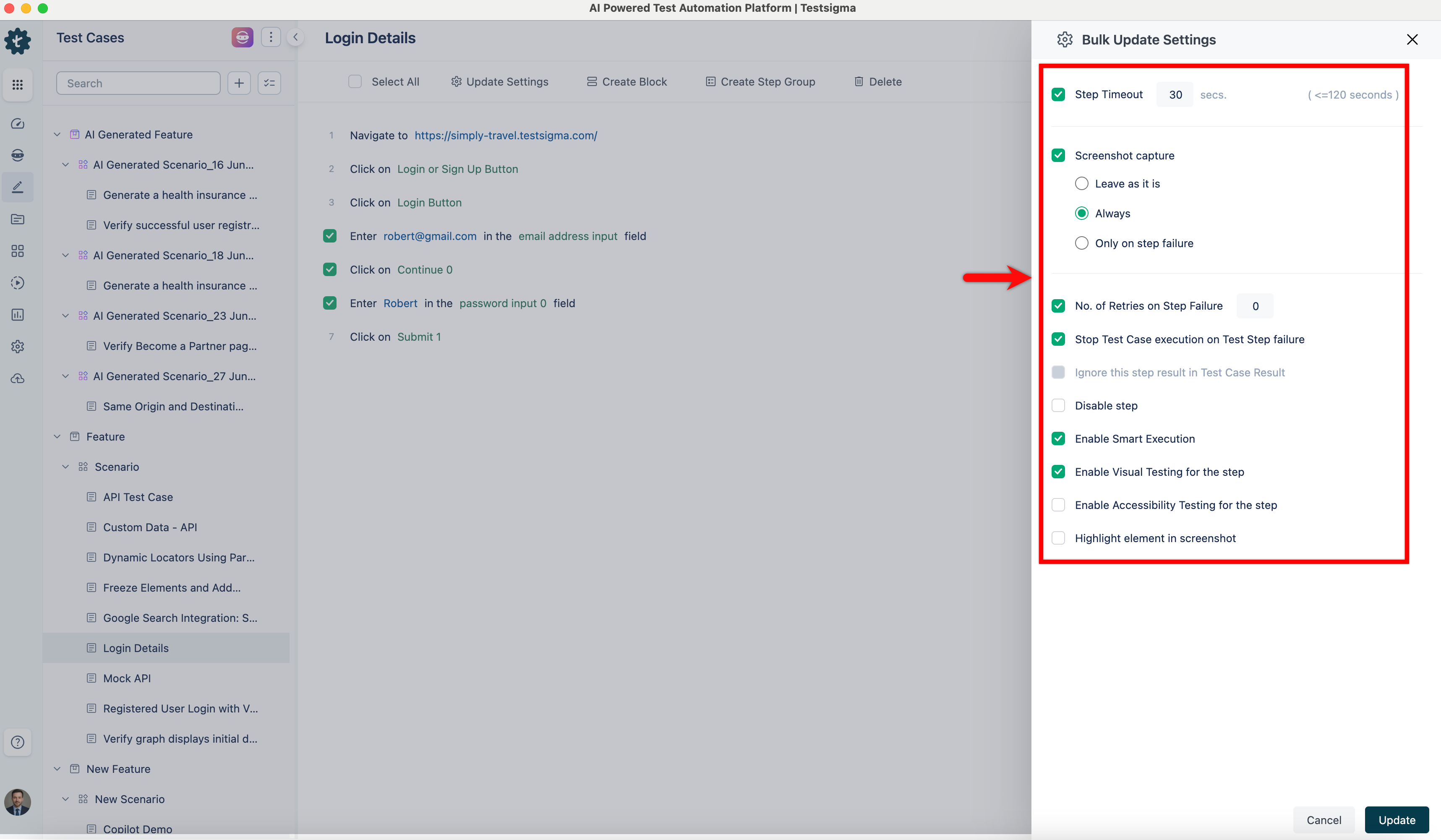

@@ -41,6 +41,7 @@ Controlling the behavior of each test step can be important to enhance your test

## **Use Test Step Settings**

To access **Test Step Settings**, click **kebab menu** on any test step, and choose **Step Settings** from the list.

+

Below is a summary of the available settings:

@@ -56,11 +57,7 @@ Below is a summary of the available settings:

|**Enable Visual Testing for the Step**|You can disable the test step by choosing this option, and the test step will not run. By default, this option is unchecked.|

|**Highlight element in screenshot**|Choose this option to highlight elements on the product screen for each step. Contact Testsigma support to enable this feature.

Navigation: **Settings > Preferences > Highlight element in screenshot**

|

-Here’s a quick GIF demonstrating how to access & update the test step settings.

-

-

-

----

+

## **Configuring Global Timeouts (New)**

@@ -81,7 +78,7 @@ Refer to the screenshot below to enable the **Global Step Timeout** toggle in th

---

## **Update Step Settings**

-To update several test steps, click the **checkboxes** next to the **test steps** you want to update and then click on **Update Settings** in the menu bar to open a popup window where you can make changes to the settings.

+To update several test steps, click the **checkboxes** next to the **test steps** you want to update and then click on **Update Settings** in the menu bar to open a popup window where you can make changes to the settings.

[[info | NOTE:]]

| Update Step Setting does not allow updating the **Pre-Requisite** and **Number of Retries on Step Failure**. You should use **Test Step Settings** for each step individually.

diff --git a/src/pages/docs/test-data/data-generators/mailboxaliasfunctions-function-type.md b/src/pages/docs/test-data/data-generators/mailboxaliasfunctions-function-type.md

index 07830687..91bb1ed6 100644

--- a/src/pages/docs/test-data/data-generators/mailboxaliasfunctions-function-type.md

+++ b/src/pages/docs/test-data/data-generators/mailboxaliasfunctions-function-type.md

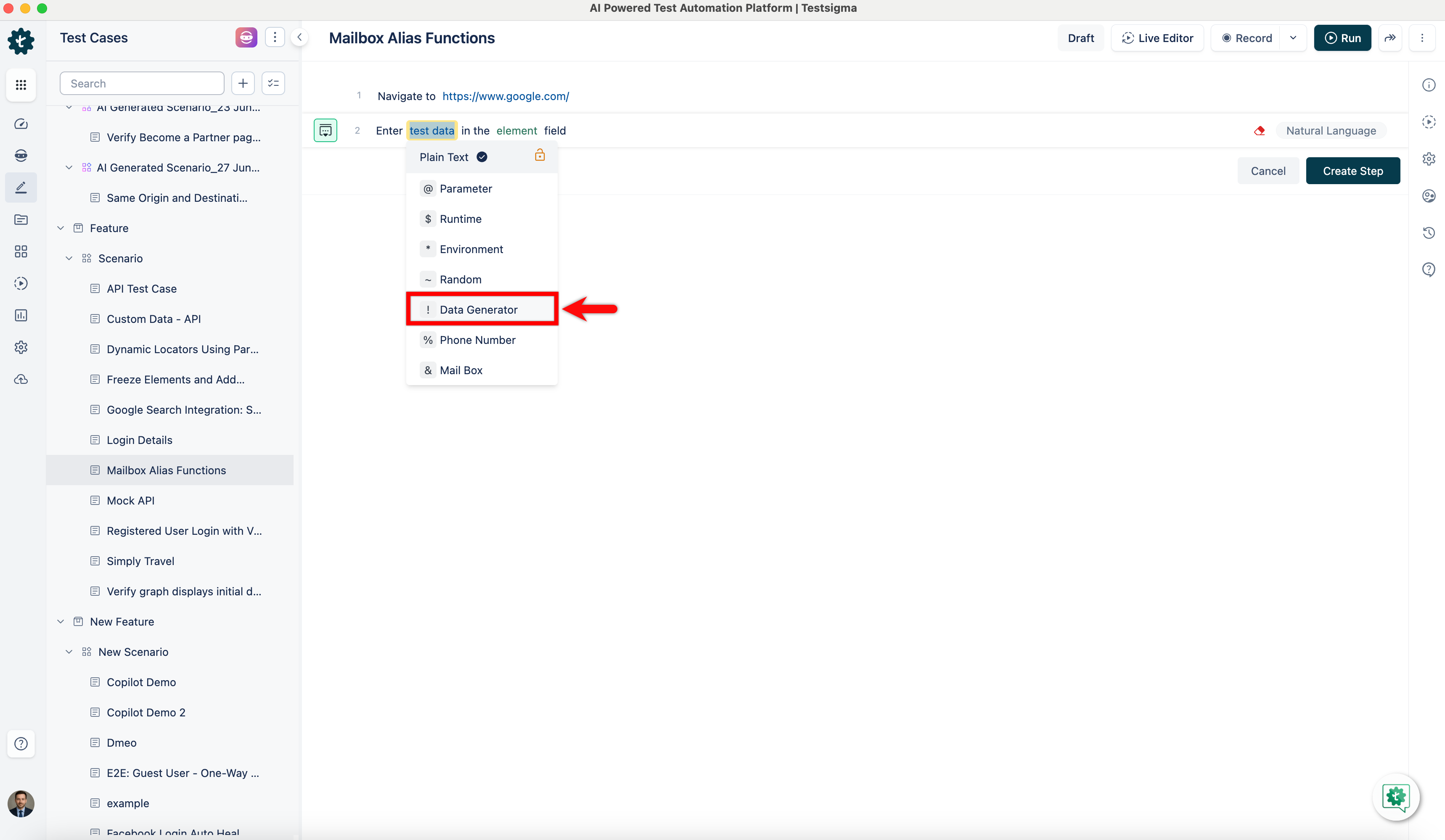

@@ -64,8 +64,10 @@ MailBoxAlias Functions enable dynamic interaction with email data in testing and

1. Use **NLP** to create a **new step** in the test case and include a placeholder for **test data**.

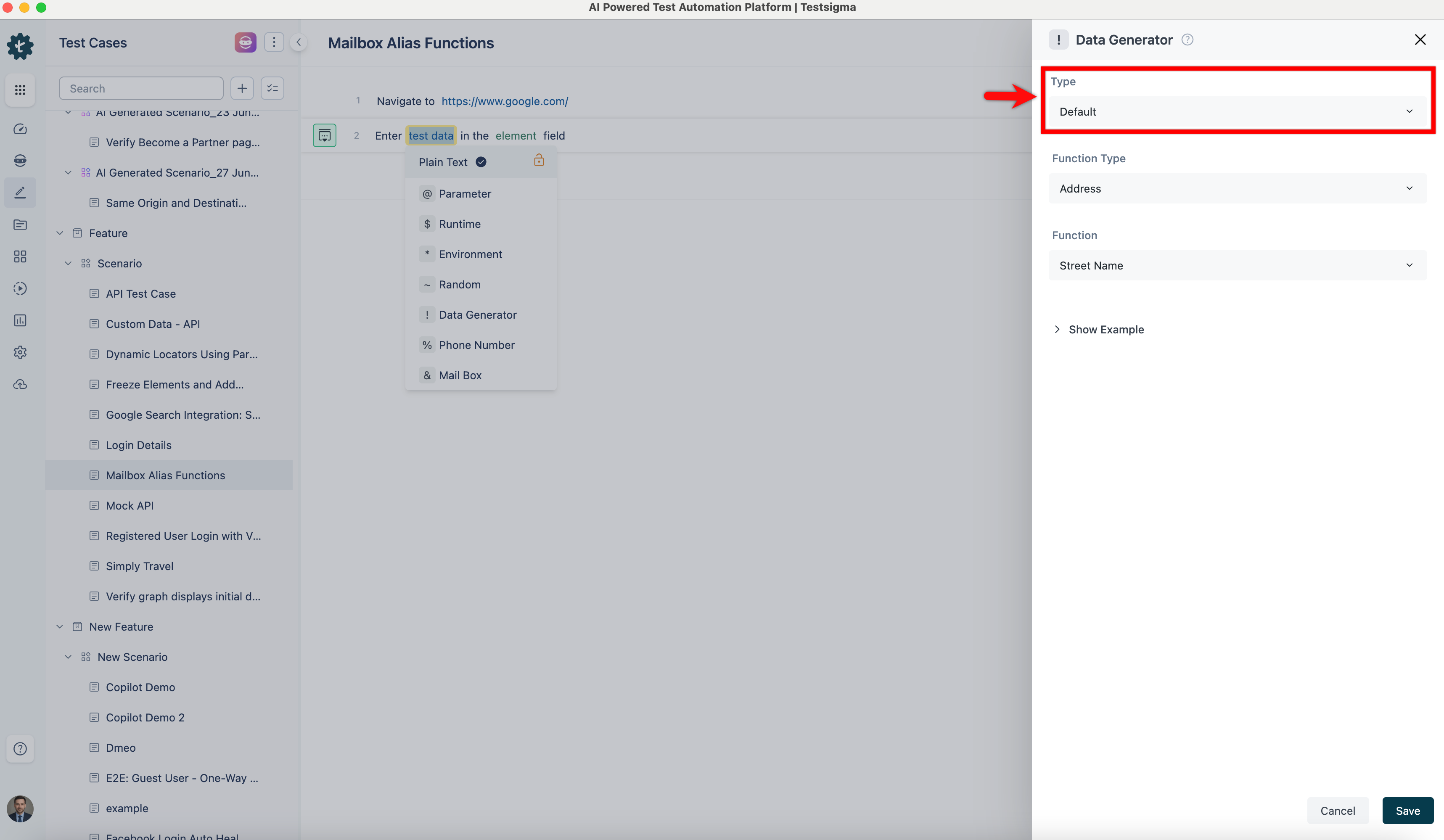

2. Click on the **test data** placeholder. From the **Test Data Types** dropdown menu, select the **! Data Generator** option.

+

3. On the **! Data Generators** overlay screen, select the **Type** to **Default**. This will enable you to use a collection of built-in test data generators.

-4. Select **MailboxAliasFunctions** from the **Function Type** dropdown list.

+

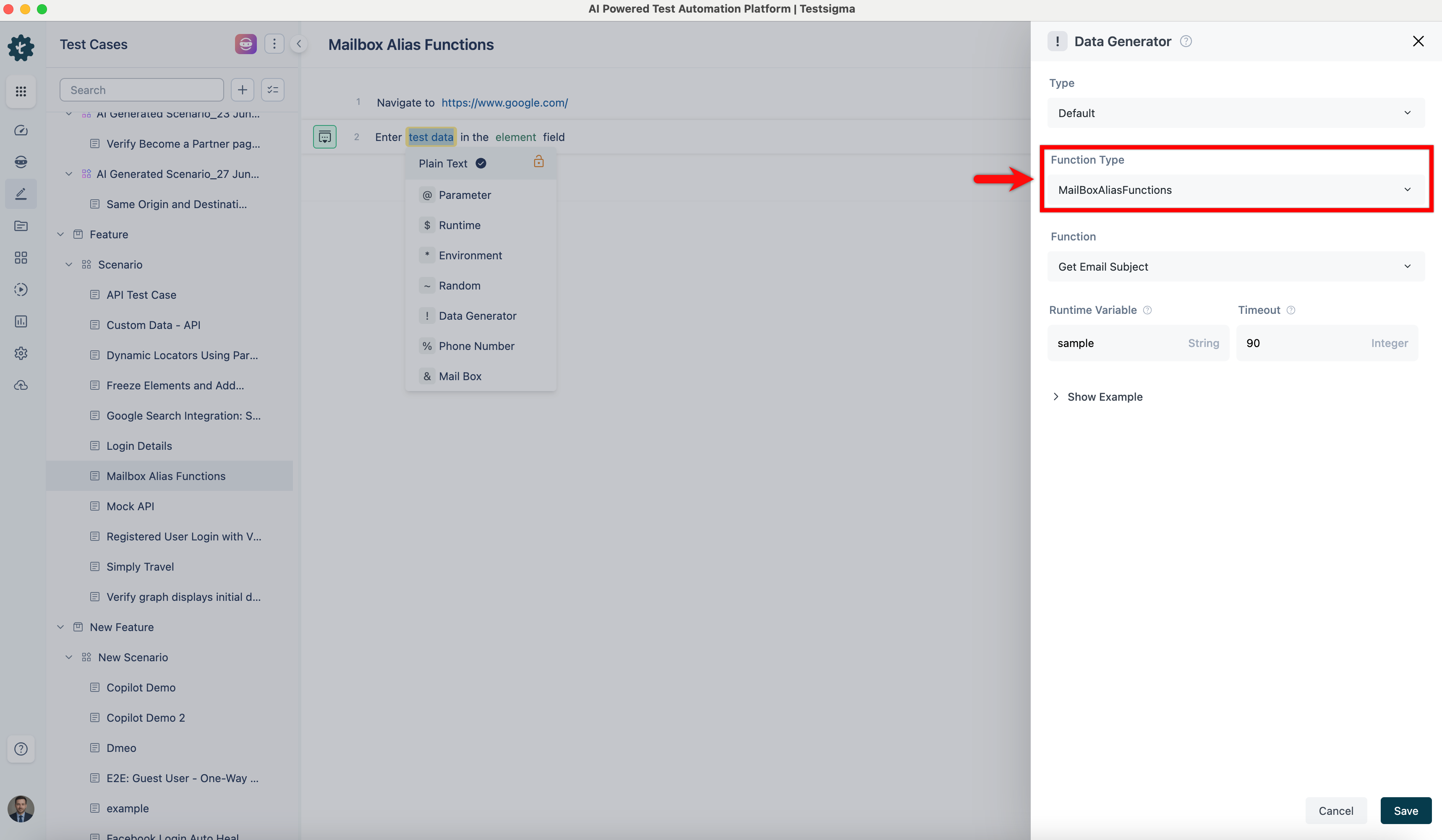

+4. Select **MailboxAliasFunctions** from the **Function Type** dropdown list.

[[info | NOTE:]]

| This action will display a list of built-in functions associated with the MailboxAliasFunctions function type, enabling you to select the appropriate function based on your requirements.

diff --git a/src/pages/docs/test-data/data-generators/namefunctions-function-type.md b/src/pages/docs/test-data/data-generators/namefunctions-function-type.md

index e71a73d8..dc059442 100644

--- a/src/pages/docs/test-data/data-generators/namefunctions-function-type.md

+++ b/src/pages/docs/test-data/data-generators/namefunctions-function-type.md

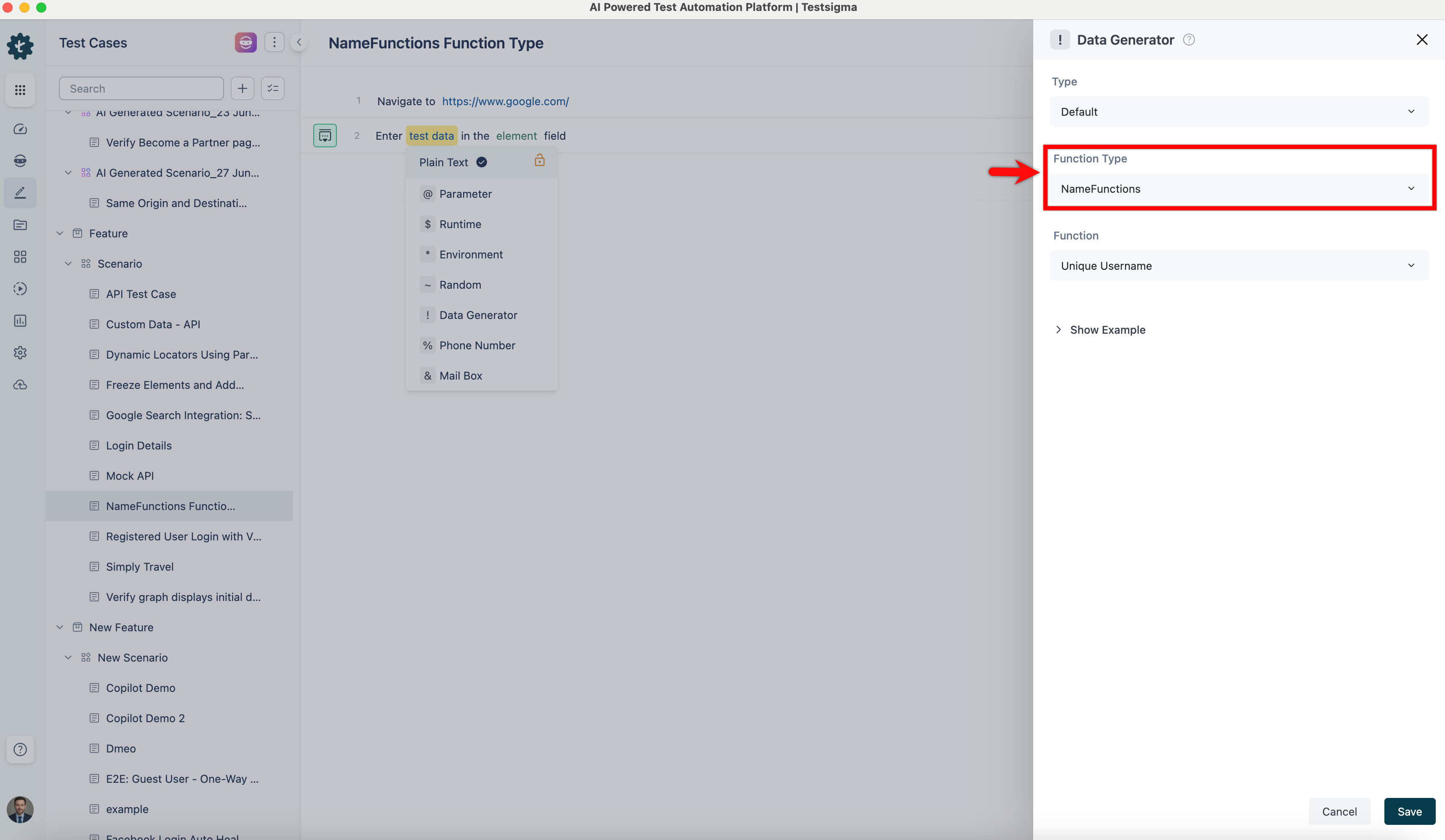

@@ -39,8 +39,10 @@ NameFunctions Function Type in data generators helps you create usernames. It pr

1. Use **NLP** to create a **new step** in the test case and include a placeholder for **test data**.

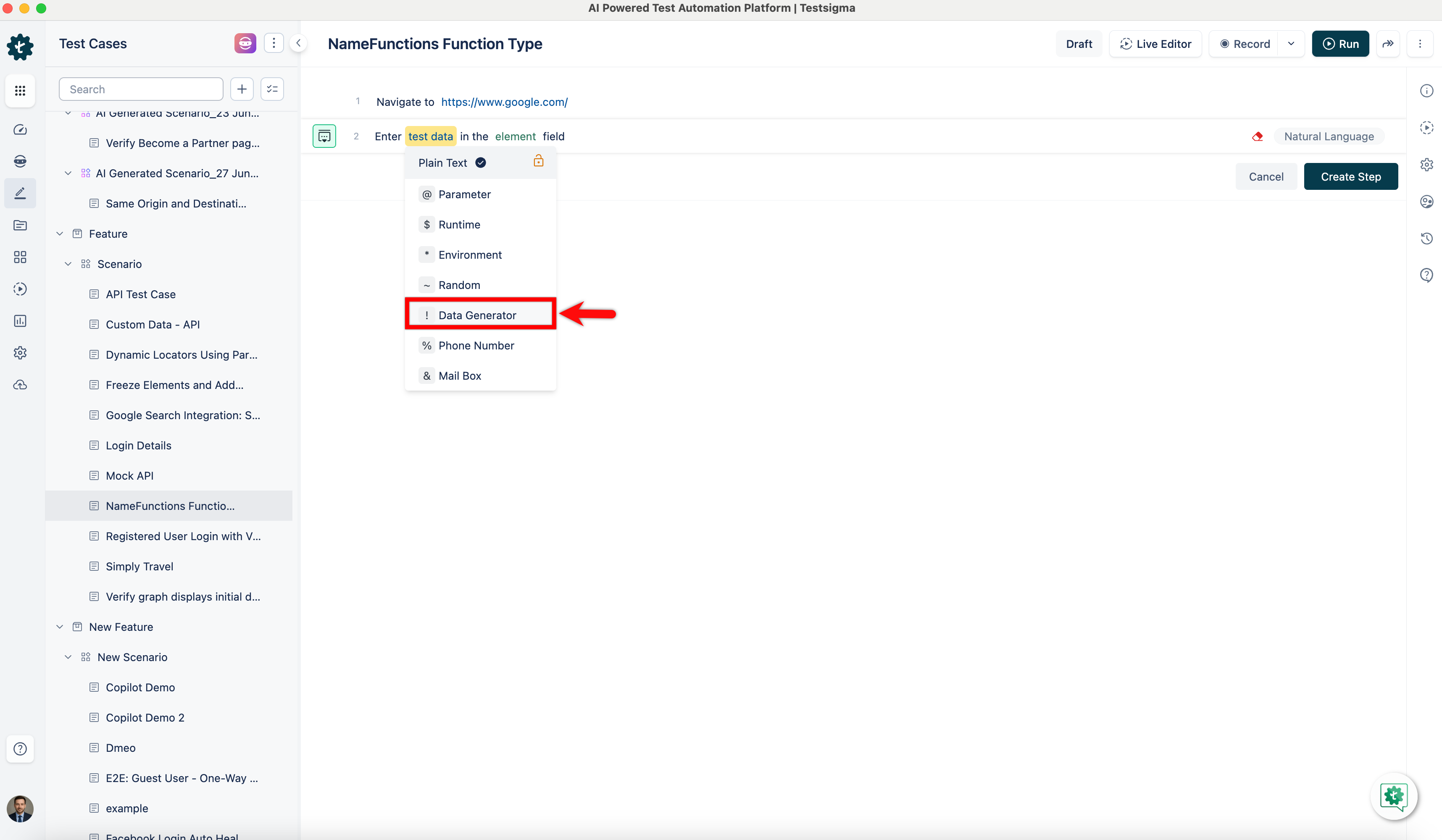

2. Click on the **test data** placeholder. From the **Test Data Types** dropdown menu, select the **! Data Generator** option.

+

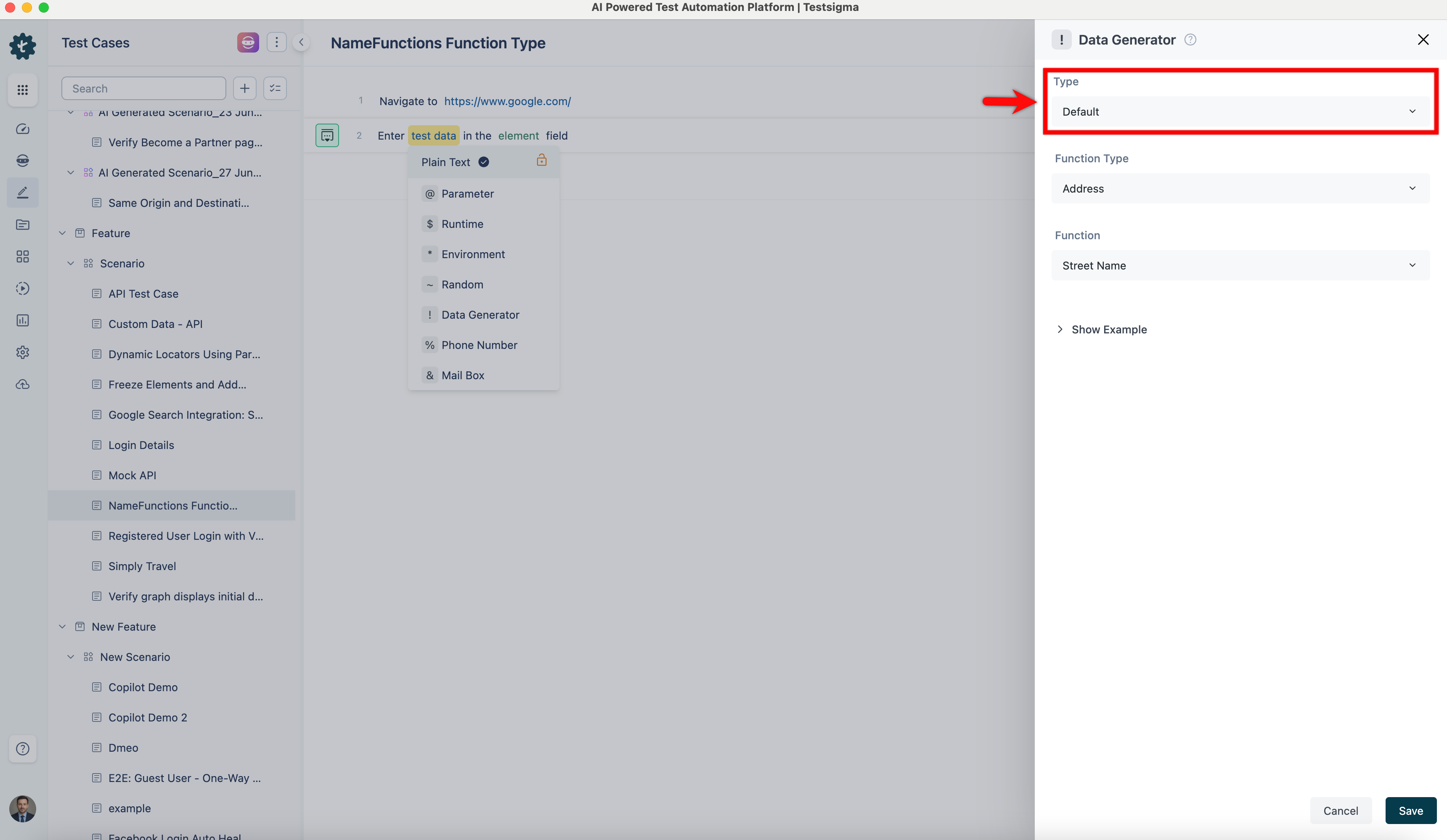

3. On the **! Data Generators** overlay screen, select the **Type** to **Default**. This will enable you to use a collection of built-in test data generators.

-4. Select **NameFunctions** from the **Function Type** dropdown list.

+

+4. Select **NameFunctions** from the **Function Type** dropdown list.

[[info | NOTE:]]

| This action will display a list of built-in functions associated with the NameFunctions function type, enabling you to select the appropriate function based on your requirements.

diff --git a/src/pages/docs/test-data/types/environment.md b/src/pages/docs/test-data/types/environment.md

index f1862f4d..3952f555 100644

--- a/src/pages/docs/test-data/types/environment.md

+++ b/src/pages/docs/test-data/types/environment.md

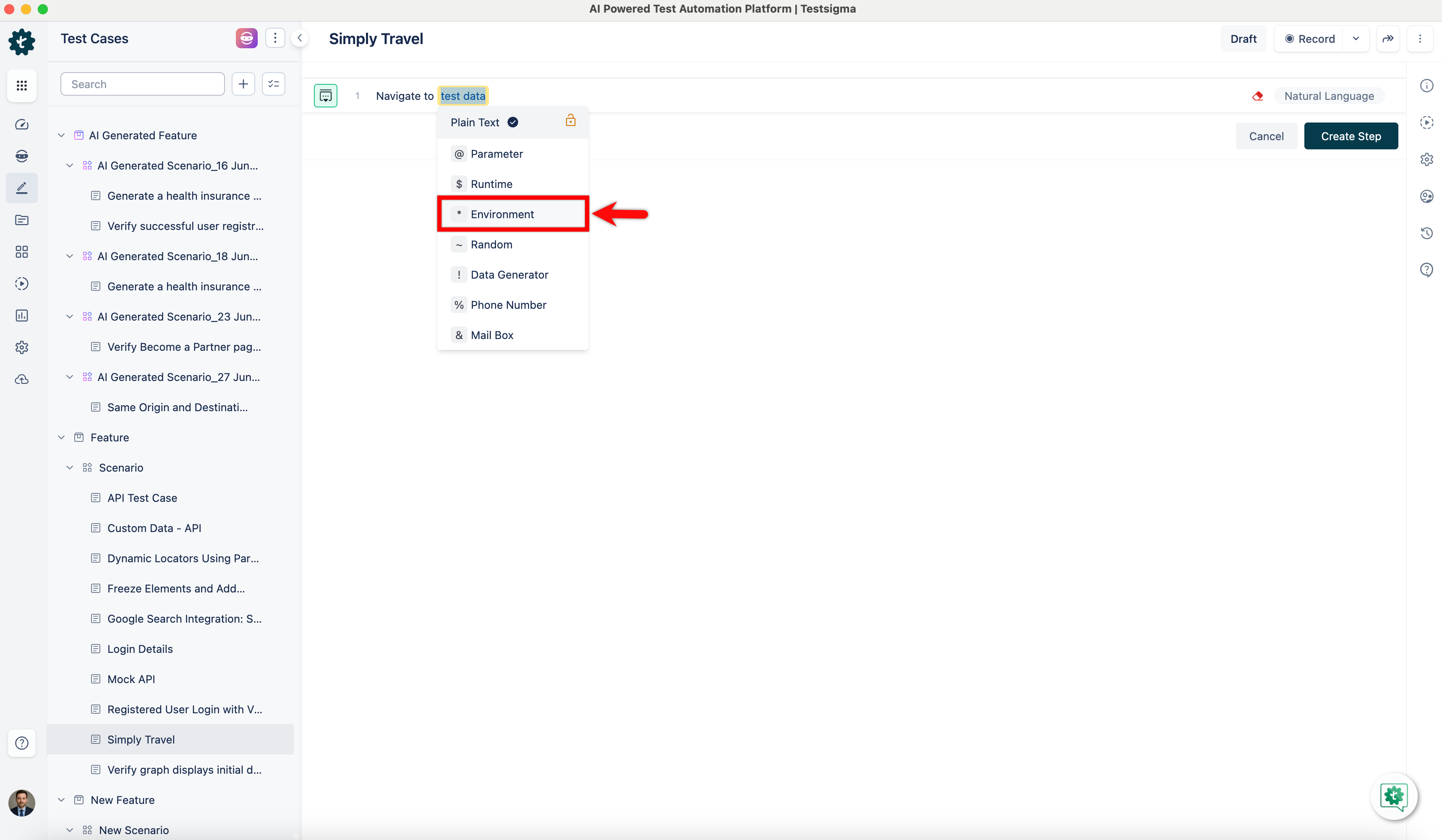

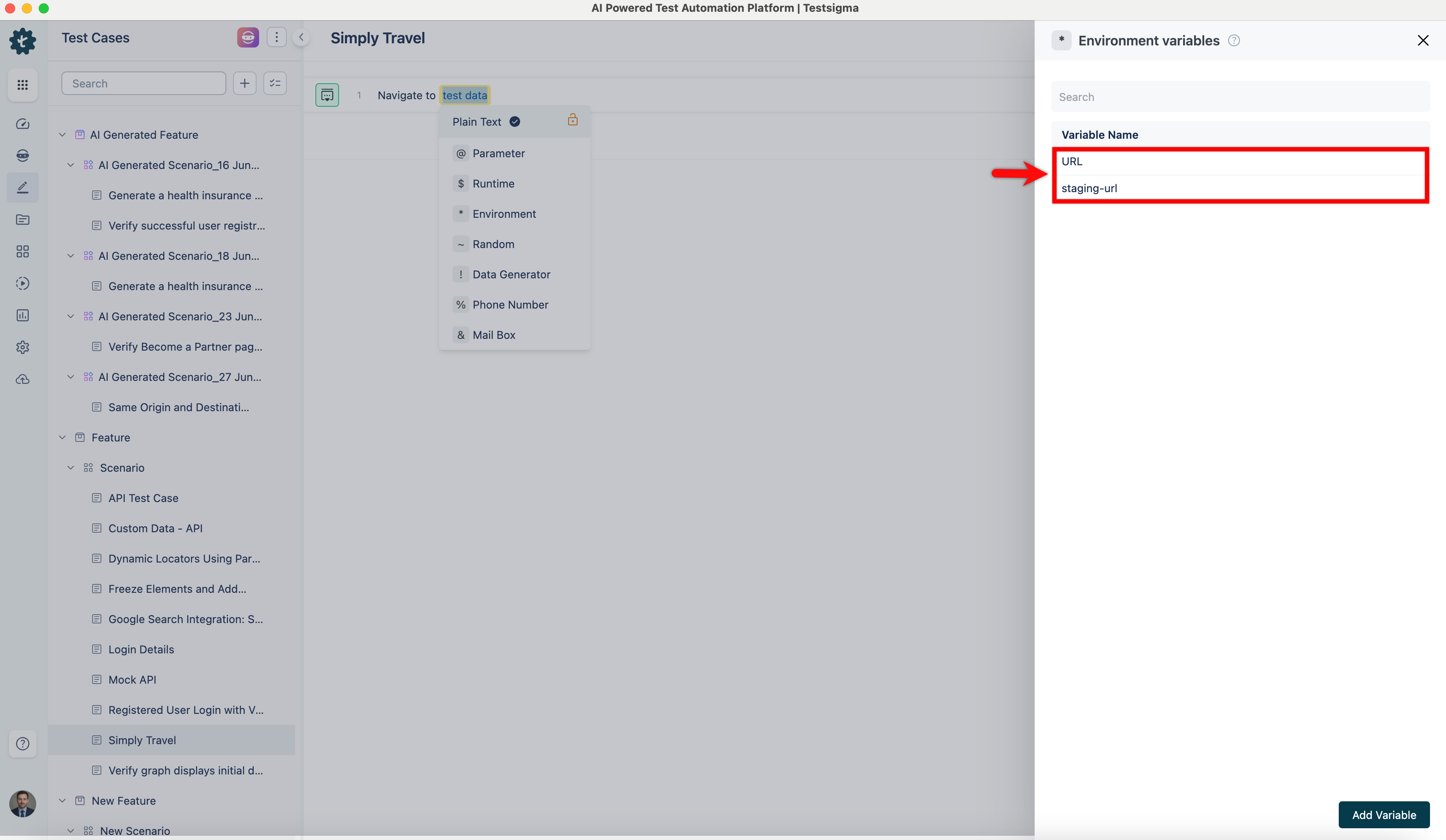

@@ -56,21 +56,21 @@ In Testsigma, you can handle and use specific sets of test data linked to differ

1. Use **NLP** to create a **new step** in the **Test Case** and include a placeholder for **test data**.

2. Click the **test data** placeholder to replace it, and then select the *** Environment** from the **Test Data Types** dropdown menu.

-3. Select **Environment** in the **Environments** overlay screen from the right-side panel.

+

+3. Select **Environment** in the **Environments** overlay screen from the right-side panel.

---

## **Use Environment in Ad-hoc Run page**

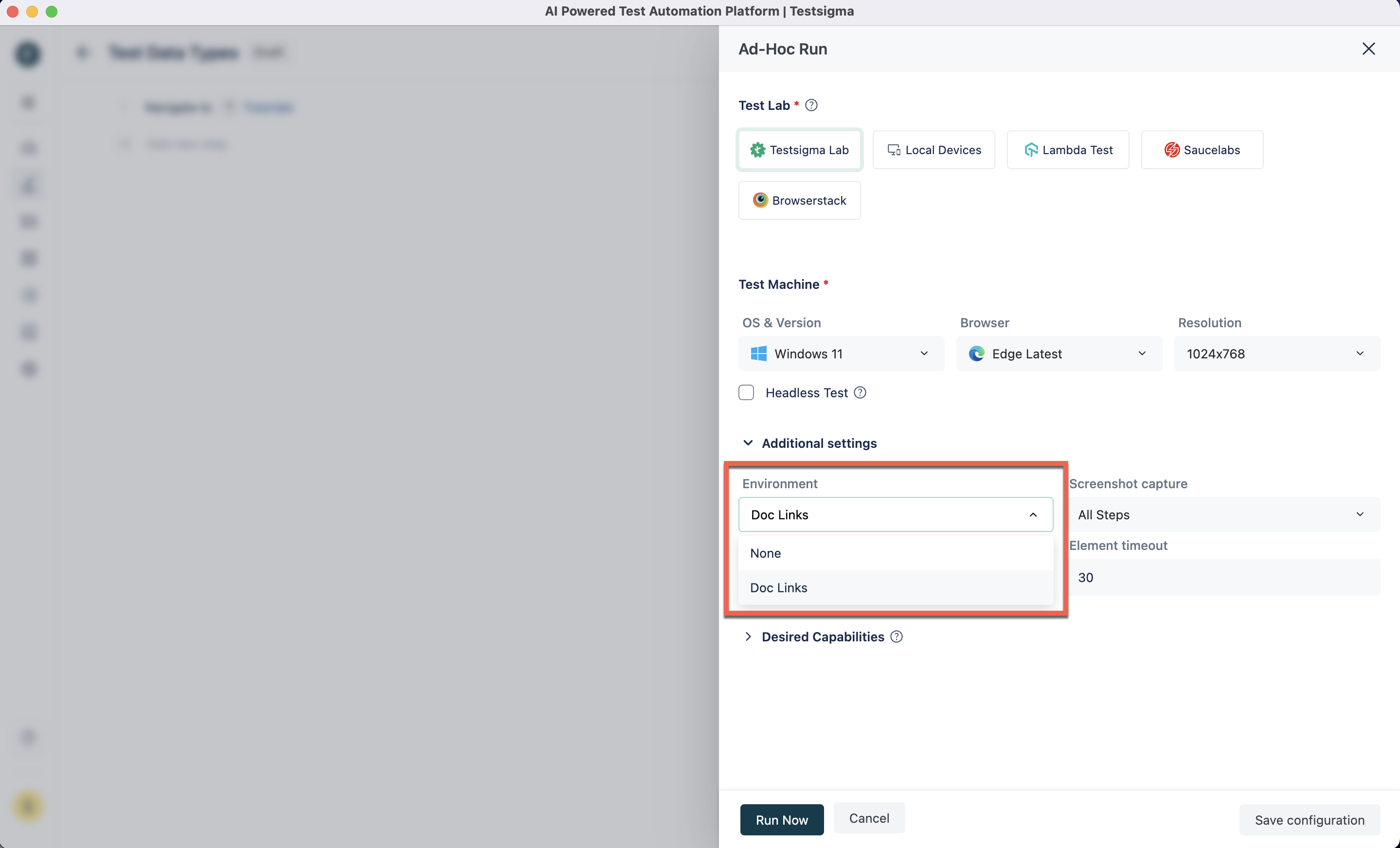

-1. On the **Ad-hoc Run** page, click the right arrow before **Additional Settings** to reveal the feature.

-2. Select **Environmet** from the drop-down list.

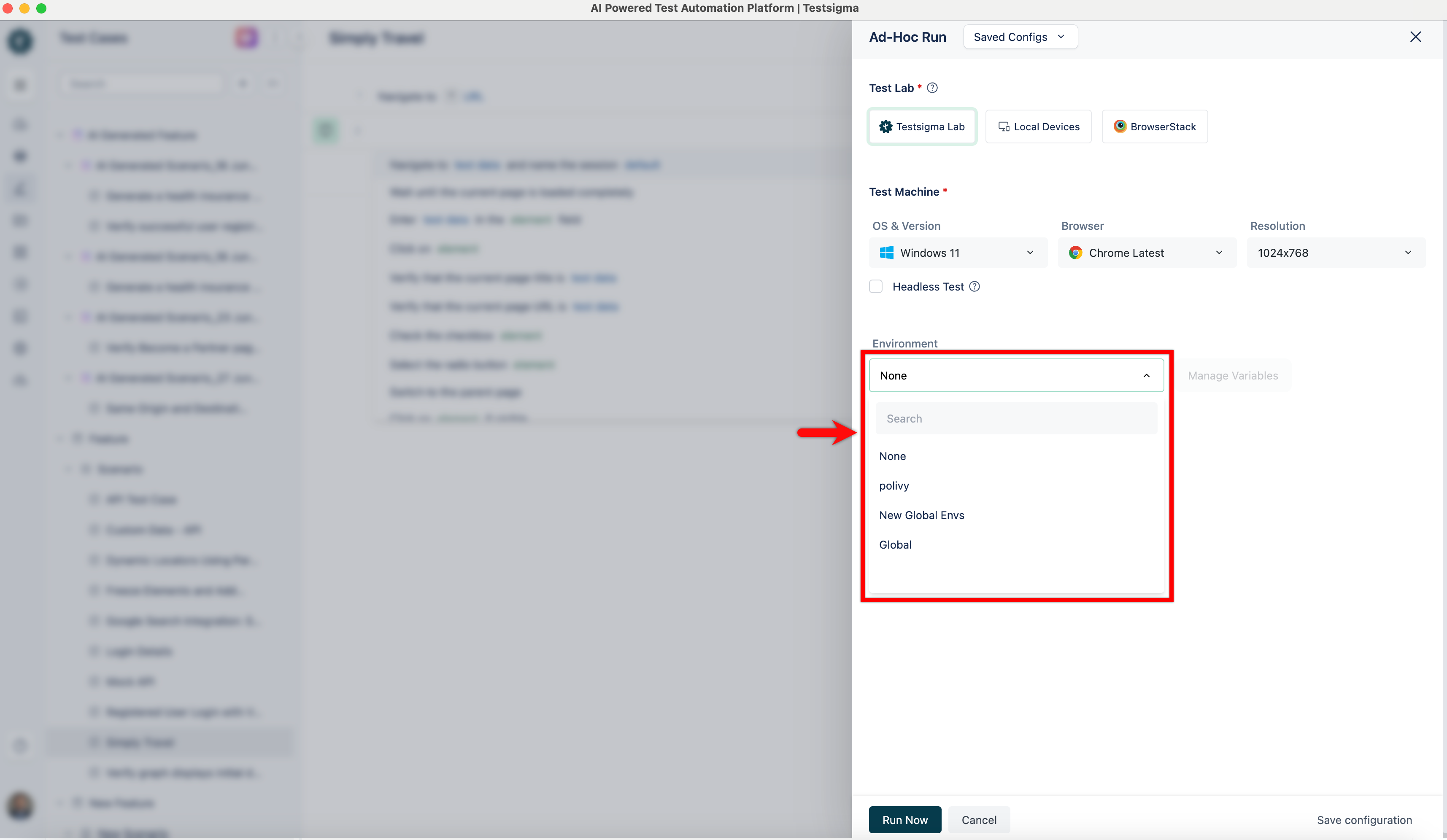

+1. On the **Ad-hoc Run** page, expand **Environment** and select an environment from the drop-down list.

---

## **Use Environment in Test Plan**

1. Navigate to the **Test Plan Settings** tab on the **Create** or **Edit Test Plan** page.

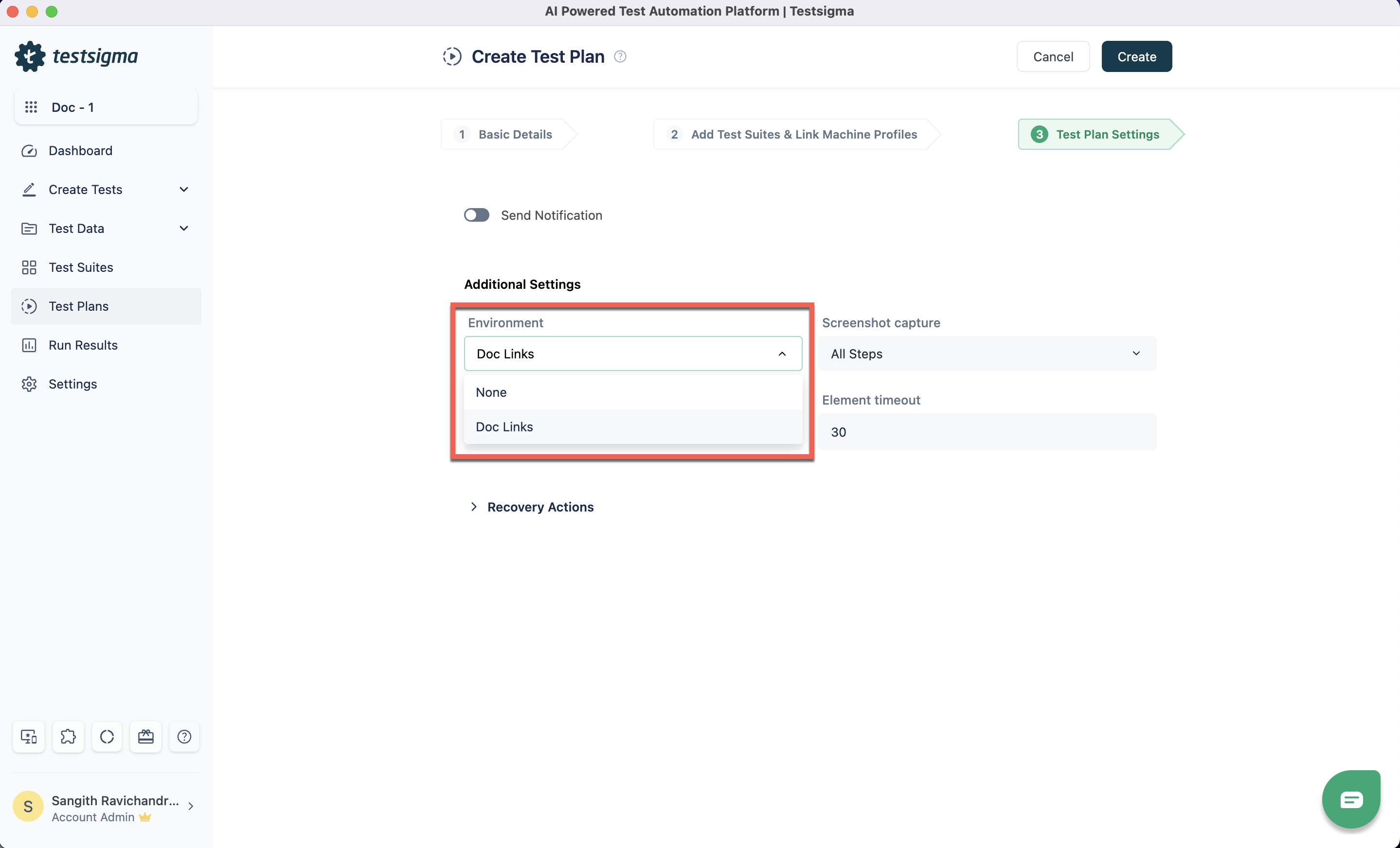

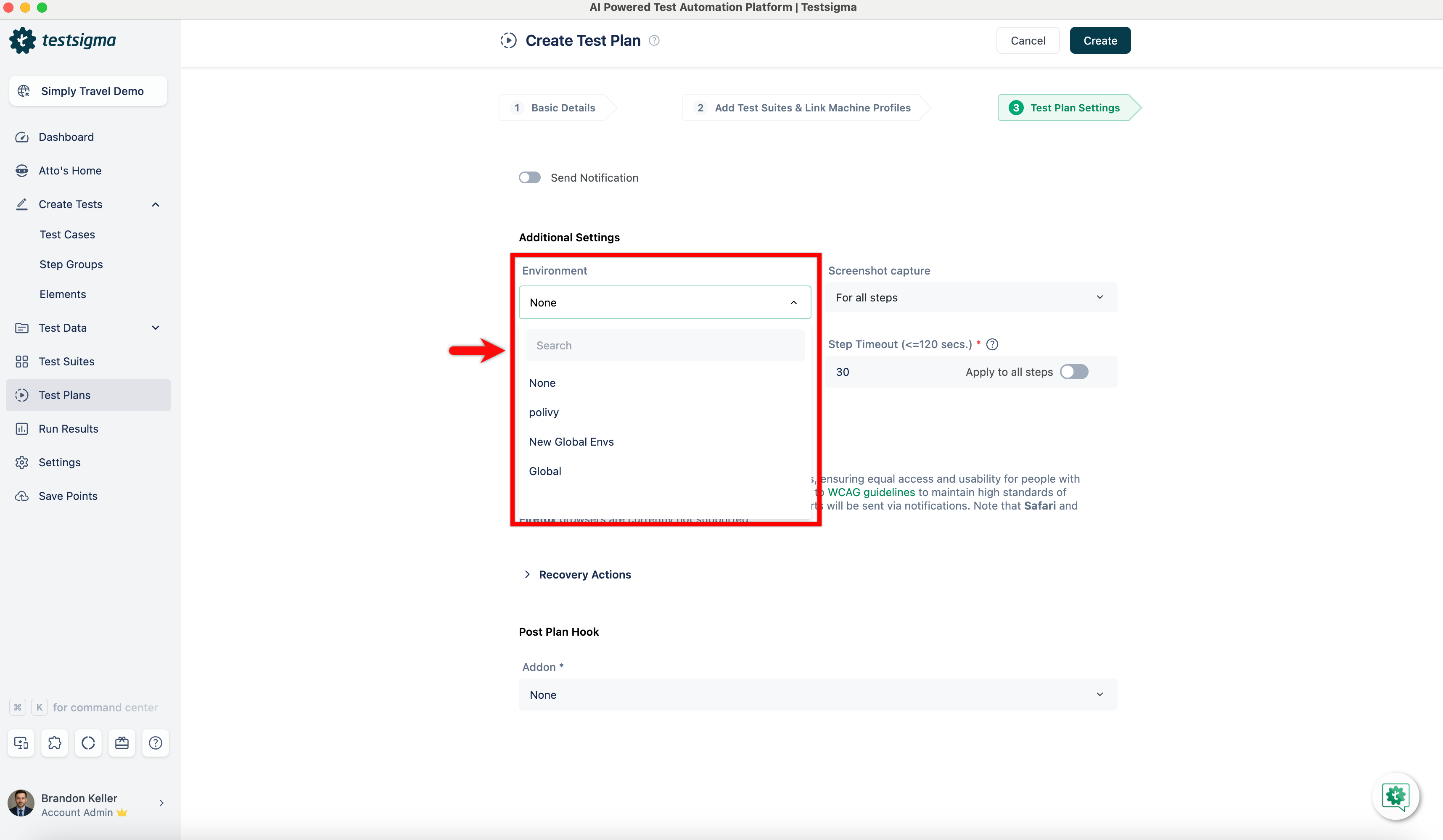

-2. In the **Additional Settings** section, select **Environment** from the drop-down list.

+2. In the **Additional Settings** section, select **Environment** from the drop-down list.

---

@@ -91,44 +91,41 @@ Testsigma also automates the creation of new and updated keys across all environ

## **Add Keys & Values to all Environments Globally**

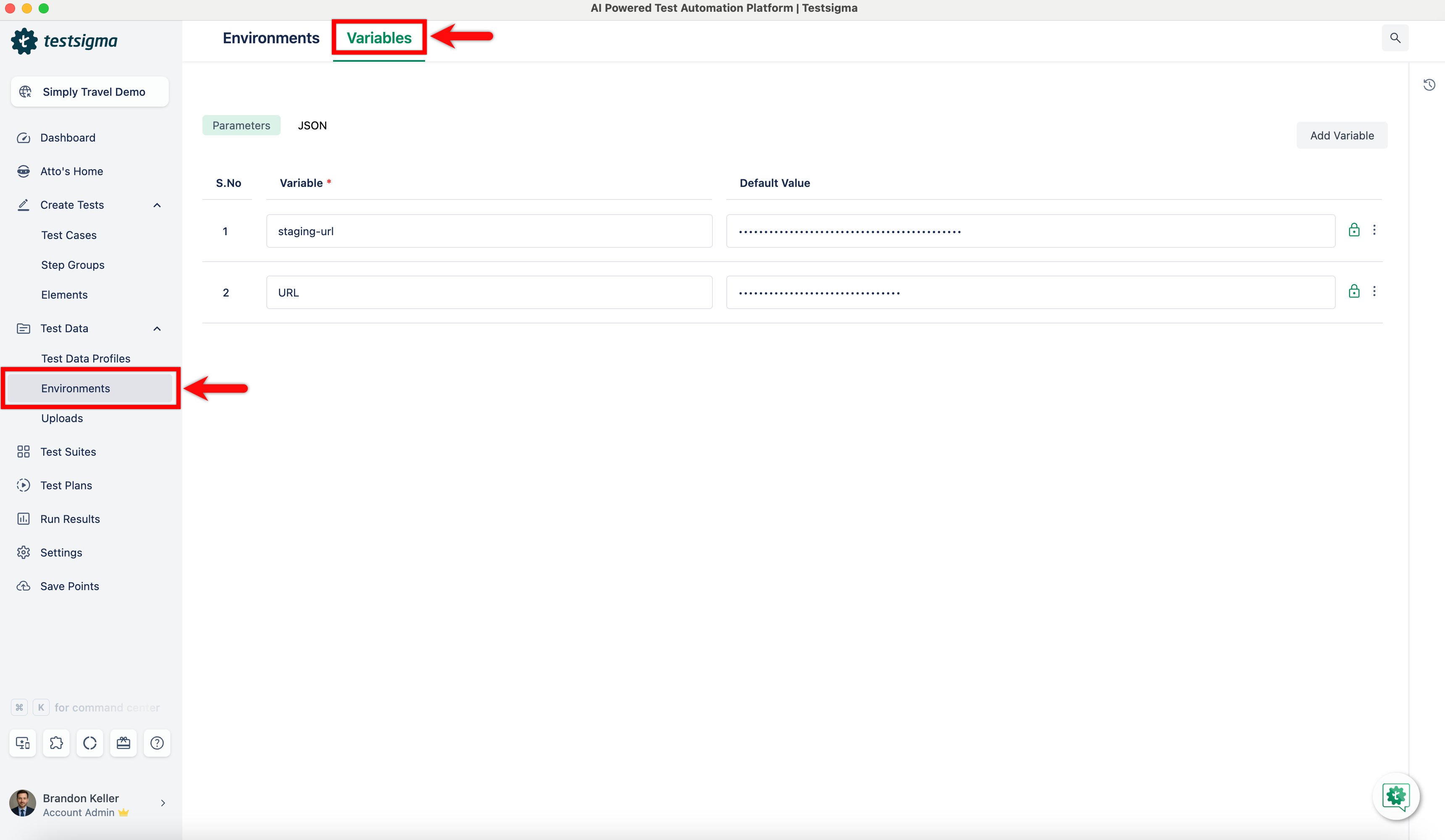

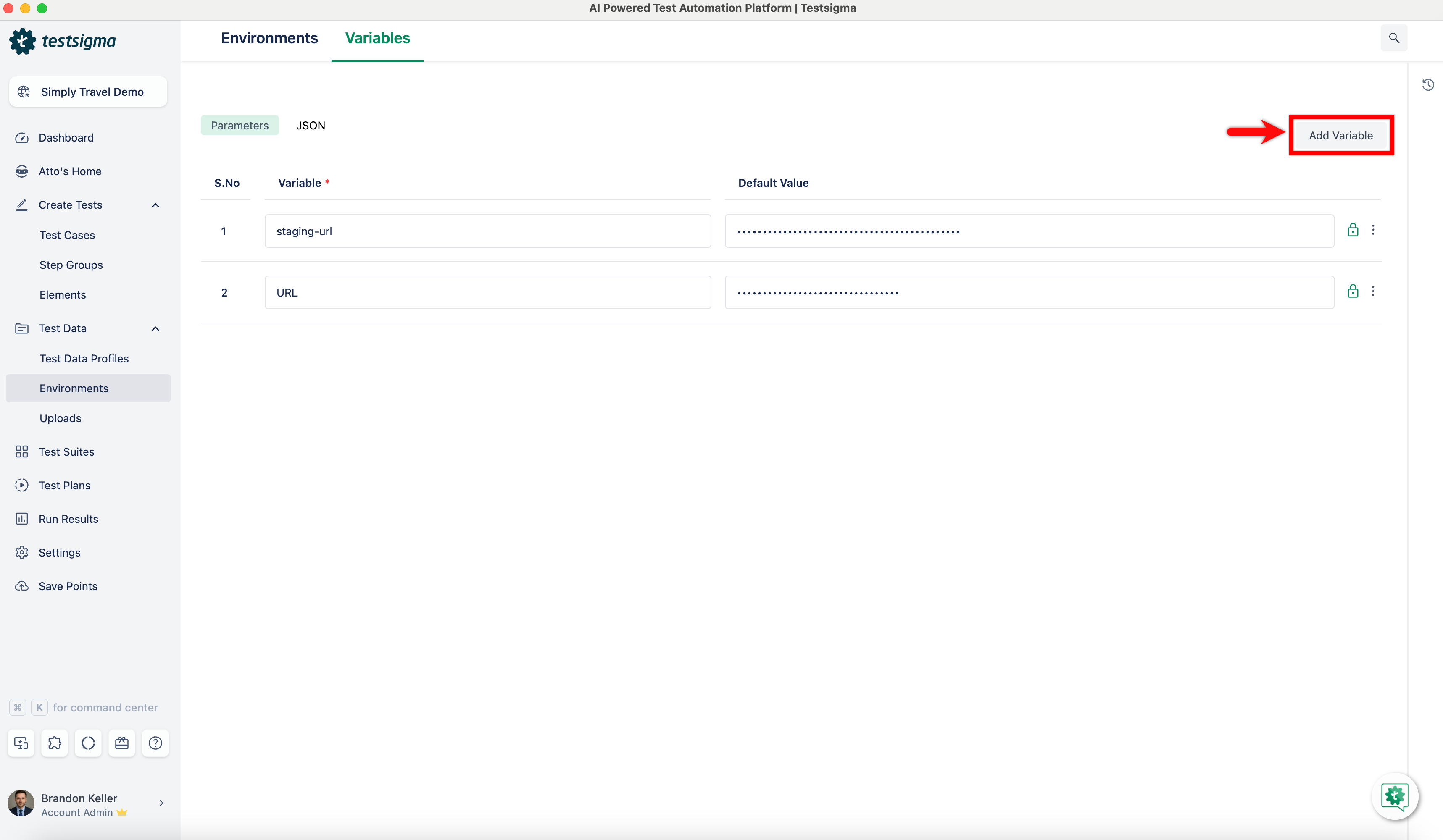

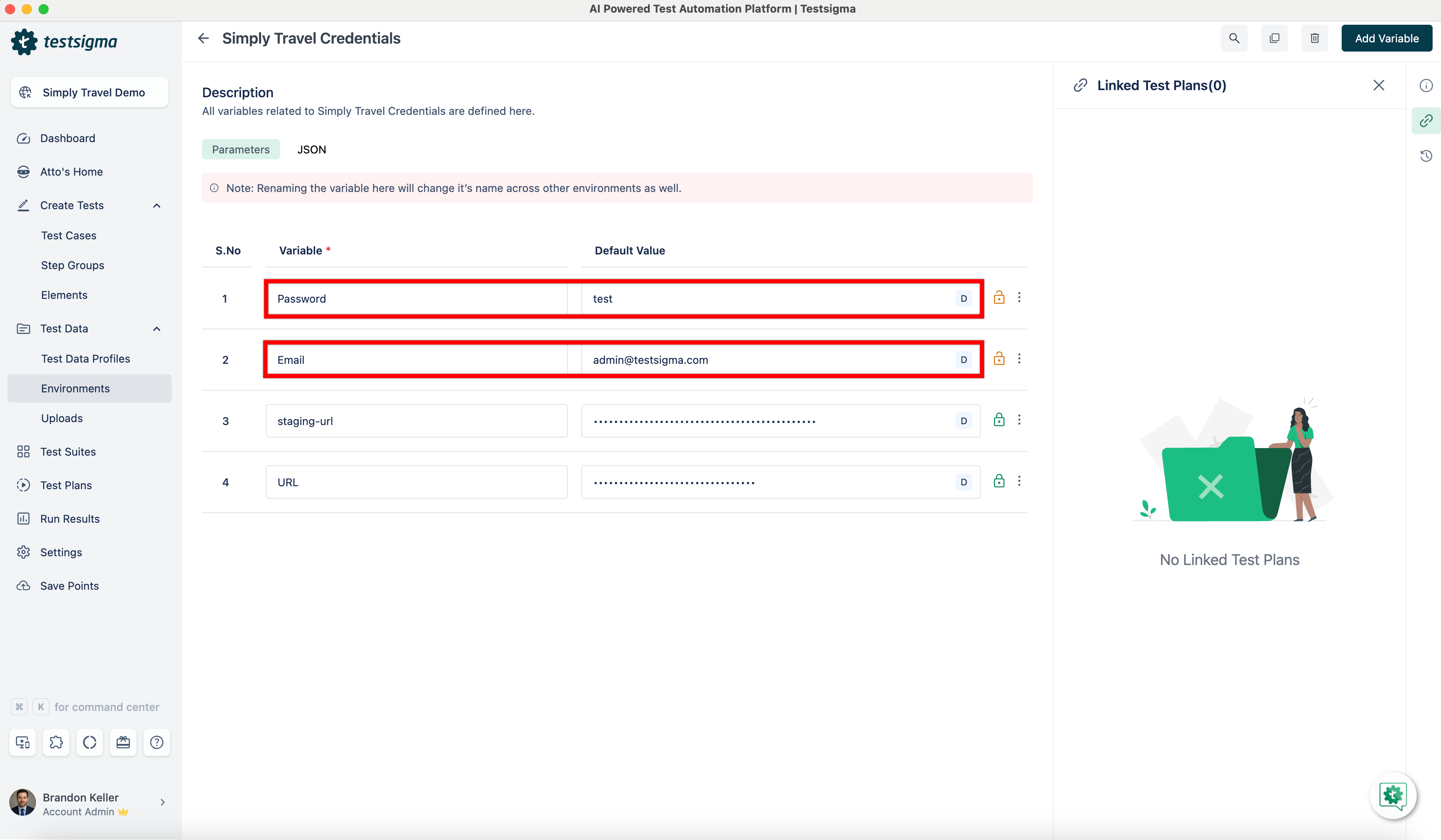

1. From the left navigation bar, go to **Test Data > Environments** and click the **Variables** tab.

-

+

-2. Enter the **Variable** name and **Value** and click **Add**.

-

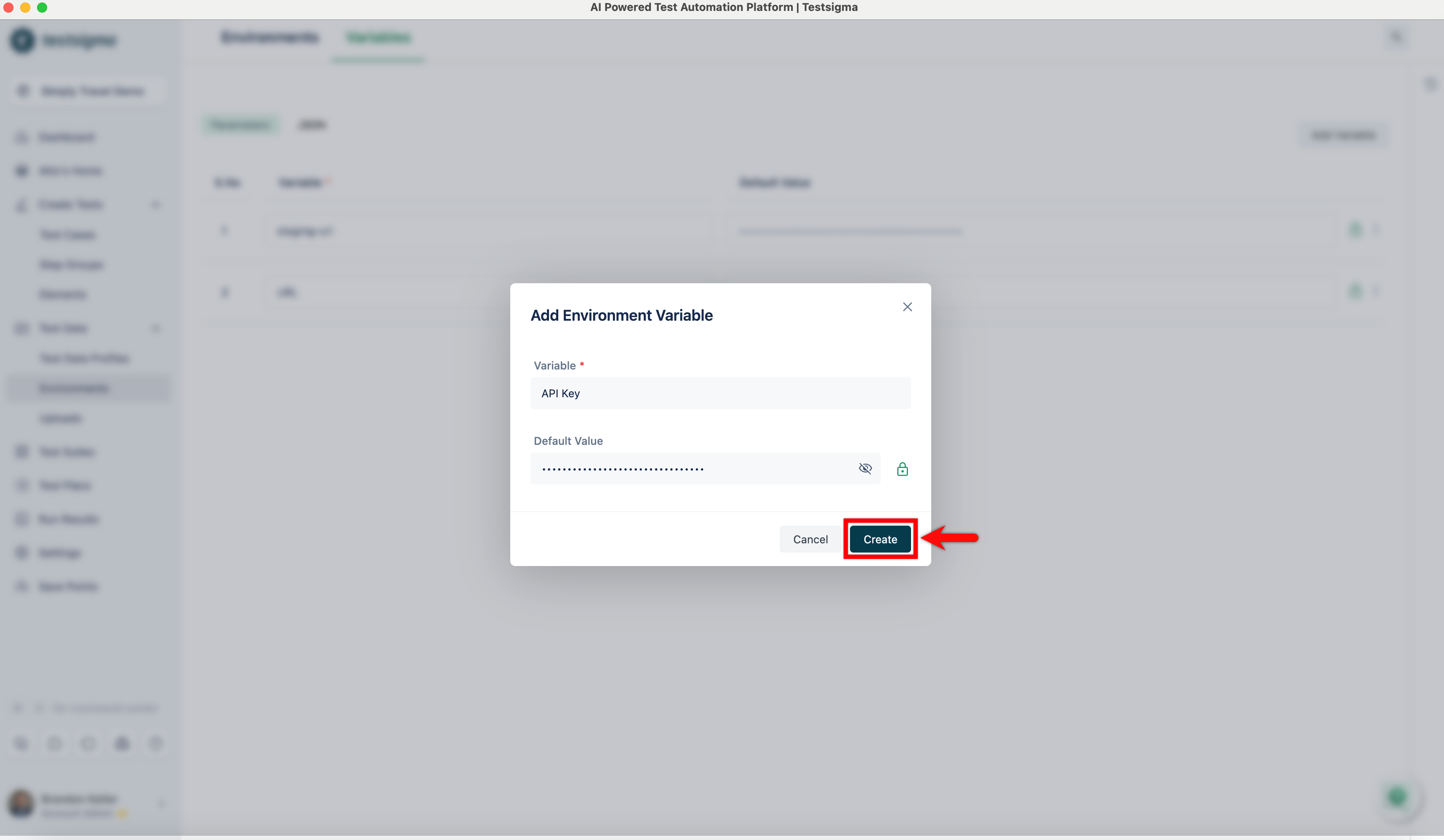

+2. Click **Add Variable**. This will open the **Add Environment Variable** prompt.

+

+

+3. Enter the **Variable** name and **Value** and click **Create**.

+

[[info | **NOTE**:]]

| **Variable** names must be unique and cannot be changed once added.

-3. Once the **Variable** is added to the list, click **Update**.

-

-

-4. Verify the **Variable** added in the **Environments**.

-

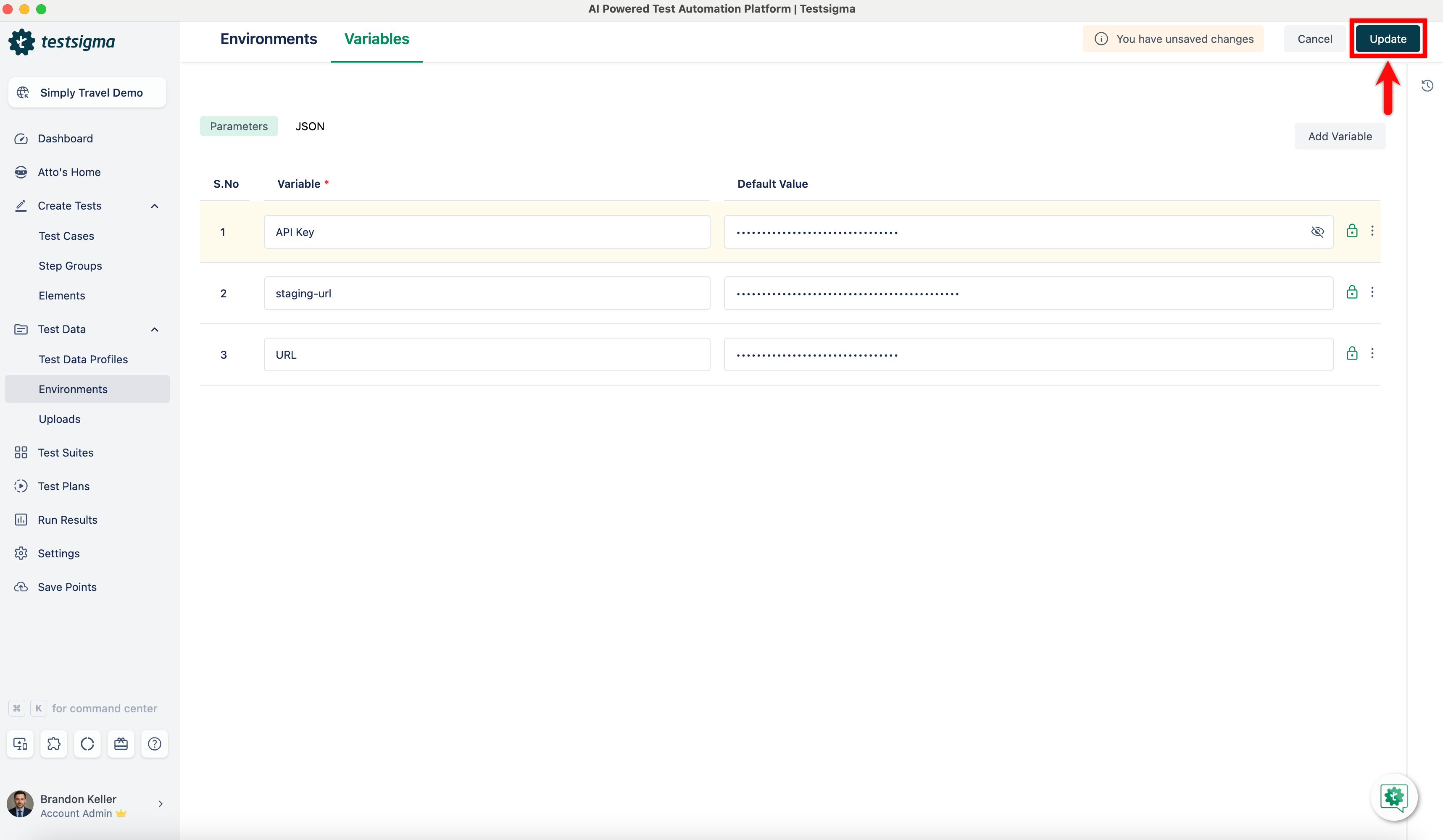

+4. Once the **Variable** is added to the list, click **Update**.

+

-> Alternatively, you can also add Variables by clicking on **Add Variable**. This will open the **Add Environment Variable** prompt.

->

->

-> - Enter the **Variable** name and **Value** and click **Create**.

->

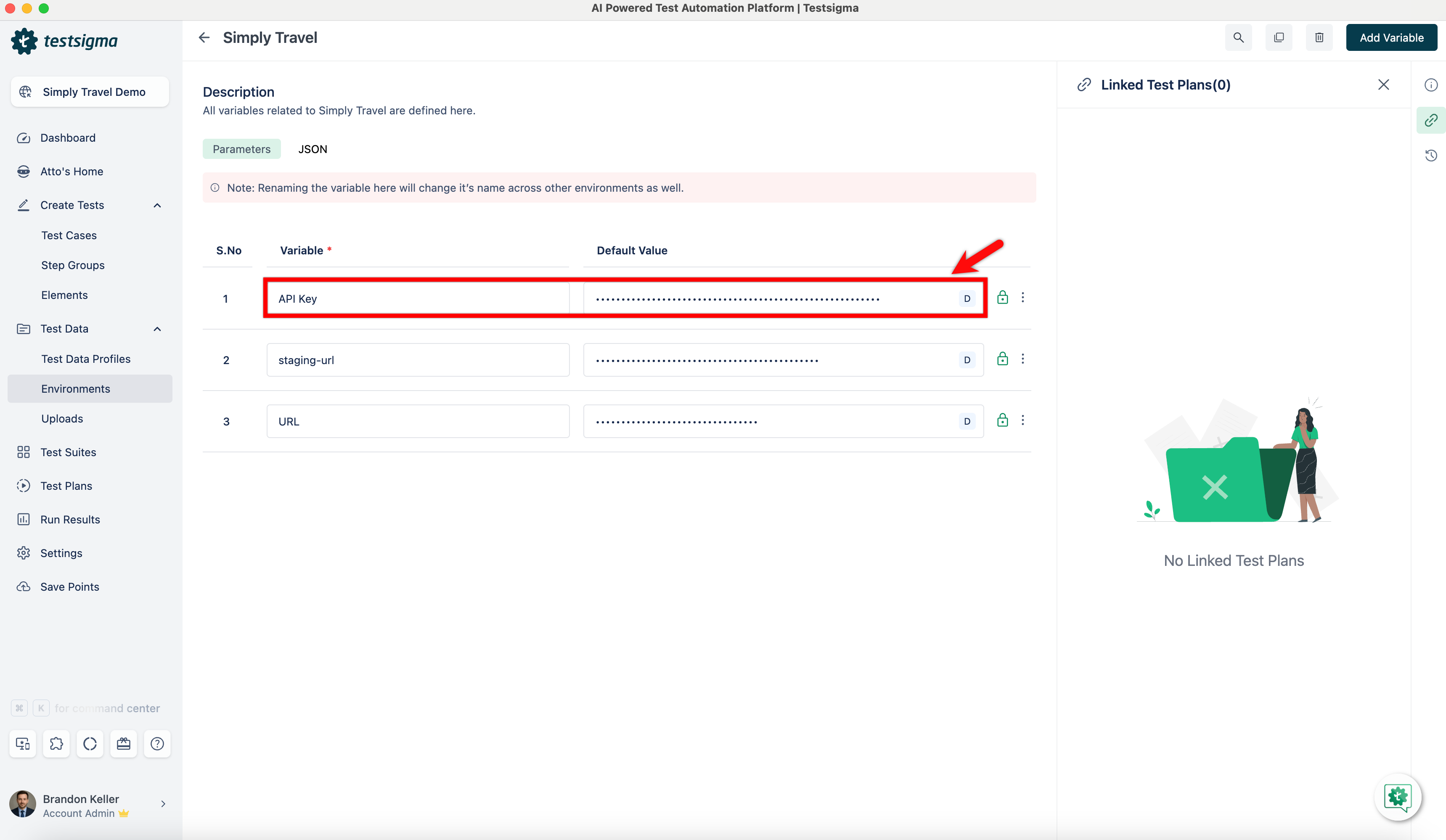

+5. Verify the **Variable** added in the **Environments**.

+

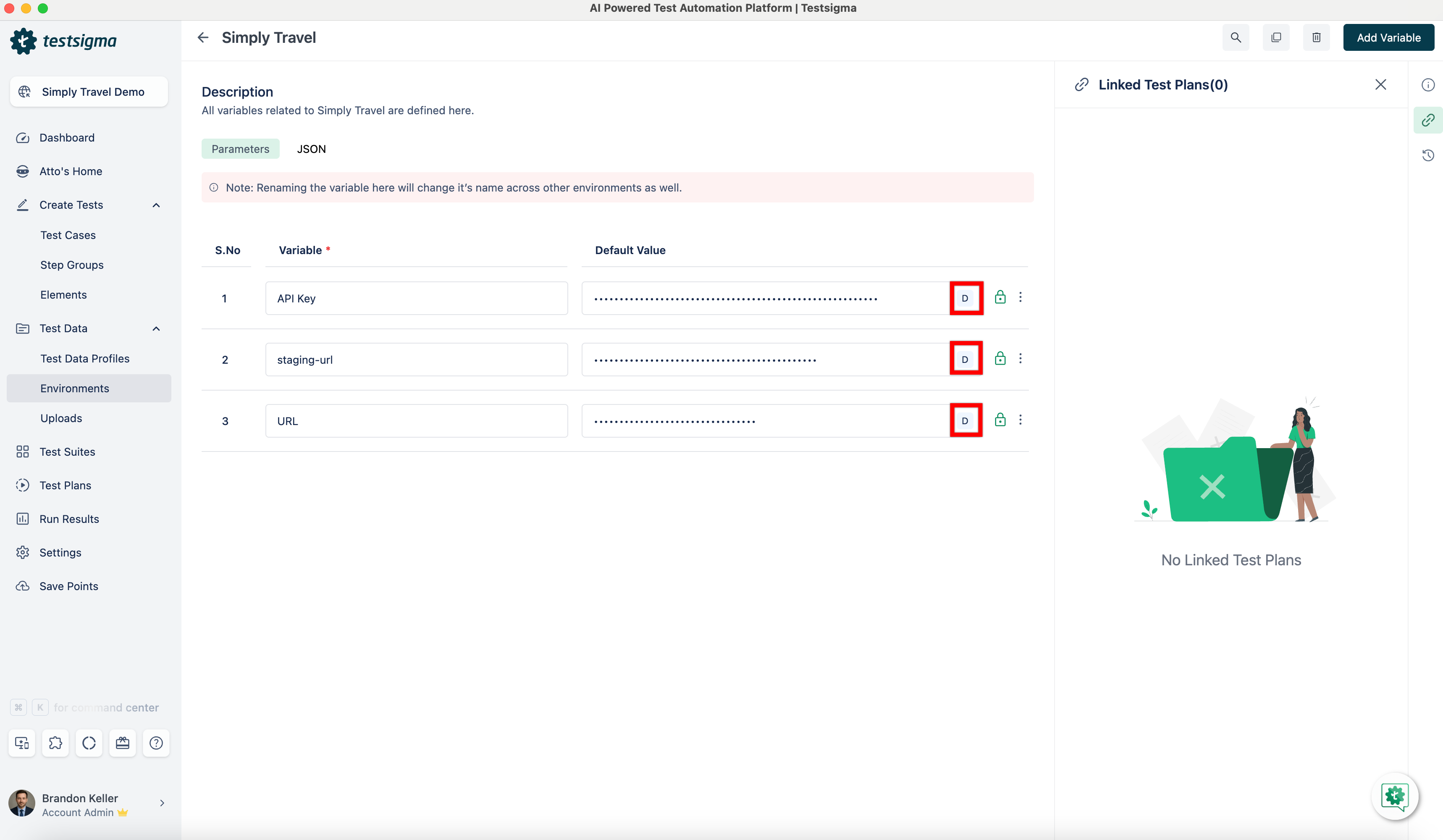

-5. There will be a default indication with the letter **D** for all the **Variables** added globally.

-

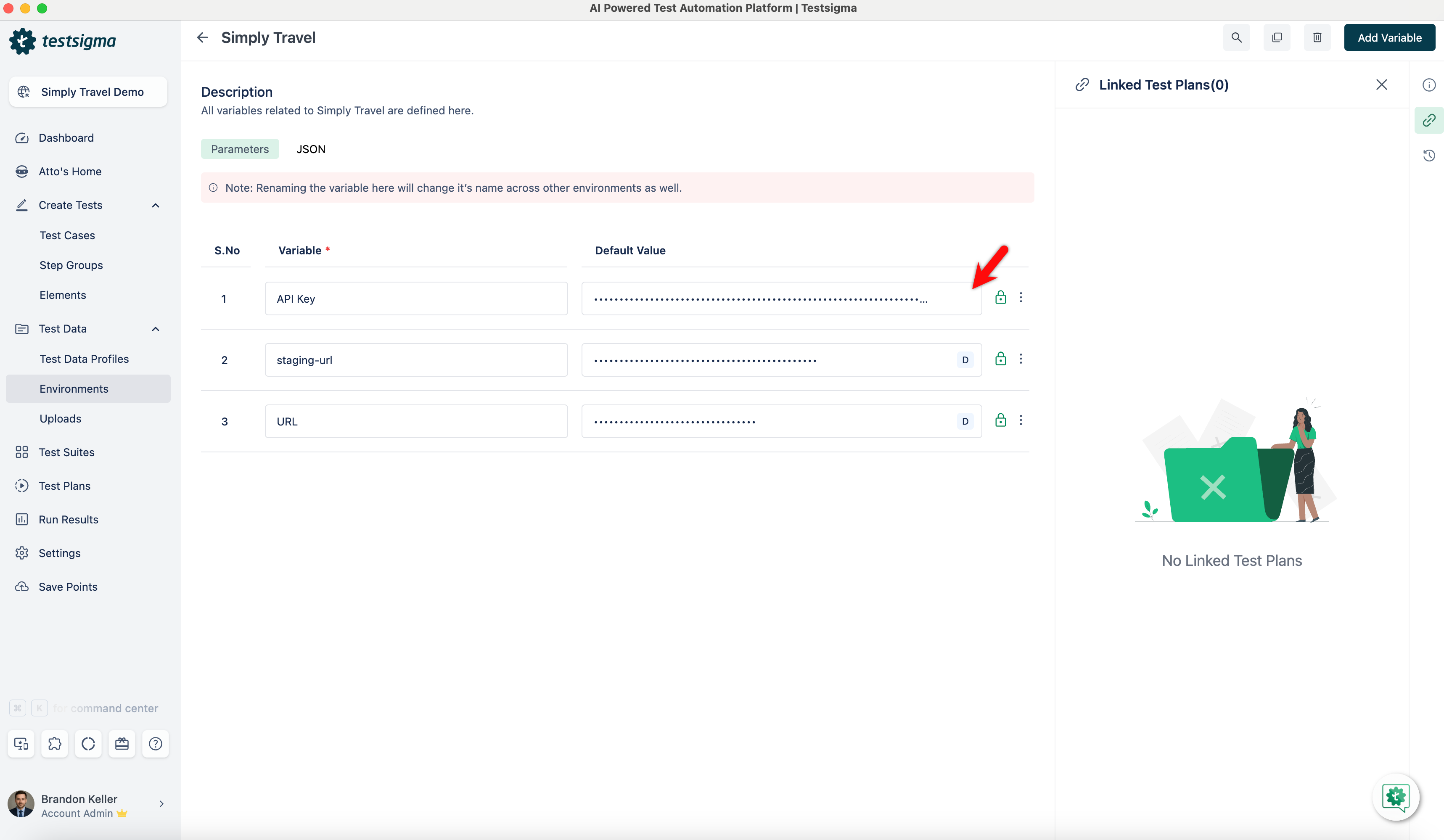

+6. There will be a default indication with the letter **D** for all the **Variables** added globally.

+

[[info | **NOTE**:]]

| Once you edit the **Variable** from the **Environment** page, the default indication with the letter **D** will not be displayed.

-|

+|

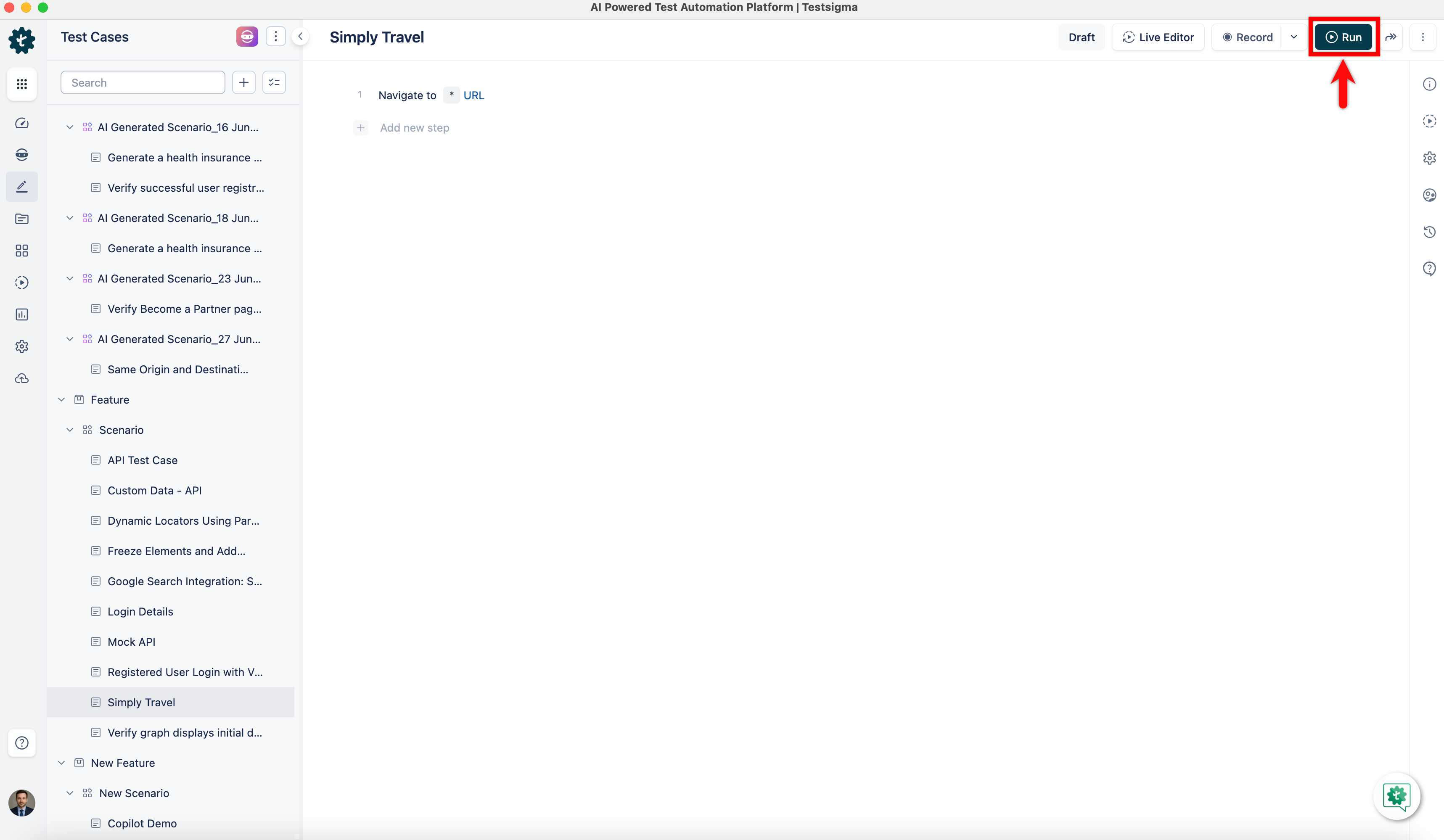

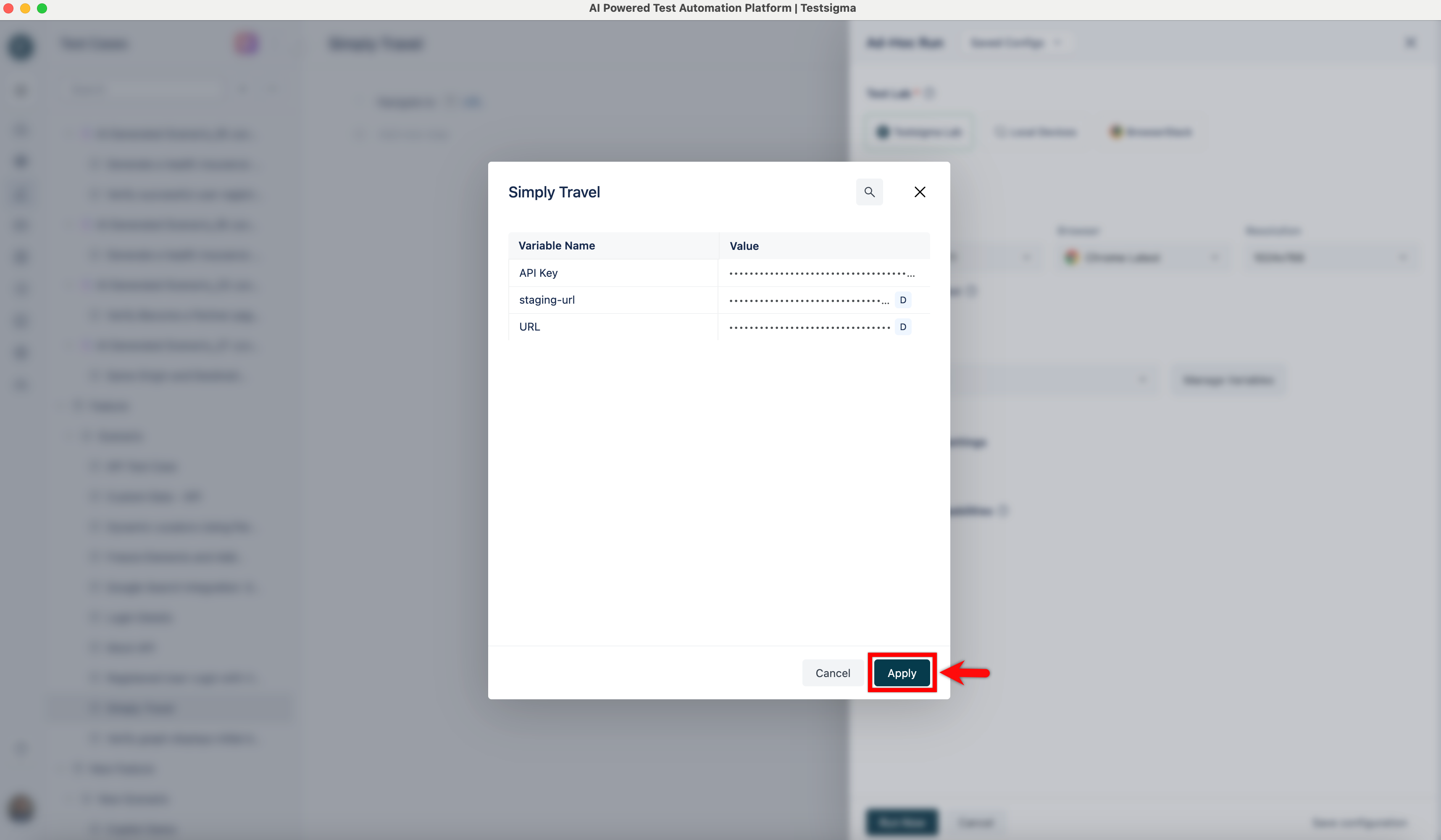

-6. From the **Test Case Details** page, click **Run**. This will open the **Ad-Hoc Run** overlay.

-

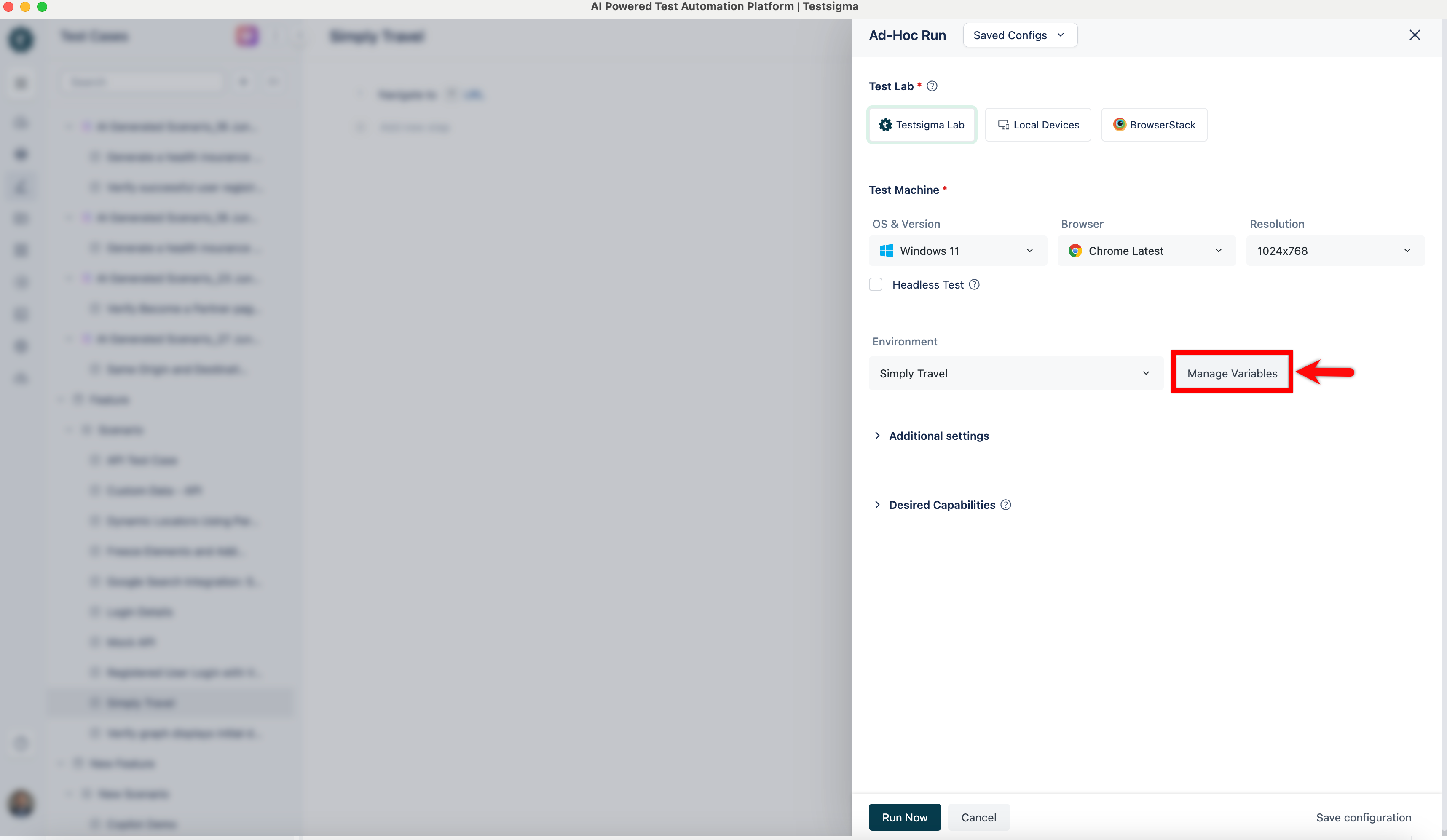

+7. From the **Test Case Details** page, click **Run**. This will open the **Ad-Hoc Run** overlay.

+

-7. Go to the **Environment** section and confirm the variables by clicking on **Manage Variables**.

-

+8. Go to the **Environment** section and confirm the variables by clicking on **Manage Variables**.

+

-8. Update the changes and click **Apply**.

-

+9. Update the changes and click **Apply**.

+

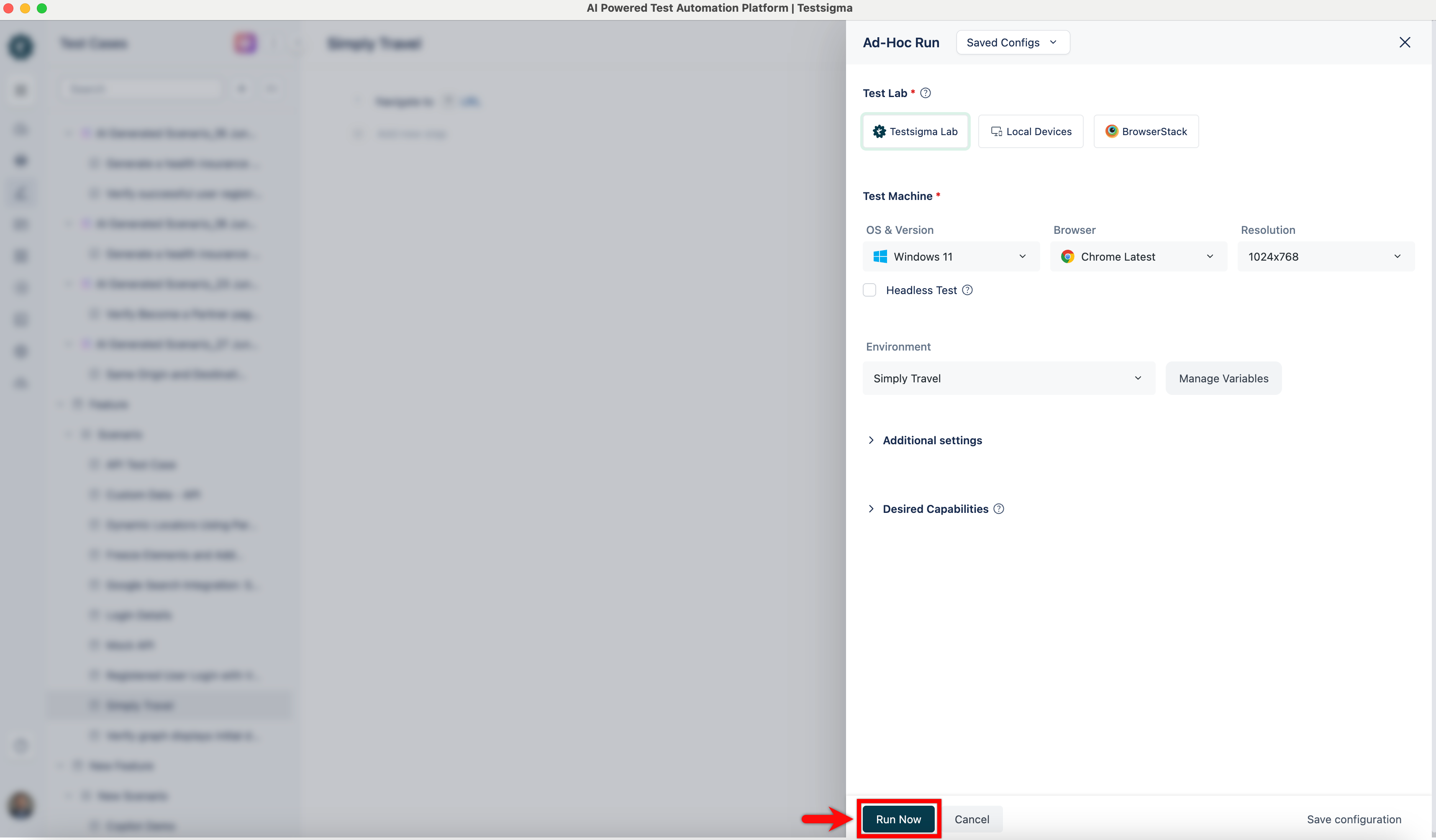

-9. Click on **Run Now** to execute the test with updated **Environment** and **Variable**.

-

+10. Click on **Run Now** to execute the test with updated **Environment** and **Variable**.

+

[[info | **NOTE**:]]

| Some existing users may notice some empty values in their Environment list. This happens as we have curated your Environment list into a master list. The empty values indicate that the corresponding Environment variables were not previously part of your Environment. With the transition to a master list, these variables are added to ensure the proper usage of the Environment functionality. This change will not impact your existing test cases.
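
The note above can be pictured with a small sketch: every variable name found in any Environment becomes part of a master list, and Environments that never defined a given variable show it with an empty value. The environment names, variable names, and data layout below are hypothetical and only model the behaviour described in the note.

```python
def to_master_list(environments: dict) -> dict:
    """Give every environment the full set of variable names, blank where missing."""
    master_names = sorted(
        {name for variables in environments.values() for name in variables}
    )
    return {
        env_name: {name: variables.get(name, "") for name in master_names}
        for env_name, variables in environments.items()
    }


# Hypothetical environments before the master list is applied.
environments = {
    "staging": {"base_url": "https://staging.example.invalid", "user": "qa"},
    "production": {"base_url": "https://www.example.invalid"},
}

curated = to_master_list(environments)
print(curated["production"])
```

Existing values are left untouched; only the missing names are added with blank values.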

@@ -139,11 +136,12 @@ Testsigma also automates the creation of new and updated keys across all environ

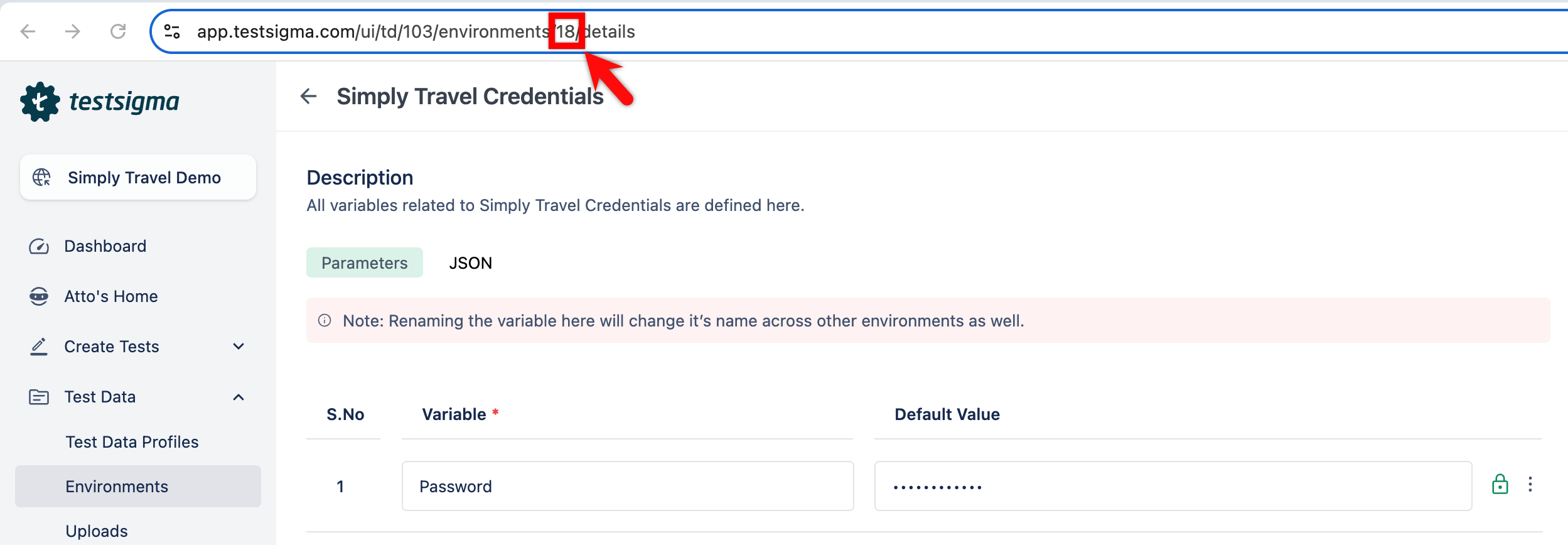

Environment variables can filter test data sets by name during data-driven testing. This method provides flexibility and reusability, making your test cases more adaptable to different scenarios.

-1. Create an **Environment** and add the name-value pairs that match the set names and values in your Test Data Profile.

+1. Create an **Environment** and add the name-value pairs that match the set names and values in your Test Data Profile.

2. On the Test Case Details page, click the **Test Case Settings** icon in the right navigation bar.

-3. Select the **Test Data Profile** from the dropdown menu to associate it with the test case. Enable the **Data-Driven** toggle.

+3. Select the **Test Data Profile** from the dropdown menu to associate it with the test case. Enable the **Data-Driven** toggle.

4. Choose **Parameters** as the **Filter type**. Select **Equals**, **Contains**, **Starts with** or **Ends with** as the **Comparison Metric** from the dropdown menu. Choose **Parameter Set Name** from the dropdown.

-5. Type *| to open the Environment overlay and choose the **Environment Parameter Name** to add the filters.

+

+5. Type *| to open the Environment overlay and choose the **Environment Parameter Name** to add the filters.

6. When you run the test case, it will execute using the specified filters.
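
The filtering described in the steps above can be pictured with a minimal Python sketch. This is not Testsigma's implementation: the function name `filter_set_names`, the `COMPARATORS` table, and the sample data are all hypothetical, and the sketch only assumes that each **Comparison Metric** is a plain string comparison between a parameter set name and the value of the chosen Environment parameter.

```python
from typing import Callable, Dict, List

# Hypothetical mapping of the Comparison Metric options to string checks.
COMPARATORS: Dict[str, Callable[[str, str], bool]] = {
    "Equals": lambda name, value: name == value,
    "Contains": lambda name, value: value in name,
    "Starts with": lambda name, value: name.startswith(value),
    "Ends with": lambda name, value: name.endswith(value),
}


def filter_set_names(
    set_names: List[str],
    metric: str,
    environment: Dict[str, str],
    parameter_name: str,
) -> List[str]:
    """Return the set names that match the Environment parameter's value."""
    value = environment[parameter_name]  # value resolved from the Environment
    matches = COMPARATORS[metric]
    return [name for name in set_names if matches(name, value)]


if __name__ == "__main__":
    # Illustrative data only; names are assumptions, not Testsigma defaults.
    set_names = ["staging_user", "staging_admin", "prod_user"]
    environment = {"target_prefix": "staging"}
    print(filter_set_names(set_names, "Starts with", environment, "target_prefix"))
```

With the sample data, only the two set names beginning with `staging` are selected, so the test case would run for those data sets alone.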

@@ -157,6 +155,6 @@ Environment variables can filter test data sets by name during data-driven testi

3. The can be found in the URL of the Environment details page.

-

+

---

\ No newline at end of file

diff --git a/src/pages/docs/test-data/types/raw.md b/src/pages/docs/test-data/types/raw.md

index 6af6f017..75a0b21e 100644

--- a/src/pages/docs/test-data/types/raw.md

+++ b/src/pages/docs/test-data/types/raw.md

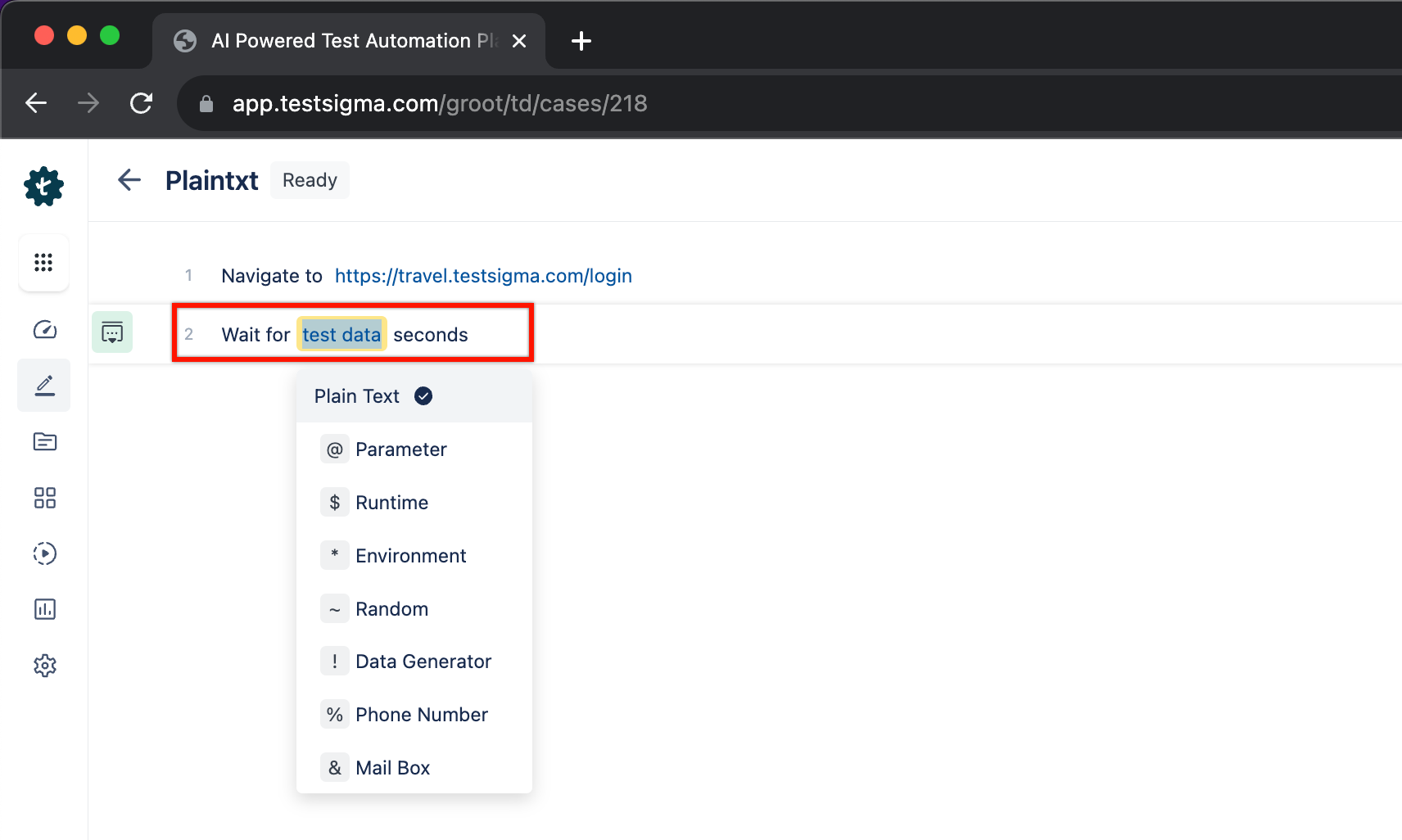

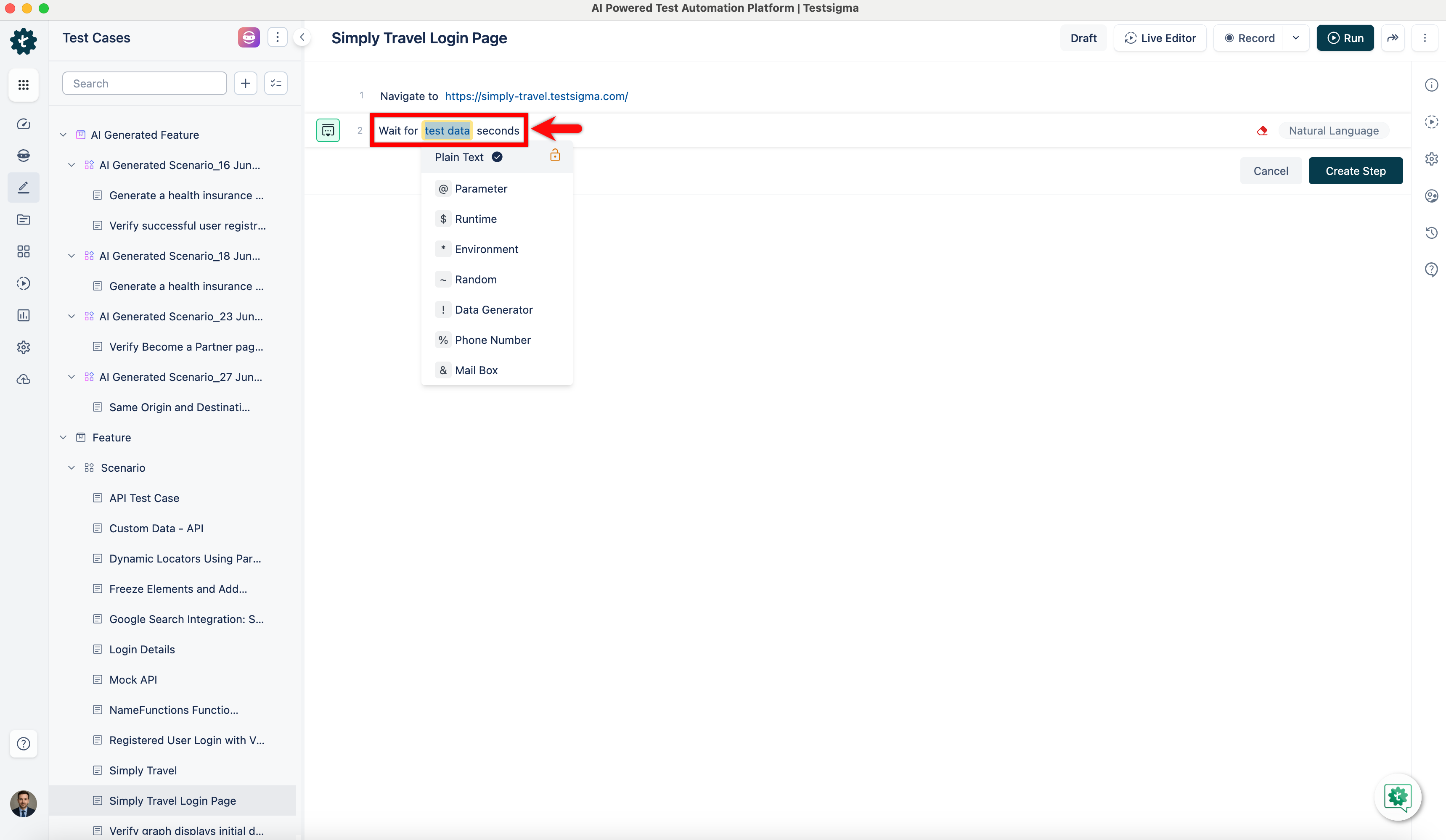

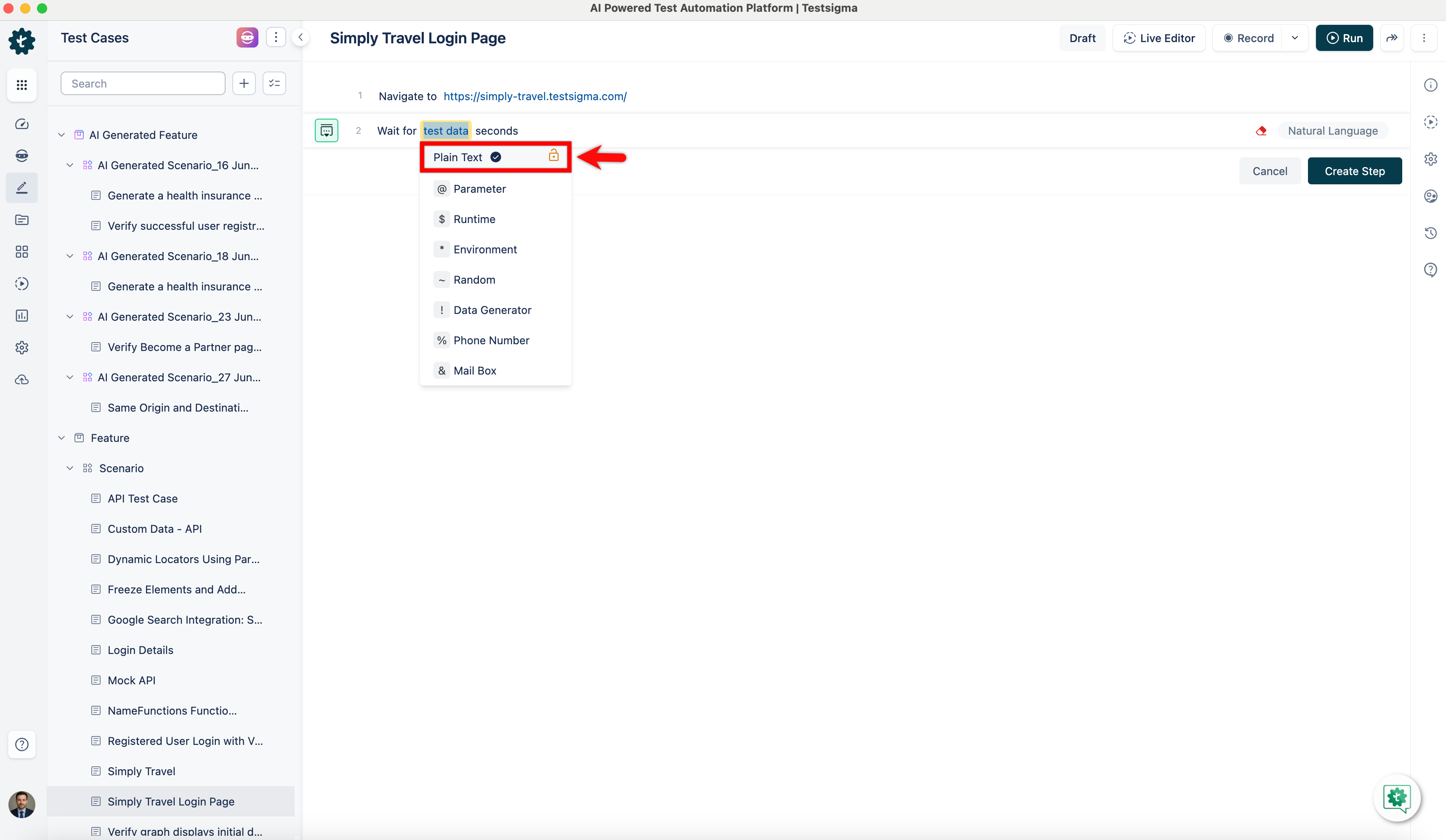

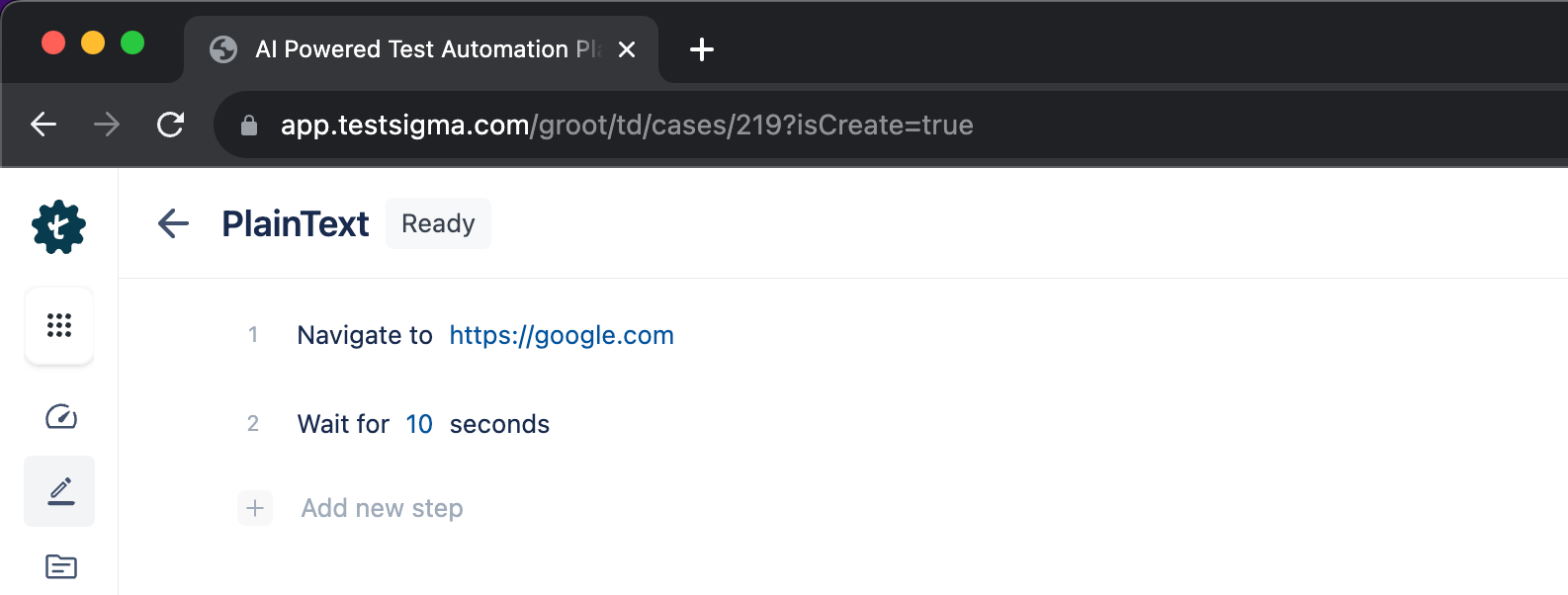

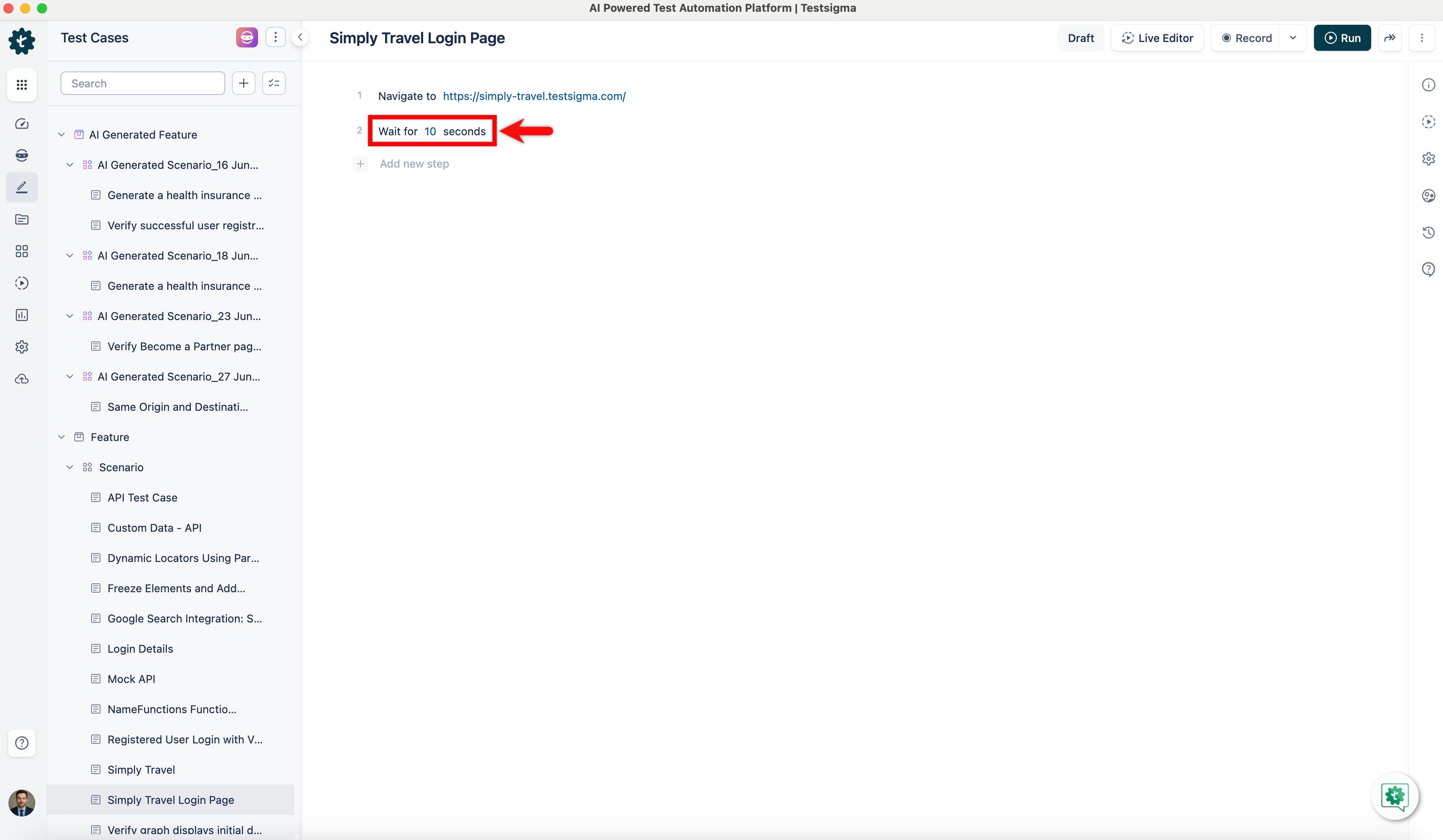

@@ -30,7 +30,7 @@ Plain Text or Raw data is used when you want to hardcode an input in your test.

**For Example:**

Here we are using the NLP, **Wait for *test data* seconds**.

-

+

We’ll select **Plain Text** from the dropdown and enter a number to create a test step.

@@ -39,13 +39,13 @@ We’ll select **Plain Text** from the dropdown and enter a number to create a t

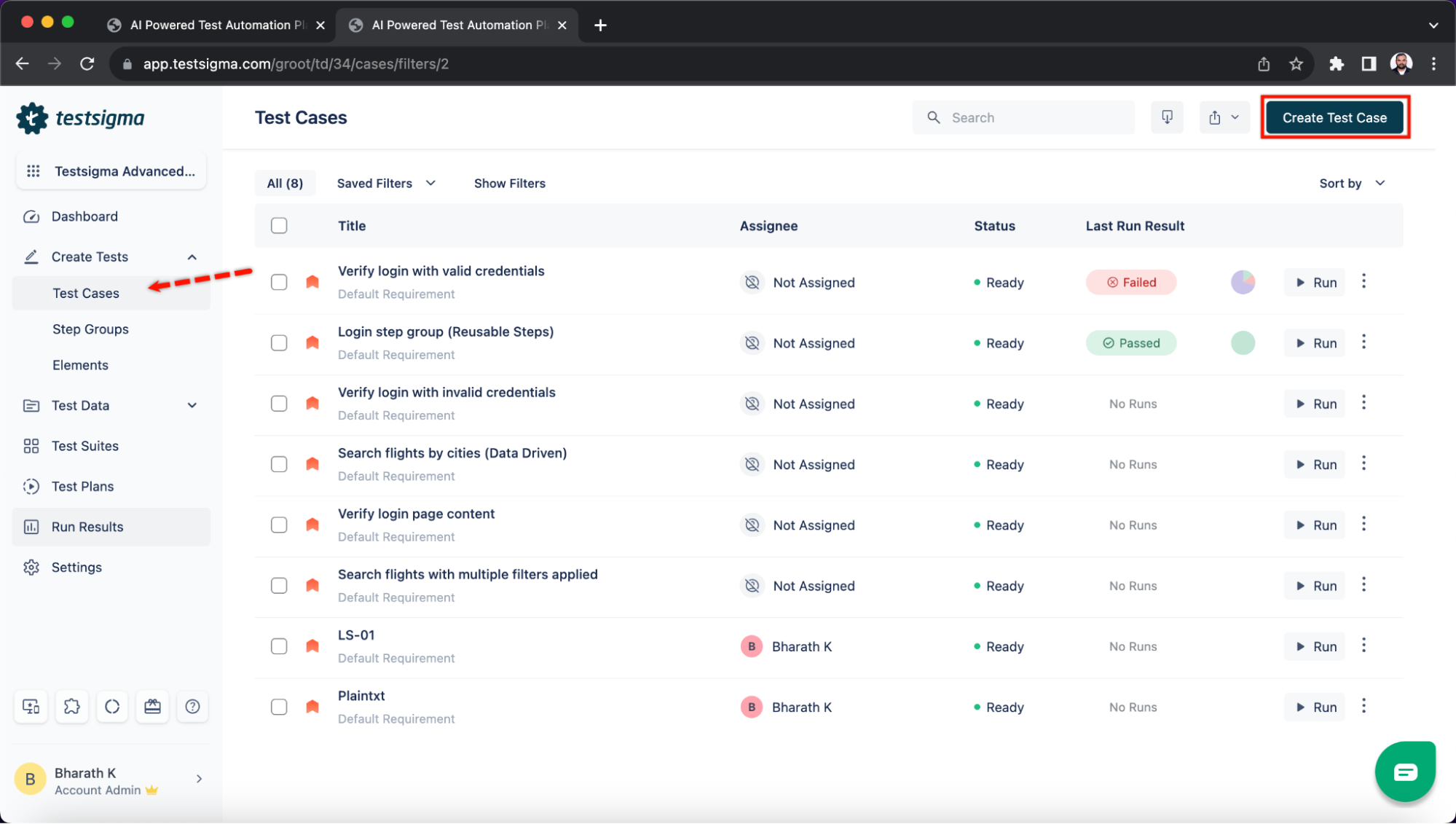

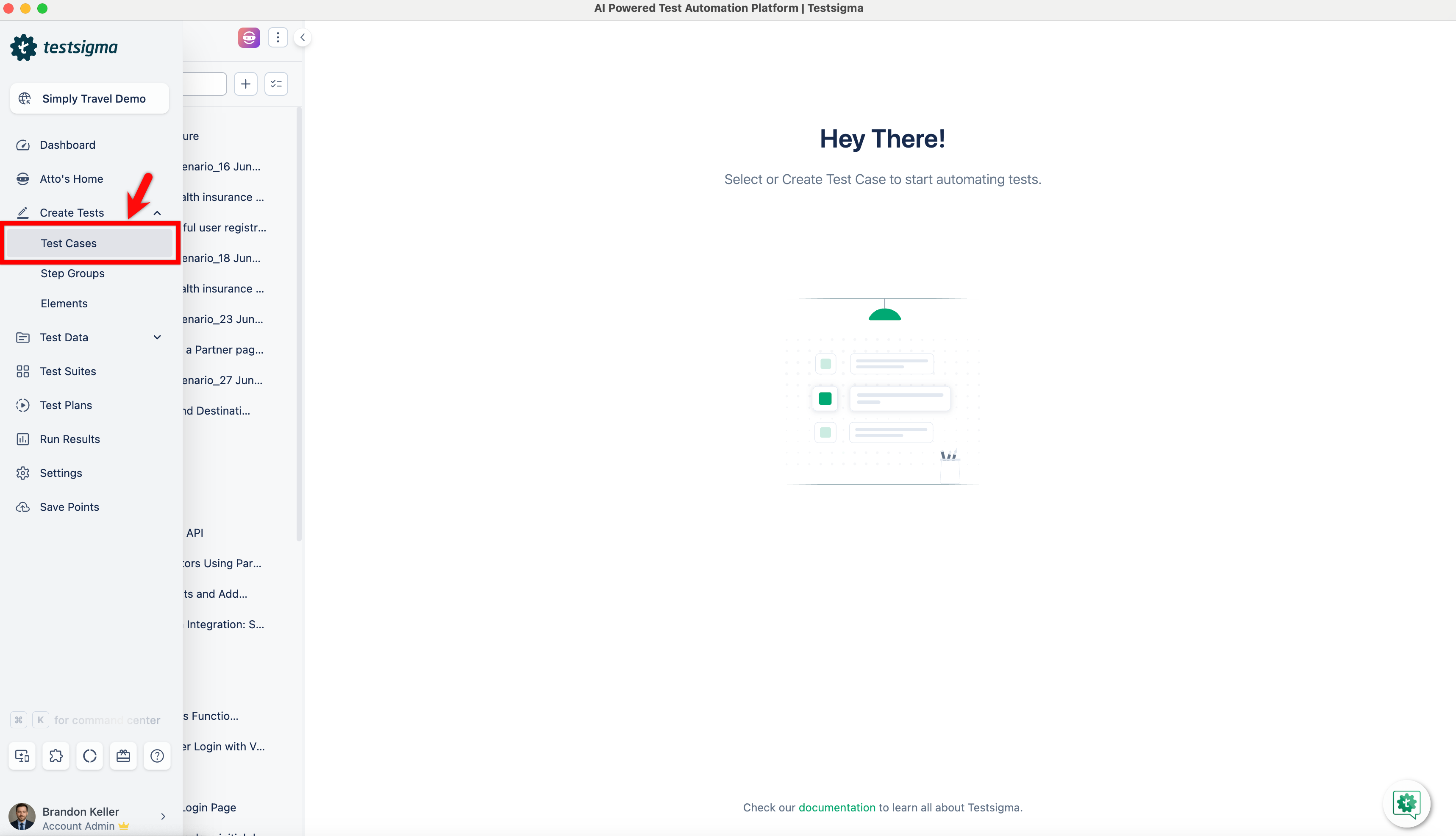

## **How to Use Plain Text in a Test Case?**

1. Navigate to **Create Tests > Test Cases** and click on **Create Test Case**.

-

+

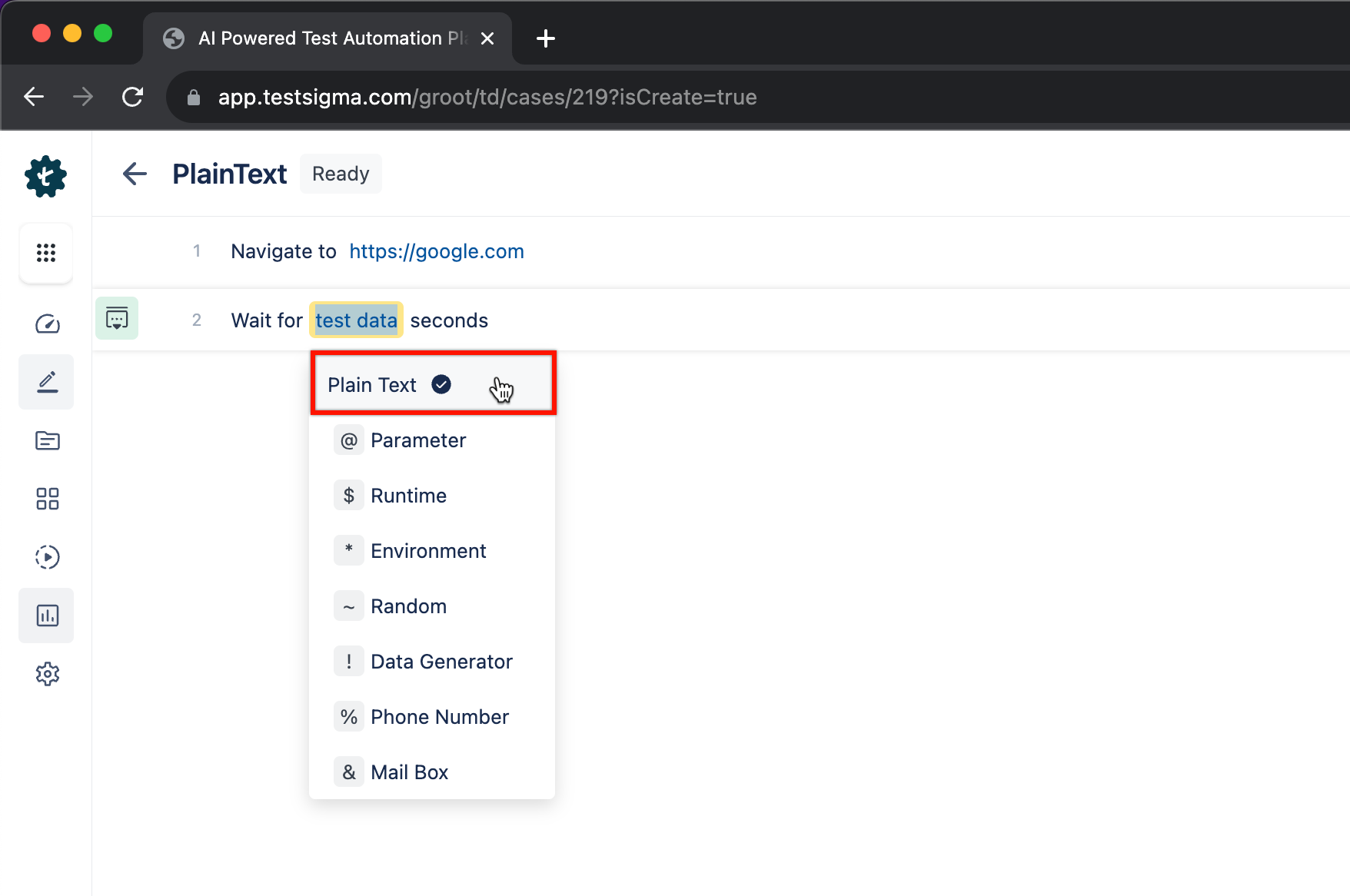

2. Create a test step with NLP that has ***test data*** in it and choose **Plain Text** from the dropdown.

-

+

3. Here, erase ***test data*** and enter a number to create the test step.

-

+

[[info | **NOTE**:]]

| You can replace plain text with anything depending on the test case scenario.

diff --git a/src/pages/docs/test-data/types/runtime.md b/src/pages/docs/test-data/types/runtime.md

index 4123adf8..eba06ca4 100644

--- a/src/pages/docs/test-data/types/runtime.md

+++ b/src/pages/docs/test-data/types/runtime.md

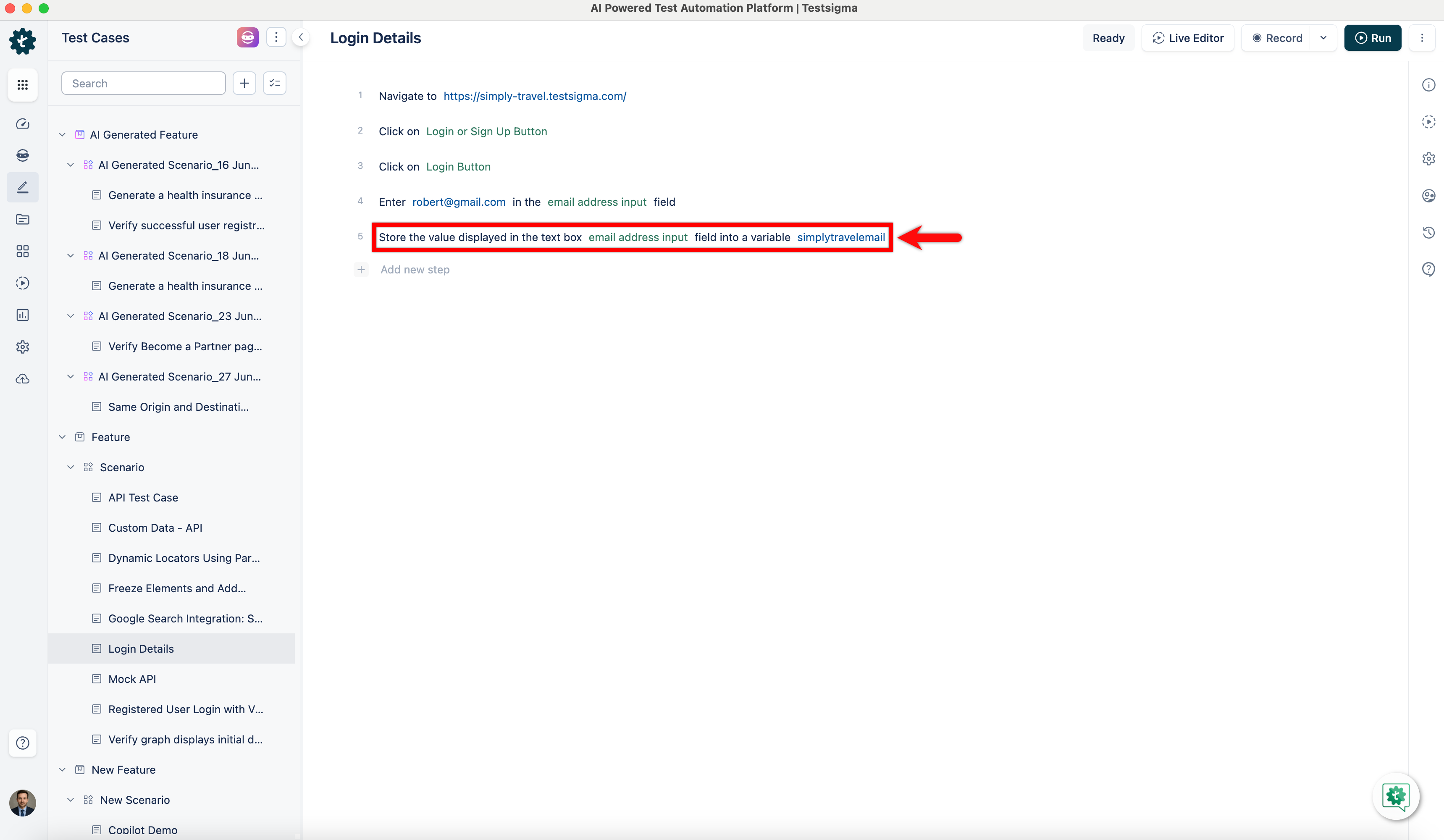

@@ -56,6 +56,7 @@ In Testsigma, Runtime Test Data allows you to save data gathered while running a

[[info | NOTE:]]

| During the first test run, the application stores the runtime variables and keeps them accessible for future runs. If you need to rerun a test that fails, the application will automatically apply the runtime variables from the initial run.

+

### **Example:**

@@ -85,11 +86,7 @@ In Testsigma, Runtime Test Data allows you to save data gathered while running a

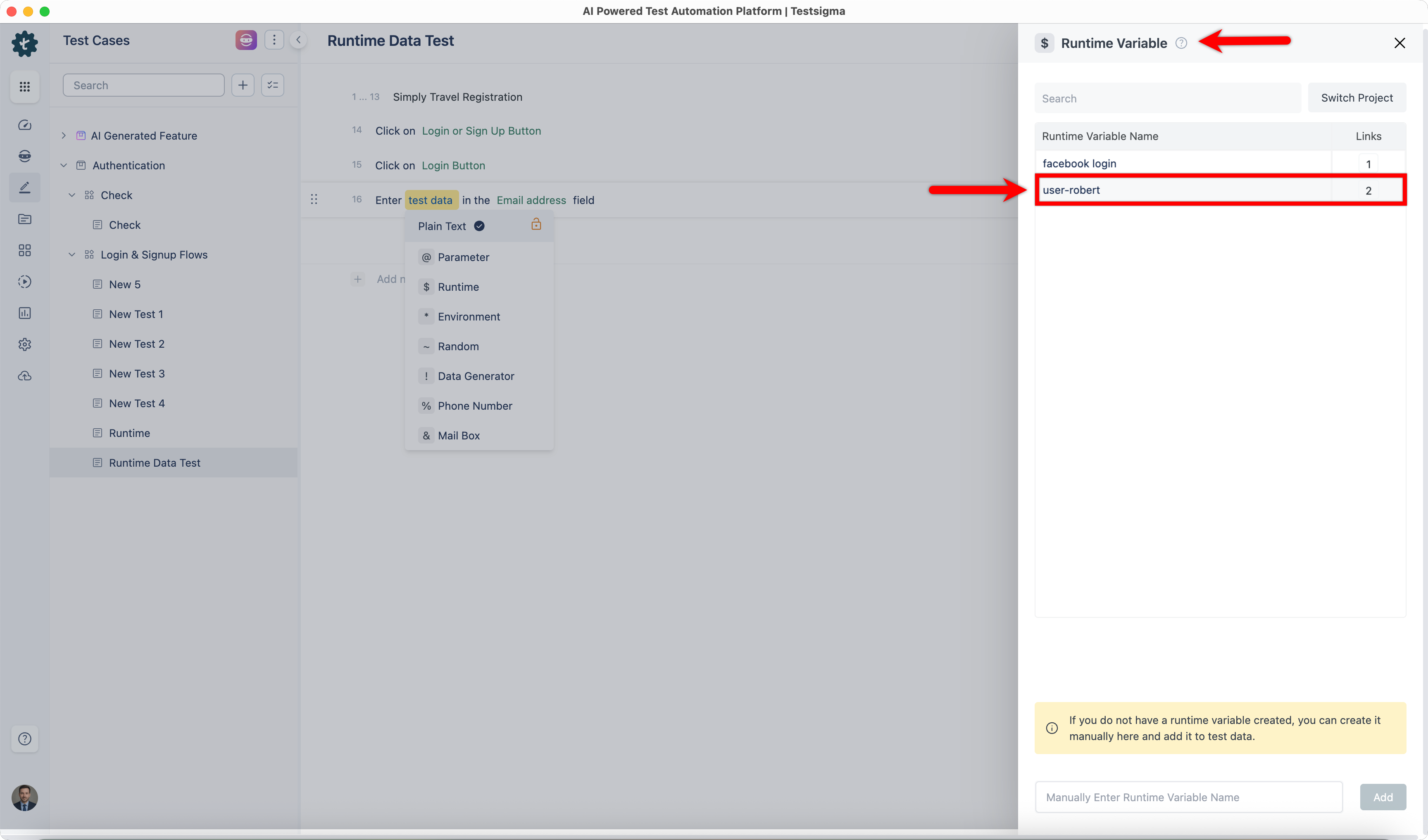

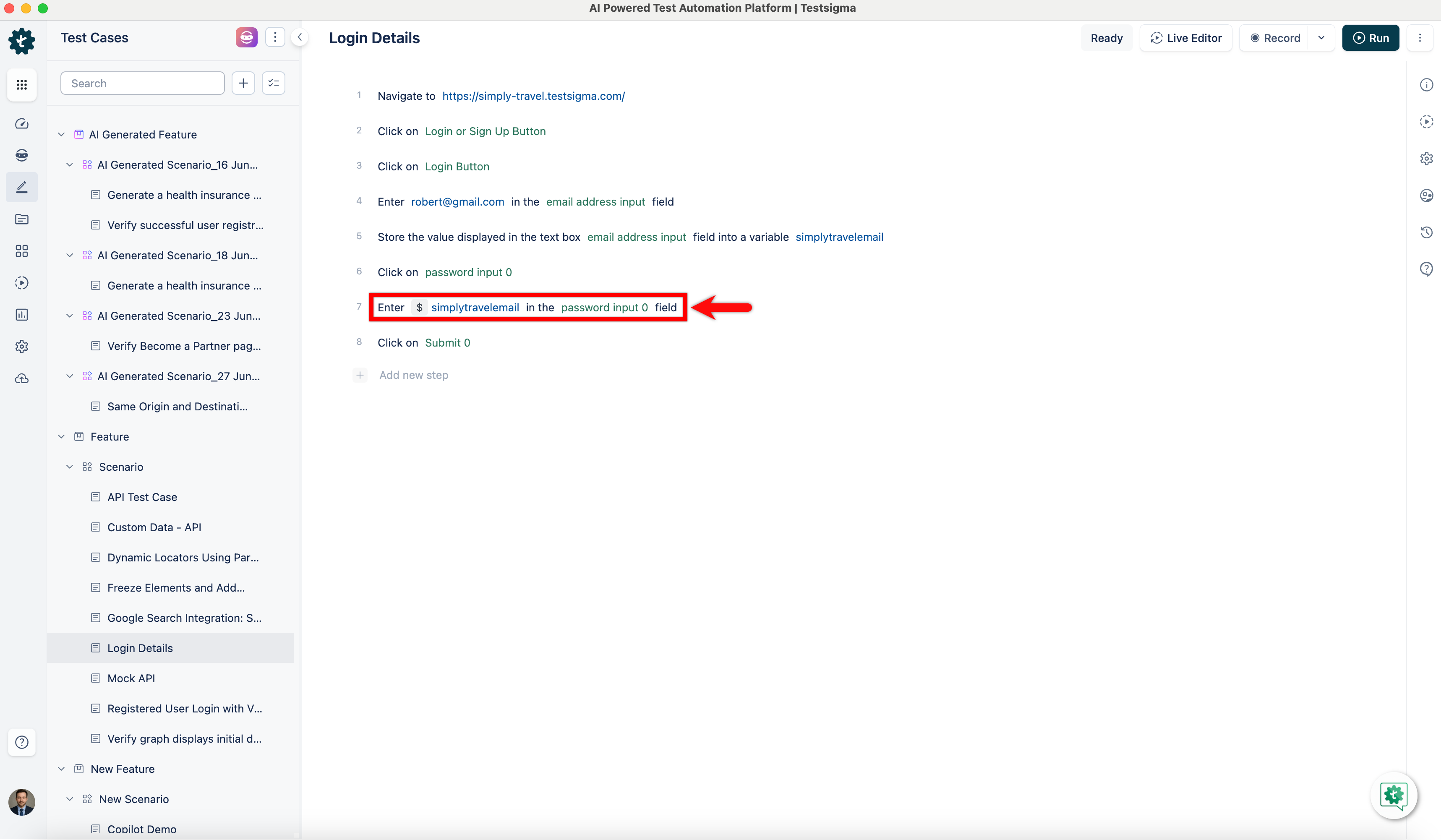

3. A list of runtime variables already created in the project will appear in the **Runtime Variables** overlay.

-

-4. Select the **Runtime Variable** from the overlay or **search** for a runtime variable and insert it into the test steps.

-

-

- You can also click the **Switch Project** button in the overlay, select the **Project**, Application, and Version, and use a Runtime Variable created in other projects.

+4. Select the **Runtime Variable** from the overlay or **search** for a runtime variable and insert it into the test steps. You can also click the **Switch Project** button in the overlay, select the **Project**, Application, and Version, and use a Runtime Variable created in other projects.

[[info | NOTE:]]

| The run report will show the following error if you enter a variable name without storing a value. Always remember to store the value in a variable before using it.
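
As a rough mental model of the note above, the following Python sketch treats runtime variables as a name-to-value store that rejects reads of names that were never stored. The class `RuntimeVariables` and its methods are hypothetical and are not part of Testsigma; the actual error text shown in the run report is not reproduced here.

```python
from typing import Dict


class RuntimeVariables:
    """Hypothetical store for values captured while a test runs."""

    def __init__(self) -> None:
        self._values: Dict[str, str] = {}

    def store(self, name: str, value: str) -> None:
        # A "store" step saves the value under the variable name.
        self._values[name] = value

    def get(self, name: str) -> str:
        # Using a variable before storing it is an error.
        if name not in self._values:
            raise KeyError(f"Runtime variable '{name}' has no stored value")
        return self._values[name]


if __name__ == "__main__":
    variables = RuntimeVariables()
    variables.store("order_id", "A-1001")
    print(variables.get("order_id"))
    try:
        variables.get("invoice_id")  # never stored
    except KeyError as error:
        print("Error:", error)
```

The design point is the ordering: a step that stores the value has to run before any step that reads it.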

diff --git a/src/pages/docs/test-plans/email-configuration.md b/src/pages/docs/test-plans/email-configuration.md

index e2cd68c0..96707ee2 100644

--- a/src/pages/docs/test-plans/email-configuration.md

+++ b/src/pages/docs/test-plans/email-configuration.md

@@ -34,18 +34,19 @@ Setting up email notifications for test plans in Testsigma helps you stay inform

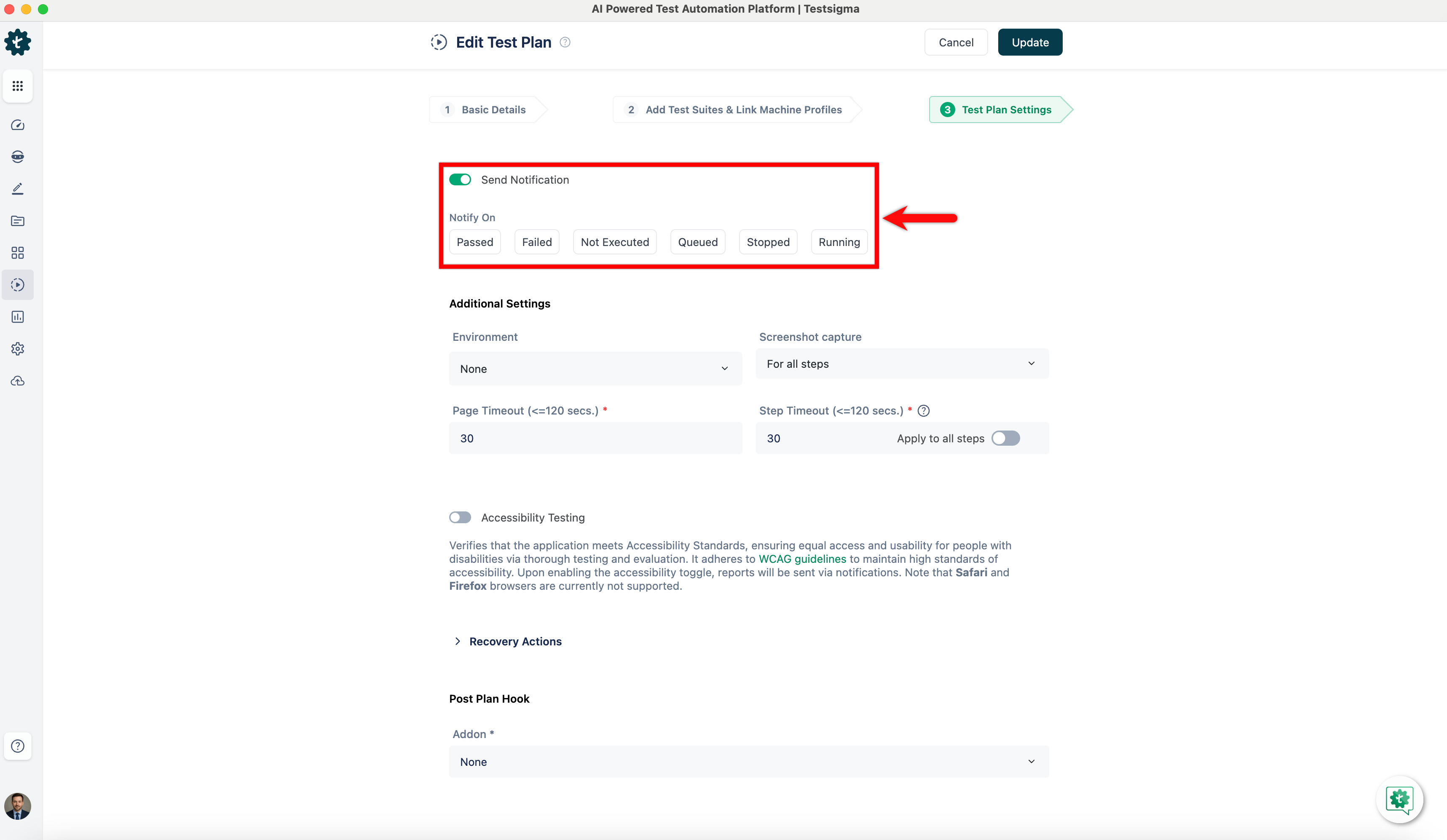

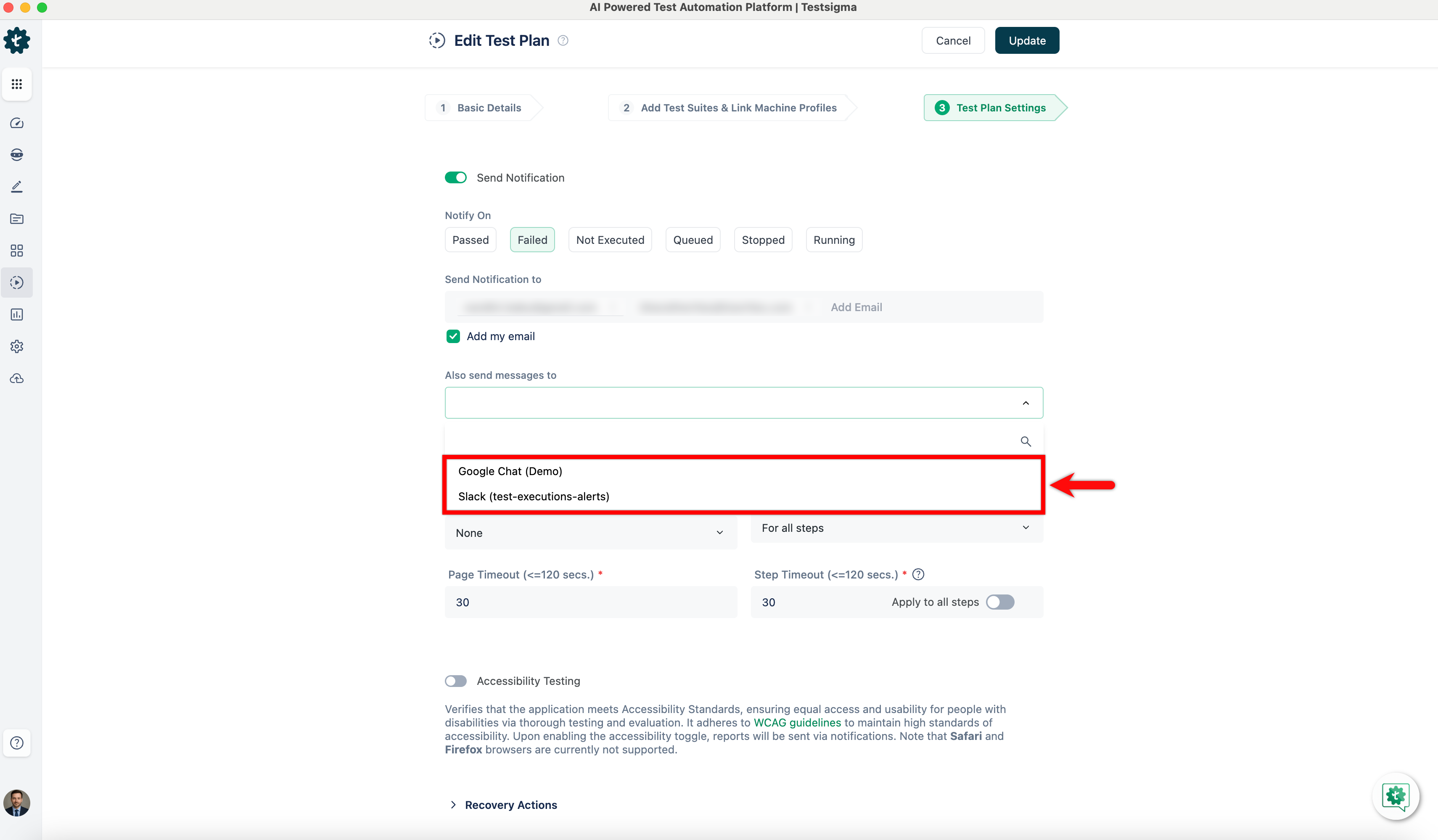

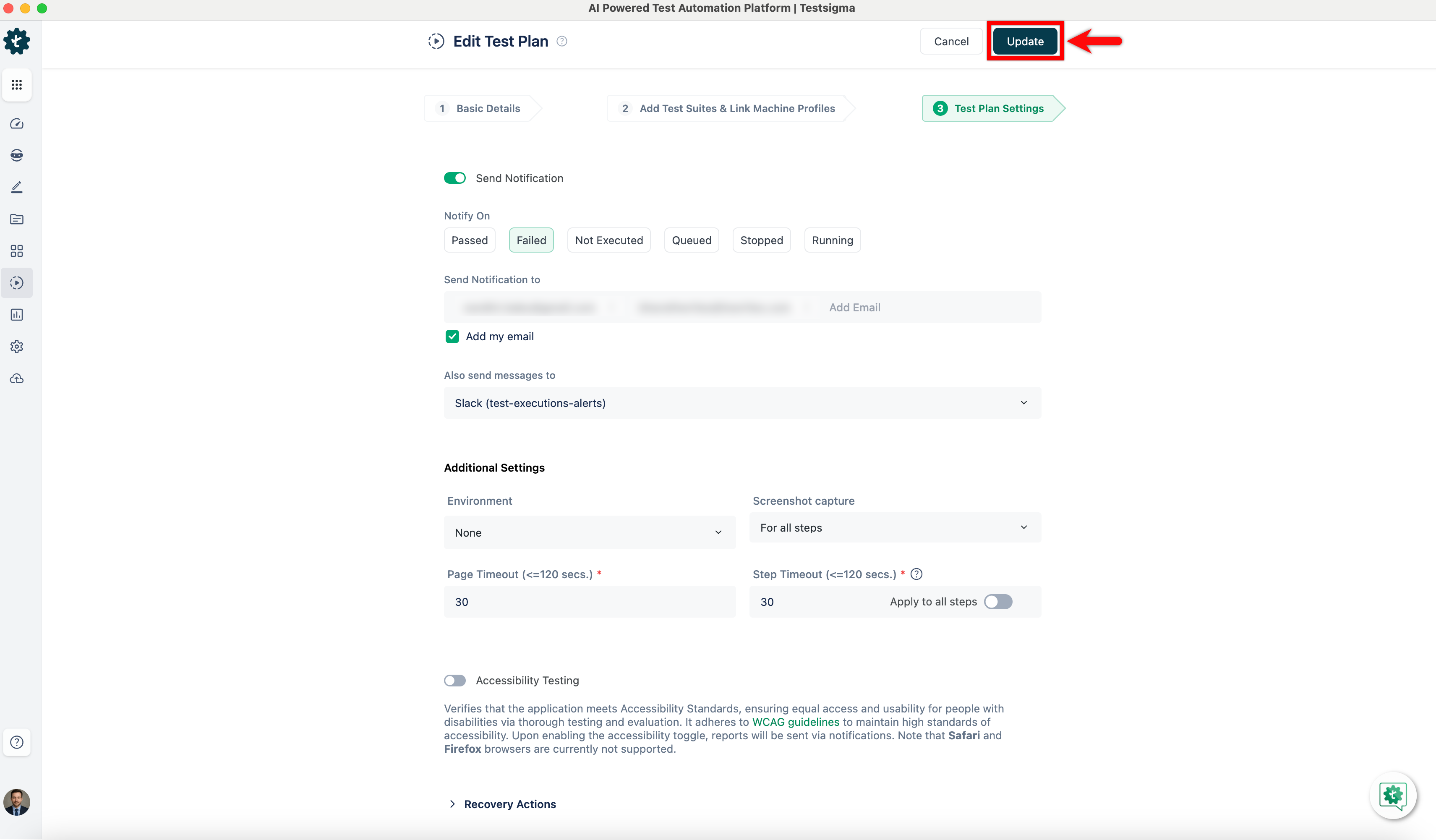

## **Configure email in Test Plan**

1. Navigate to **Test Plans** and create a new test plan or open an existing one.

+

2. On the **Create** or **Edit Test Plan** page, go to the **Test Plan Settings** tab.

+

3. Enable the **Send Notifications** toggle, then select when to be notified under Notify On:

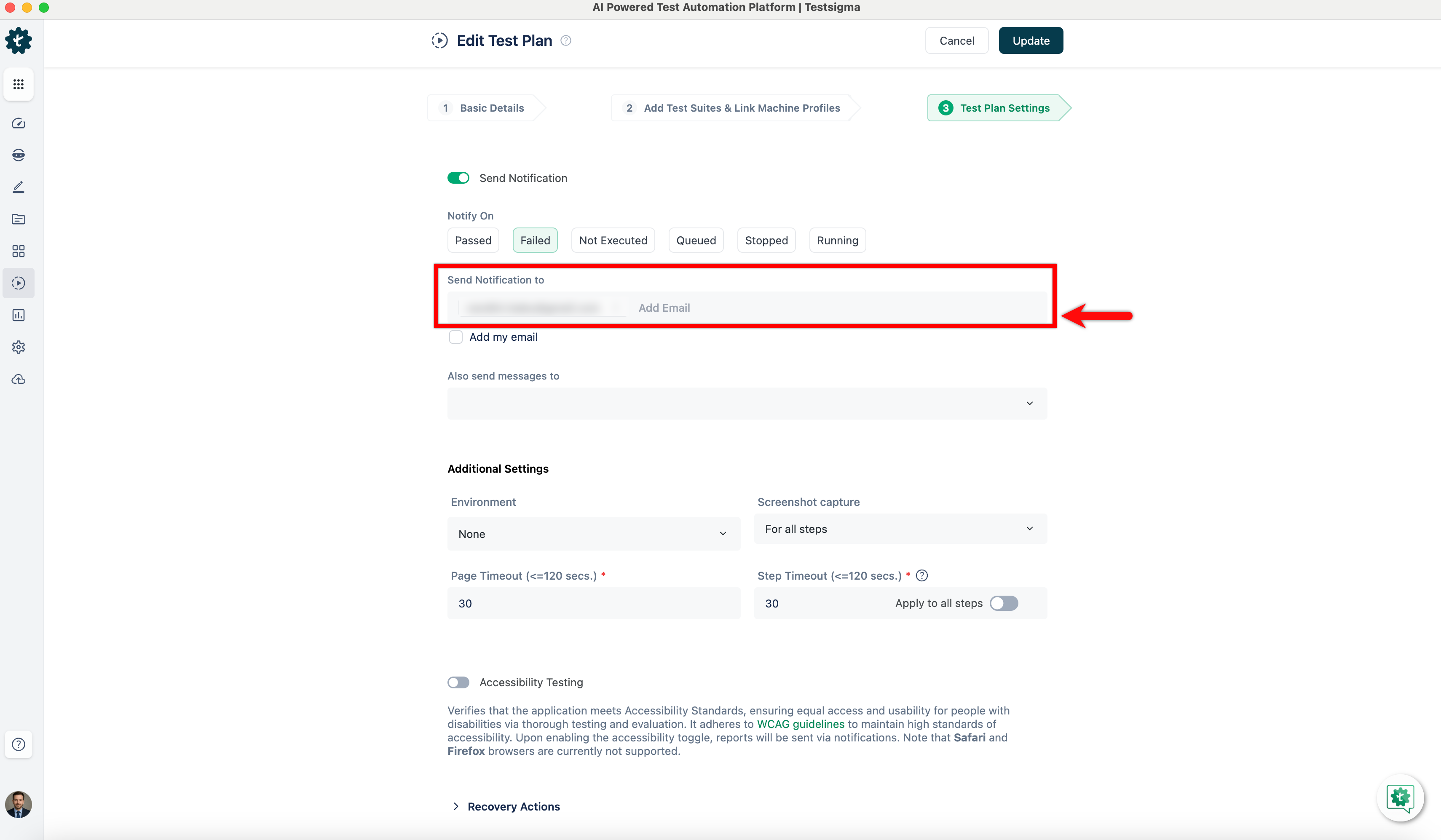

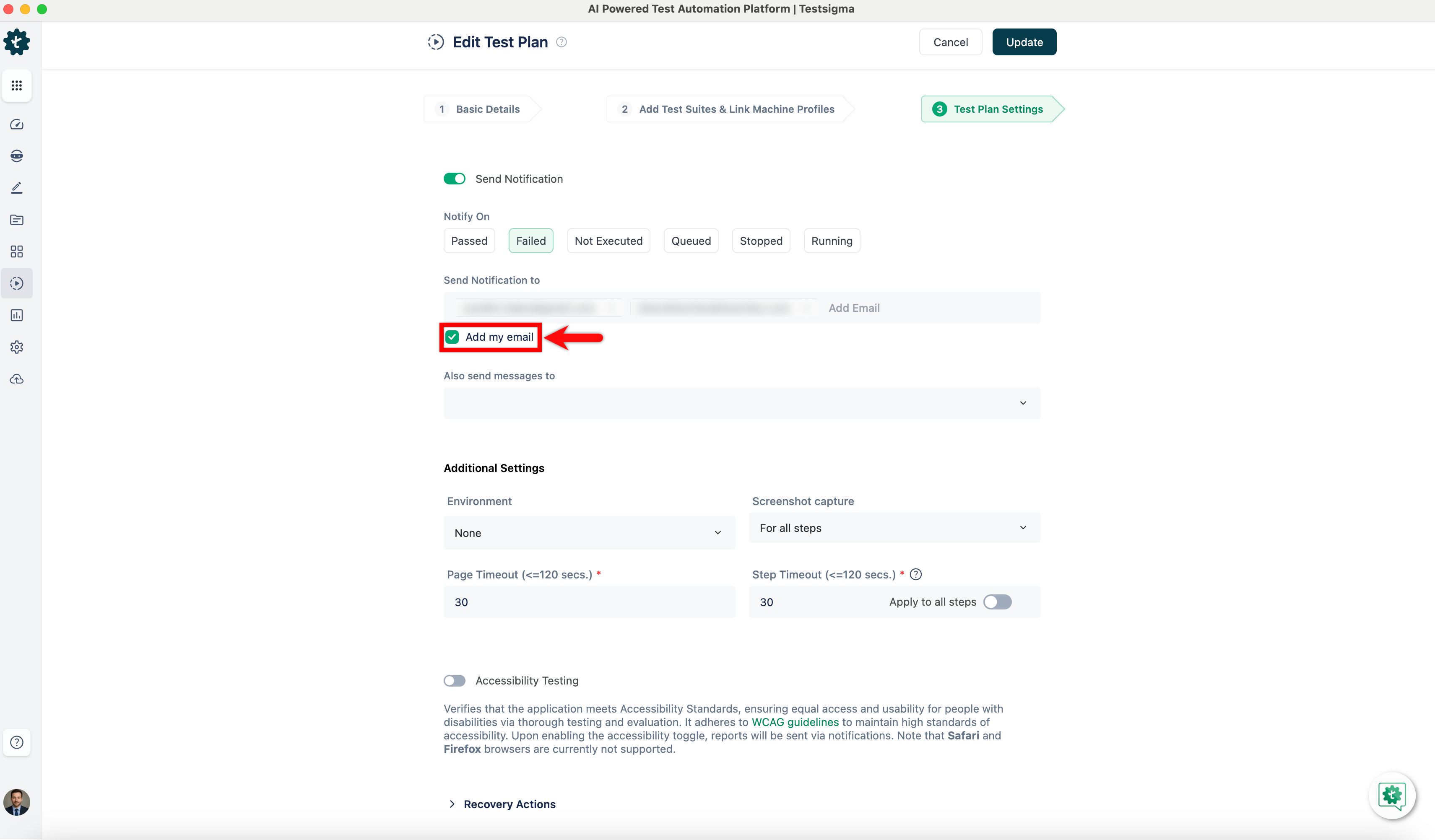

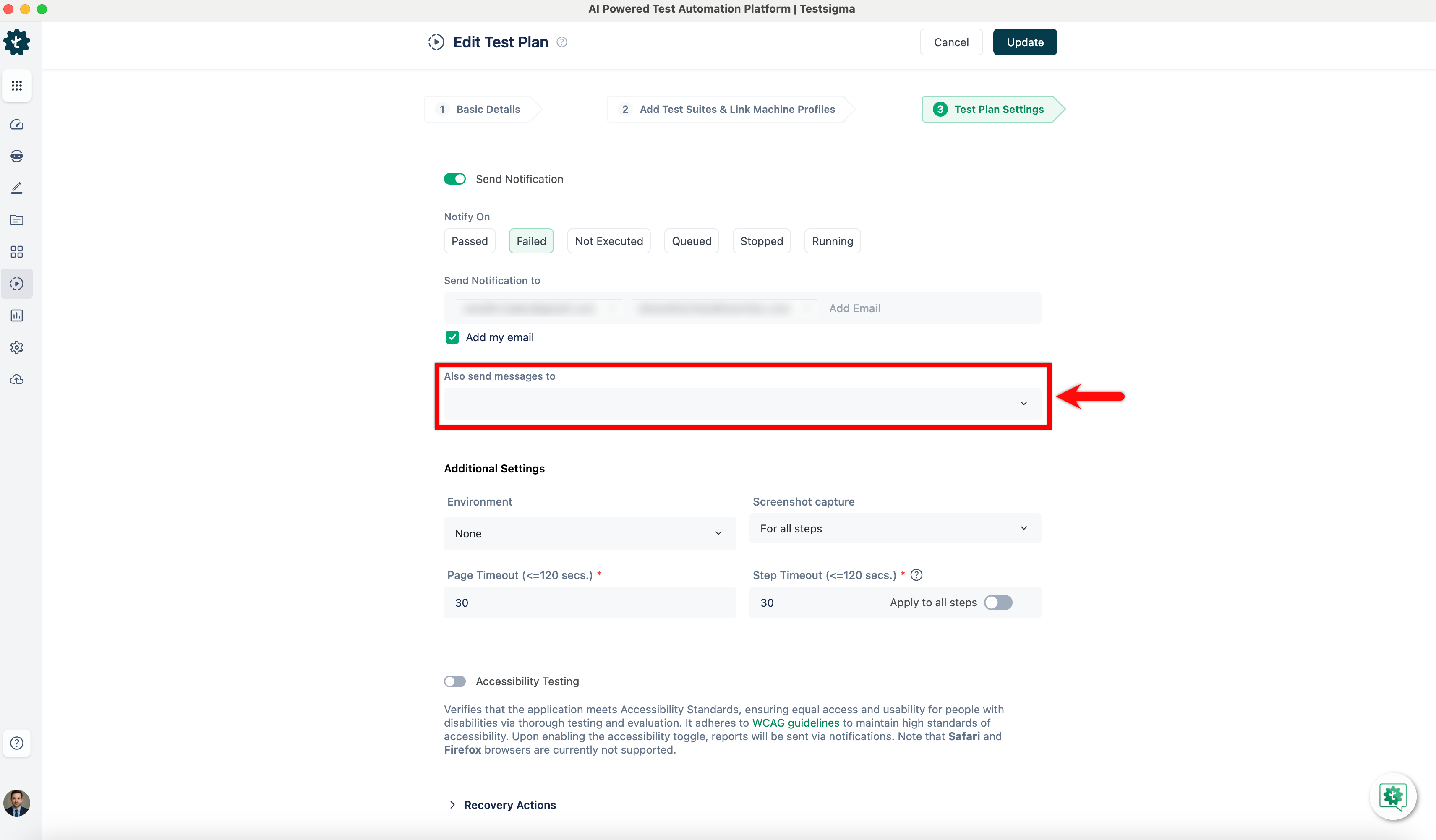

- - **Passed**

- - **Failed**

- - **Not Executed**

- - **Queued**

- - **Stopped**

- - **Running**

+

4. In the **Send Notification to** field, enter the **email address** where you want to receive the notifications.

+

5. If you want to use your Testsigma-registered email address, check the box labeled **Add my email**.

+

6. If you prefer to receive notifications via chat, click the drop-down list below **Also Send messages to**.

+

7. Select the chat channels where you want to receive the notifications, such as **Google Chat**, **Slack** or **Microsoft Teams**.

-8. Once all your preferences are set, click on **Create** or **Update** to save the changes.

+

+8. Once all your preferences are set, click on **Create** or **Update** to save the changes.

---

\ No newline at end of file

diff --git a/src/pages/docs/test-suites/overview.md b/src/pages/docs/test-suites/overview.md

index d209cc49..207c4027 100644

--- a/src/pages/docs/test-suites/overview.md

+++ b/src/pages/docs/test-suites/overview.md

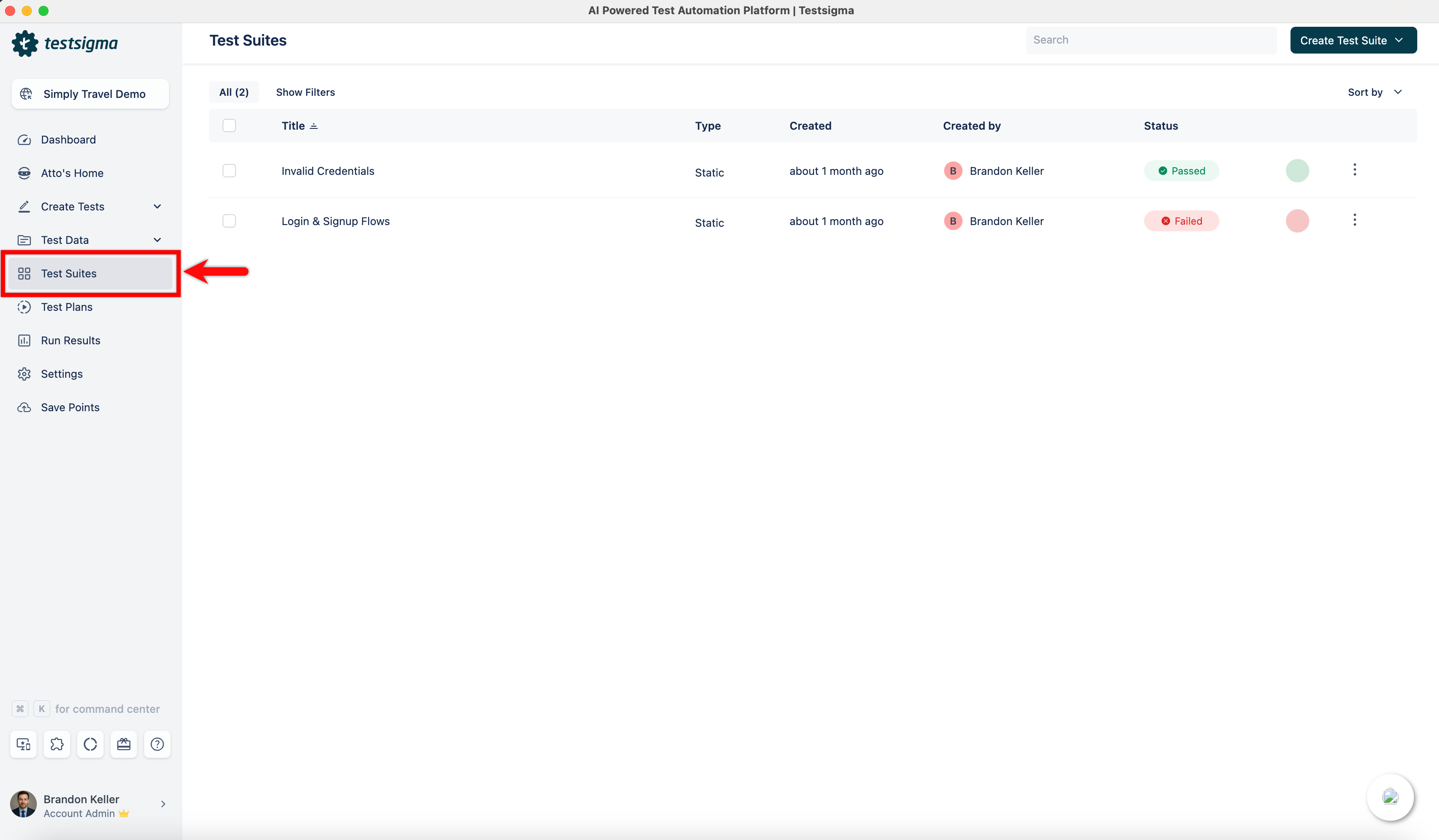

@@ -45,26 +45,53 @@ Organise your test cases into test suites based on common functionalities or sce

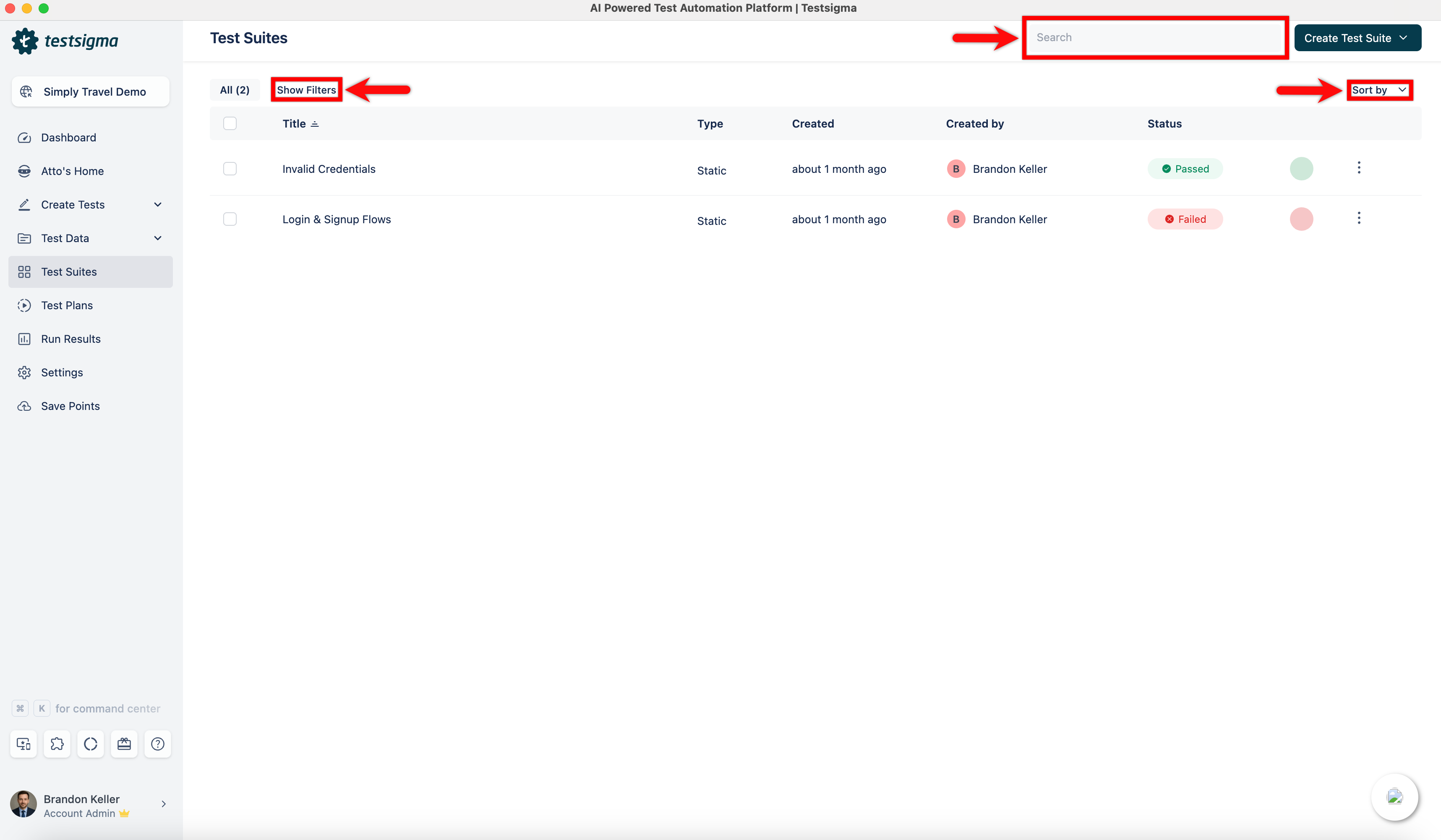

On the Test Suites List page, you will have the below options:

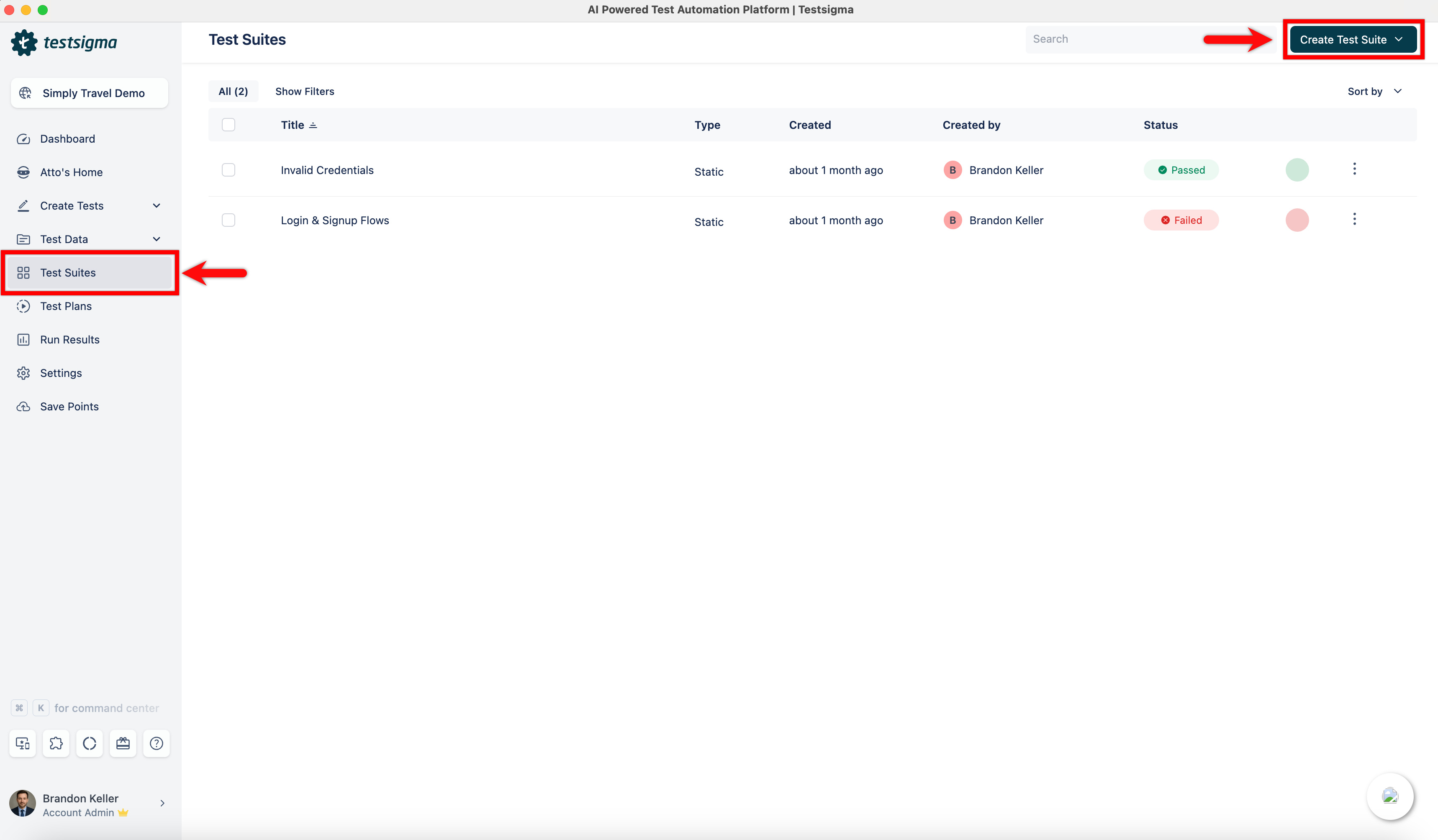

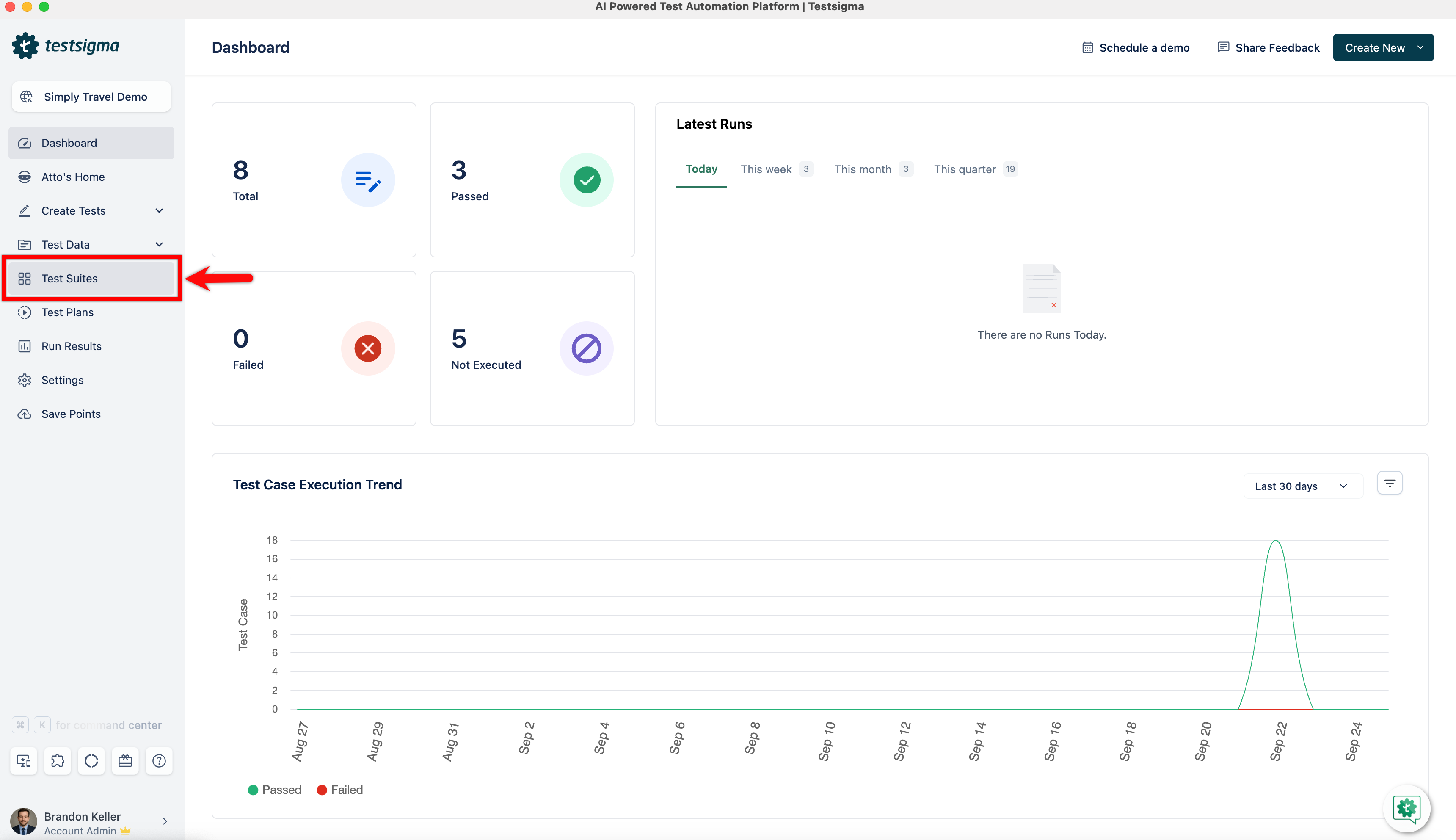

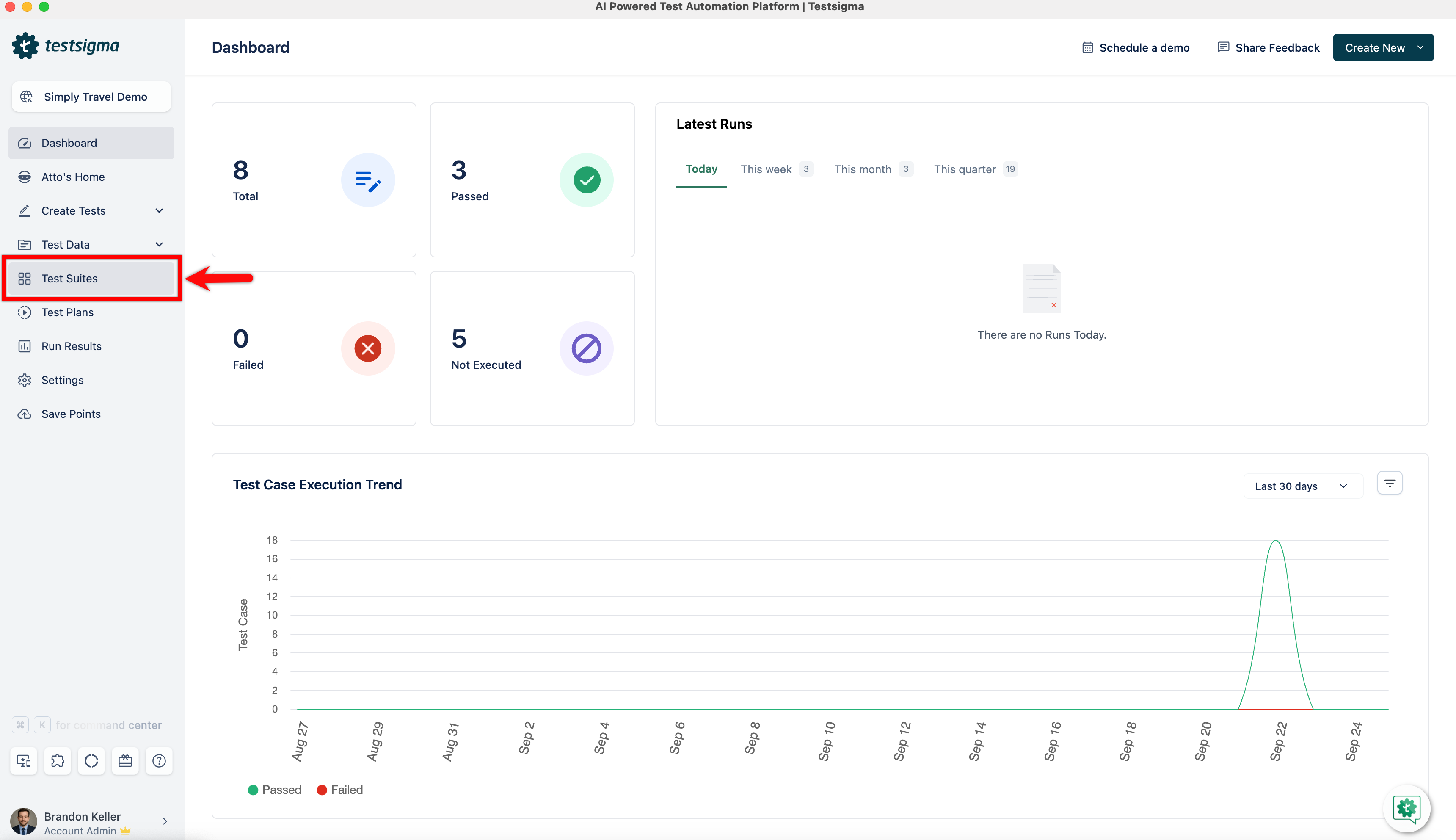

1. Navigate to **Test Suites** in the left-side navbar.

+

+

2. You can easily manage test suites on the **Test Suites** list page by **sorting**, **filtering**, or **searching**. The page displays test suites with **titles**, **types**, **creation dates**, **creators**, and **statuses**.

-3. Click **Create Test Suite** in the top right corner of the screen.

+

+

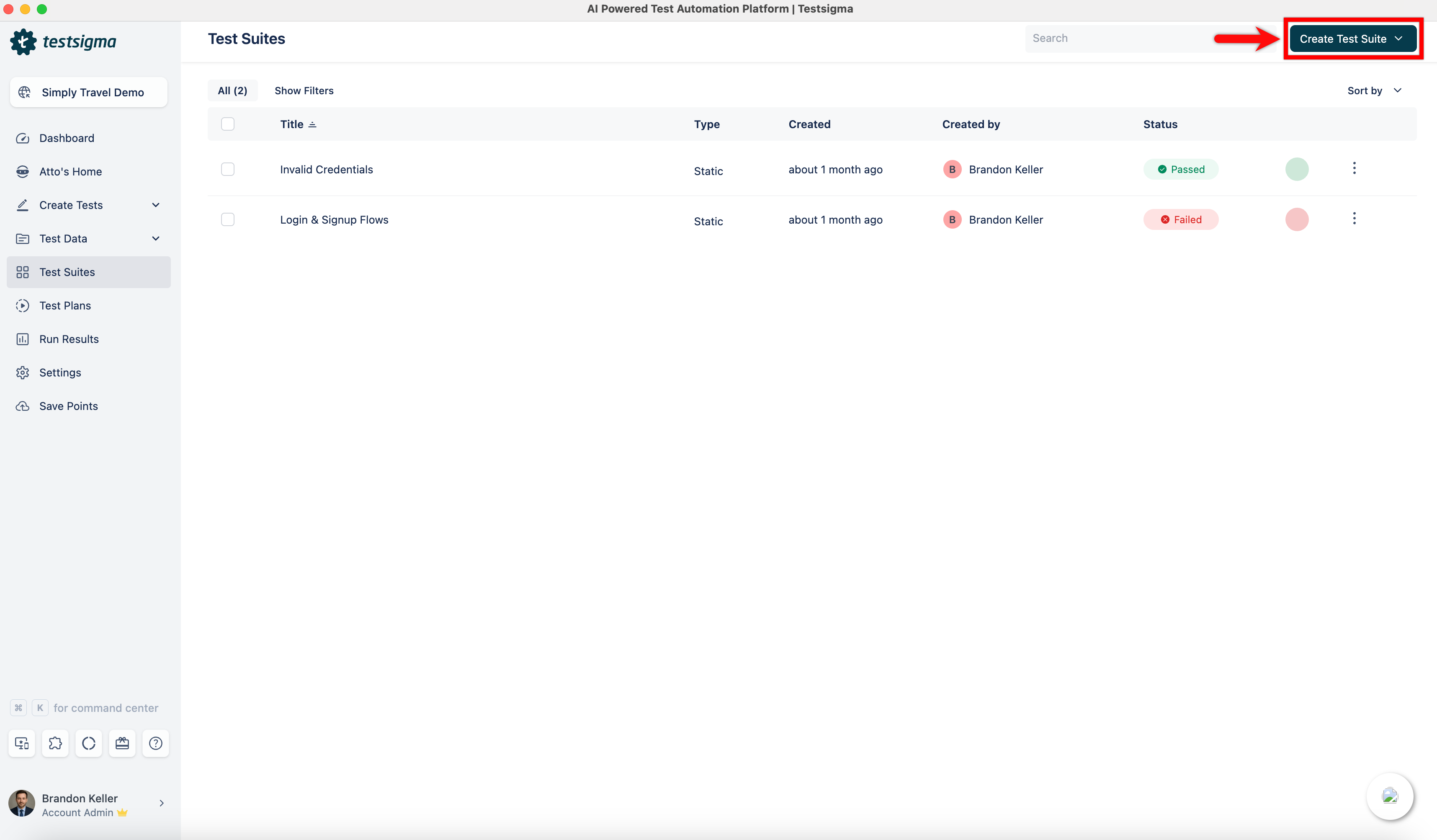

+3. Click **Create Test Suite** in the top right corner of the screen.

+

---

## **Creating a Test Suite**

-1. Navigate to **Test Suites** in the left-side navbar. Click the **Create Test Suite** button in the top right corner of the Test Suites List page. Provide below details to **Create Test Suite**:

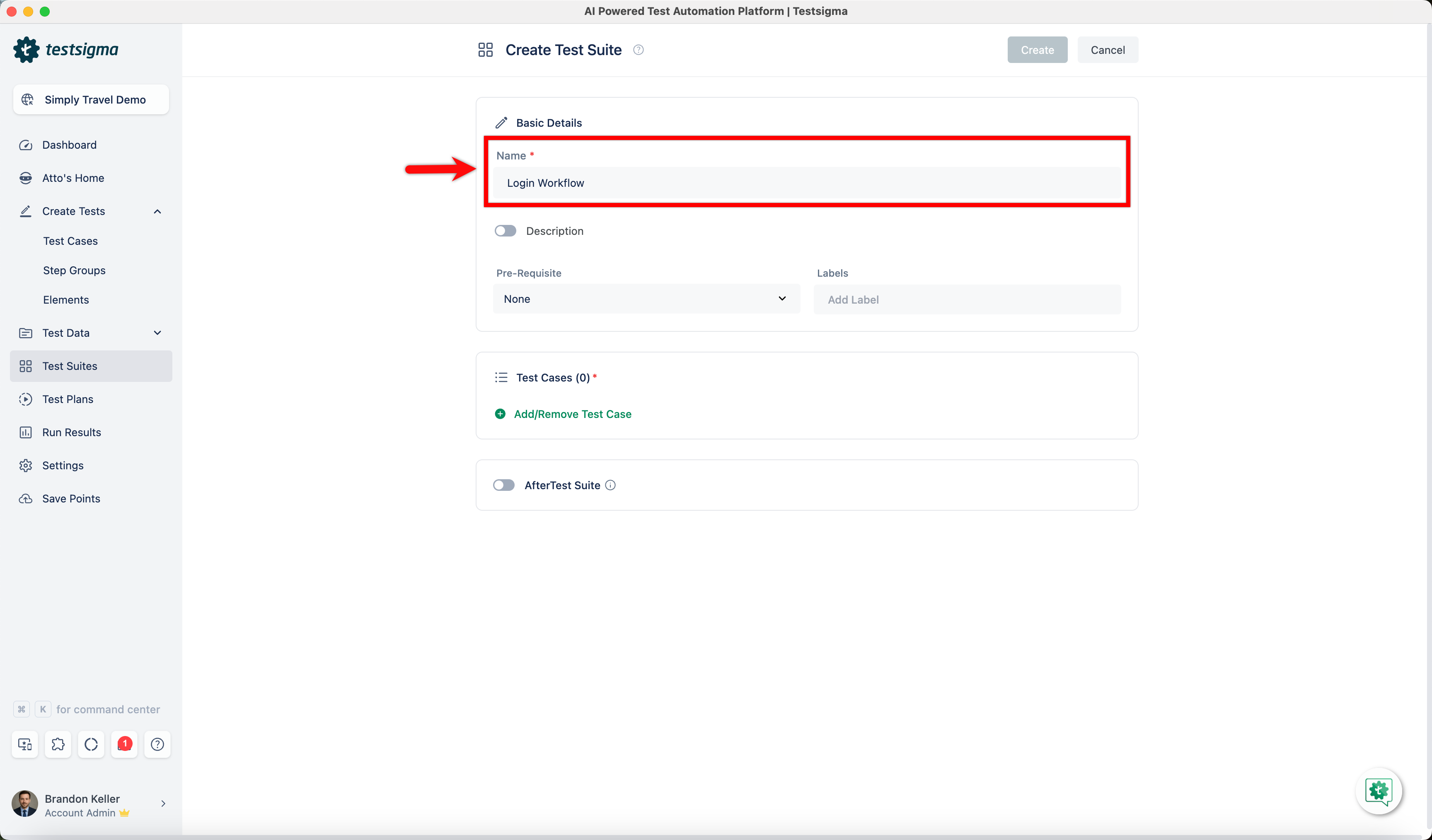

+1. Navigate to **Test Suites** in the left-side navbar. Click the **Create Test Suite** button in the top right corner of the Test Suites List page.

+

+ Provide below details to **Create Test Suite**:

+

2. **Name(Required)**: Enter the **title** of the Test Suite in the Name field on the Create Test Suite page.

+

+

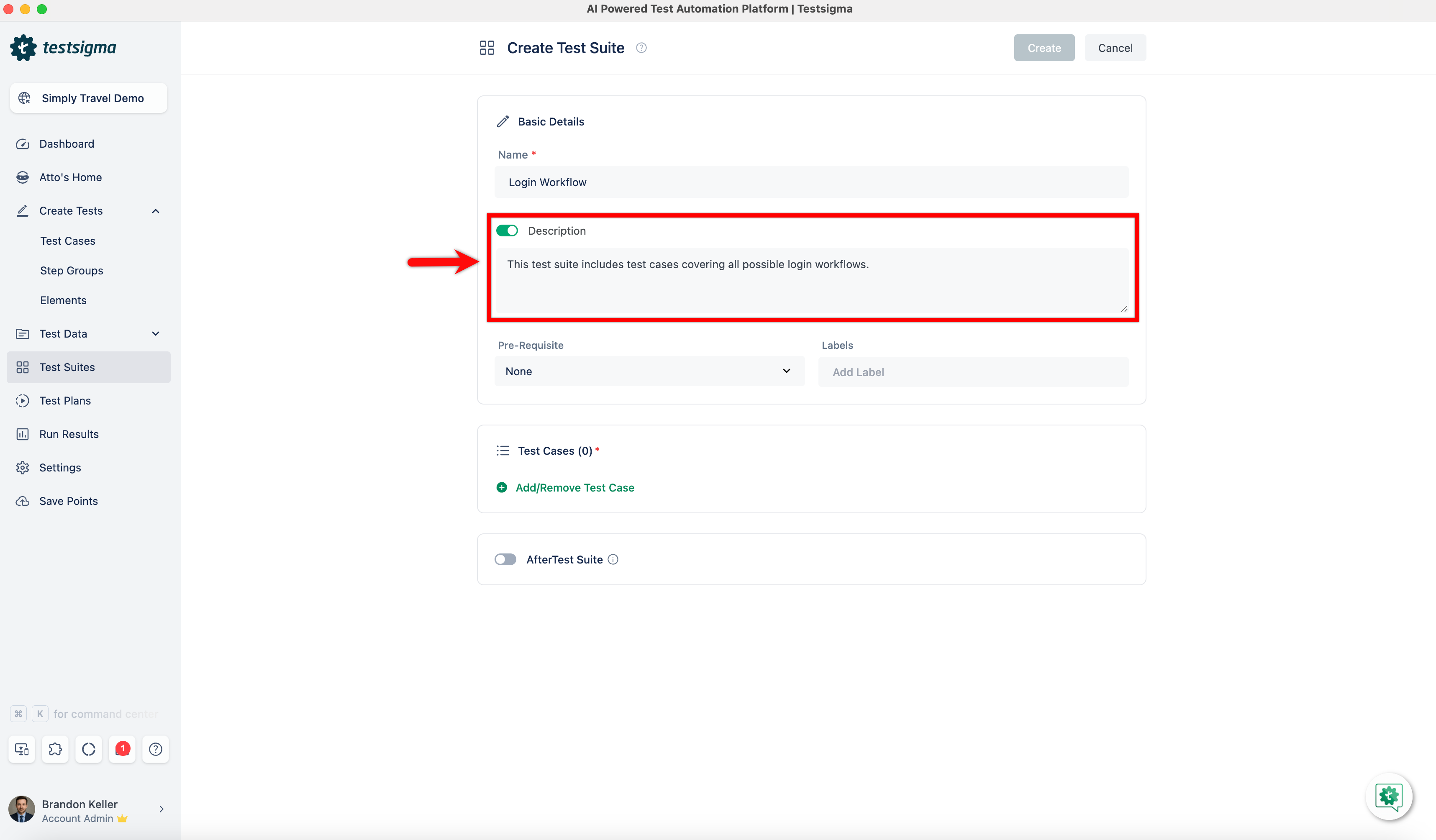

3. **Description**: You can enable the toggle switch for the **Description** and provide a brief description explaining the test suite's purpose and scope. This will help your team members understand the objectives of the test suite.

+

+

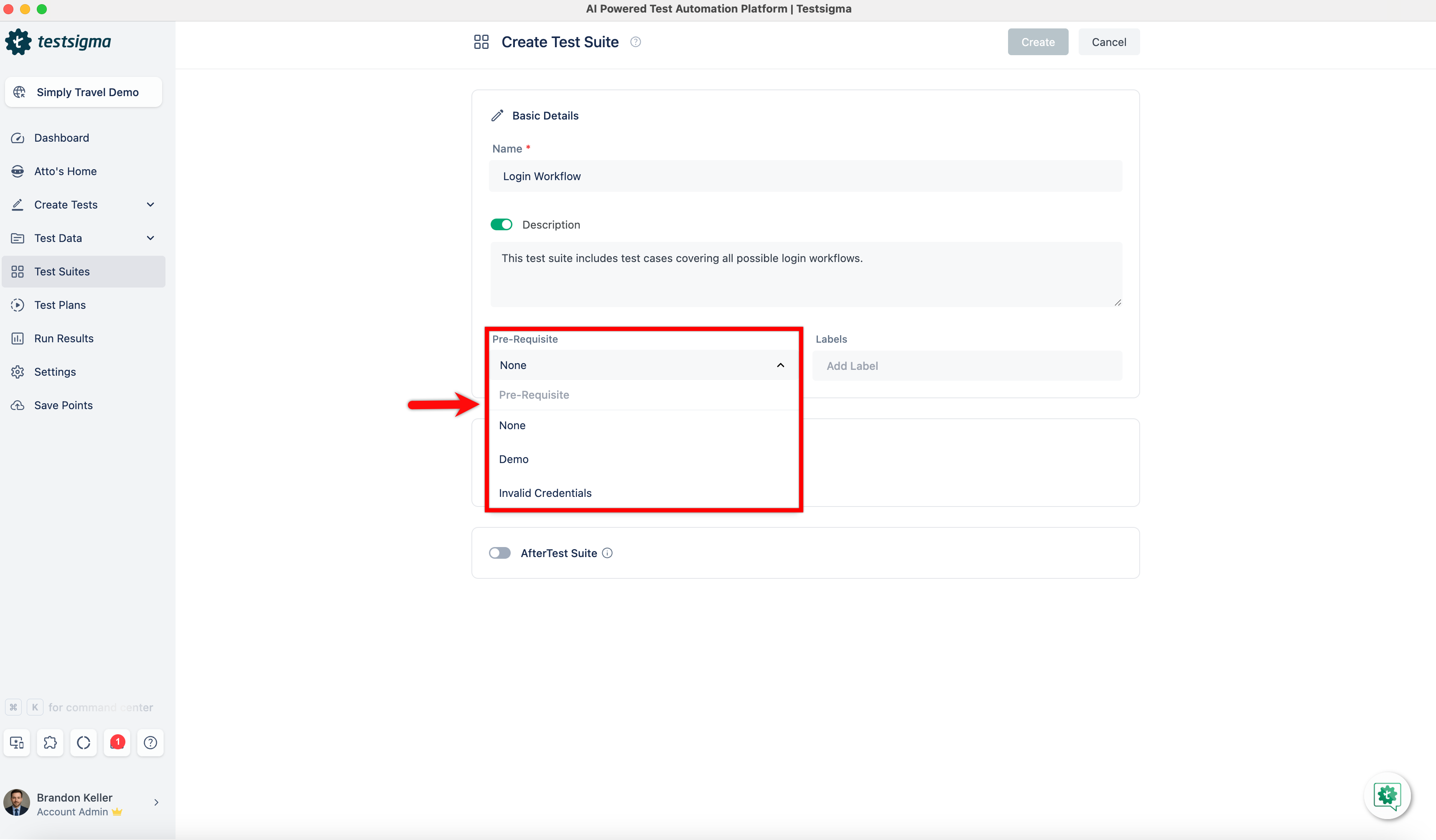

4. **Pre-Requisite**: Specify any **Pre-Requisite** for executing the test suite, such as specific test data, system configurations, or test environment setup.

+

+

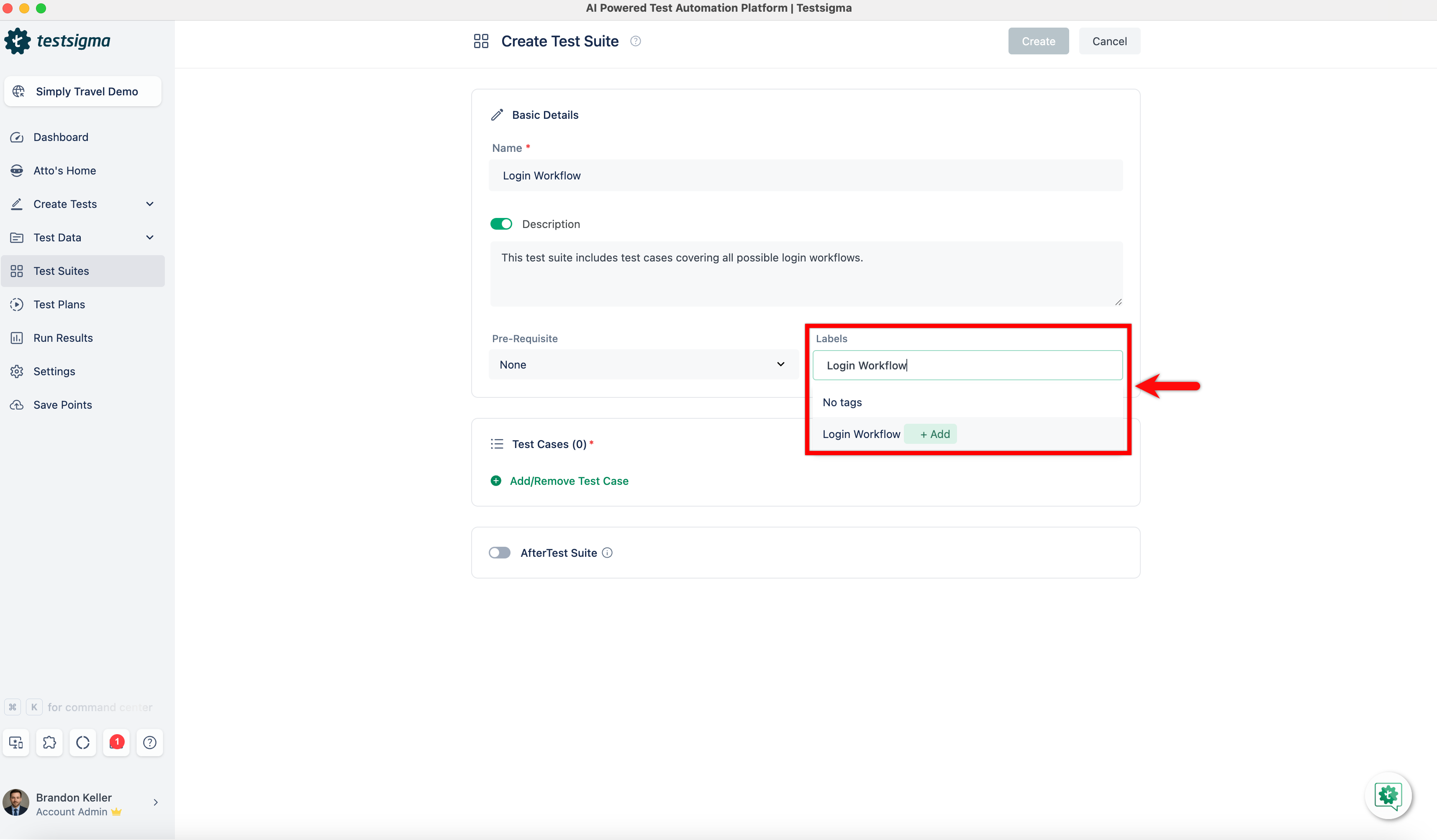

5. **Label**: You can assign a **Label** to the test suite. This will allow easier management of multiple test suites, as labels can be used for categorisation and filtering.

+

+

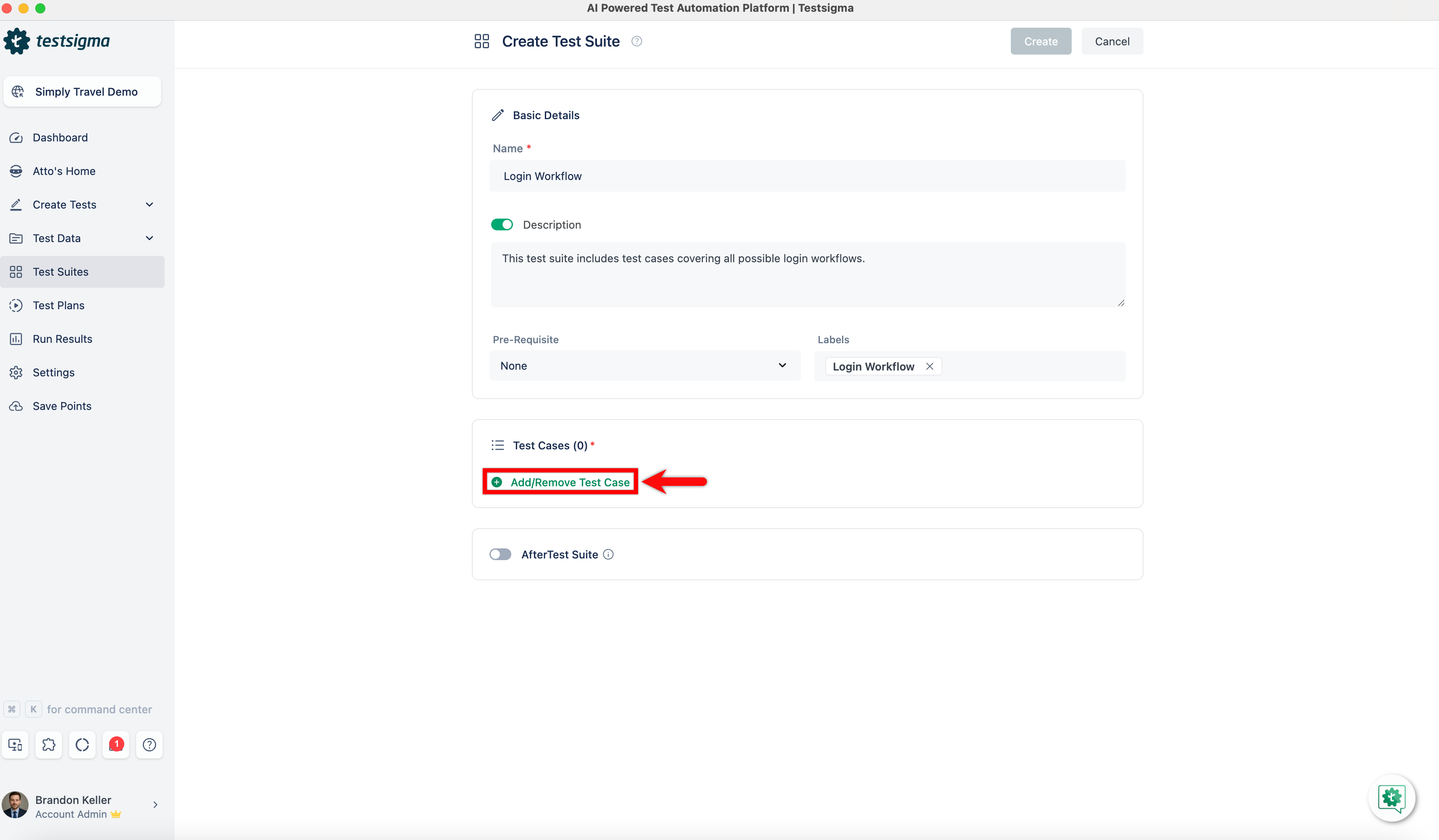

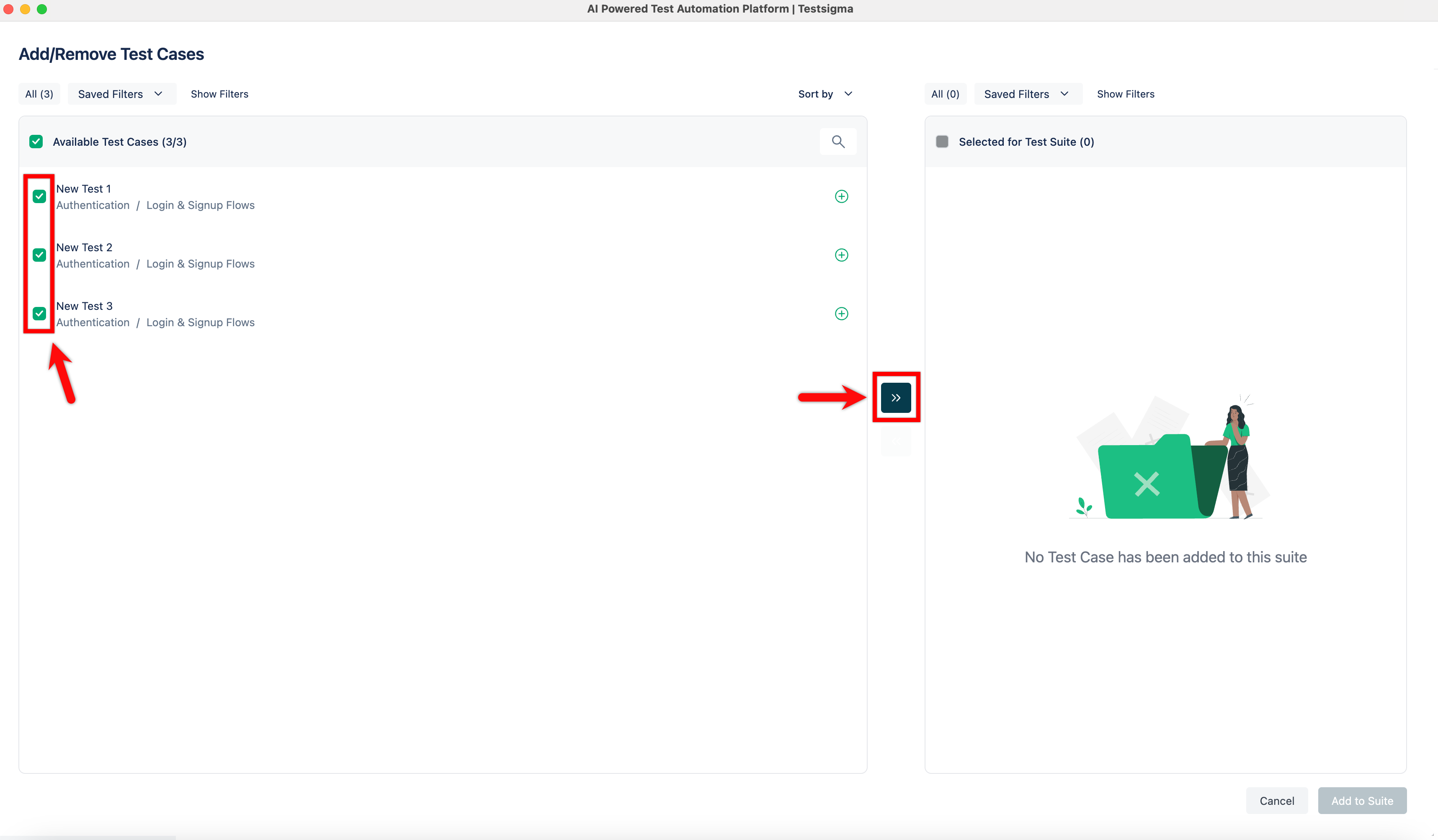

6. **Add/Remove Test Cases**: Click the **Add/Remove Test Cases** button to add or remove test cases from the Test Suite. An **Add/Remove Test Case** overlay will appear, allowing you to select test cases from the **Available Test Case** list by checking the box next to them or clicking the **+** icon to select individual test cases.

+

+

7. **Add to Suite**: Click the **>>** icon to move the desired test cases from **Available Test Case** to **Selected for Test Suite**. You can drag and drop the test cases to change their order in the Test Suite. Finally, add the selected test cases to the Test Suite by clicking the **Add to Suite** button. To remove test cases from the Test Suite, select them from **Selected Test Cases** and click the **<<** icon.

+

+

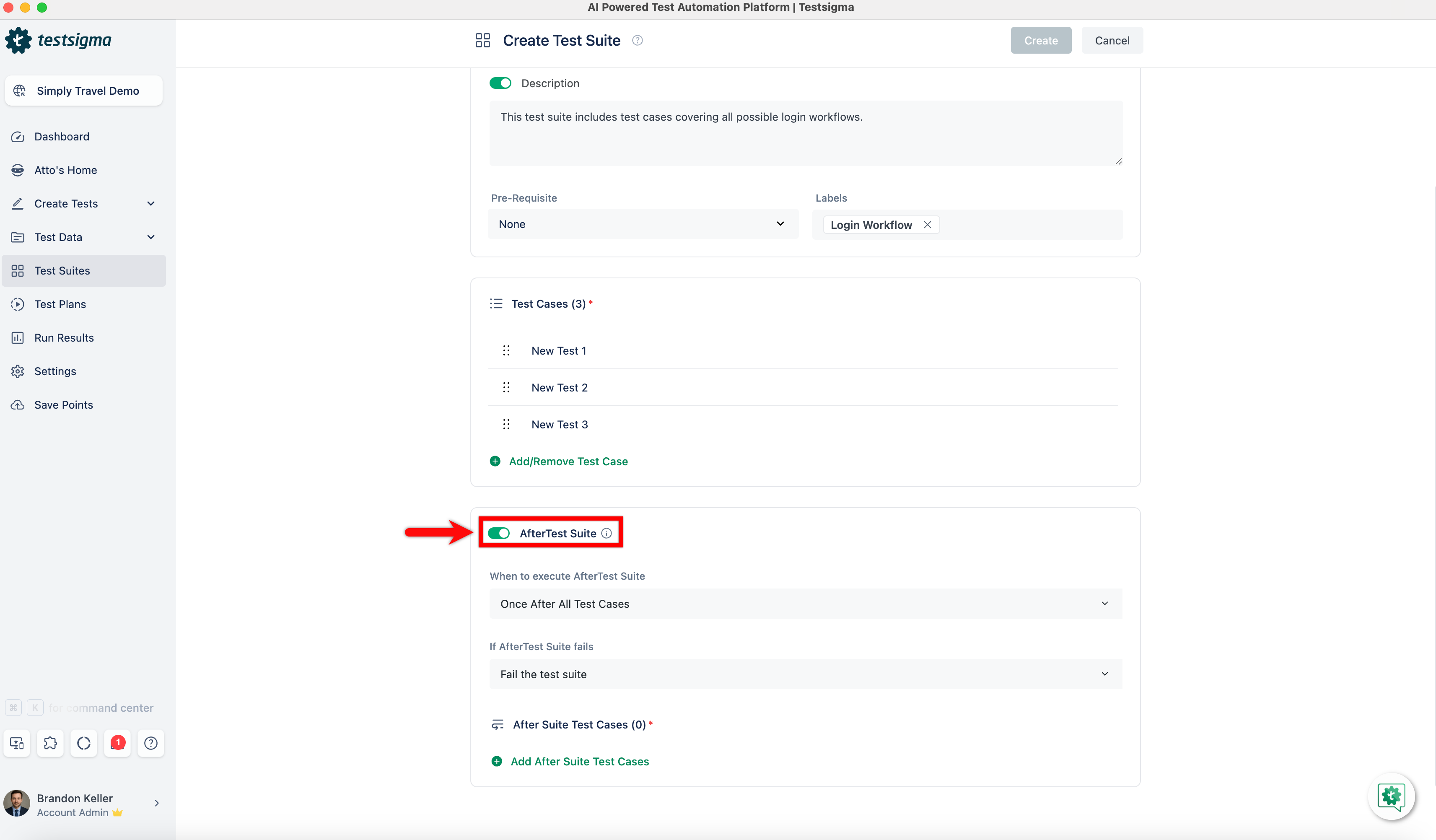

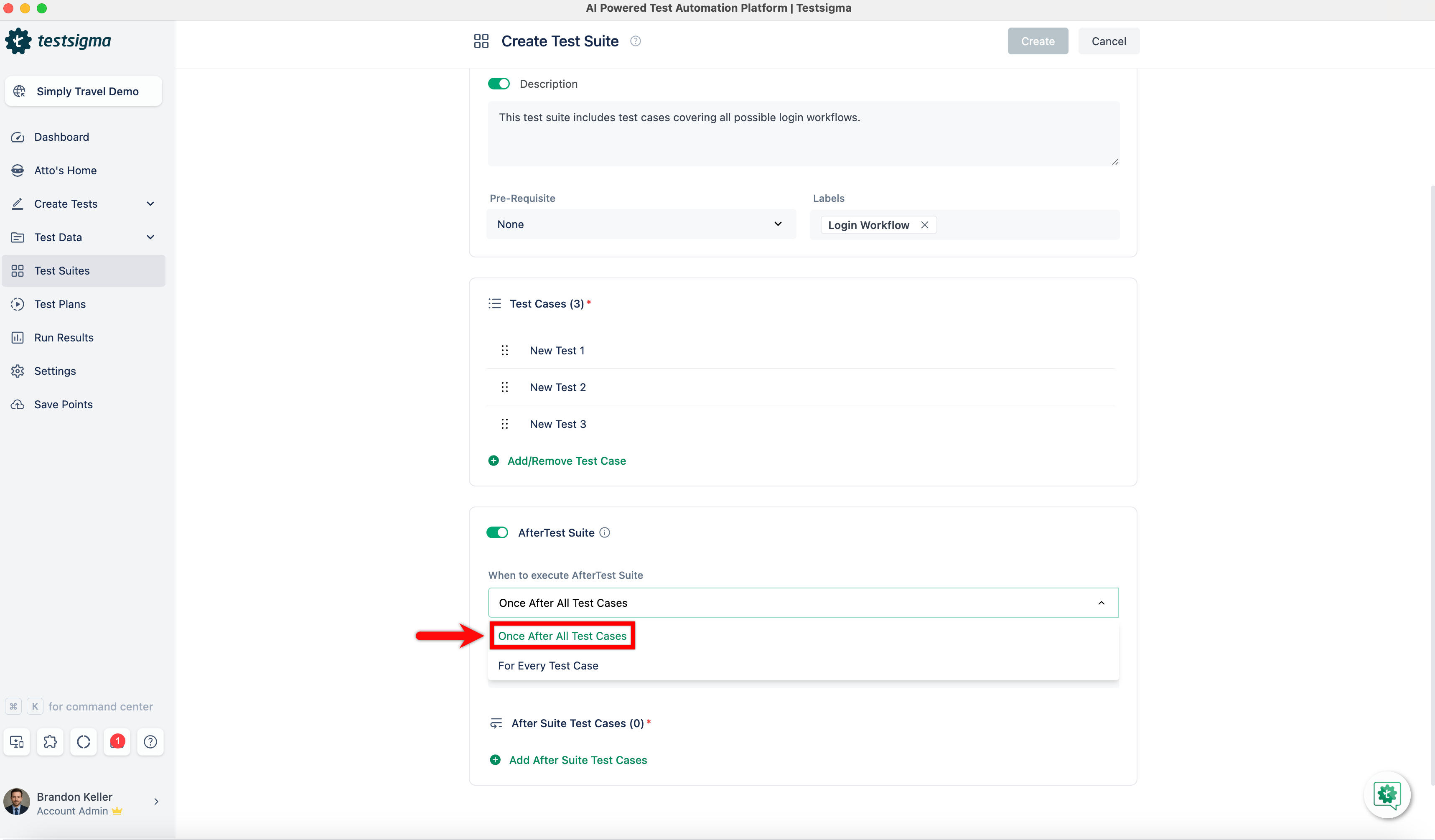

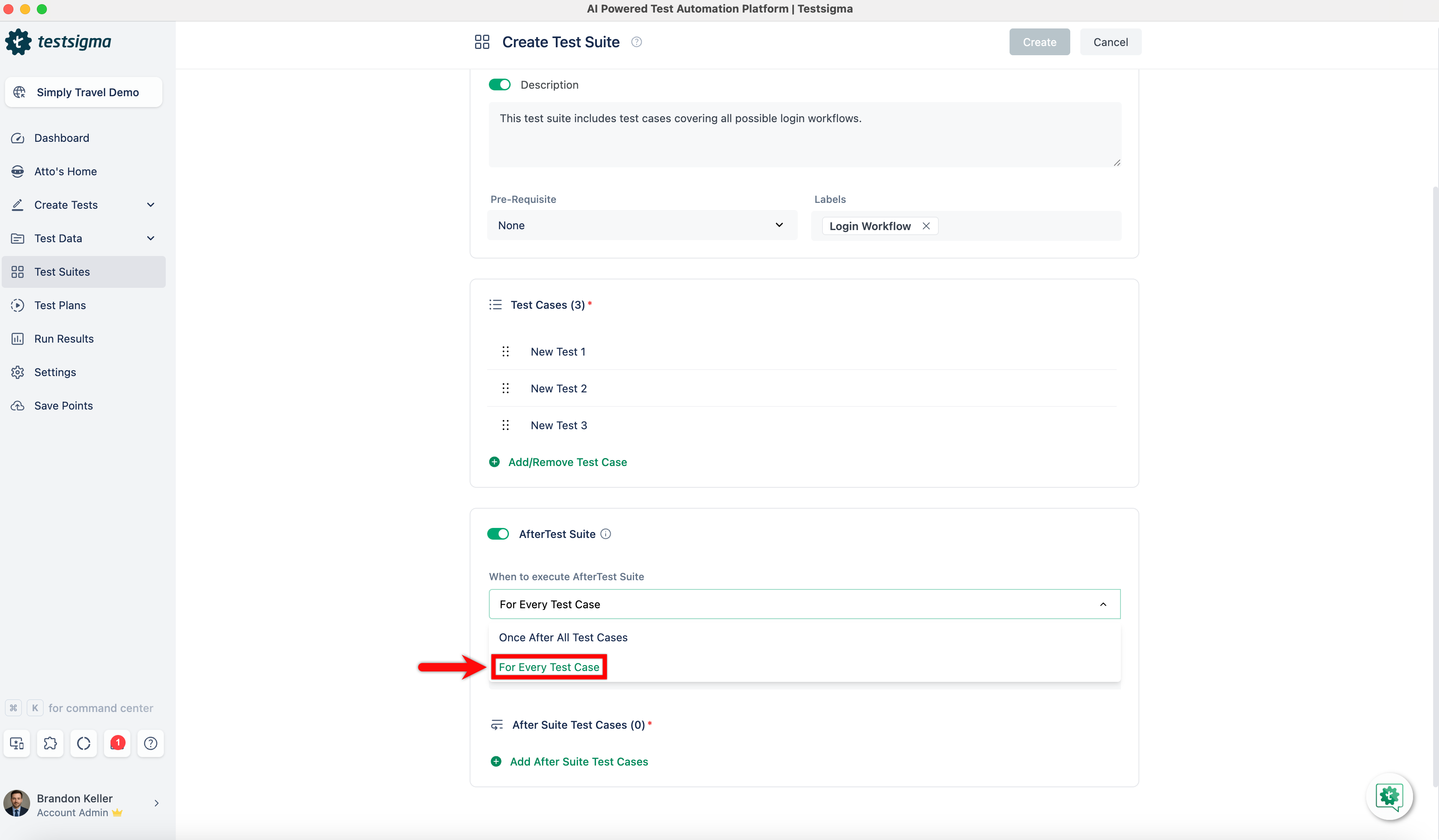

8. **After Test Suite**: Enable the **After Test Suite** toggle to define actions or test cases that execute after all test cases within the suite have completed. You can use this feature to perform clean-up or post-test activities.

+

+

- **When to execute After Test Suite - Once After All Test Cases**: Select this option to execute the actions or test cases within the **After Test Suite** section only once after all the test cases have completed execution.

+

- **When to execute After Test Suite - For Every Test Case**: Select this option to execute the actions or test cases within the **After Test Suite** section for every individual test case within the suite after each test case is executed.

+

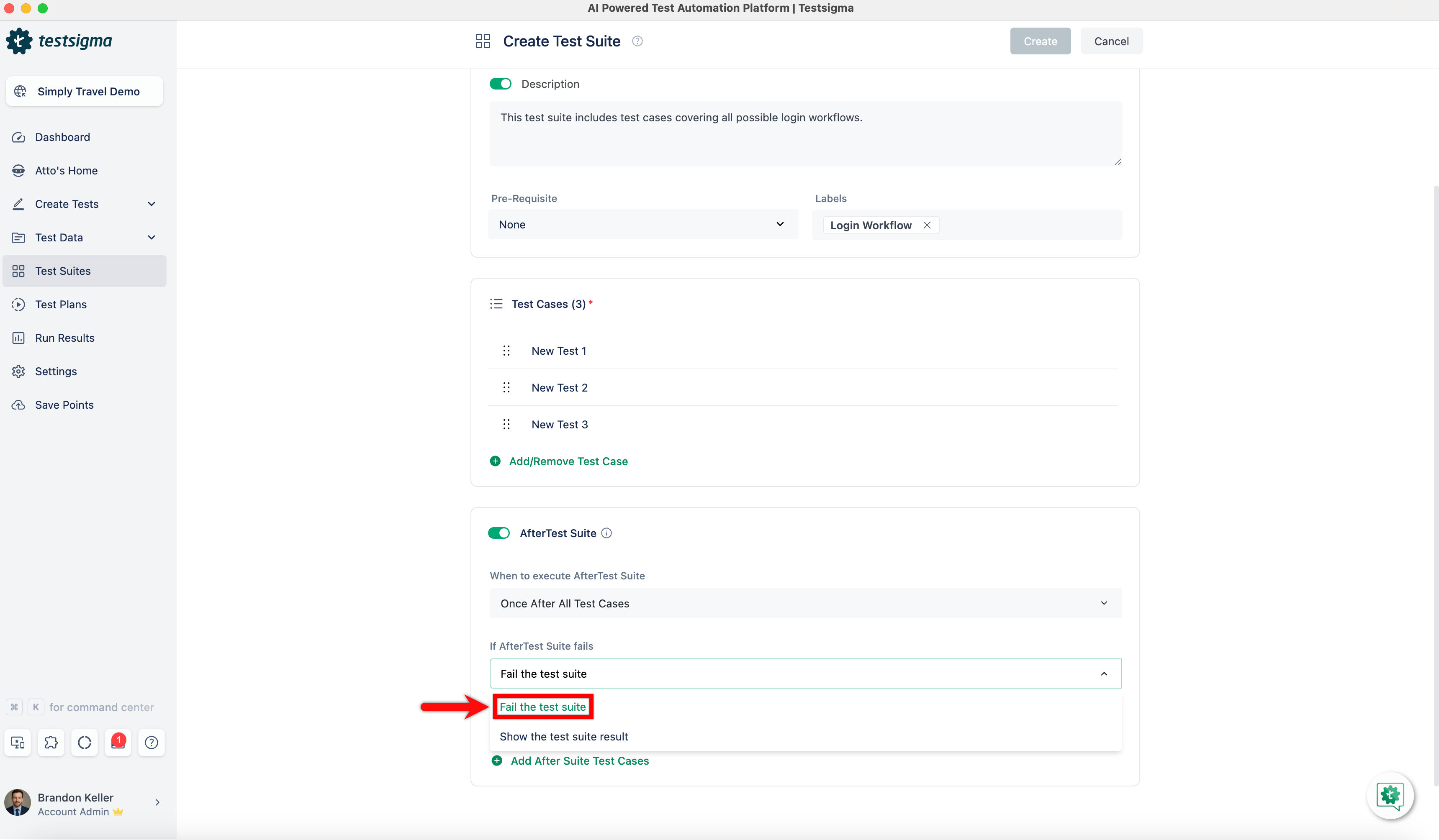

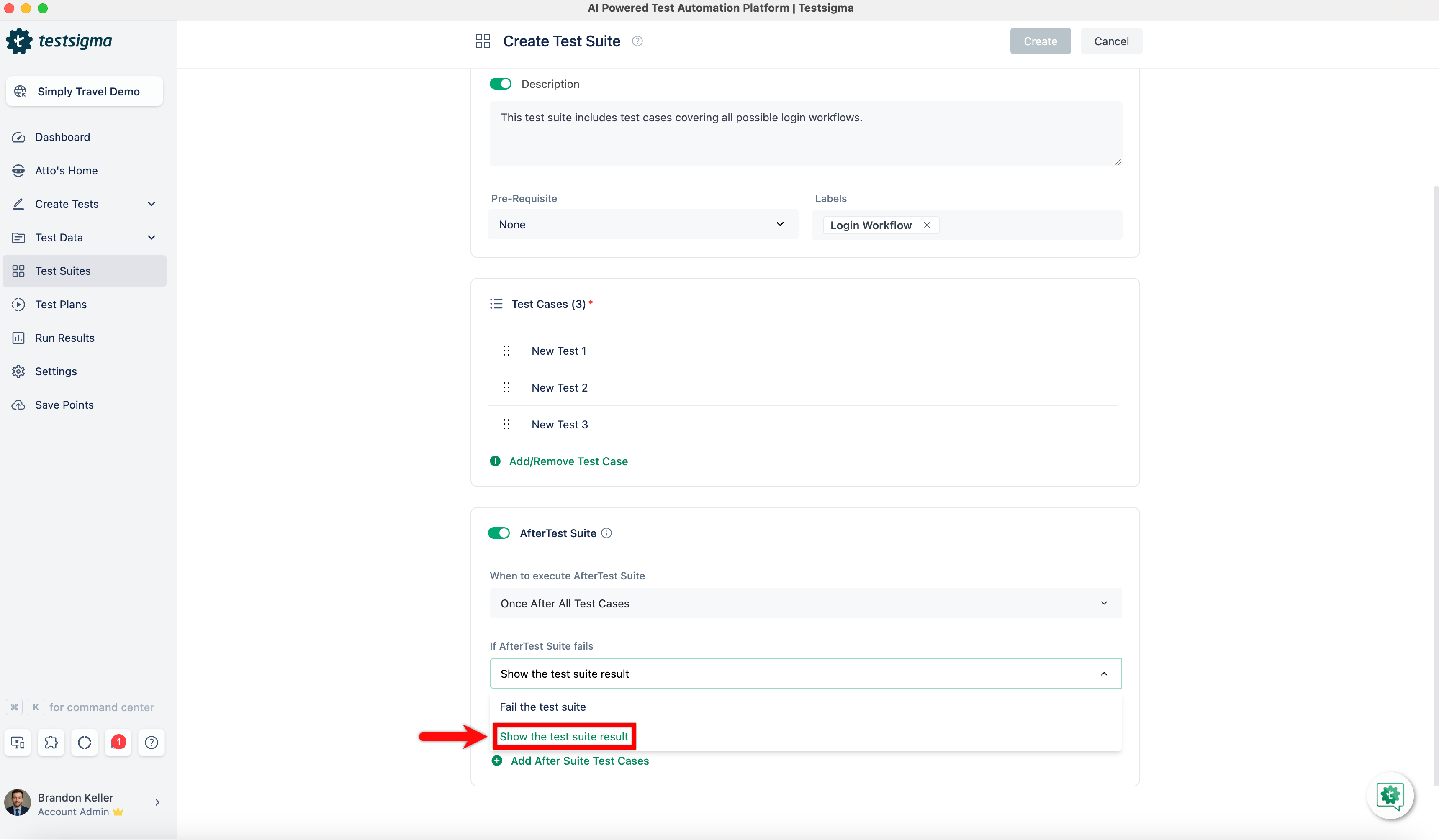

- **If After Test Suite fails - Fail the test suite**: Choose this option to mark the test suite as failed during execution if any of the actions or test cases within the **After Test Suite** section fails.

+

- **If After Test Suite fails - Show the test suite result**: Select this option to display the test suite result even if any of the actions or test cases within the **After Test Suite** section fail during execution.

-9. **Add After Suite Test Cases**: You can add specific test cases within the After Test Suite section that will execute after completing all the other test cases in the suite.

+

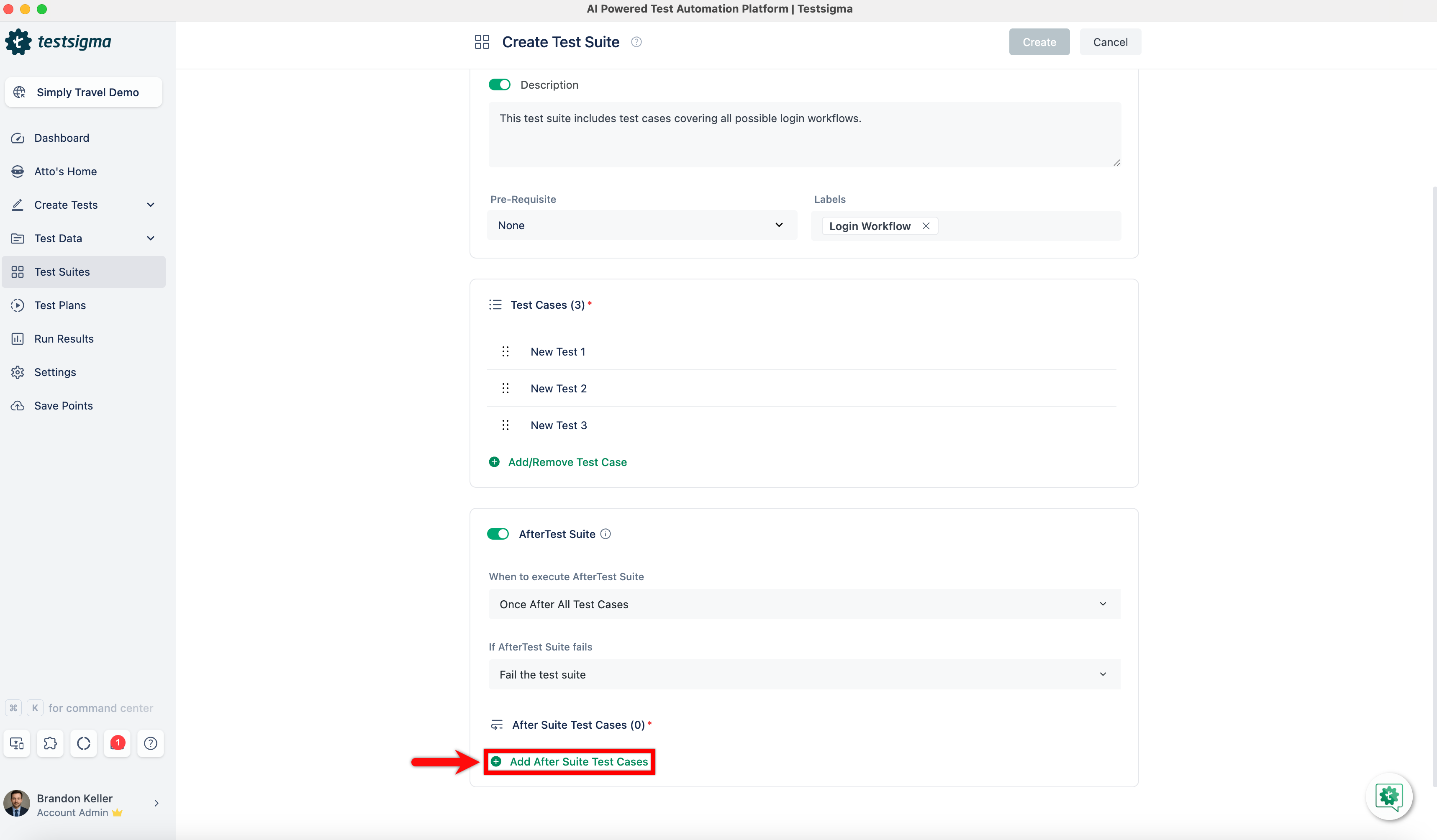

+9. **Add After Suite Test Cases**: You can add specific test cases within the After Test Suite section that will execute after completing all the other test cases in the suite.

+

[[info | NOTE:]]

| Carefully consider the order and dependencies between test cases when defining the After Test Suite section to ensure effectiveness and that it does not impact the subsequent test cases or cause environmental conflicts.
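
The **After Test Suite** options above can be sketched in Python as follows. This is only an illustration under stated assumptions, not Testsigma's execution engine: `run_suite`, `every_test_case`, and `fail_on_after_failure` are hypothetical names, and the sketch assumes that **Show the test suite result** means the suite result reflects the regular test cases only.

```python
from typing import Callable, List


def run_suite(
    test_cases: List[Callable[[], bool]],
    after_suite: Callable[[], bool],
    every_test_case: bool = False,
    fail_on_after_failure: bool = True,
) -> bool:
    """Run test cases plus the After Test Suite hook; return the suite result."""
    case_results: List[bool] = []
    after_results: List[bool] = []

    for test_case in test_cases:
        case_results.append(test_case())
        if every_test_case:
            # "For Every Test Case": run the hook after each test case.
            after_results.append(after_suite())

    if not every_test_case:
        # "Once After All Test Cases": run the hook a single time at the end.
        after_results.append(after_suite())

    suite_passed = all(case_results)
    if fail_on_after_failure and not all(after_results):
        # "Fail the test suite": a failing hook fails the whole suite.
        suite_passed = False
    return suite_passed


if __name__ == "__main__":
    cleanup_calls = []

    def cleanup() -> bool:
        cleanup_calls.append("cleanup")
        return True

    cases = [lambda: True, lambda: True, lambda: True]
    print(run_suite(cases, cleanup, every_test_case=True), len(cleanup_calls))
```

In this sketch the clean-up hook runs three times in the per-test-case mode and once otherwise, which is the trade-off to weigh when clean-up is slow or changes shared state.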

@@ -73,27 +100,42 @@ On the Test Suites List page, you will have the below options:

## **Editing a Test Suite**

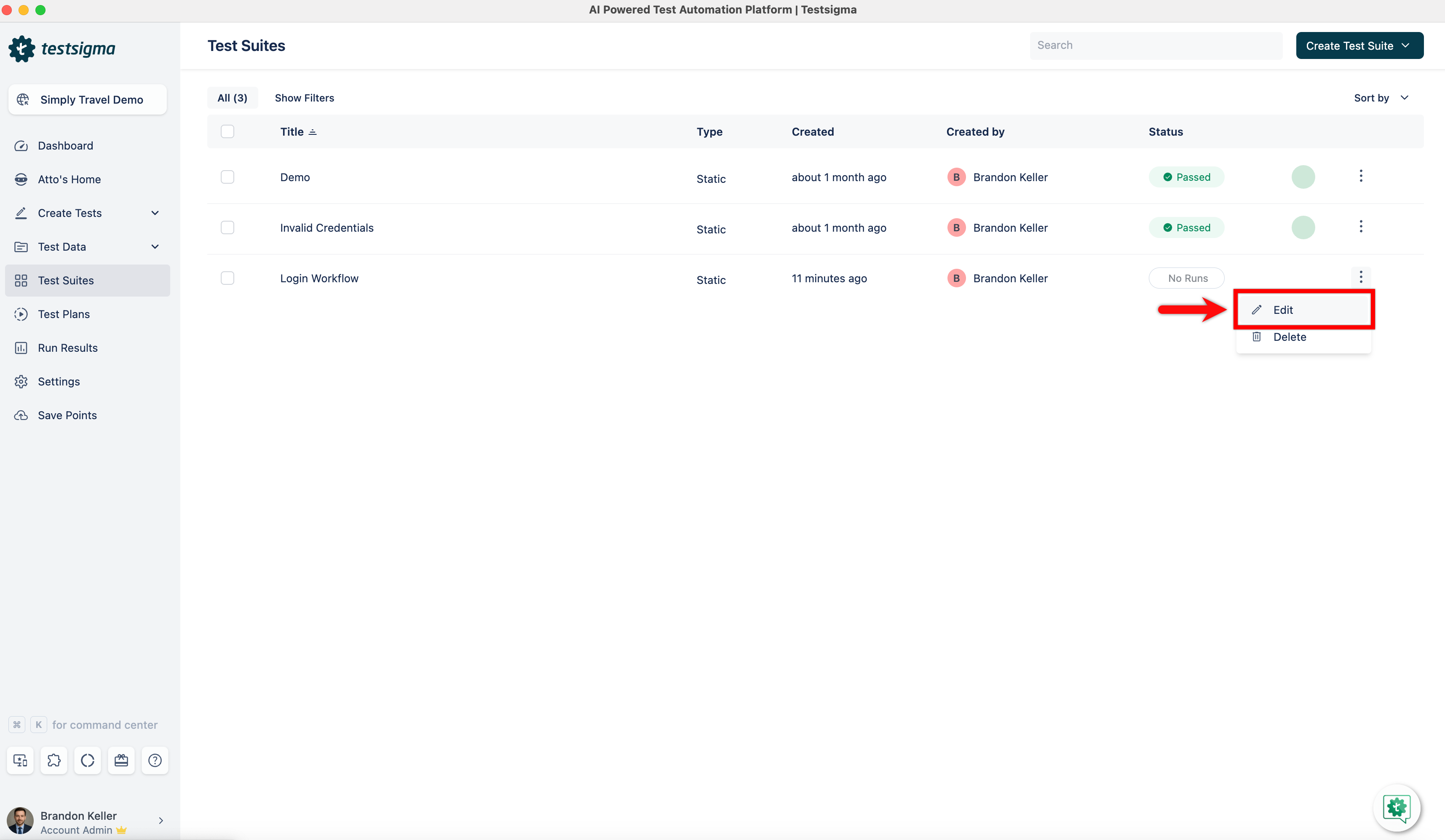

-1. Navigate to **Test Suites** in the left-side navbar.

+1. Navigate to **Test Suites** in the left-side navigation bar.

+

+

2. You can edit the test suite using any of the following methods:

- - Click on the **ellipsis** icon for the **Test Suite** you want to edit from the Test Suite List page, and select **Edit** from the drop-down menu.

+ - Click on the **ellipsis** icon for the **Test Suite** you want to edit from the Test Suite List page, and select **Edit** from the drop-down menu.

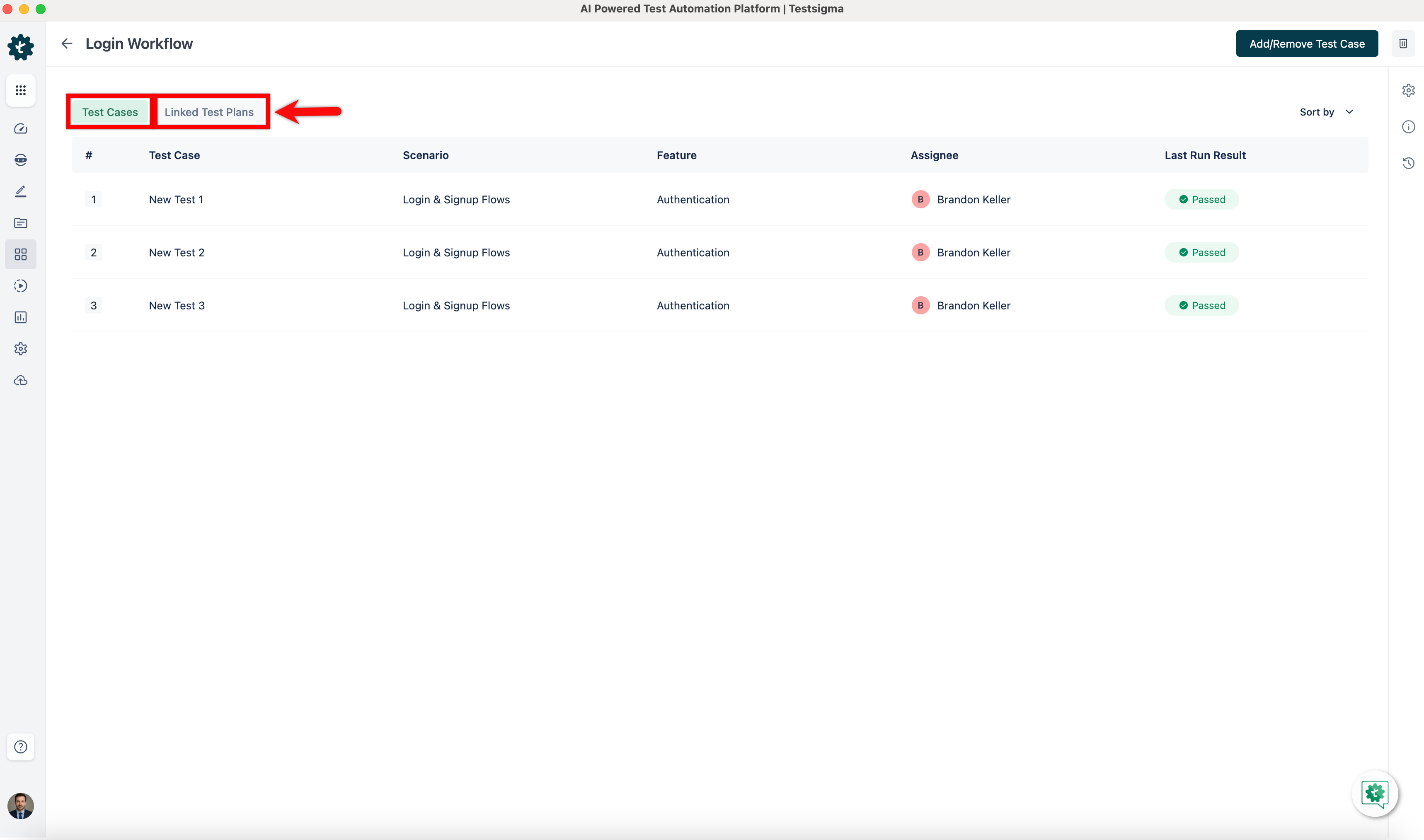

- Click the **Test Suite** you want to edit and open the Test Suite Details Page.

3. You can view **Test Cases** and **Test Plans** linked to your Test Suite on the **Test Suites Details** page.

+

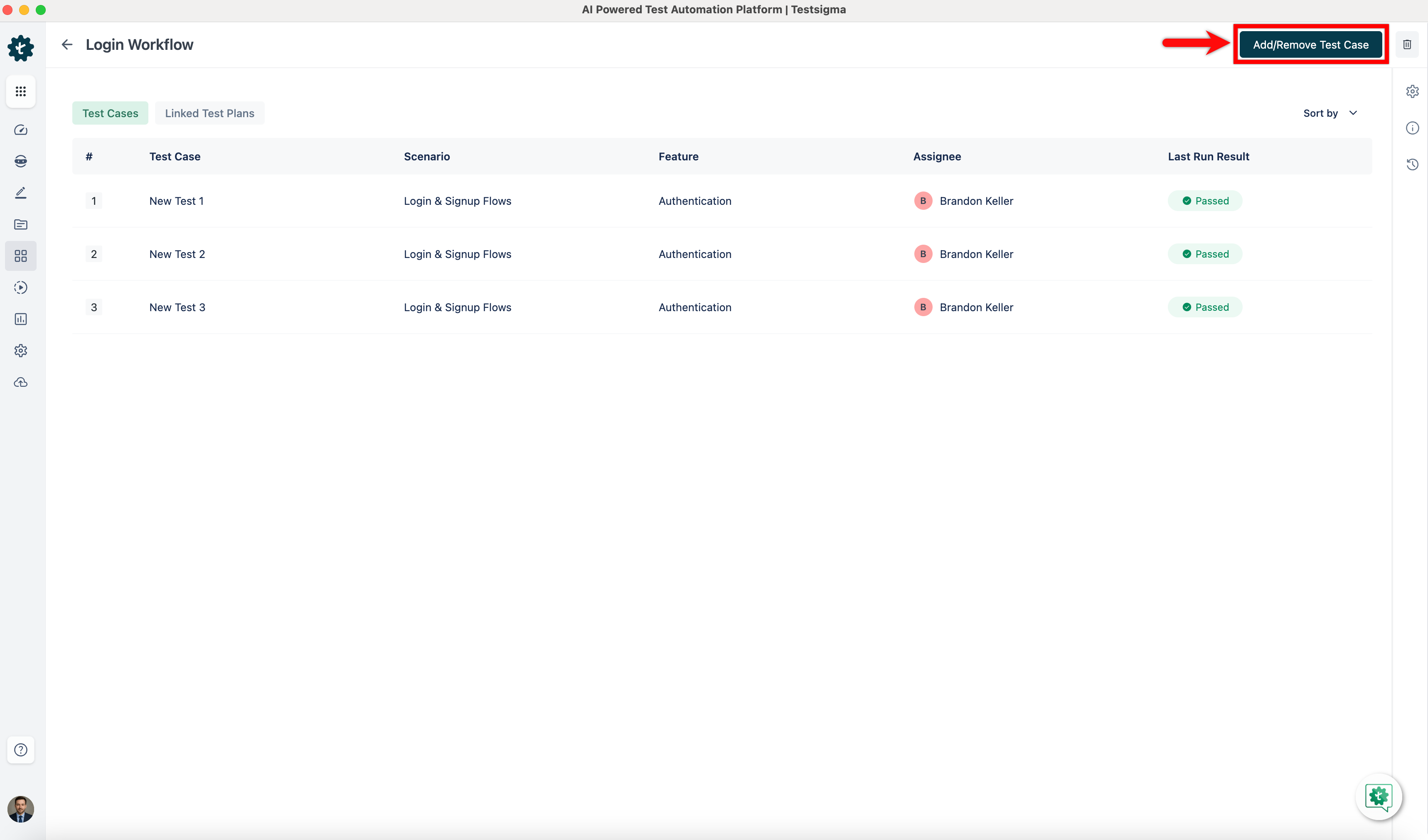

+

4. Click the **Add/Remove Test Cases** button in the top right corner of the Test Suite details page. An **Add/Remove Test Case** overlay will appear, allowing you to select test cases from the **Available Test Case** list by checking the box next to them or clicking the **+** icon to select individual test cases.

+

+

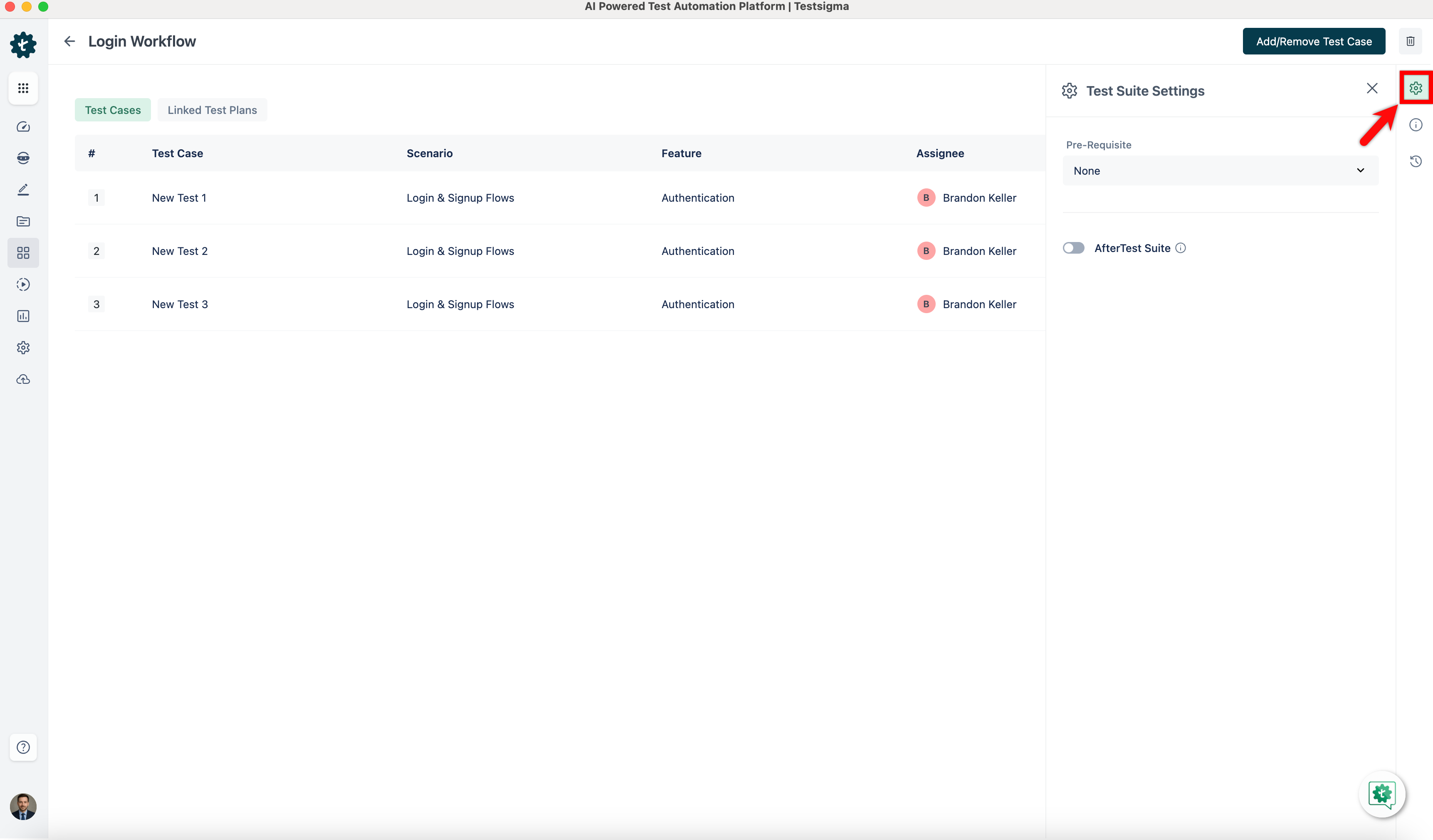

5. Click **Test Suite Settings** in the right-side navbar, and follow steps 4 & 8 in the [Creating a Test Suite](https://testsigma.com/docs/test-management/test-suites/overview/#creating-a-test-suite) section to add **Pre-Requisite**, **After Test Suite** for the Test Suite.

+

+

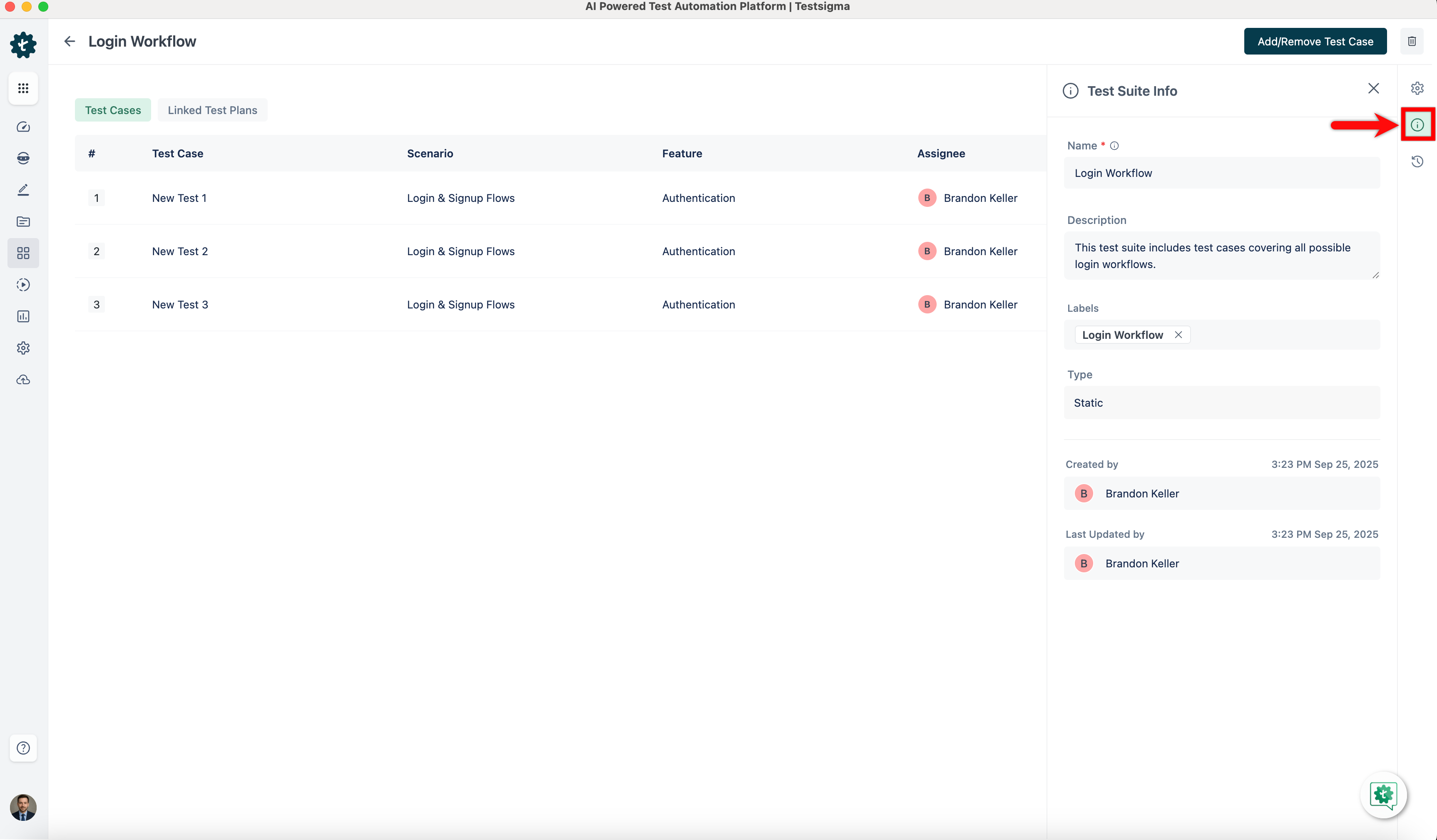

6. Click **Test Suite Info** in the right-side navbar. You can update the Test Suite **Name**, **Type** and **Description** from there and view information about the Test Suite's creation and last update.

-7. Click **Activity** in the right-side navbar and view the **History** and **Comments** of test suite.

+

+

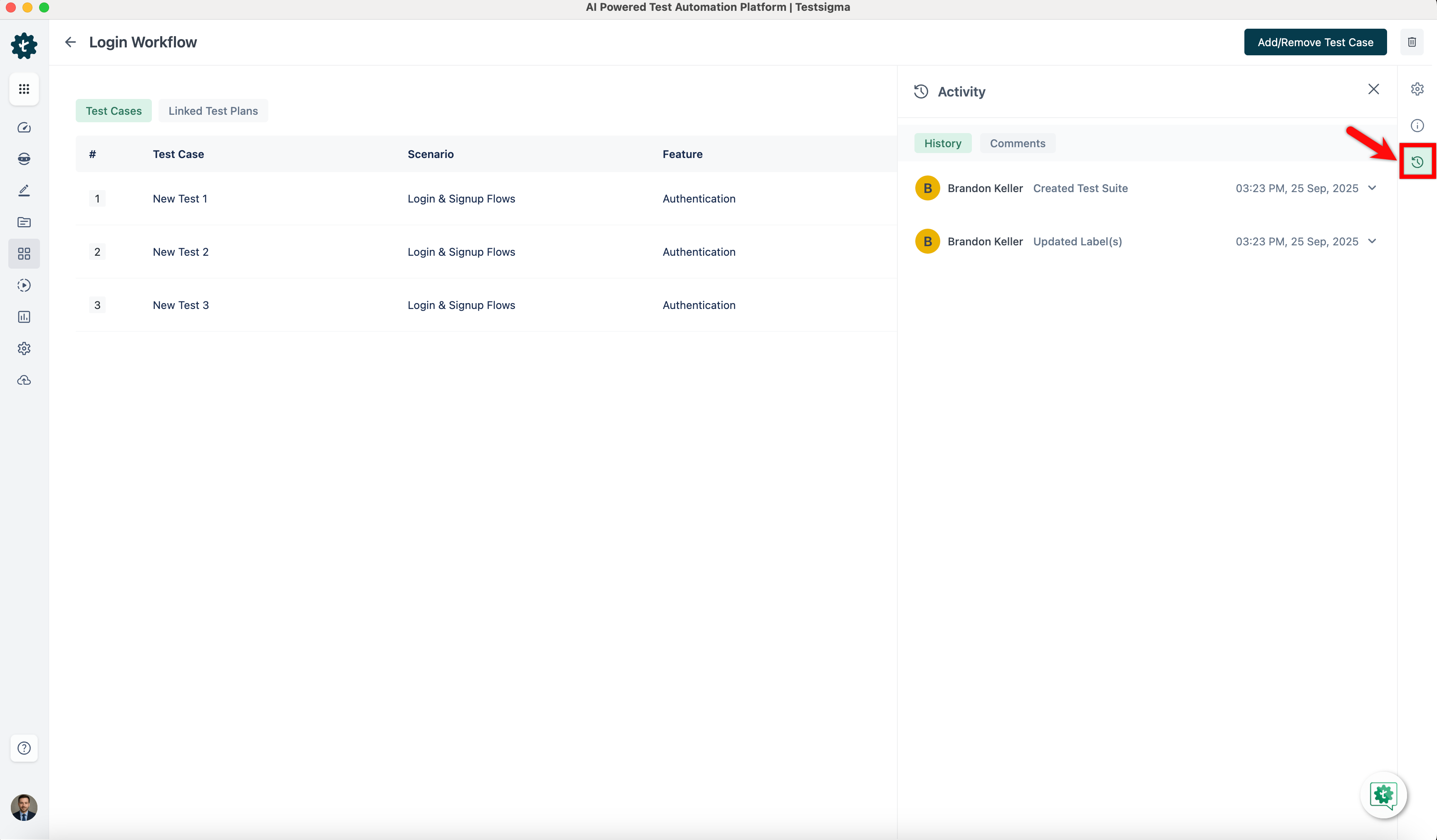

+7. Click **Activity** in the right-side navbar and view the **History** and **Comments** of the test suite.

+

---

## **Deleting a Test Suite**

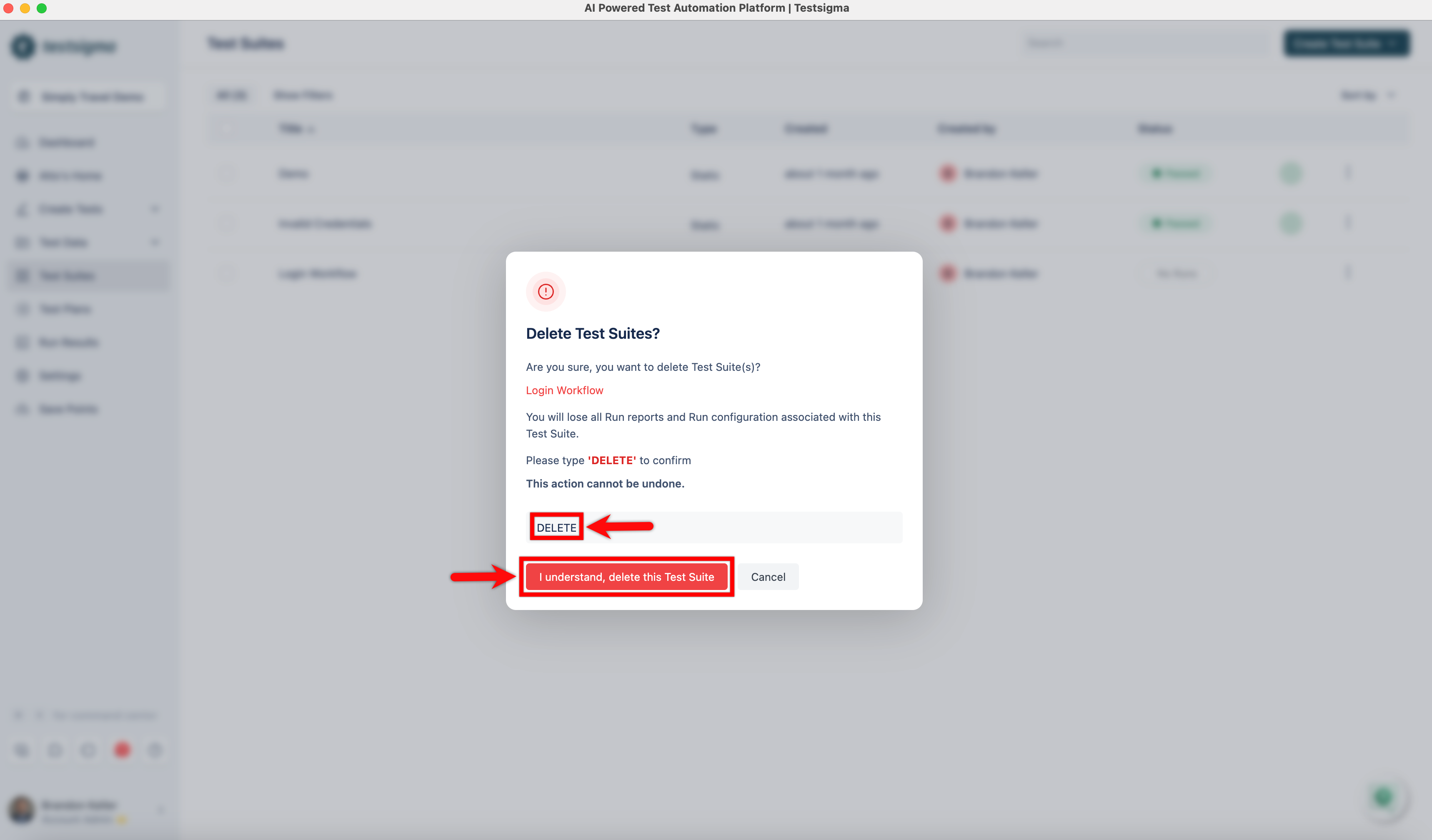

-1. Navigate to **Test Suites** in the left-side navbar.

+1. Navigate to **Test Suites** in the left-side navigation bar.

+

+

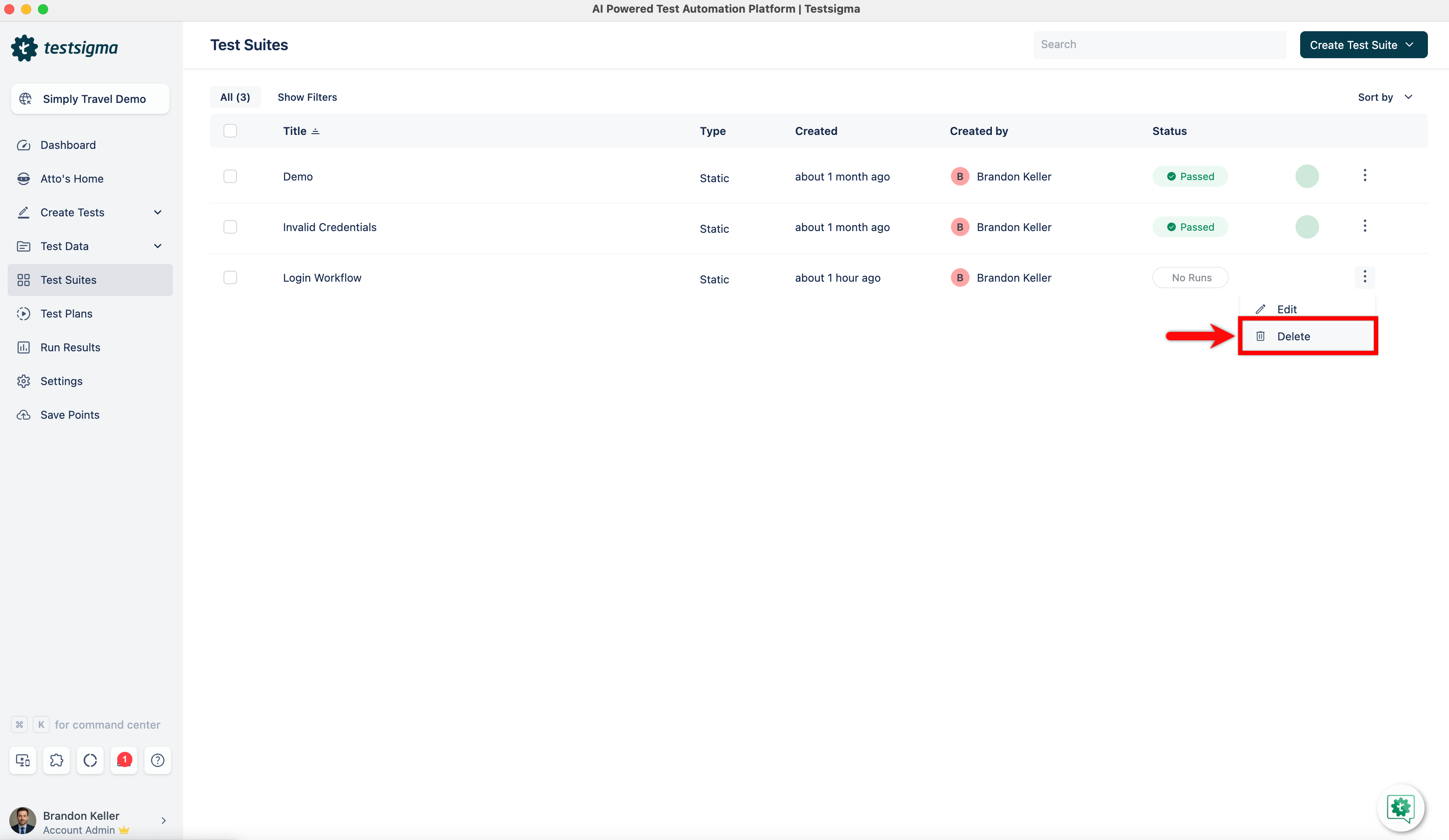

2. You can delete the test suite using any of the following methods:

- - Click on the **ellipsis** icon for the **Test Suite** you want to delete from the Test Suite List page, and select **Delete** from the drop-down menu to remove the test suite.

+ - Click on the **ellipsis** icon for the **Test Suite** you want to delete from the Test Suite List page, and select **Delete** from the drop-down menu to remove the test suite.

- Click the **Test Suite** you want to delete and open the Test Suite Details Page. Then, click the **Delete** Button in the screen's top right corner.

[[info | NOTE:]]

| If you attempt to delete a Test Suite linked to a Test Plan, the application will display a warning and prevent the deletion. You must remove the Test Suite from the associated Test Plans before trying to delete it again.

-3. A **Delete Confirmation** pop-up will appear. To delete the Test Suite, you need to confirm the deletion by entering **DELETE** and then clicking on **I understand, delete this Test Suite**.

+3. A **Delete Confirmation** pop-up will appear. To delete the Test Suite, you need to confirm the deletion by entering **DELETE** and then clicking on **I understand, delete this Test Suite**.

+

+

[[info | NOTE:]]

| If you delete the Test Suite, you will lose all Run reports and Run configuration associated with it.

diff --git a/src/pages/docs/visual-testing/configure-test-steps.md b/src/pages/docs/visual-testing/configure-test-steps.md

index 77d9b837..37e006b9 100644

--- a/src/pages/docs/visual-testing/configure-test-steps.md

+++ b/src/pages/docs/visual-testing/configure-test-steps.md

@@ -57,8 +57,12 @@ Testsigma allows you to check your app's appearance during tests using its Visua

You can configure the visual testing for a test step on a test case details page or a test step recorder. Here are the steps to do it:

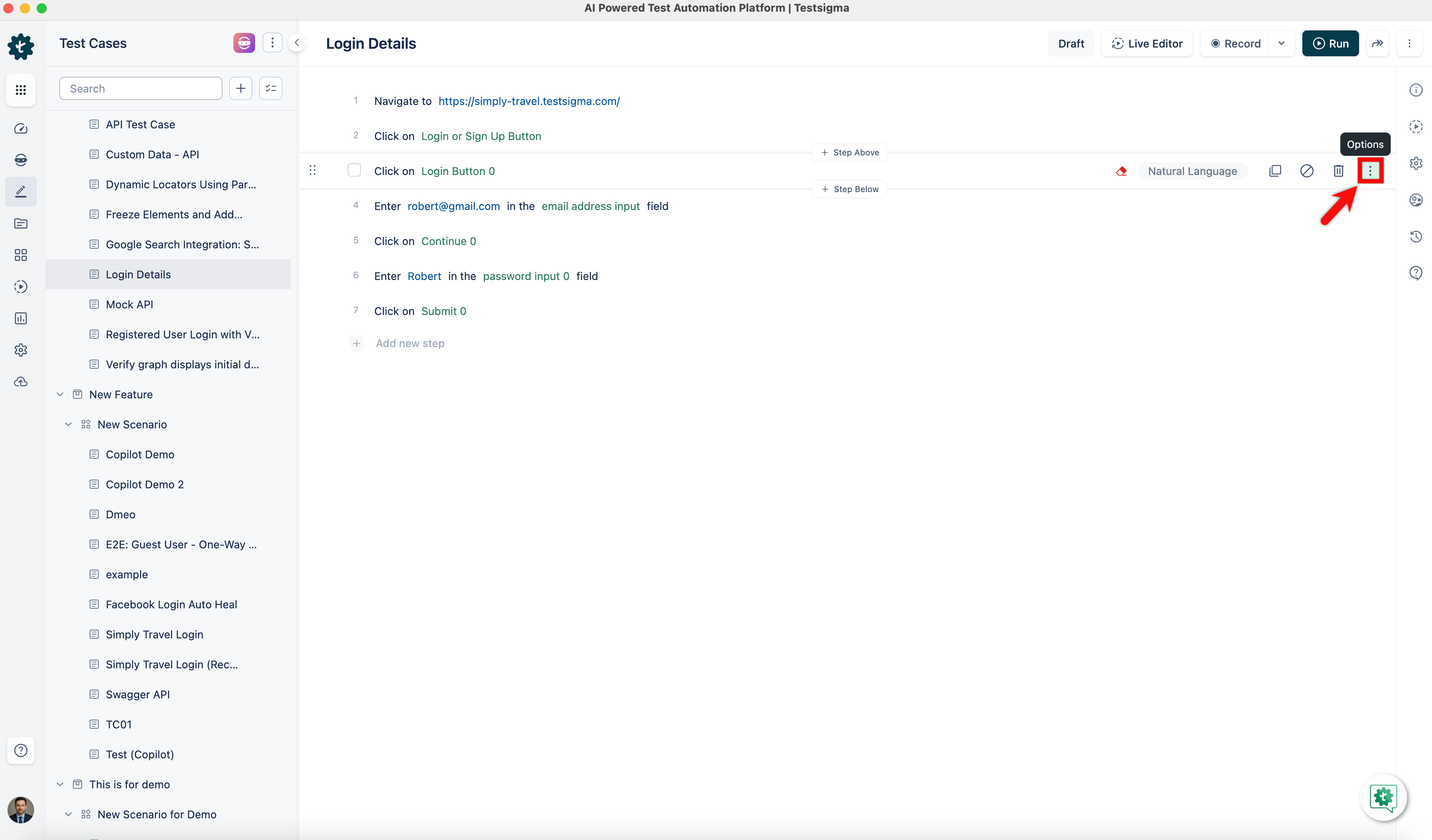

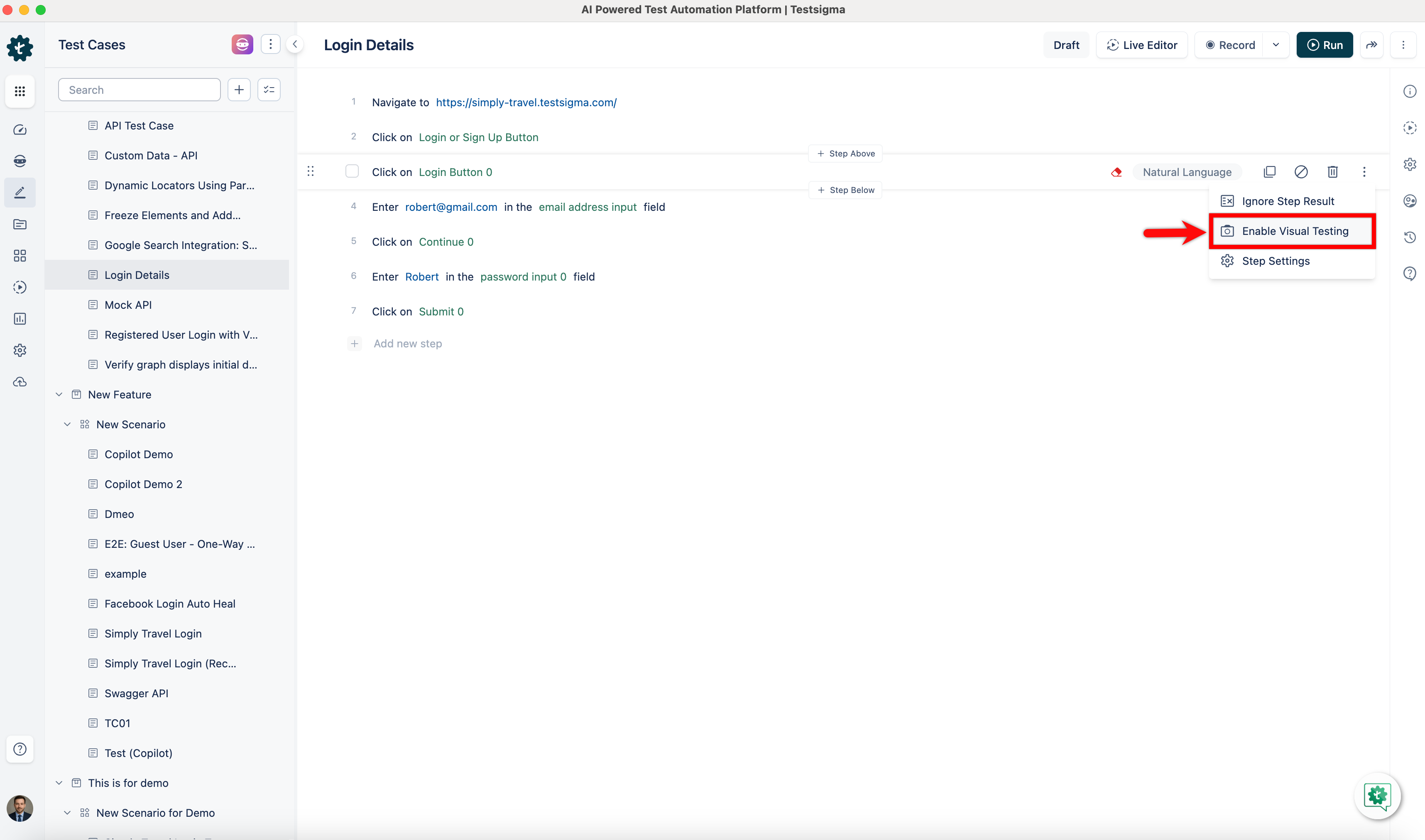

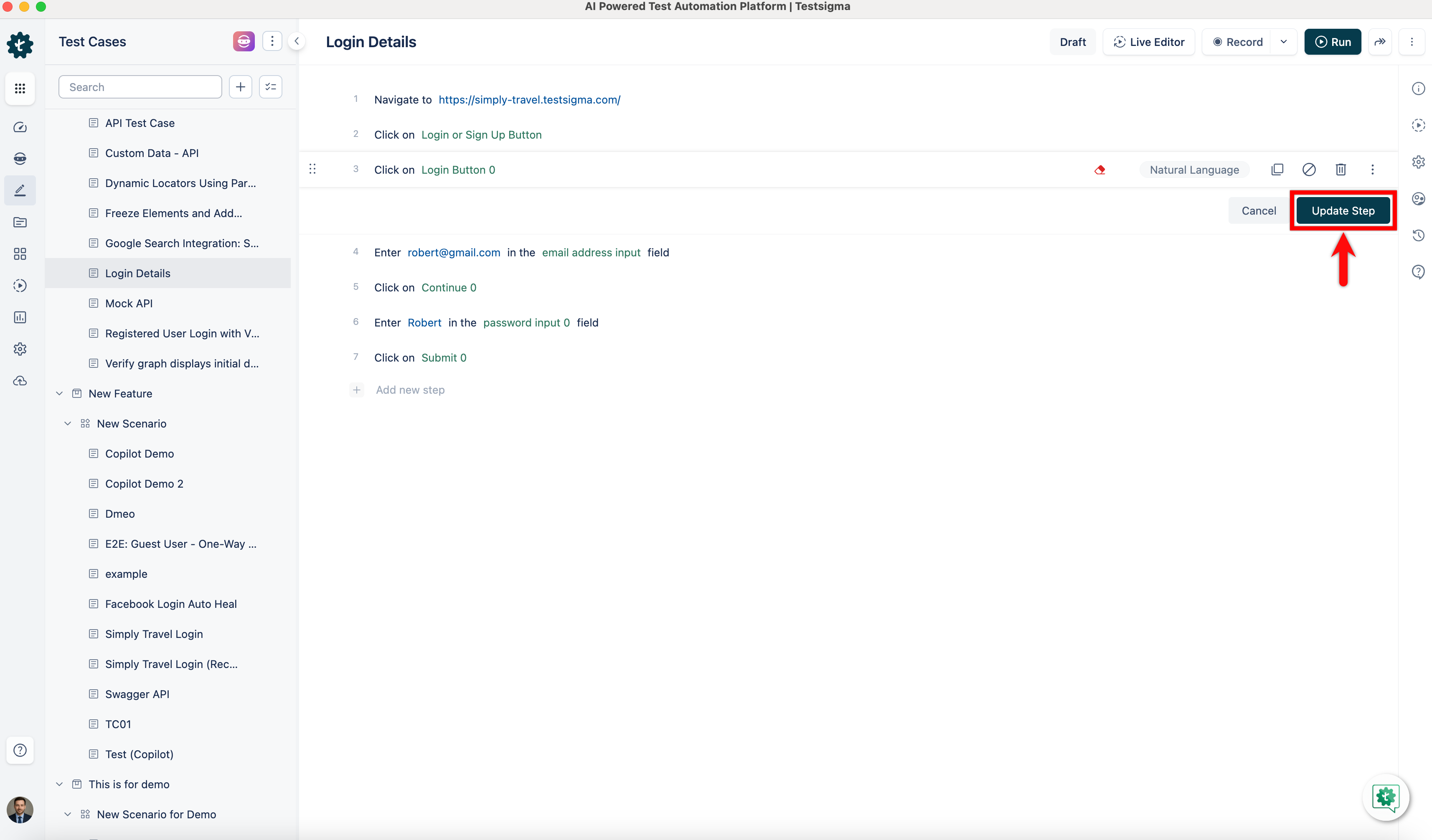

1. Enable visual testing in the **test step** by clicking the **ellipsis** button (**Test Step Options**).

+

+

2. Select **Enable Visual Testing** from the drop-down list that will appear.

-3. Click **Update Step** after enabling Visual Testing for the step.

+

+

+3. Click **Update Step** after enabling Visual Testing for the step.

[[info | NOTE:]]

| After you enable visual testing, Testsigma requires a baseline image corresponding to the test step for executing visual testing. Visual testing will be available only after the next successful run, as Testsigma needs this baseline image.

@@ -69,9 +73,18 @@ You can configure the visual testing for a test step on a test case details page

In Testsigma, you can save time and reduce redundant efforts by selecting multiple steps in a test case and simultaneously turning on/off the visual testing settings. Testsigma supports bulk action of steps for this purpose.

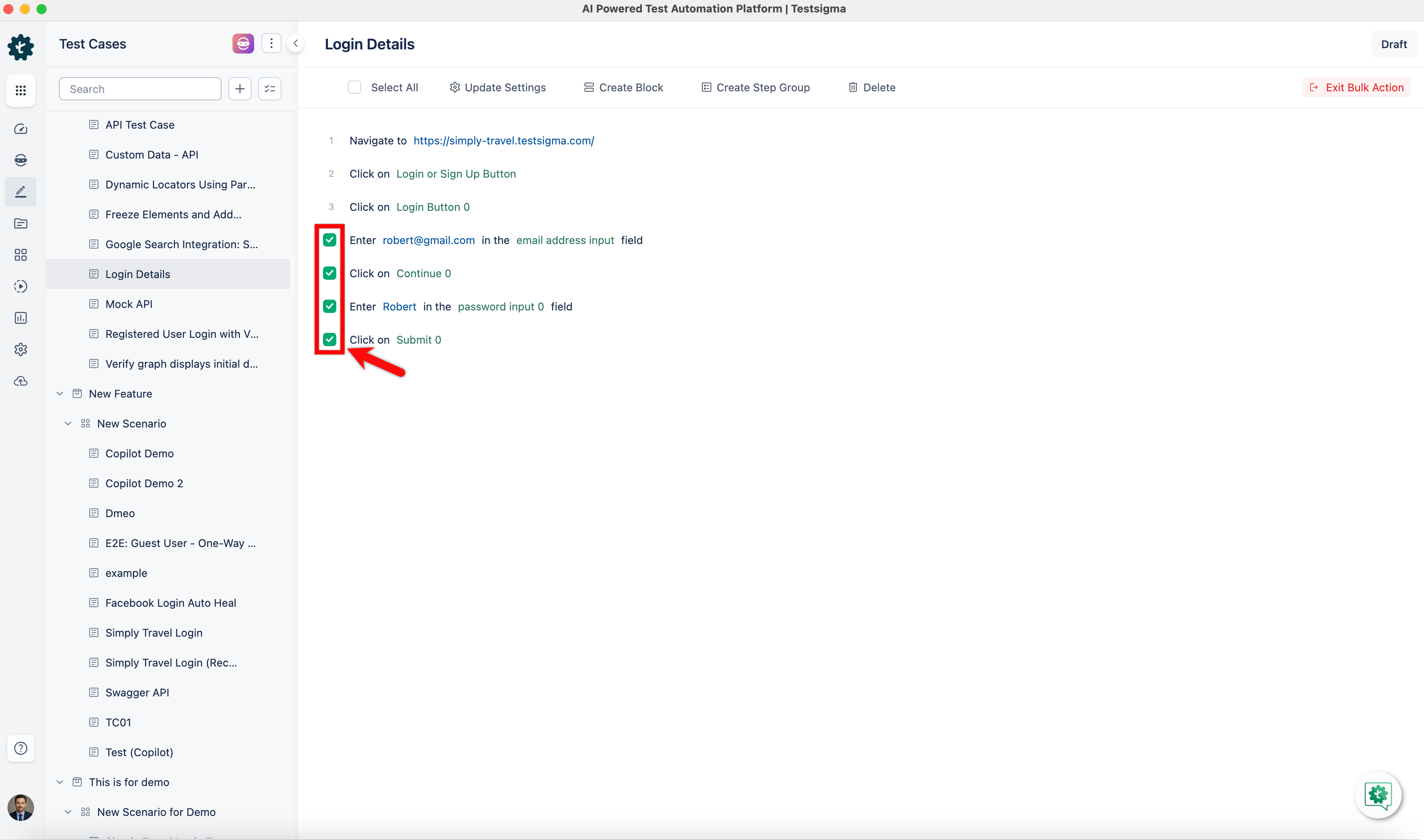

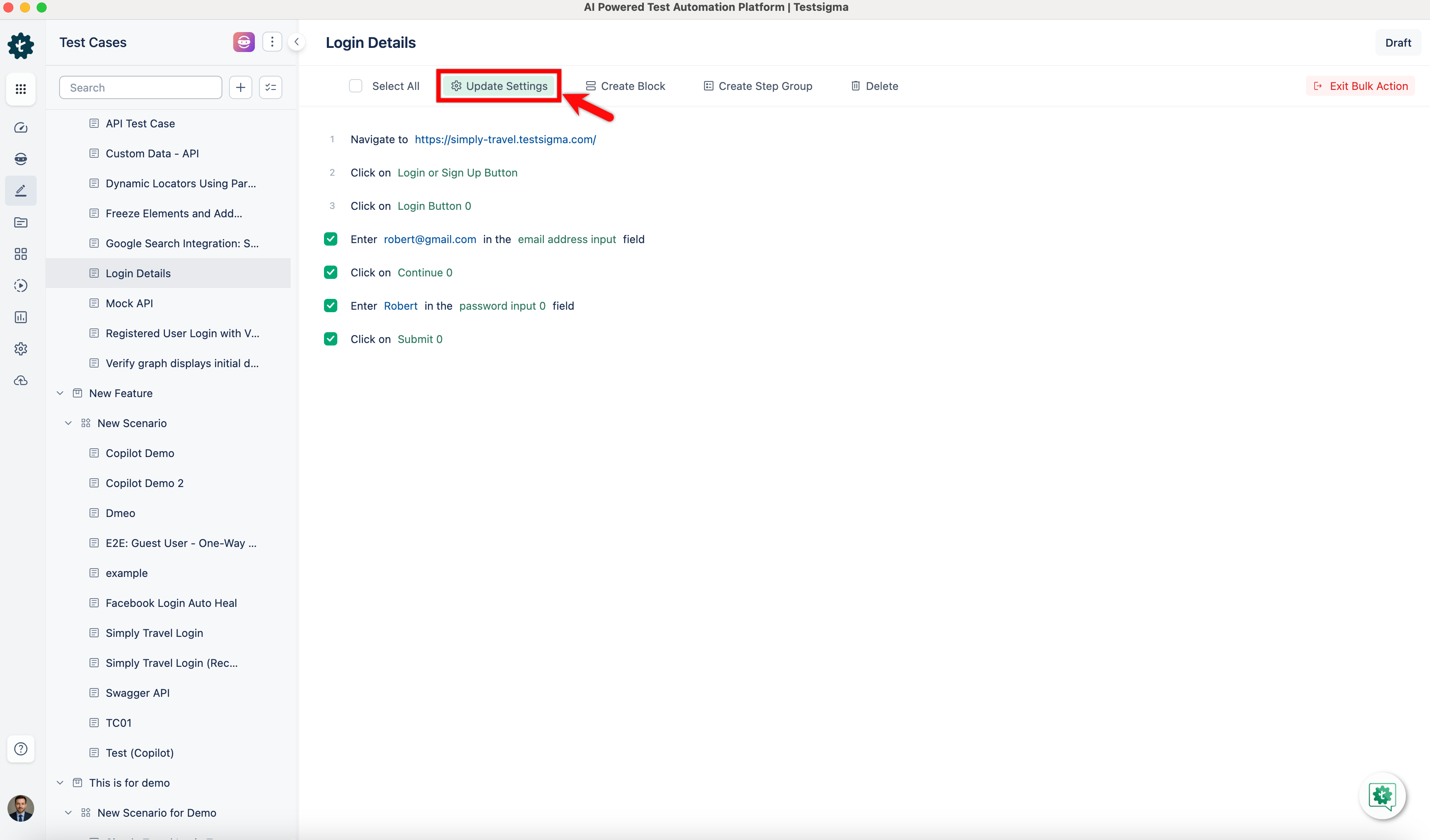

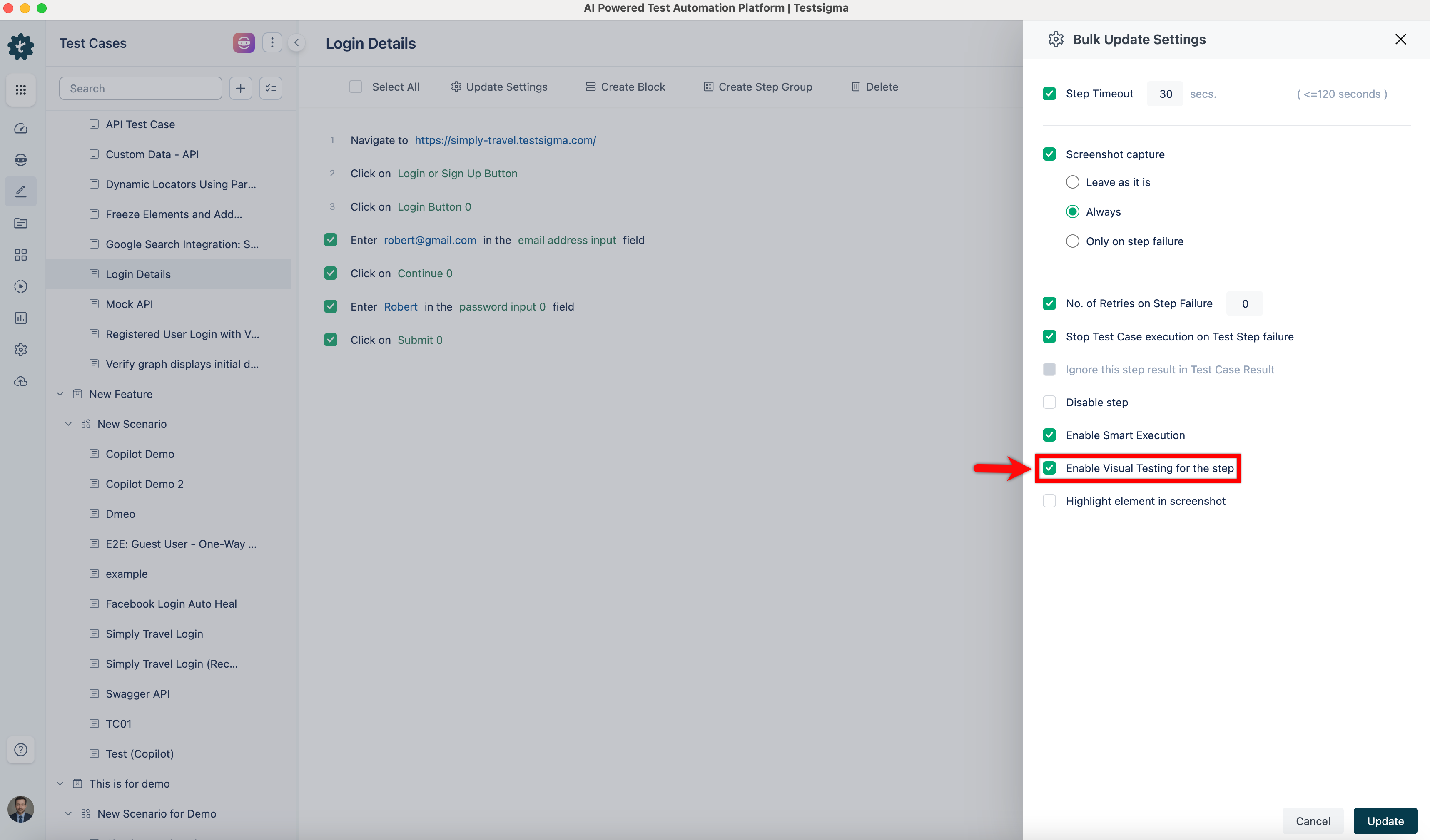

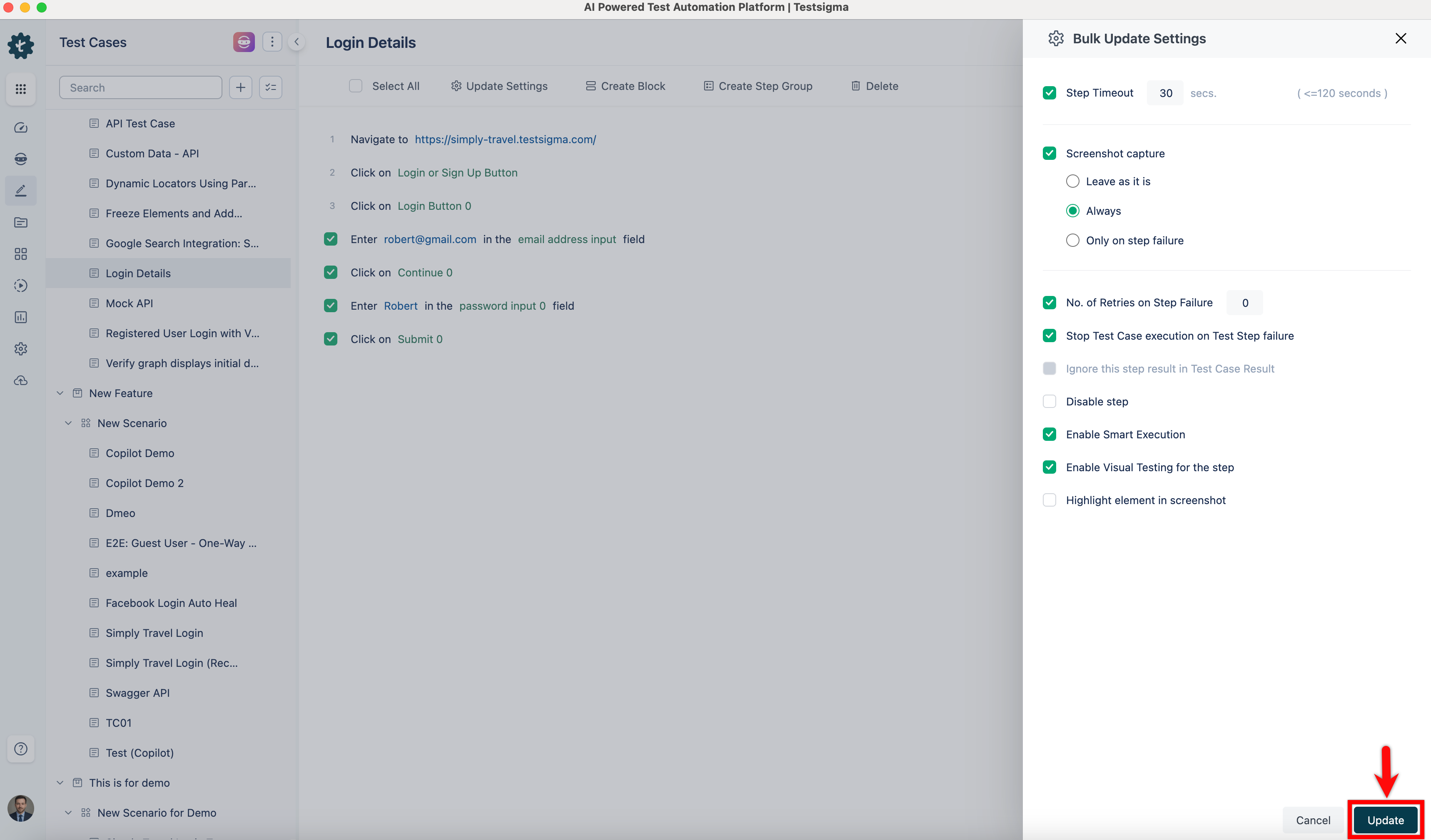

1. Select the test steps for which you want to enable Visual Testing by checking their **checkboxes**.

-2. In the menu bar, click on **Update Settings**. Then, select **Enable Visual Testing** for the step from the pop-up menu that appears.

-3. Click **Update** after enabling Visual Testing for the steps.

-4. **Run** the Test Case to view the visual difference.

+

+

+2. In the menu bar, click on **Update Settings**.

+

+

+3. Select **Enable Visual Testing** for the step from the pop-up menu that appears.

+

+

+4. Click **Update** after enabling Visual Testing for the steps.

+

+

+5. **Run** the Test Case to view the visual difference.

---

@@ -80,17 +93,22 @@ In Testsigma, you can save time and reduce redundant efforts by selecting multip

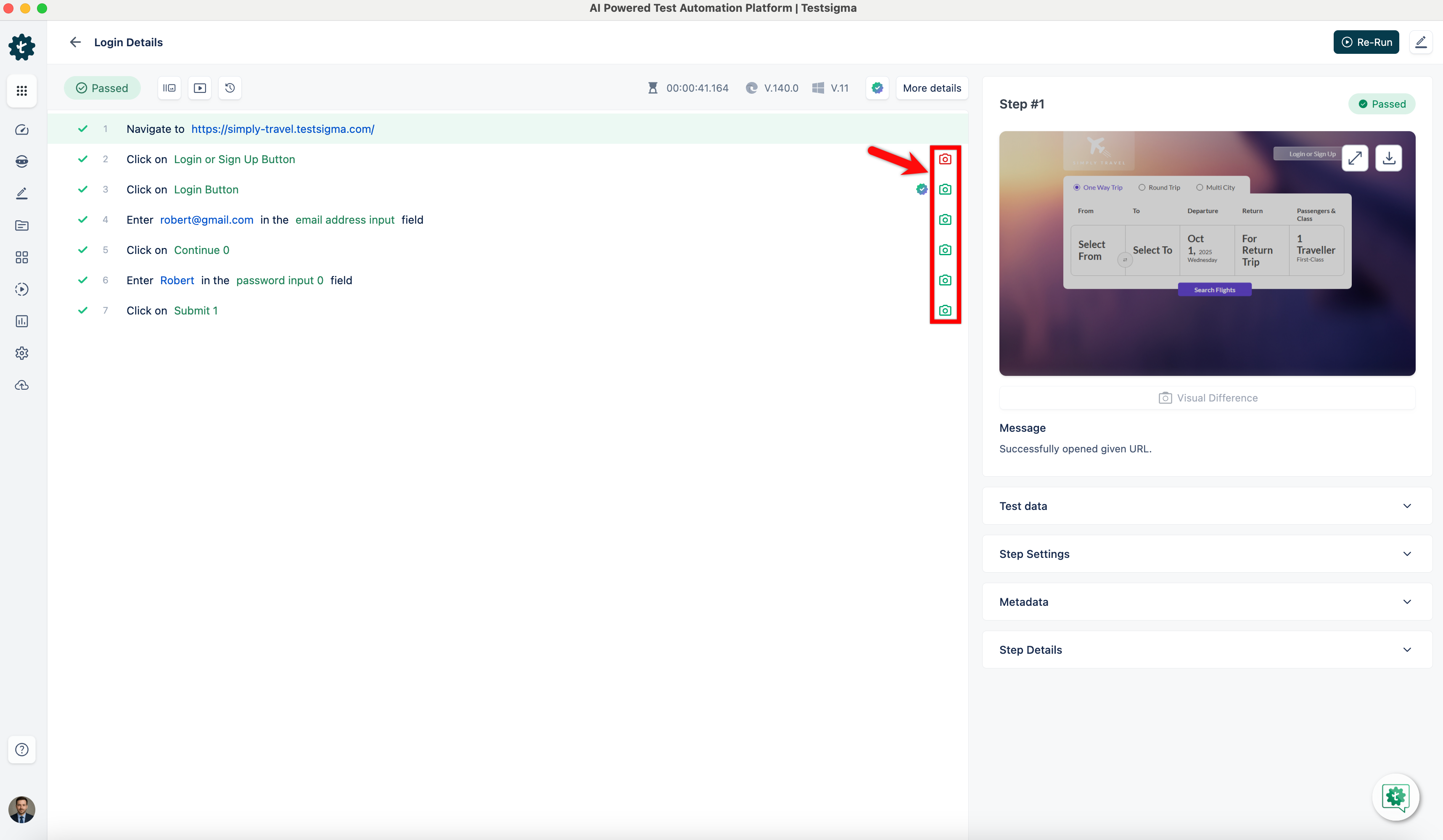

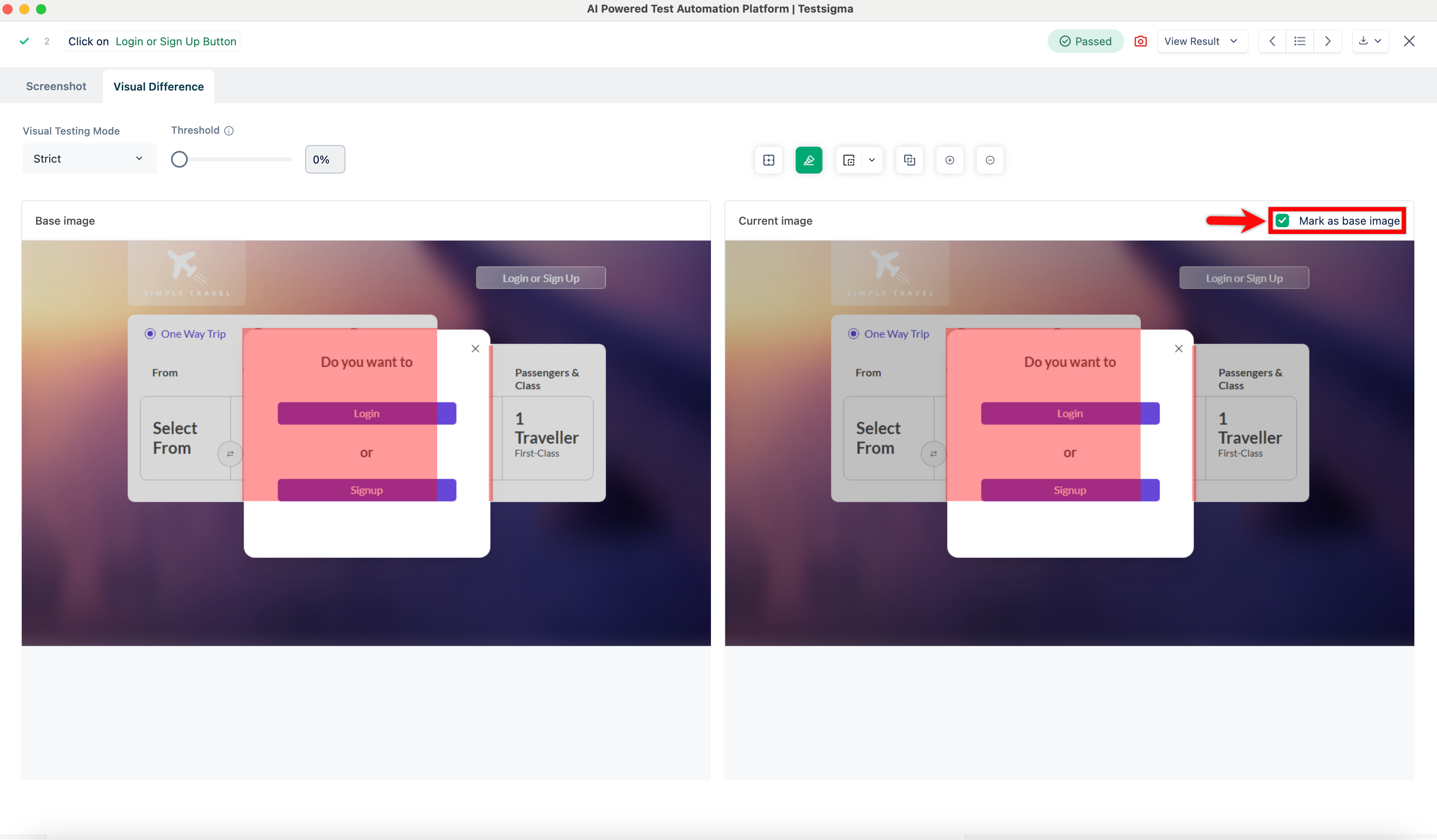

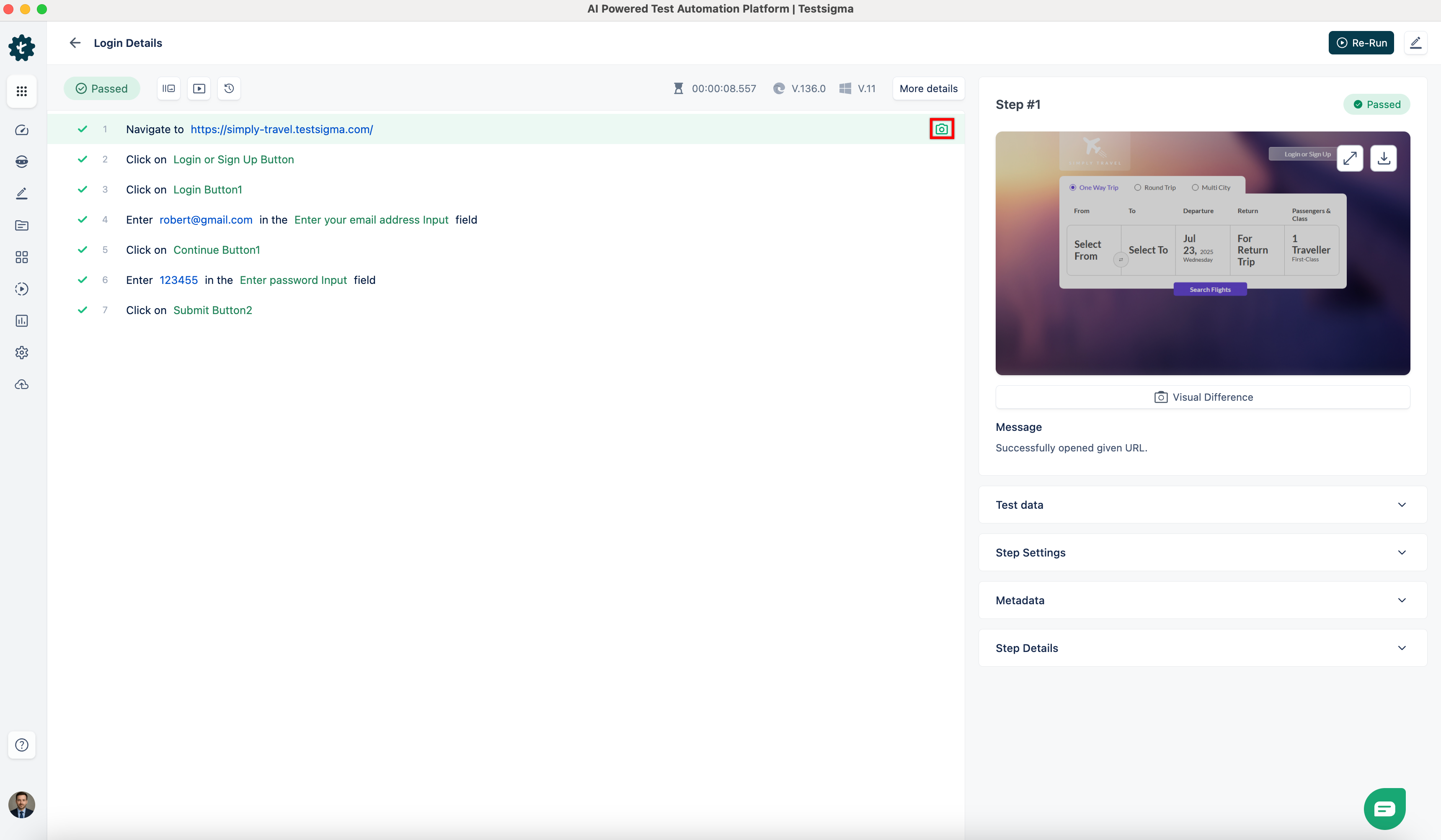

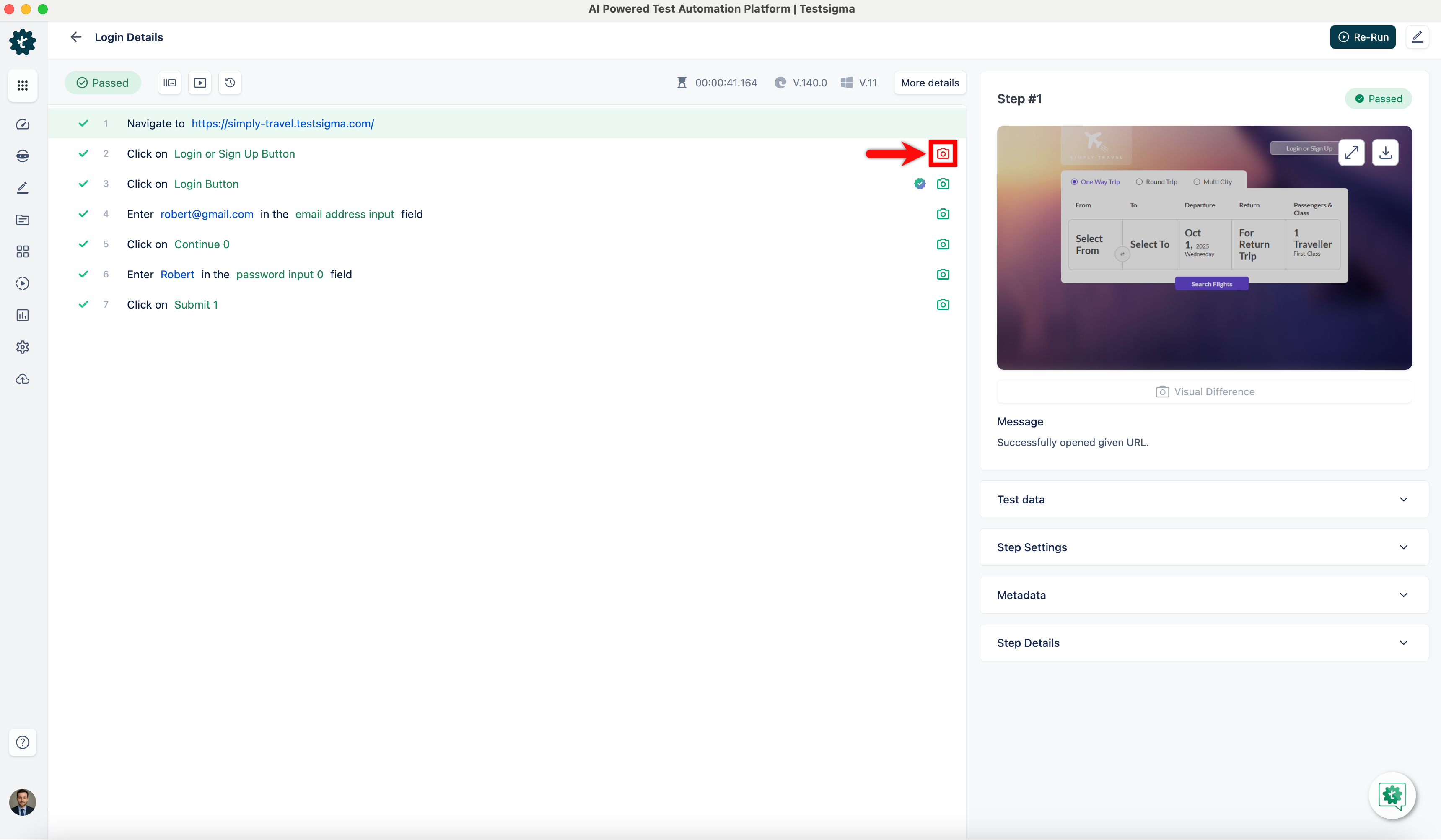

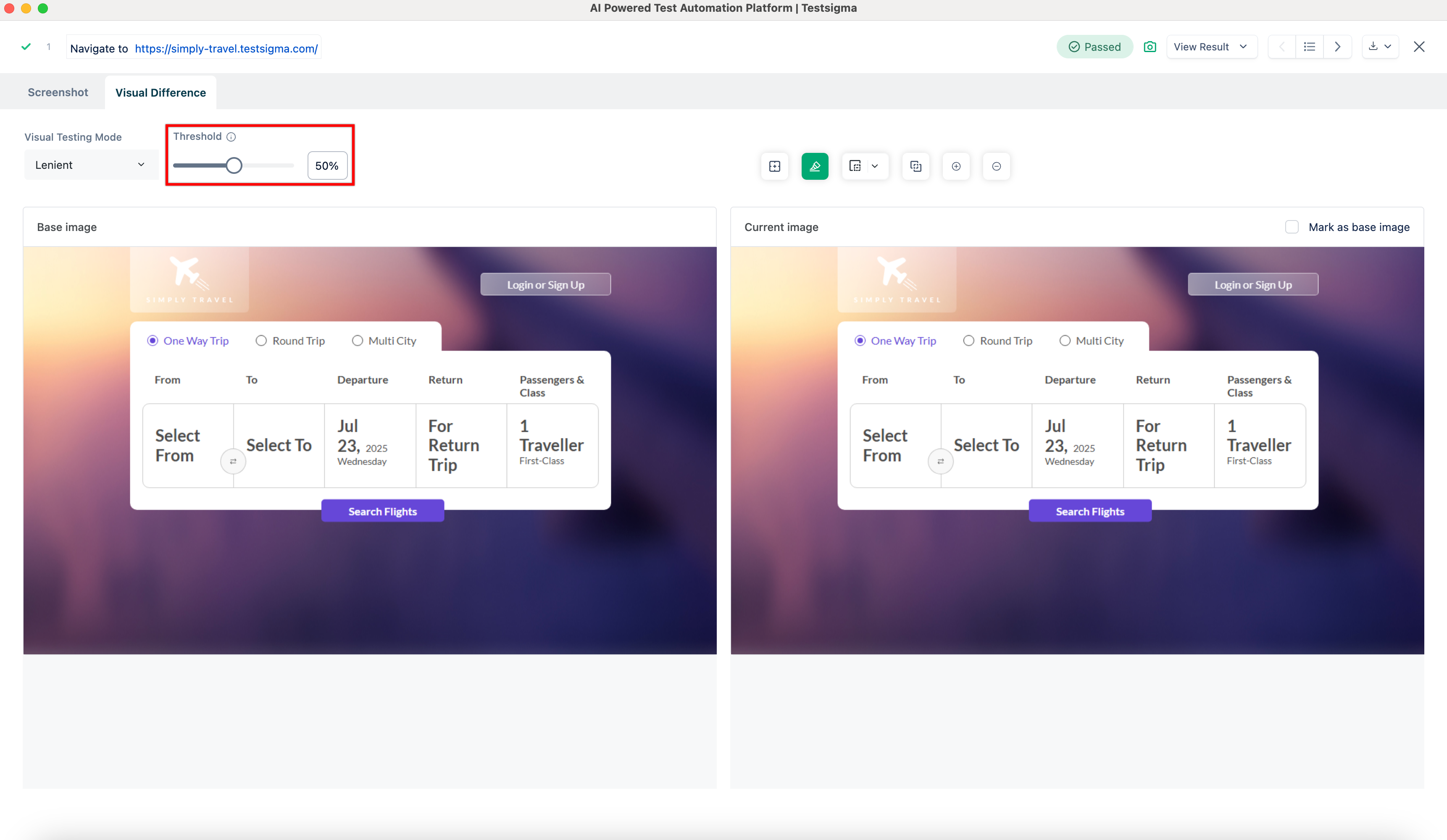

When you conduct visual testing using Testsigma, you should compare the **current image** (reference image) with the original image (baseline image) and ensure that the reference image matches the baseline image. You should update the baseline image whenever you apply changes to the UI using the following steps:

1. You can enable visual testing in your test steps in two ways: [Documentation on enabling visual testing in test steps](https://testsigma.com/docs/visual-testing/configure-test-steps/#enable-visual-testing-in-test-steps) or [Documentation on bulk action for visual testing steps](https://testsigma.com/docs/visual-testing/configure-test-steps/#bulk-action-for-visual-testing-steps). **Run** the test case after enabling visual testing in the test steps.

-2. **Re-Run** the test case to enable visual testing and see any visual differences.

+2. **Re-Run** the test case to enable visual testing and see any visual differences.

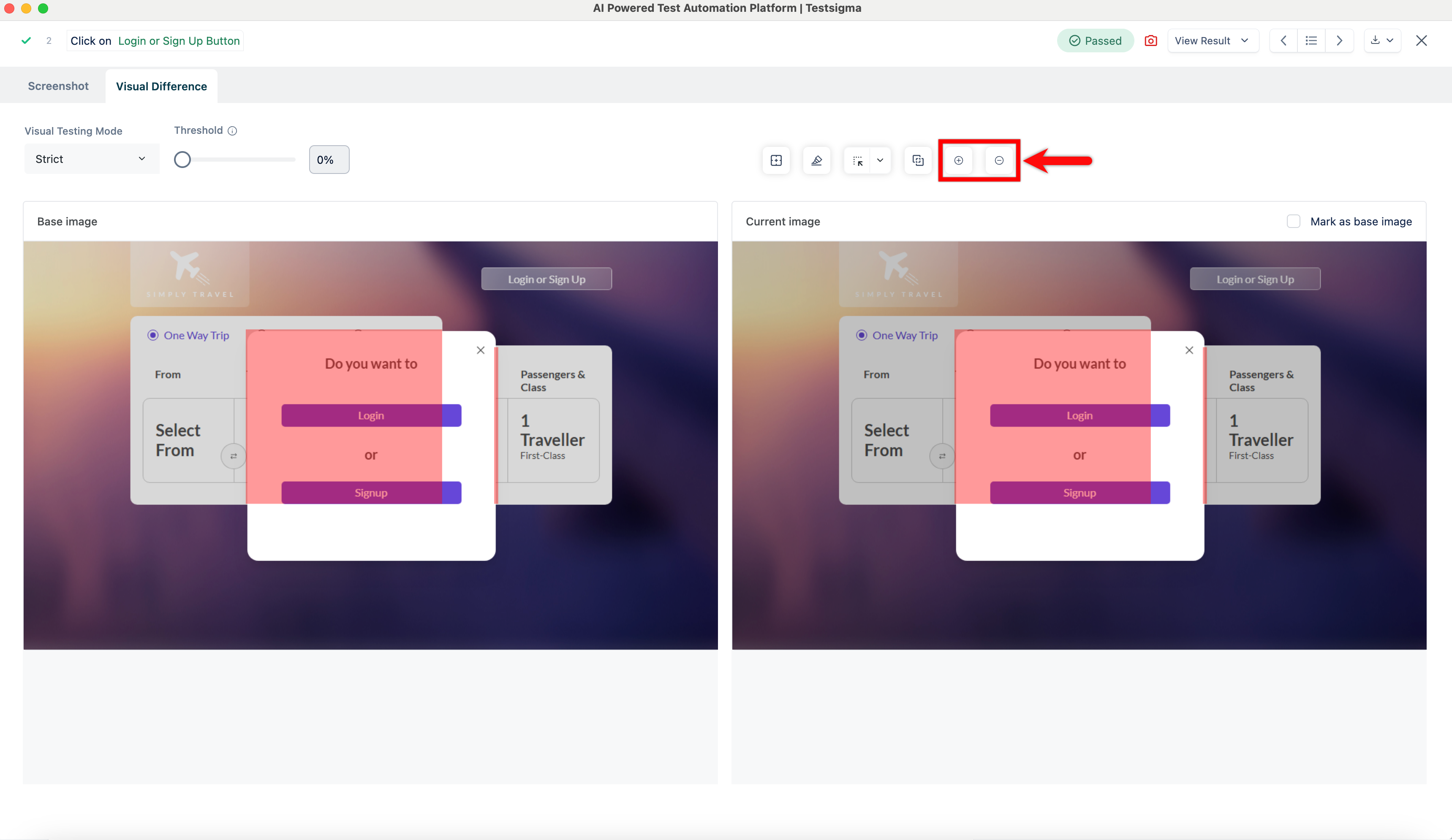

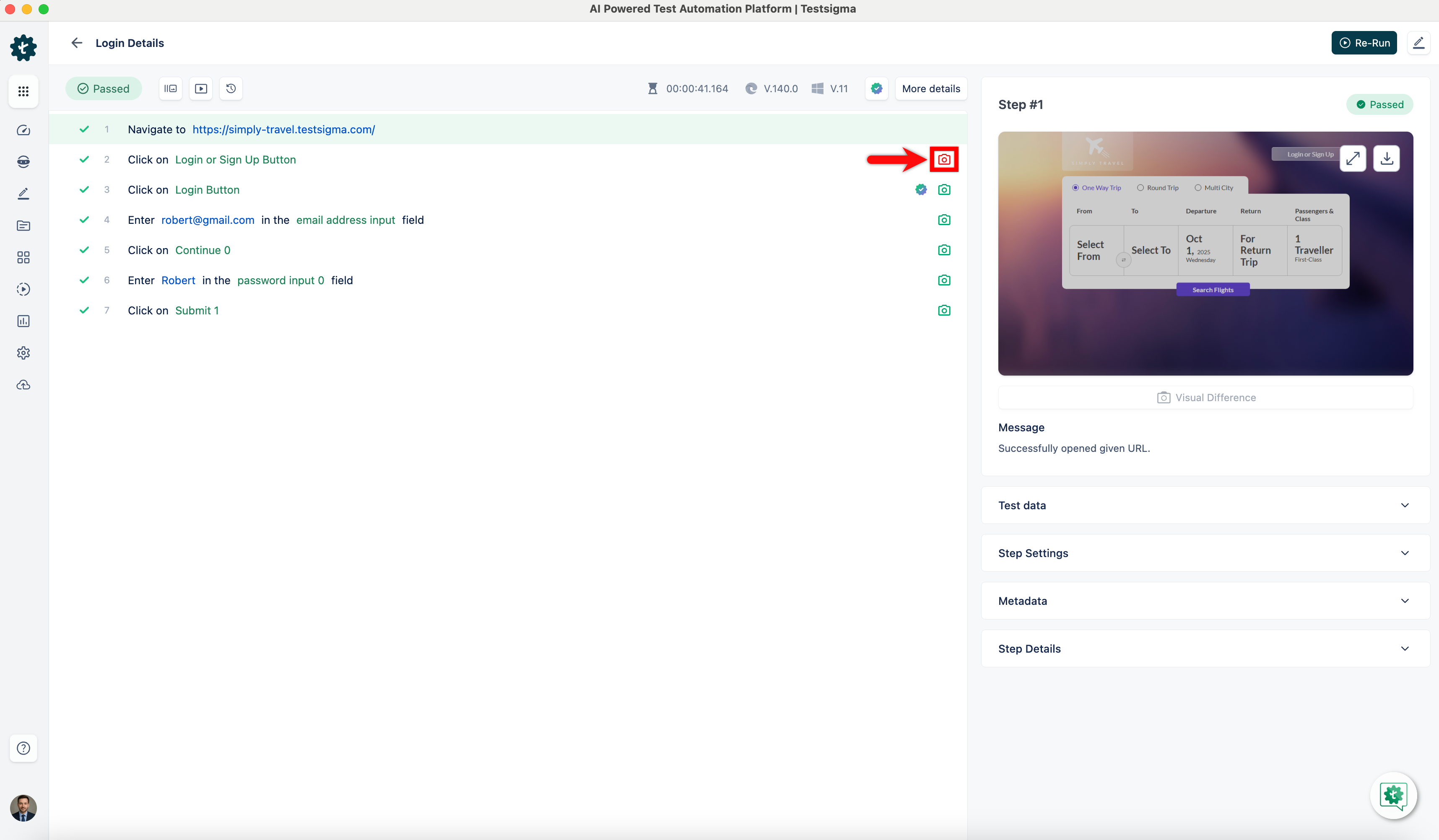

3. After executing a test case for visual testing, the **Test Case Result** page will display a **green camera** icon on steps with **no visual differences** between the reference and baseline images and a **red camera** icon where there are **visual differences**.

+

4. Click on the **camera** icon to open the **Visual Difference** overlay screen. Then, go to the top right of the page and **check** the box **Mark as base image** as **Current Image**. This action will update your base image to match your current reference image.

+

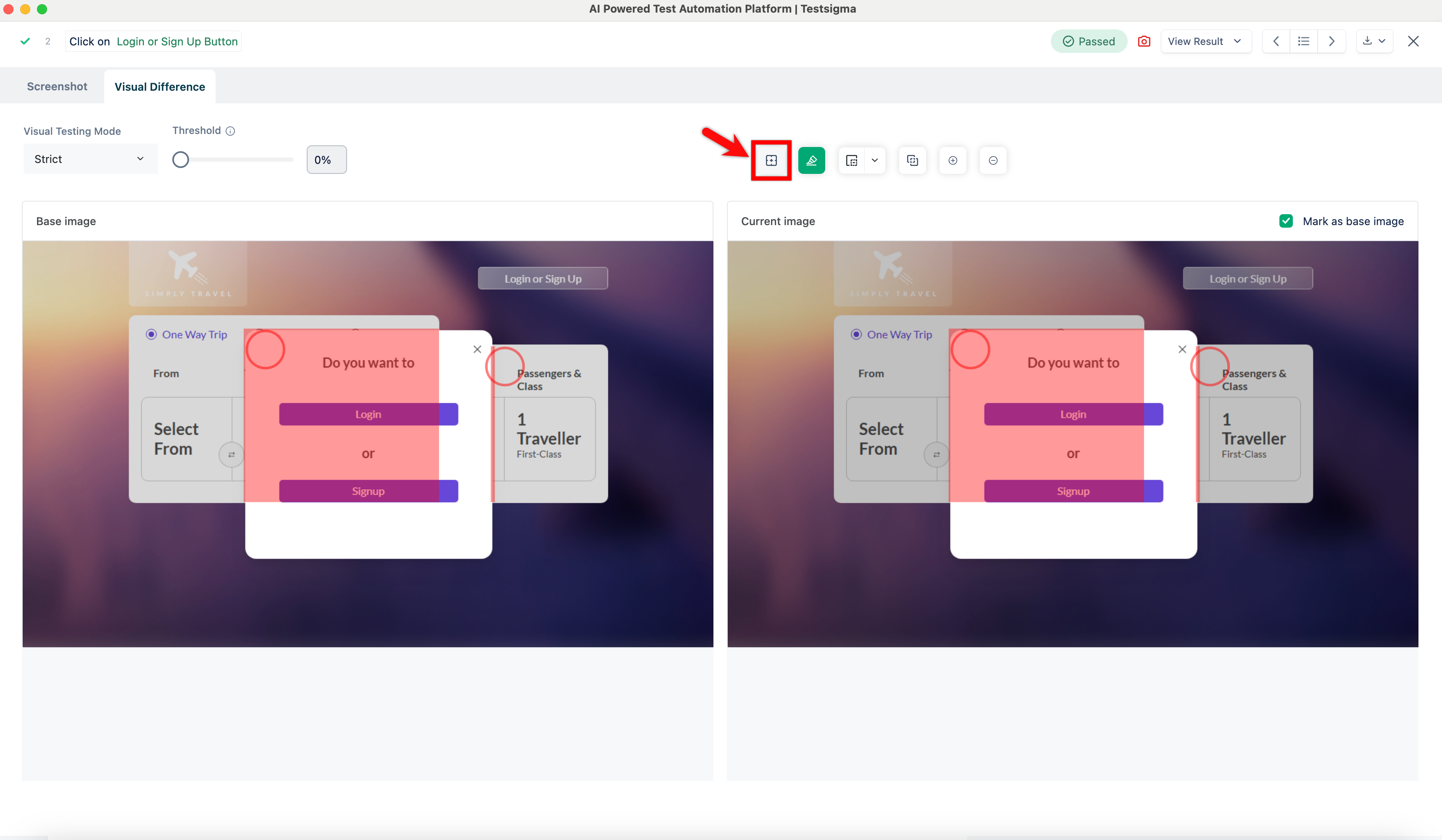

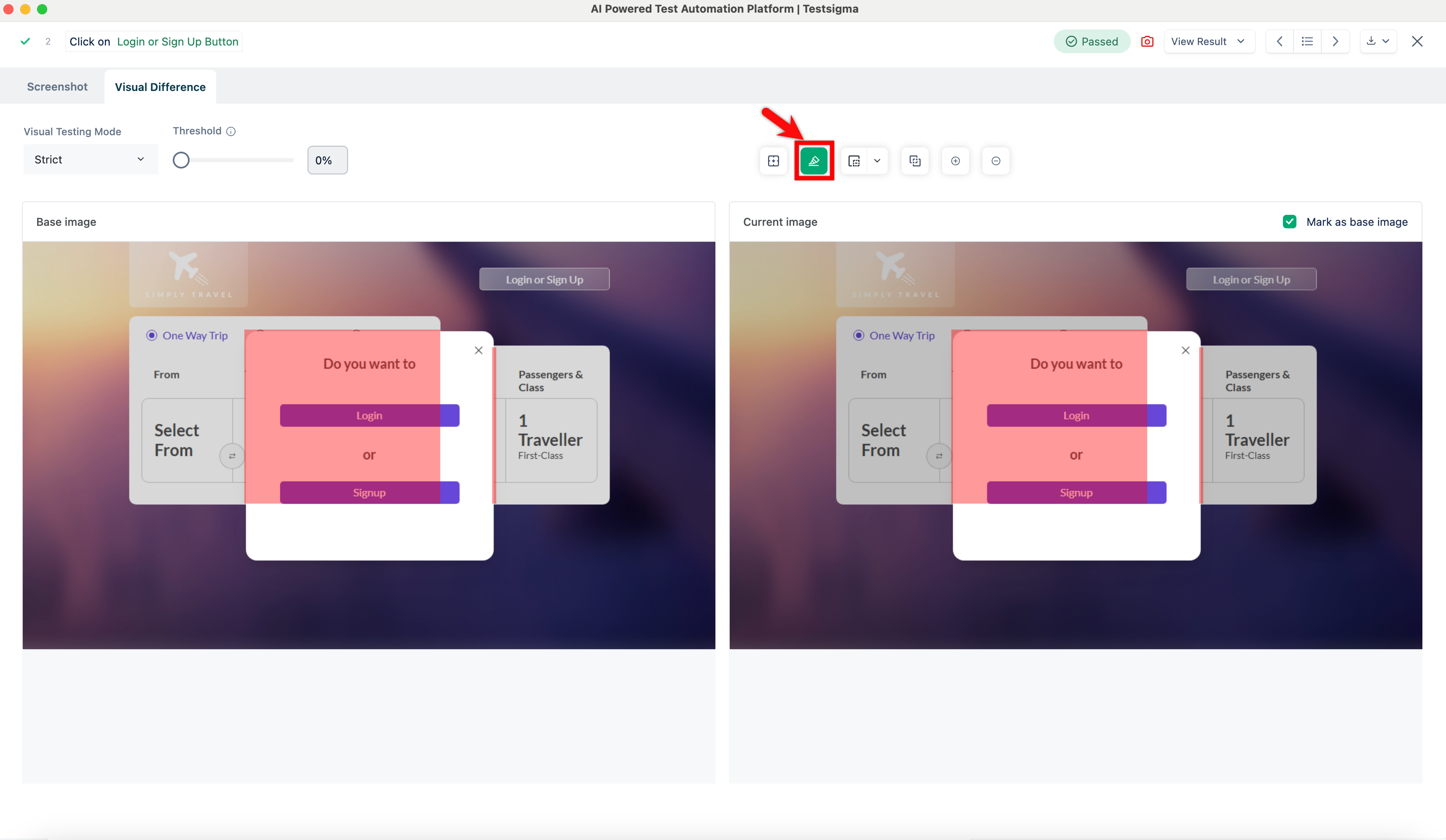

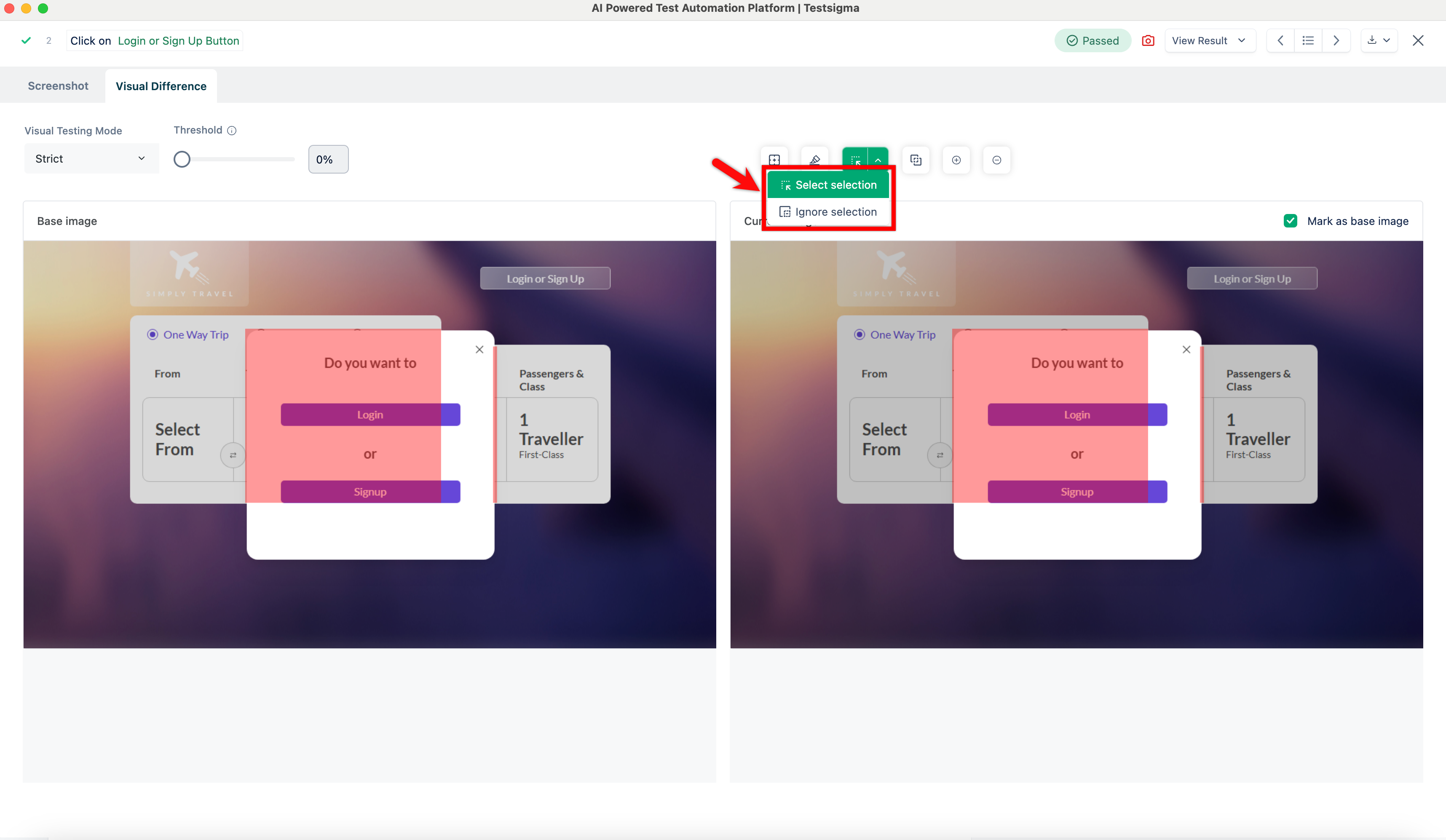

5. In the **Visual Difference** overlay, you can use the following options:

- **Highlight Differences**: Highlight variations in the display.

+

- **Hide Visual Differences**: Conceal any visible disparities.

+

- **Select region to ignore from visual comparison**: Select areas not to compare visually.

+

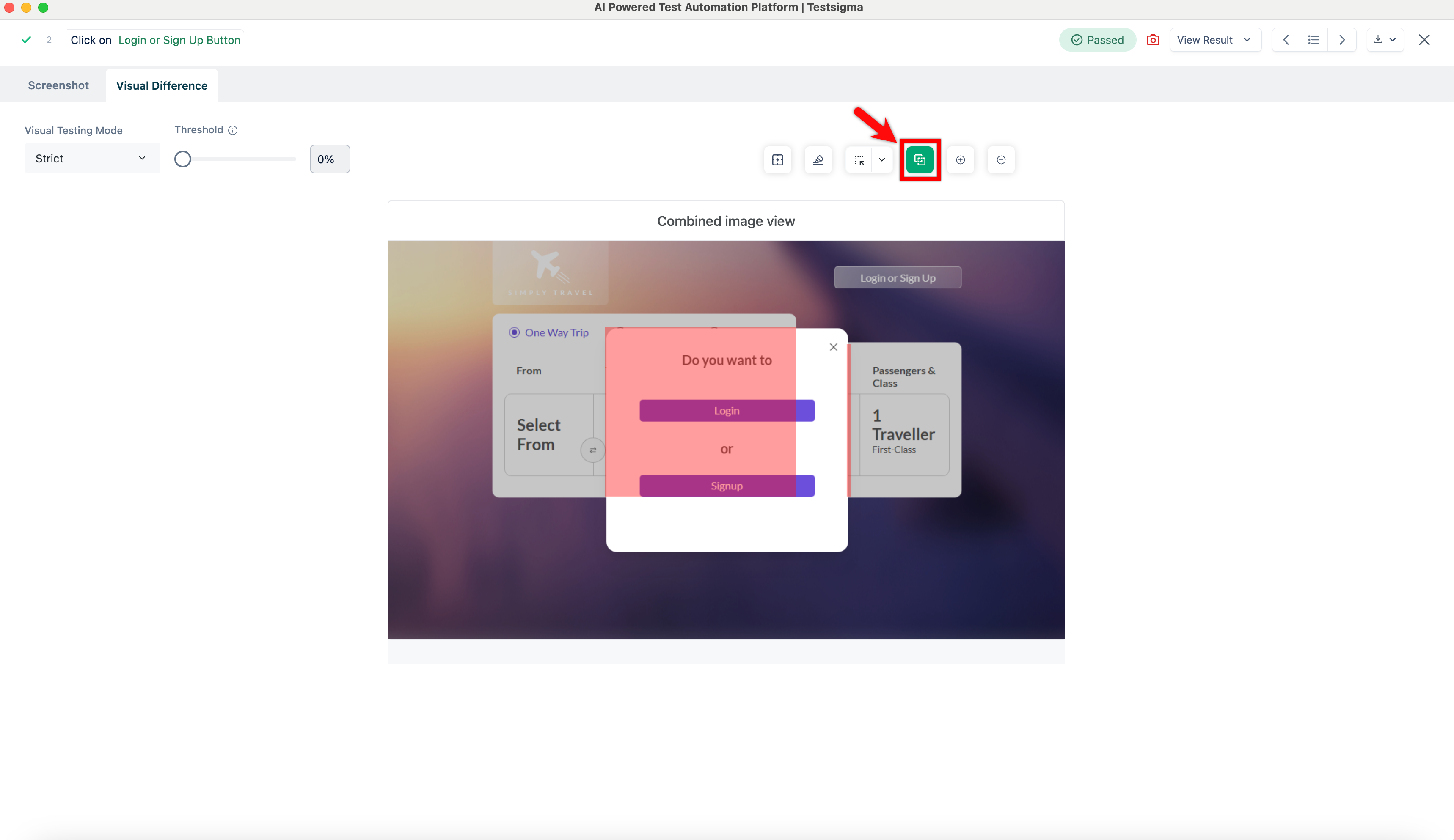

- **Merge base image and current image**: Combine the original and current images for comparison.

+

- **Zoom in** and **Zoom out**: Enlarge or reduce the view for closer inspection.

-

-

+

6. The next time you run the test case, Testsigma compares the visuals from that run and future runs with the new base image.

@@ -99,7 +117,7 @@ When you conduct visual testing using Testsigma, you should compare the **curren

## **Perform Visual Testing in Test Case**

1. Follow the steps in the above section to Enable Visual Testing in Test Steps using [documentation on utilising test step options](https://testsigma.com/docs/visual-testing/configure-test-steps/#enable-visual-testing-in-test-steps) or [documentation on updating settings](https://testsigma.com/docs/visual-testing/configure-test-steps/#bulk-action-for-visual-testing-steps). Refer to the [documentation on marking the baseline image](https://testsigma.com/docs/visual-testing/configure-test-steps/#bulk-action-for-visual-testing-steps) and run the test case to identify visual differences in the UI.

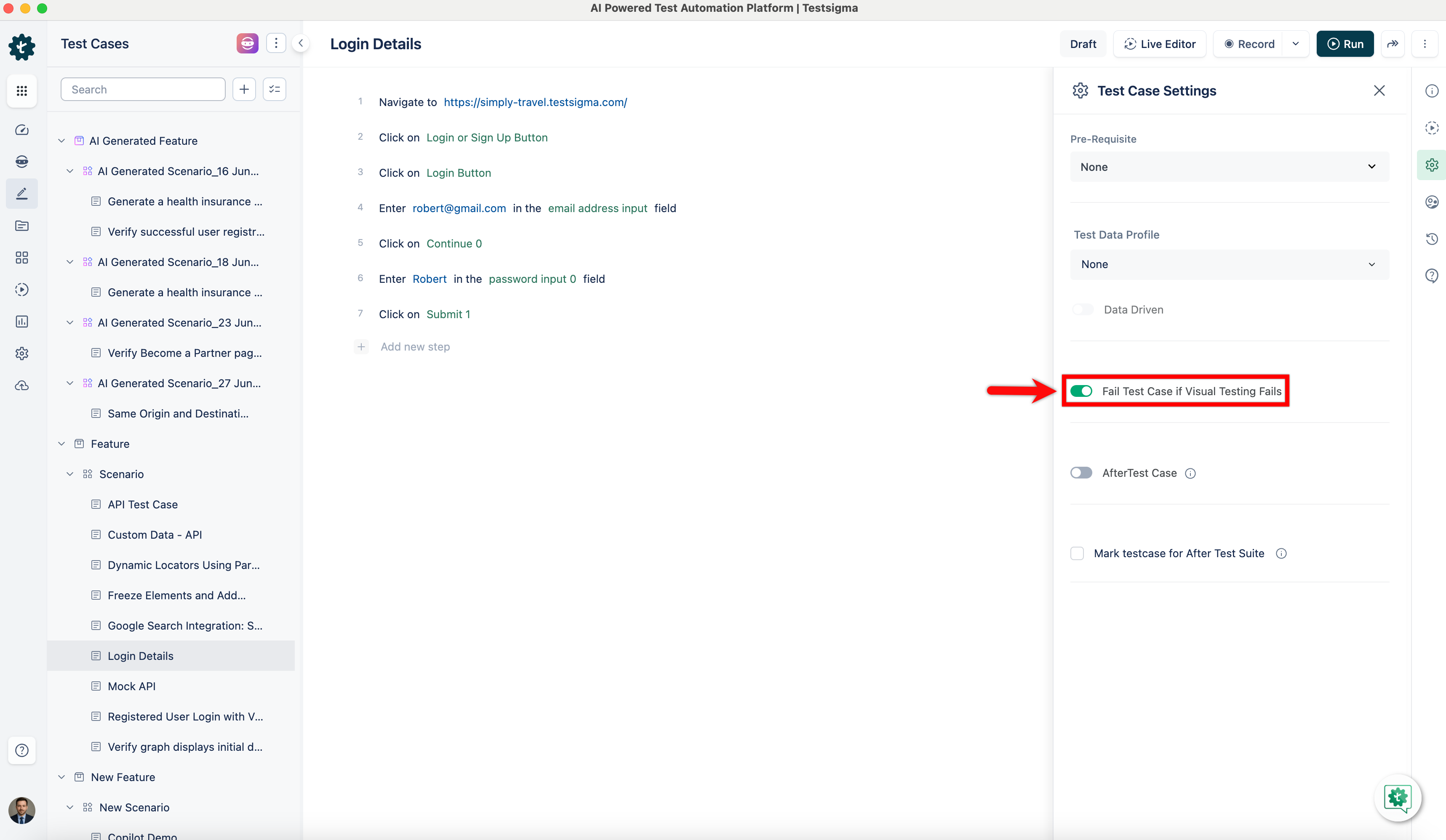

-2. Click on **Test Case Settings** in the right-side navbar and enable the **Fail Test Case if Visual Testing Fails** toggle to automatically mark a test case as failed if it detects visual differences during execution.

+2. Click on **Test Case Settings** in the right-side navbar and enable the **Fail Test Case if Visual Testing Fails** toggle to automatically mark a test case as failed if it detects visual differences during execution.

---

@@ -111,16 +129,18 @@ When you conduct visual testing using Testsigma, you should compare the **curren

### **Steps to Configure Ignore Sections for Visual Testing**

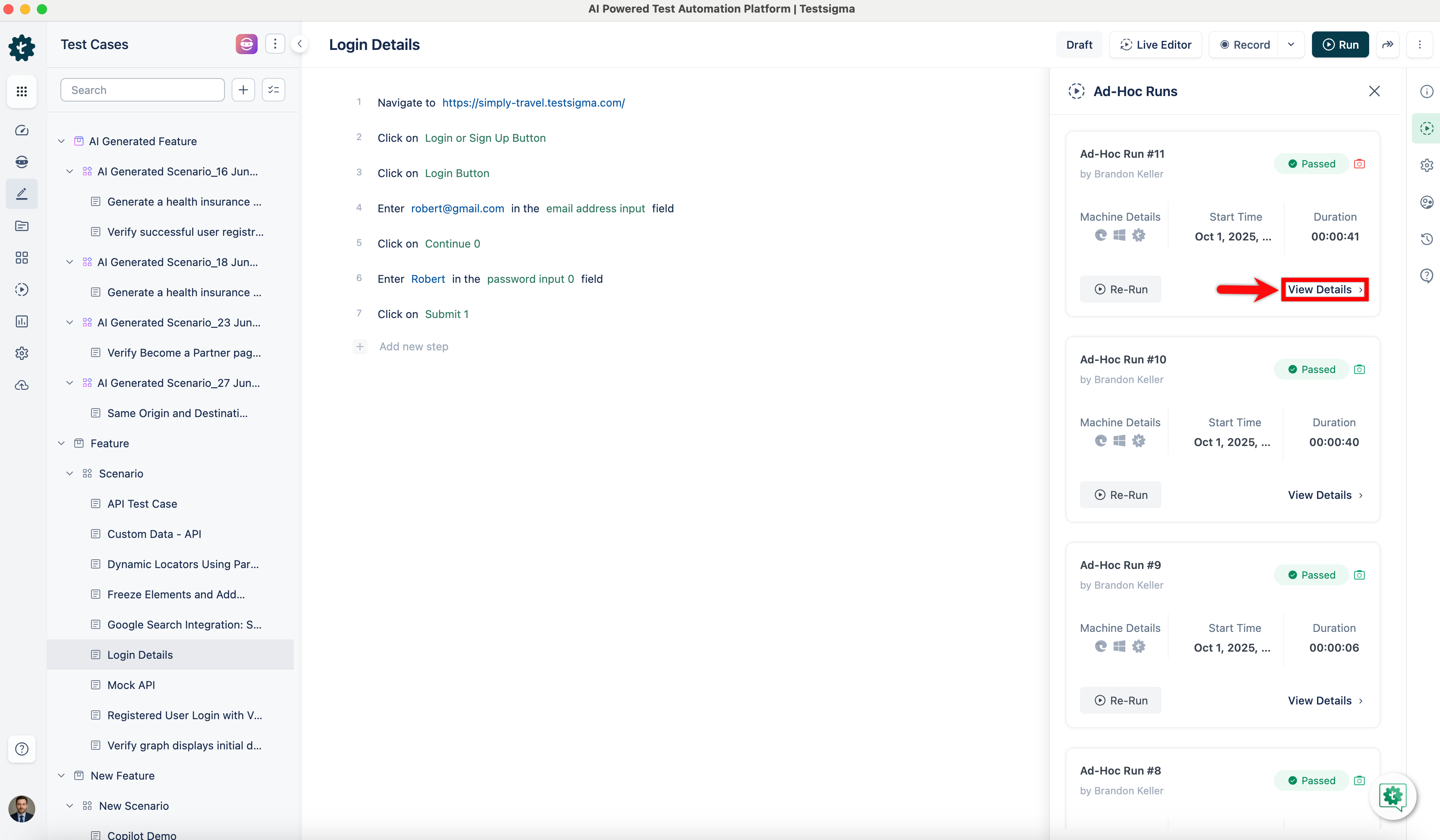

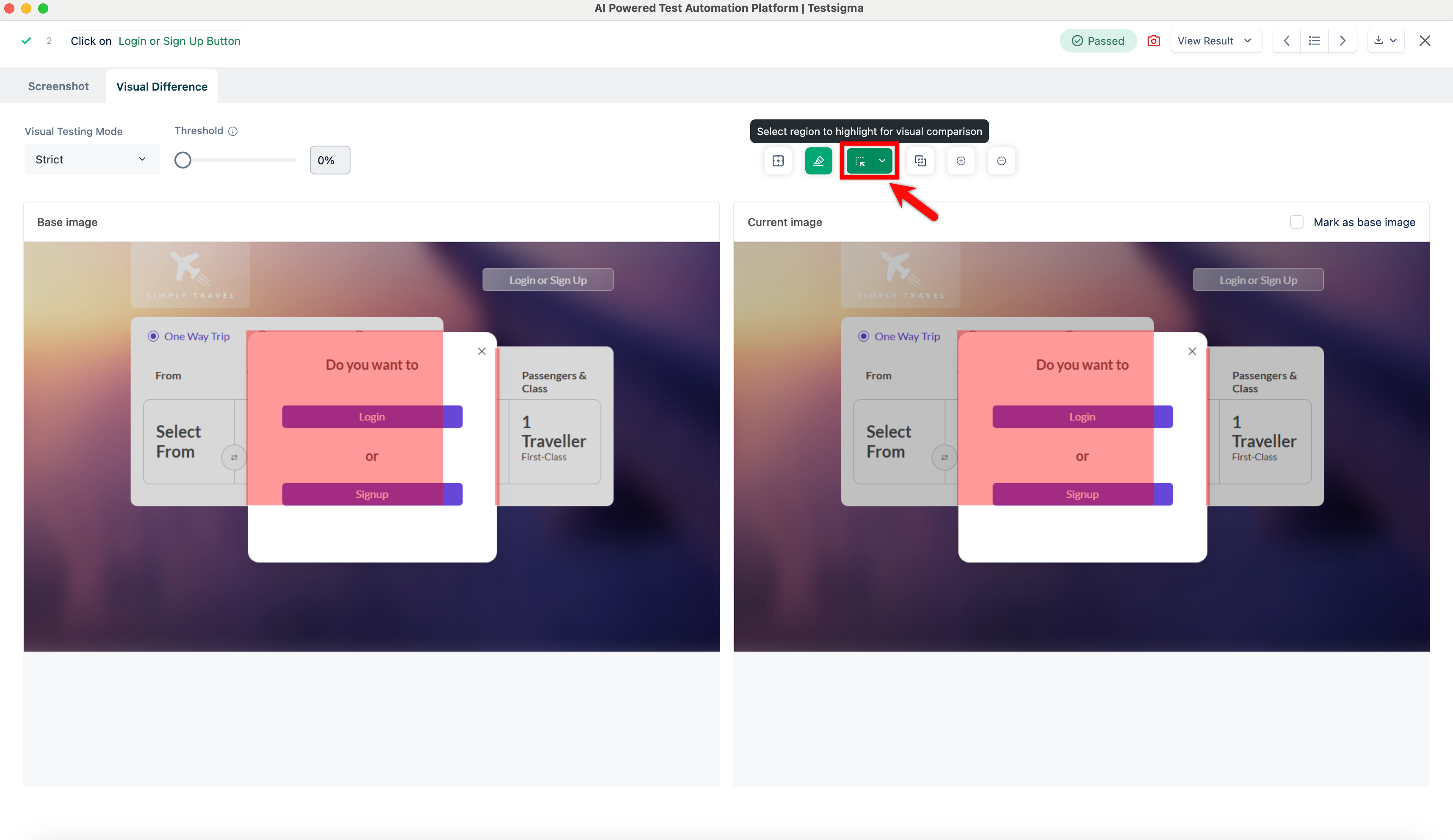

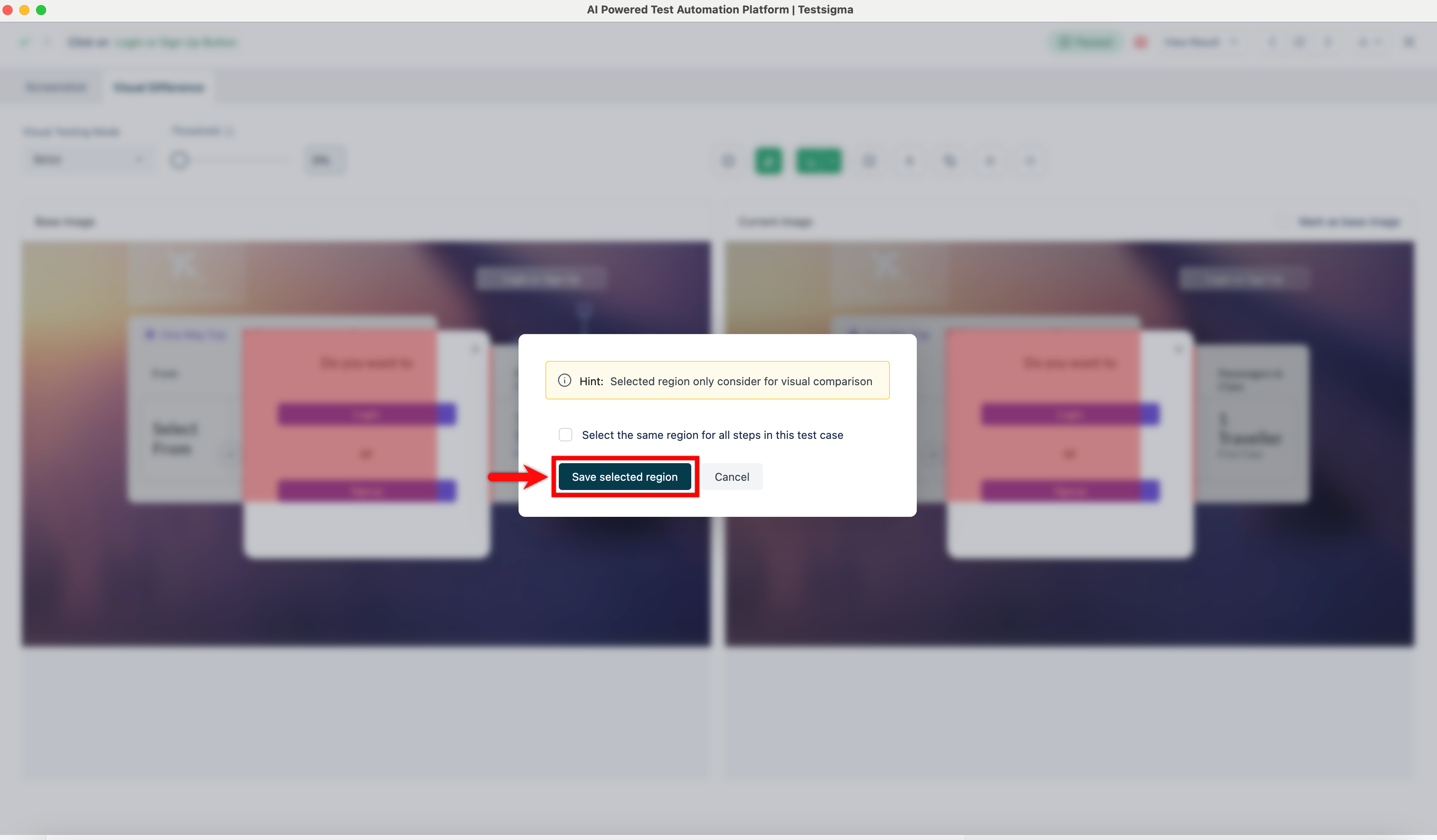

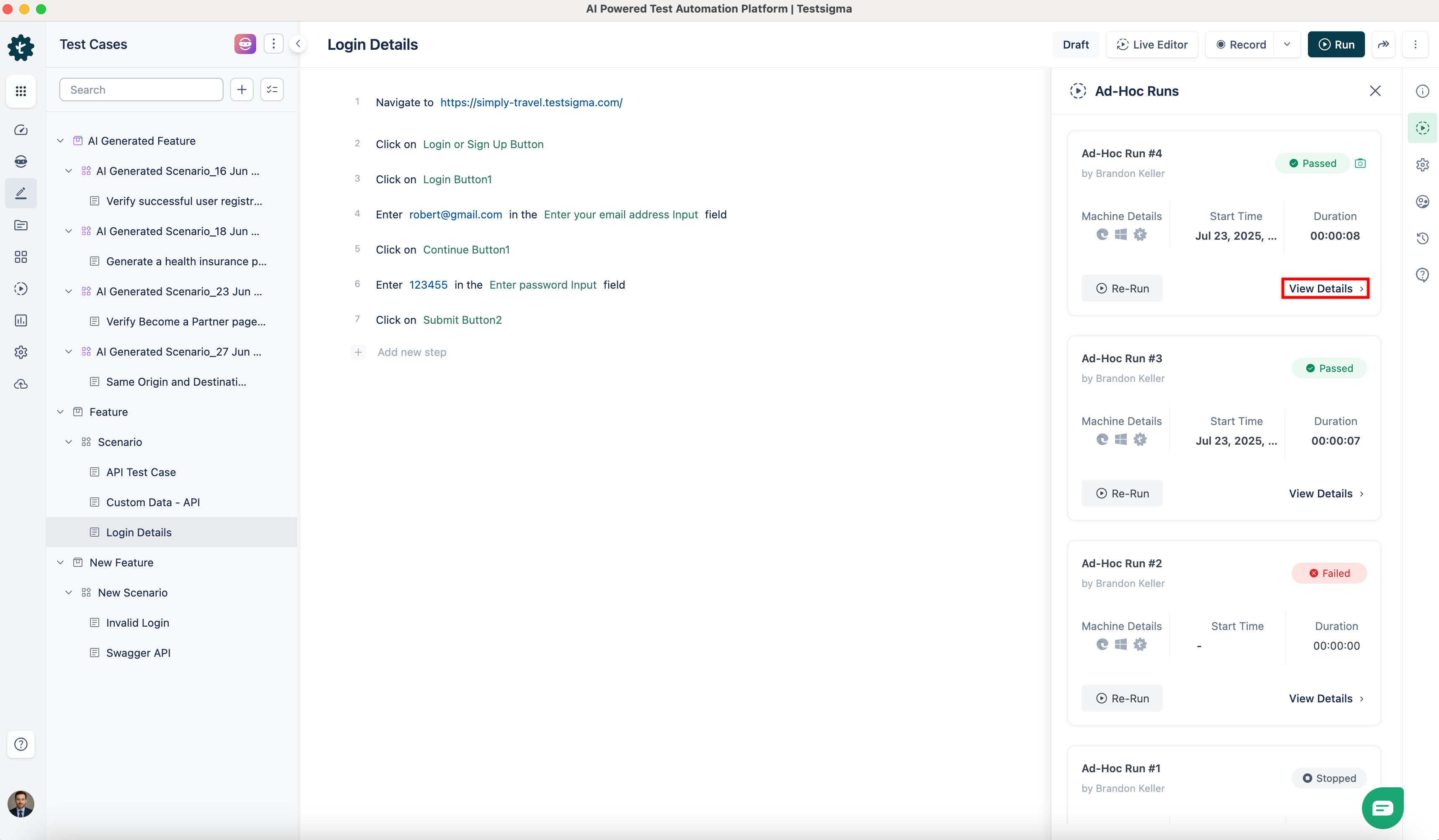

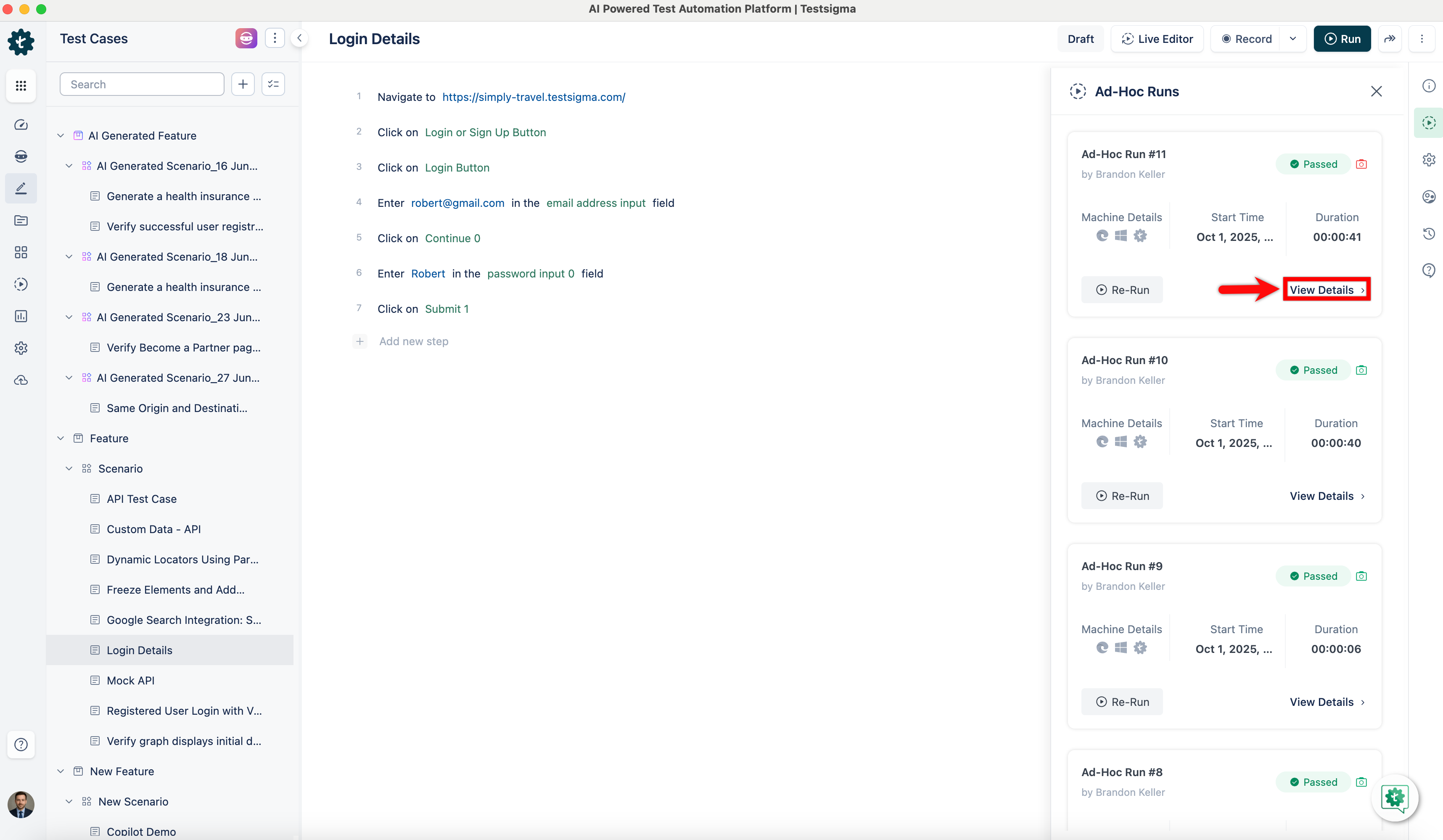

1. Click **Ad-hoc Runs** in the right navigation bar, then click **View Details** to open the test case result page.

-

+

2. Click the **Camera** icon to open the **Visual Difference** overlay screen.

+

3. Select **Select region to ignore from visual comparison** and choose the areas you want to exclude from the visual comparison.

-

+

4. You can resize or move the ignored area by selecting **Resize/Move Ignored Regions** and adjusting the selected region on the screen as needed.

5. Review the regions you marked as ignored and click **Save**.

+

---

@@ -133,20 +153,20 @@ If you don’t want to compare entire images, you can focus on specific areas us

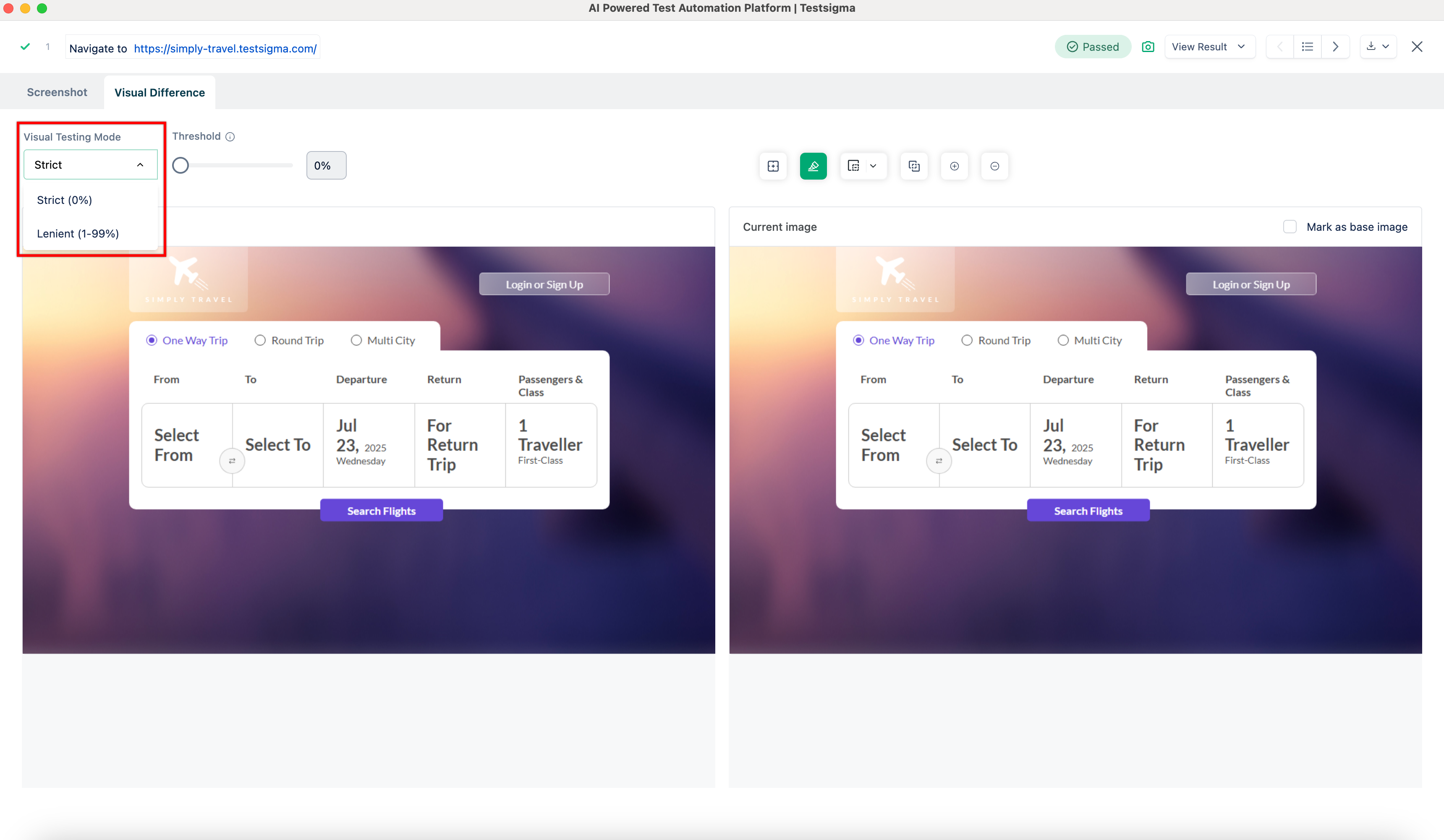

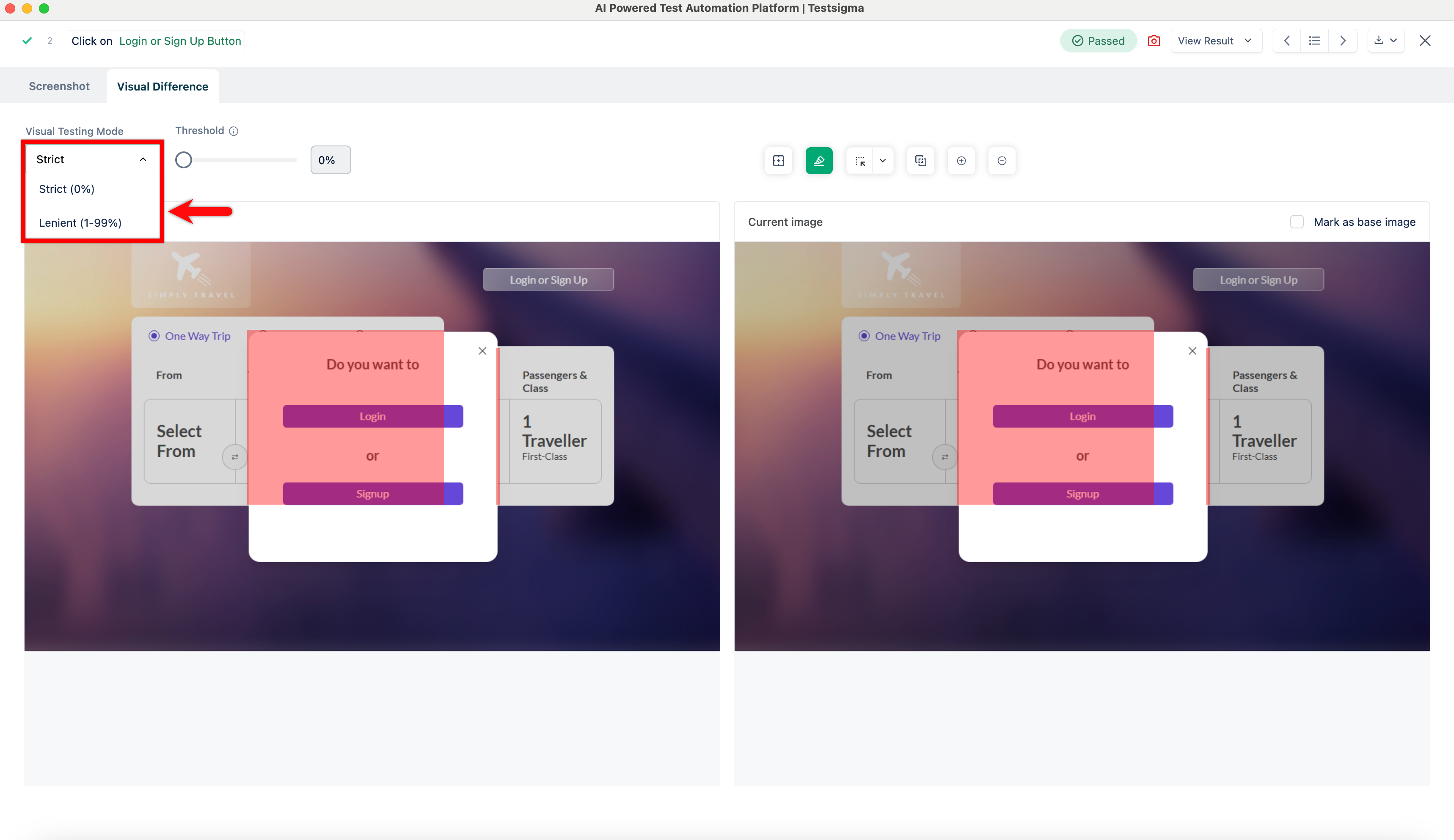

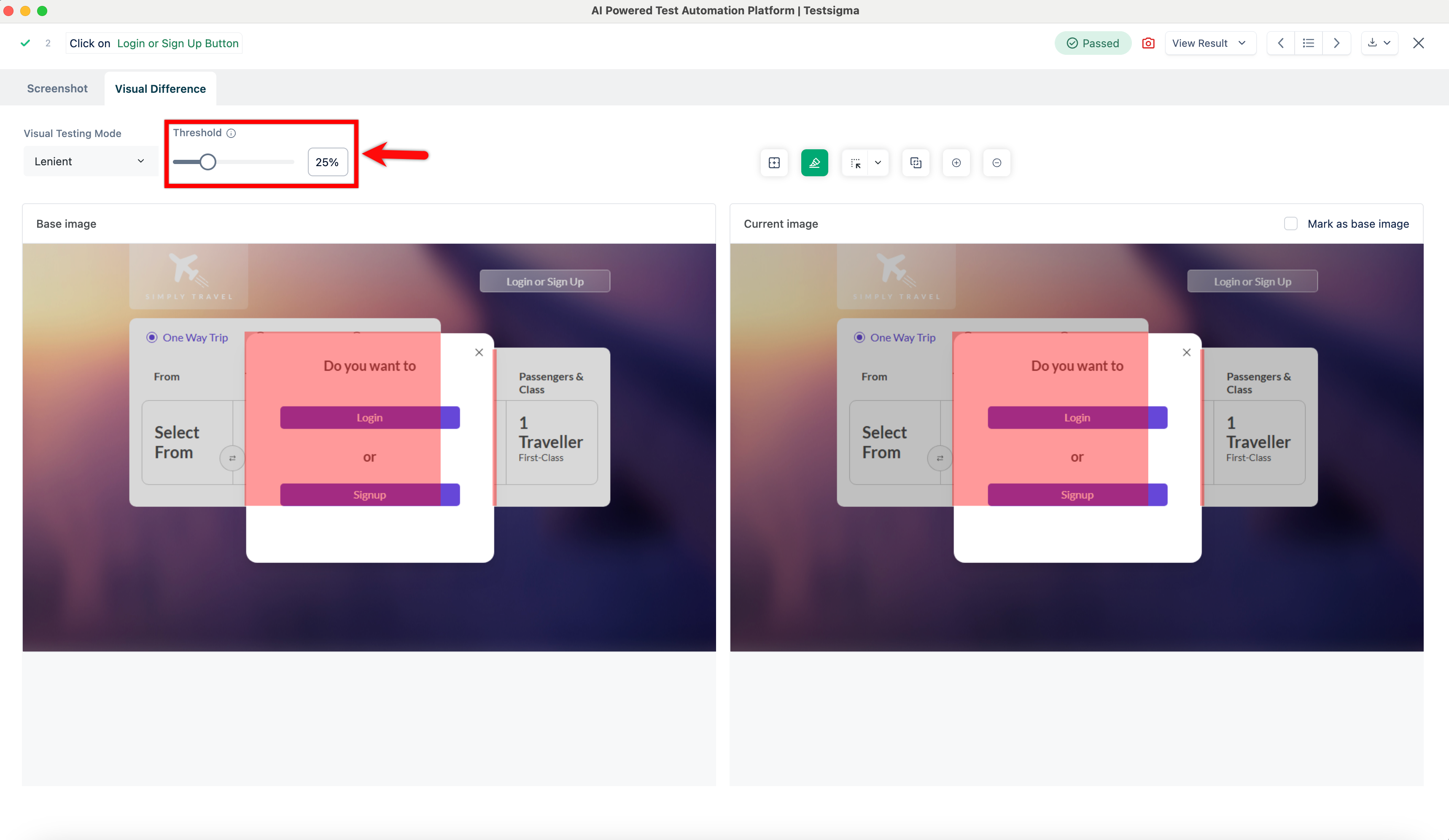

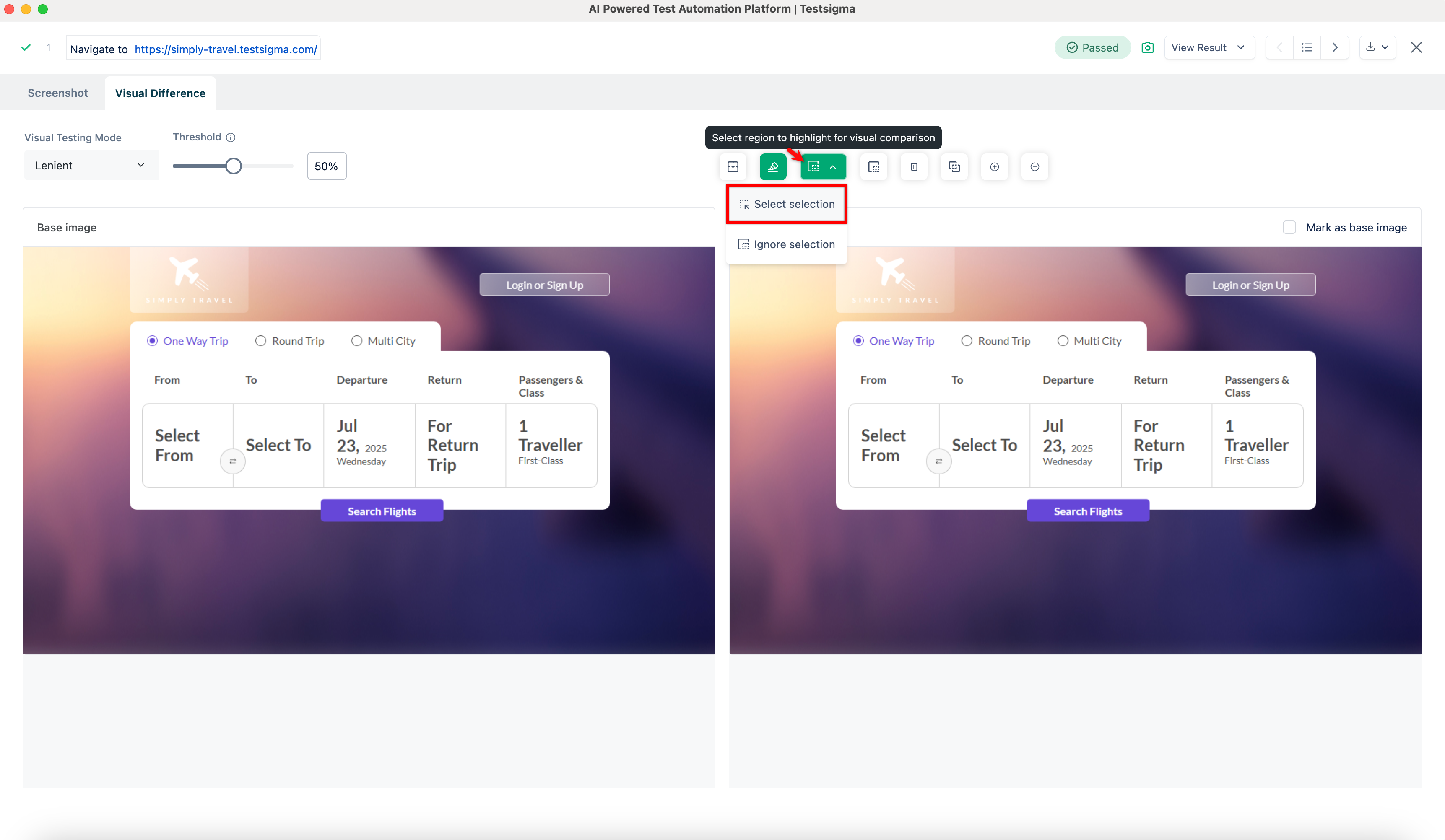

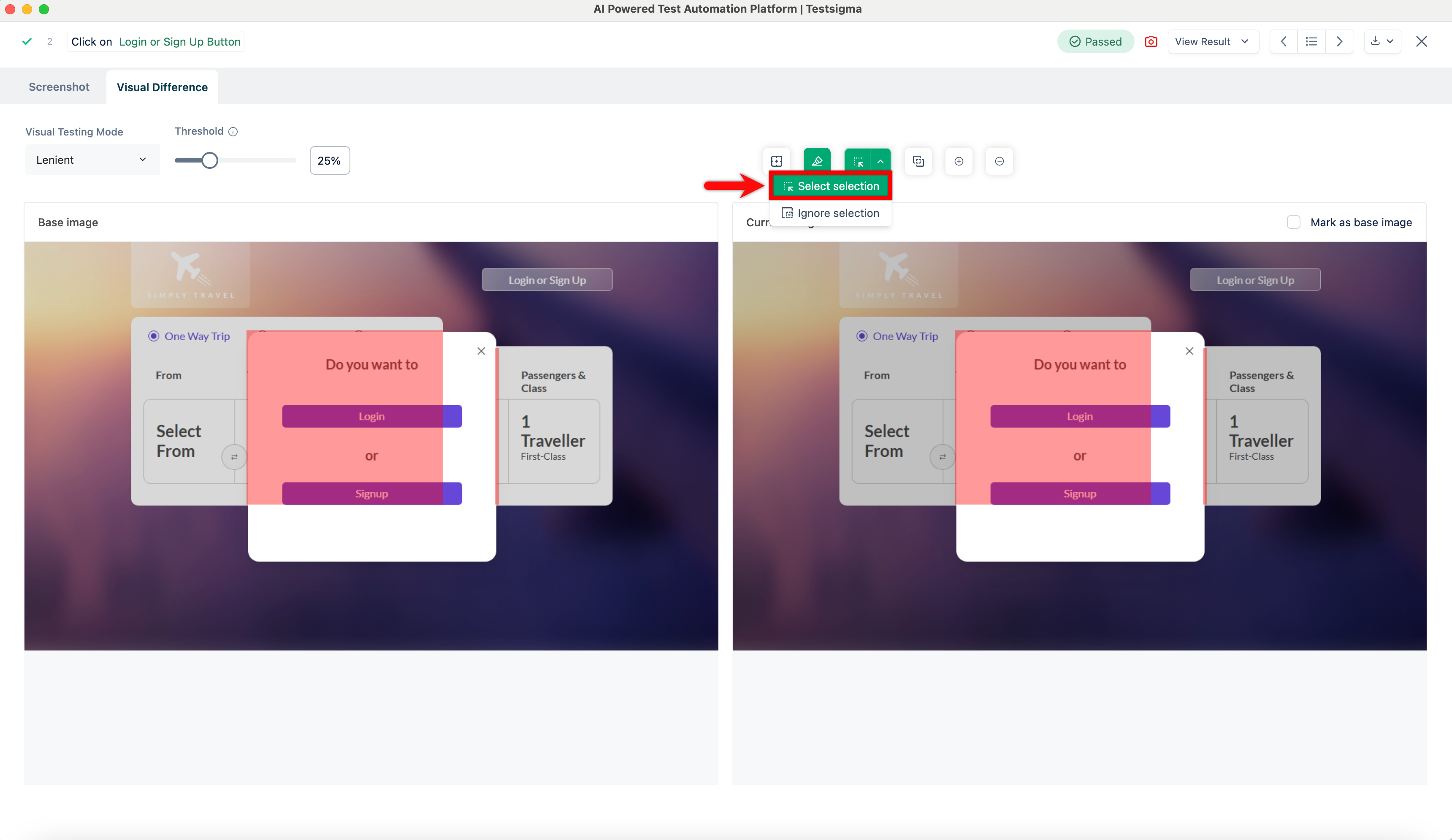

### **Steps to Set the Visual Testing Mode**

1. Click **Ad-hoc Runs** in the **Utility Panel**, then click **View Details** to open the test case result page.

-

+

2. Click the **Camera** icon to open the **Visual Difference** overlay screen.

-

+

3. Expand the **Visual Testing Mode** field and select **Strict** or **Lenient**.

-

+

4. If you select **Lenient**, enter the desired percentage in the **Threshold** field.

-

+

[[info | **NOTE**:]]

| To apply **Lenient** mode to a specific area of the base image, click the **Select region to highlight for visual comparison** icon to enable it. Then expand the option and choose **Select selection**.

-|

+|
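
To make the difference between **Strict** and **Lenient** modes concrete, here is a minimal Python sketch. It is an illustration only: Testsigma's actual image comparison is not described in this document, so the pixel-by-pixel difference, the function names `diff_percentage` and `visual_check_passes`, and the inclusive treatment of the **Threshold** value are all assumptions.

```python
from typing import Sequence


def diff_percentage(base: Sequence[int], current: Sequence[int]) -> float:
    """Percentage of positions where the two pixel sequences differ."""
    if len(base) != len(current):
        raise ValueError("Images must have the same number of pixels")
    if not base:
        return 0.0
    changed = sum(1 for b, c in zip(base, current) if b != c)
    return 100.0 * changed / len(base)


def visual_check_passes(
    base: Sequence[int],
    current: Sequence[int],
    mode: str = "Strict",
    threshold: float = 0.0,
) -> bool:
    """Strict: any difference fails. Lenient: differences up to threshold pass."""
    difference = diff_percentage(base, current)
    if mode == "Strict":
        return difference == 0.0
    if mode == "Lenient":
        return difference <= threshold  # assumed inclusive
    raise ValueError(f"Unknown visual testing mode: {mode}")


if __name__ == "__main__":
    base_image = [0] * 100
    current_image = [0] * 97 + [1] * 3  # 3 of 100 pixels changed
    print(visual_check_passes(base_image, current_image, "Strict"))
    print(visual_check_passes(base_image, current_image, "Lenient", threshold=5))
```

In this sketch a 3% difference fails in Strict mode but passes in Lenient mode with a 5% threshold.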

[[info | **NOTE**:]]

| **AI Insights** in Testsigma provides a detailed UI Difference Analysis Report whenever visual differences are detected between the base and current images.