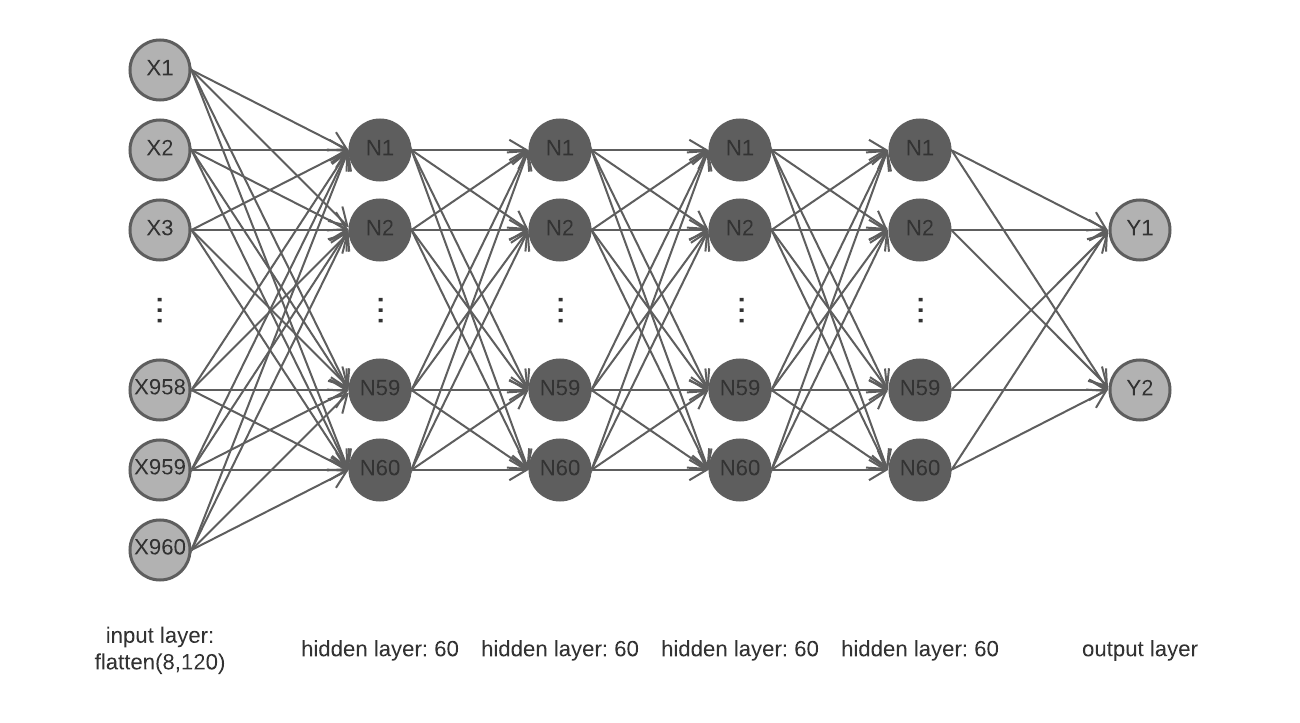

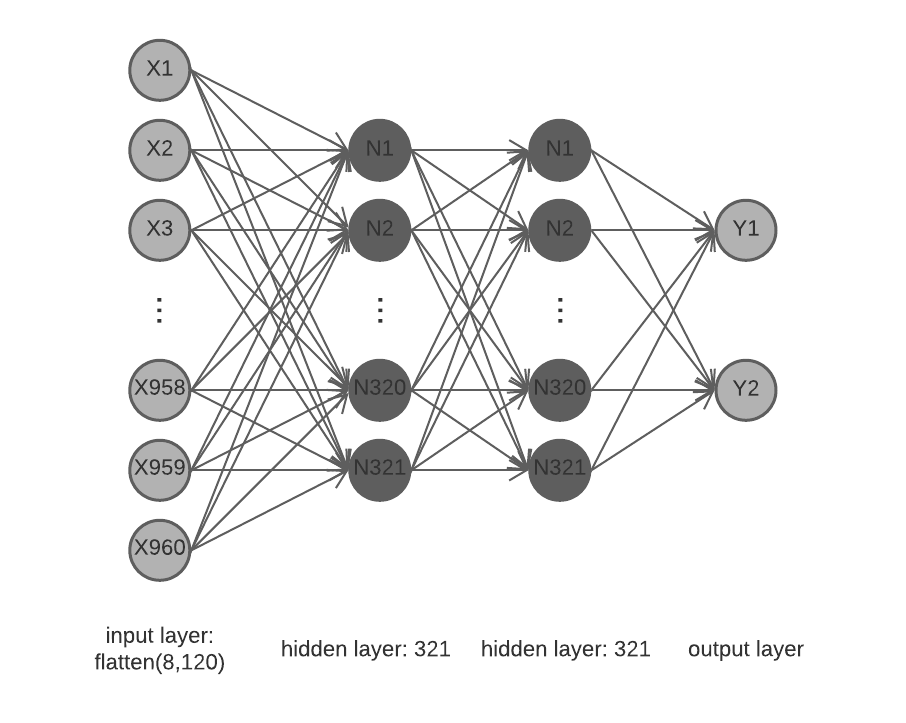

The diagram below depicts the architecture of the neural network currently used in the recorded demos. I have not yet had the chance to study neural networks formally, as I am just finishing my second year, so this project has given me great insight into the topic.

This architecture was created primarily through experimentation. I tried different numbers of hidden layers and different numbers of neurons within each layer.

Throughout my experimentation process, I found the following general cases:

- models with minimal hidden layers AND minimal hidden neurons did not perform well

- models with multiple hidden layers AND minimal hidden neurons did not perform well

- models with multiple hidden layers AND multiple hidden neurons performed adequately

- models with minimal hidden layers AND multiple hidden neurons have not been tested yet (next experiment)
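The four cases above can be written down as layer-size lists, which makes it easier to compare how large each variant is. The following is only a sketch: the input size, output size, and the values chosen for "minimal" and "multiple" are hypothetical placeholders, not the settings used in the demos, and `layer_sizes` and `count_parameters` are helper names introduced here for illustration.

```python
# Hypothetical sizes for illustration only; the real input/output sizes are not stated here.
N_INPUTS = 16
N_OUTPUTS = 4

# Placeholder values for "minimal" and "multiple" (assumptions, not the demo settings).
FEW_LAYERS, MANY_LAYERS = 1, 4
FEW_NEURONS, MANY_NEURONS = 3, 12


def layer_sizes(n_hidden_layers, neurons_per_layer):
    """Return [input, hidden..., output] for uniformly sized hidden layers."""
    return [N_INPUTS] + [neurons_per_layer] * n_hidden_layers + [N_OUTPUTS]


def count_parameters(sizes):
    """Count weights plus biases of a fully connected network with these layer sizes."""
    return sum((fan_in + 1) * fan_out for fan_in, fan_out in zip(sizes, sizes[1:]))


cases = {
    "few layers, few neurons": layer_sizes(FEW_LAYERS, FEW_NEURONS),
    "many layers, few neurons": layer_sizes(MANY_LAYERS, FEW_NEURONS),
    "many layers, many neurons": layer_sizes(MANY_LAYERS, MANY_NEURONS),
    "few layers, many neurons": layer_sizes(FEW_LAYERS, MANY_NEURONS),
}

for name, sizes in cases.items():
    print(f"{name}: sizes={sizes}, parameters={count_parameters(sizes)}")
```

The parameter count is only a rough proxy for capacity, but it gives a concrete number to set beside each observation.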

For case 3, however, it must be noted that when the number of hidden neurons was pushed too high and each uniformly sized hidden layer approached N neurons (N = size of the input), the network appeared to extrapolate my motor functions less precisely. Presumably it behaved more like a memory bank, storing all of my data's features rather than generalizing from them.

When I decreased the number of hidden neurons in each layer, the network tended to process the data more actively, discovering key consistencies across it.
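To make the difference between the two settings concrete, here is a minimal pure-Python forward pass through a fully connected network. It does not demonstrate memorization or generalization; it only shows how many values each layer passes on when the hidden layers are as wide as the input versus narrower. The input size, the hidden widths, the `tanh` activation, and the random initialization are all assumptions made for this sketch.

```python
import math
import random


def init_network(sizes, seed=0):
    """Create random weights and zero biases for each pair of adjacent layers."""
    rng = random.Random(seed)
    layers = []
    for fan_in, fan_out in zip(sizes, sizes[1:]):
        weights = [
            [rng.uniform(-1.0, 1.0) / math.sqrt(fan_in) for _ in range(fan_in)]
            for _ in range(fan_out)
        ]
        biases = [0.0] * fan_out
        layers.append((weights, biases))
    return layers


def forward(layers, inputs):
    """Return the activations of every layer, starting with the input itself."""
    activations = [list(inputs)]
    for weights, biases in layers:
        previous = activations[-1]
        activations.append([
            math.tanh(sum(w * x for w, x in zip(row, previous)) + b)
            for row, b in zip(weights, biases)
        ])
    return activations


N = 8  # hypothetical input size
sample = [0.1 * i for i in range(N)]

wide = forward(init_network([N, N, N, 2]), sample)    # hidden layers as wide as the input
narrow = forward(init_network([N, 3, 3, 2]), sample)  # hidden layers narrower than the input

print([len(a) for a in wide])    # [8, 8, 8, 2]
print([len(a) for a in narrow])  # [8, 3, 3, 2]
```

In the narrow network, every hidden layer has to summarize the 8 input values in 3 numbers, which is one way to picture why it cannot simply buffer every feature.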

As I learned more about neural networks (from online sources), I designed the next architecture that I would like to experiment with. My greatest concern with the previous architecture was that it had too many hidden layers, which could decrease my model's accuracy.

In the next model, I plan to decrease the number of hidden layers (which worked against me before) and, in exchange, increase the number of hidden neurons. This will test my earlier hypothesis that the additional hidden layers were not redundant.
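One way to keep this comparison fair is to give the shallower network roughly the same number of trainable parameters as the deeper one, so that depth is the main thing that changes. The sketch below does that arithmetic under assumed sizes: the deep layout `[16, 12, 12, 12, 12, 4]` is a hypothetical stand-in for the current architecture, and `matching_width` is a helper name introduced here.

```python
def count_parameters(sizes):
    """Count weights plus biases of a fully connected network with these layer sizes."""
    return sum((fan_in + 1) * fan_out for fan_in, fan_out in zip(sizes, sizes[1:]))


def matching_width(n_inputs, n_outputs, budget):
    """Largest single-hidden-layer width whose parameter count does not exceed budget."""
    # A single hidden layer of width h has
    # (n_inputs + 1) * h + (h + 1) * n_outputs = h * (n_inputs + 1 + n_outputs) + n_outputs
    # parameters, so solve that expression for h and round down.
    per_neuron = n_inputs + 1 + n_outputs
    return (budget - n_outputs) // per_neuron


# Hypothetical stand-in for the current deep architecture (not the real sizes).
deep = [16, 12, 12, 12, 12, 4]
budget = count_parameters(deep)

width = matching_width(deep[0], deep[-1], budget)
shallow = [deep[0], width, deep[-1]]

print(f"deep:    {deep} -> {budget} parameters")
print(f"shallow: {shallow} -> {count_parameters(shallow)} parameters")
```

With these placeholder sizes, the deep network has 724 parameters and a single hidden layer of 34 neurons comes closest without exceeding that, at 718.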