NeuralNetworks

Sathiyanarayanan S edited this page Jan 12, 2021 · 4 revisions

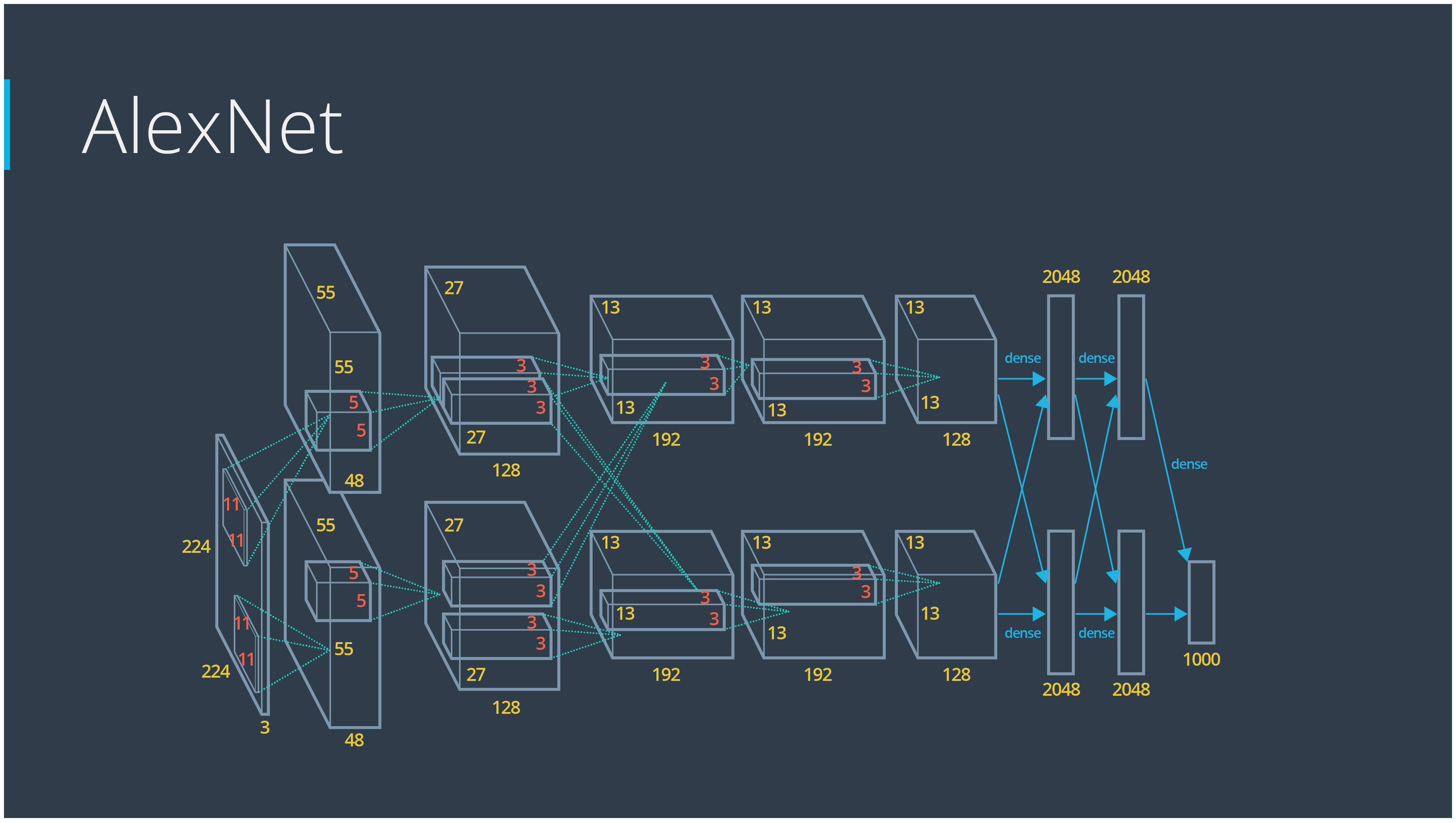

For AlexNet we usually pass 224×224 images (227×227 with padding). What happens if we pass an image with a lower or higher resolution? Does it fail, overfit, or give poor accuracy?

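With a fully connected layer, the forward pass can fail even if the resolution changes by a single pixel. The fully connected layer has a fixed number of input nodes, so if the new resolution changes the size of the last feature map, the flattened vector no longer matches what the layer expects. The result is a shape error, not overfitting or poor accuracy. A change small enough to be rounded away by the strided convolution and pooling layers leaves the flattened size unchanged and still runs.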
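The mismatch at the flattening step can be checked with plain arithmetic. The sketch below is only an illustration: it assumes the layer hyperparameters of the original AlexNet configuration (227×227 input, no padding in the first convolution, 256 channels before flattening), and the names `conv_out`, `flattened_size`, and `fits_fc` are hypothetical helpers, not part of any library.

```python
# Assumed AlexNet feature extractor: (name, kernel, stride, padding).
# These values follow the original 227x227 configuration and are an
# assumption of this sketch, not something stated on this page.
ALEXNET_LAYERS = [
    ("conv1", 11, 4, 0),
    ("pool1", 3, 2, 0),
    ("conv2", 5, 1, 2),
    ("pool2", 3, 2, 0),
    ("conv3", 3, 1, 1),
    ("conv4", 3, 1, 1),
    ("conv5", 3, 1, 1),
    ("pool5", 3, 2, 0),
]
LAST_CHANNELS = 256                       # channels of the last conv layer
FC_IN_FEATURES = LAST_CHANNELS * 6 * 6    # input nodes of the first FC layer


def conv_out(side, kernel, stride=1, padding=0):
    """Output side length of a conv or pooling layer (floor rounding)."""
    return (side + 2 * padding - kernel) // stride + 1


def flattened_size(side):
    """Number of values produced by flattening the last feature map."""
    for _name, kernel, stride, padding in ALEXNET_LAYERS:
        side = conv_out(side, kernel, stride, padding)
    return LAST_CHANNELS * side * side


def fits_fc(side):
    """True if a side x side input matches the fully connected layer."""
    return flattened_size(side) == FC_IN_FEATURES


for side in (200, 226, 227, 228, 300):
    status = "ok" if fits_fc(side) else "shape mismatch"
    print(f"{side}x{side}: flattened={flattened_size(side)} -> {status}")
```

Under these assumptions, 227×227 flattens to 9216 values and matches the fully connected layer. A 226×226 input flattens to 6400 and would be rejected, while 228×228 still flattens to 9216 because the stride-4 convolution rounds the extra pixel away.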