Learning from the book Building Computer Vision Applications Using Artificial Neural Networks by Shamshad Ansari (publisher: Apress)

Install the OpenCV package: pip install opencv-python

Install the NumPy package: pip install numpy

Install the scikit-image package: pip install scikit-image

This is currently a work in progress. Below is a list of the code examples completed so far.

- Resizing

- Cropping: Crop the image to keep only the face in the Lena image

- Image Arithmetic

- Addition of two Images

- Subtraction of two Images

- Bitwise Operation

- Masking

- Masking Using Bitwise AND Operation

- Splitting and Merging Channels

- Splitting Channels

- Merging All Channels

- Noise reduction using smoothing and blurring

Smoothing, also called blurring, is an important image processing technique for reducing the noise present in an image.

- Smoothing/Blurring by Mean Filtering or Averaging

Salt-and-pepper noise: Random occurrences of black and white pixels.

Impulse noise: Random occurrences of white pixels.

Gaussian noise: Intensity variations that follow a Gaussian (normal) distribution.

- Smoothing Using the Gaussian Technique

Gaussian filtering is one of the most effective blurring techniques in image processing. It gives a more natural smoothing result than the averaging technique because it weights nearby pixels more heavily than distant ones.

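The Gaussian blurring function, cv2.GaussianBlur(), takes the following arguments:

  - The image, represented as a NumPy array.

  - The kernel size (k, k), giving the height and width of the k×k kernel.

  - sigmaX and sigmaY, the standard deviations in the X and Y directions.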

- Median Blurring

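The previous three blurring techniques produce blurred images with a side effect: we lose the edges in the image. To blur an image while preserving its edges, we use bilateral blurring, an enhancement over Gaussian blurring. Bilateral blurring weights each neighbor by both its spatial distance and its intensity difference, so pixels on the other side of an edge contribute very little.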

- Bilateral Blurring

- Binarization with thresholding

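Image binarization is the process of converting a grayscale image into a binary (black-and-white) image. We apply a technique called thresholding to binarize an image: pixels above a threshold value are set to one value (for example, white) and all other pixels to another (for example, black).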

- Binarization Using Simple Thresholding

- Binarization Using Adaptive Thresholding

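Adaptive thresholding is used to binarize a grayscale image whose pixel intensity varies across the image, where one single threshold value may not be suitable for extracting the information. Instead of one global value, it computes a separate threshold for each pixel from a small neighborhood around it.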

- Otsu Binarization

In simple thresholding, we select a global threshold arbitrarily. It is difficult to know the right threshold value, so we may need a few rounds of trial and error before we find it. Even if we find an ideal value for one image, it may not work for other images with different pixel intensity characteristics.

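Otsu’s method determines an optimal global threshold value from the image histogram: it chooses the threshold that best separates the pixels into two classes (foreground and background) by maximizing the variance between the two classes.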

- Otsu's Binarization

Edge detection involves a set of methods to find points in an image where the brightness of pixels changes distinctly.

- Sobel Derivatives (cv2.Sobel() Function)

The Sobel method combines Gaussian smoothing with Sobel differentiation to compute an approximation of the gradient of the image intensity function. Because of the Gaussian smoothing, the method is relatively resistant to noise.

- Sobel and Scharr Gradient Detection

Note: The output data type used is cv2.CV_64F, a 64-bit float. Why? A transition from black to white is a positive slope, while a transition from white to black is a negative slope. An 8-bit unsigned integer cannot hold a negative number, so we use a 64-bit float; otherwise, we would lose the gradients wherever the transition goes from white to black.

- Laplacian Derivatives (cv2.Laplacian() Function)

The Laplacian operator calculates the second derivative of the pixel intensity function to determine the edges in the image.

- Edge Detection Using Laplacian Derivatives

- Canny Edge Detection: Canny edge detection is one of the most popular edge detection methods in image processing. It is a multistep process: it first blurs the image to reduce noise, then computes Sobel gradients in the X and Y directions, applies non-maximum suppression to thin the edges (keeping only pixels that are local maxima along the gradient direction), and finally determines whether each pixel is “edge-like” by applying hysteresis thresholding.

- Canny Edge Detection

- Contours: Contours are curves joining continuous points of the same intensity. Determining contours is useful for object identification, face detection, and recognition.

- Contour Detection and Drawing

- Calculate the histogram of the image.

- Histogram of a Grayscale Image

- Histogram of Three Channels of RGB Color Image

- Histogram Equalizer: Histogram equalization is an image processing technique for adjusting the contrast of an image. It redistributes the pixel intensities so that the most frequent intensity values are spread out over the full intensity range, making the histogram approximately flat.

- Histogram Equalization

- GLCM: The gray-level co-occurrence matrix (GLCM) is the distribution of co-occurring pixel values at a given offset. An offset is the position (distance and direction) of adjacent pixels. As the name implies, the GLCM is always calculated for a grayscale image.

- GLCM Calculation Using the greycomatrix() Function: The GLCM is not used directly as a feature; instead, we use it to calculate some useful statistics that give us an idea of the texture of the image.

  - Contrast: Measures the local variations in the GLCM.
  - Correlation: Measures the joint probability occurrence of the specified pixel pairs.
  - Energy: Provides the sum of squared elements in the GLCM. Also known as uniformity or the angular second moment.
  - Homogeneity: Measures the closeness of the distribution of elements in the GLCM to the GLCM diagonal.

- Calculation of Image Statistics from the GLCM
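A minimal GLCM sketch on a tiny 4-level image so the counts can be checked by hand. In scikit-image 0.19 the functions were renamed from greycomatrix()/greycoprops() to graycomatrix()/graycoprops(), so the import tries both spellings. The offset here is distance 1 at angle 0: each pixel paired with its right-hand neighbor.

```python
import numpy as np

try:  # scikit-image 0.19+ spelling
    from skimage.feature import graycomatrix, graycoprops
except ImportError:  # older releases
    from skimage.feature import greycomatrix as graycomatrix
    from skimage.feature import greycoprops as graycoprops

# Tiny 4-level grayscale image (assumption).
image = np.array([[0, 0, 1, 1],
                  [0, 0, 1, 1],
                  [0, 2, 2, 2],
                  [2, 2, 3, 3]], dtype=np.uint8)

glcm = graycomatrix(image, distances=[1], angles=[0], levels=4)
print(glcm[:, :, 0, 0])
# [[2 2 1 0]
#  [0 2 0 0]
#  [0 0 3 1]
#  [0 0 0 1]]

# Texture statistics computed from the GLCM for the single distance/angle pair.
stats = {prop: graycoprops(glcm, prop)[0, 0]
         for prop in ("contrast", "correlation", "energy", "homogeneity")}
print(stats)
```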

- HOG: The histogram of oriented gradients (HOG) is an important feature descriptor for object detection. It captures the structural shape and appearance of an object in an image: the HOG algorithm computes the occurrences of gradient orientations in localized portions of the image.

- Program to Build HOG
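A minimal HOG sketch using scikit-image's hog() on a synthetic 128x64 grayscale image; the parameters (9 orientations, 8x8-pixel cells, 2x2-cell blocks) are a commonly used setup, chosen here for illustration. A 128x64 image has 16x8 cells, hence 15x7 overlapping blocks of 2·2·9 = 36 values, giving a 3780-value descriptor.

```python
import numpy as np
from skimage.feature import hog

# Synthetic 128x64 grayscale image (assumption) with a bright rectangle.
image = np.zeros((128, 64), dtype=np.float64)
image[32:96, 16:48] = 1.0

features, hog_image = hog(image, orientations=9, pixels_per_cell=(8, 8),
                          cells_per_block=(2, 2), block_norm="L2-Hys",
                          visualize=True)
print(features.shape)  # (3780,)
```

hog_image is a picture of the dominant gradient orientation in each cell, useful for displaying next to the original.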

- LBP: Local Binary Patterns

- LBP Image and Histogram Calculation and Comparison with Original Image
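A minimal LBP sketch using scikit-image's local_binary_pattern() on a random synthetic texture, with P = 8 neighbors on a circle of radius R = 1 (illustrative values). With method="uniform" the codes run from 0 to P + 1, so the normalized histogram has P + 2 bins; that histogram is the texture feature.

```python
import numpy as np
from skimage.feature import local_binary_pattern

rng = np.random.default_rng(0)
image = rng.integers(0, 256, size=(64, 64)).astype(np.uint8)  # random texture (assumption)

P, R = 8, 1
lbp = local_binary_pattern(image, P, R, method="uniform")

# P + 2 bins of width 1 covering codes 0..P+1; density=True makes the histogram sum to 1.
hist, _ = np.histogram(lbp.ravel(), bins=np.arange(0, P + 3), density=True)
```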

Feature selection is the process of selecting variables or attributes that are relevant and useful in model training. This is a process of eliminating unnecessary or irrelevant features and selecting a subset of features that are strong contributors to the learning of the model.

Feature Extraction: The process of creating features.

Feature Selection: The process of removing unnecessary features.

- Filter Method: The filter method selects a feature subset as a preprocessing step, before any model is trained. It measures the correlation between each feature and the target variable and ranks the features by their statistical scores.
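A minimal filter-method sketch in plain NumPy, assuming synthetic data with five hypothetical features where only features 0 and 2 influence the target. Each feature is scored by the absolute Pearson correlation with the target, and the top k are kept; no model is trained.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 500
X = rng.normal(size=(n, 5))  # five hypothetical features
y = 3 * X[:, 0] - 2 * X[:, 2] + rng.normal(scale=0.5, size=n)

# Statistical score per feature: |Pearson correlation with the target|.
scores = np.array([abs(np.corrcoef(X[:, j], y)[0, 1]) for j in range(X.shape[1])])

k = 2
selected = np.argsort(scores)[::-1][:k]
print(sorted(selected.tolist()))  # [0, 2]
```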

- Wrapper Method: In the wrapper method, you train the model on a subset of features and evaluate it. Based on the result, you add or remove features and retrain the model. Common wrapper methods are:
  1. Forward selection
  2. Backward elimination
  3. Recursive feature elimination
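A minimal forward-selection sketch, assuming the same kind of synthetic data and a least-squares linear model as the hypothetical model being wrapped. Each round tries adding every remaining feature, retrains, measures error on a held-out validation split, and keeps the best candidate; it stops when the best candidate improves validation error by less than 10% (an arbitrary illustrative rule).

```python
import numpy as np

rng = np.random.default_rng(0)
n = 500
X = rng.normal(size=(n, 5))
y = 3 * X[:, 0] - 2 * X[:, 2] + rng.normal(scale=0.5, size=n)
X_train, X_val, y_train, y_val = X[:400], X[400:], y[:400], y[400:]


def validation_error(features):
    """Fit least squares (with intercept) on the feature subset; return validation MSE."""
    A_train = np.column_stack([X_train[:, features], np.ones(len(X_train))])
    A_val = np.column_stack([X_val[:, features], np.ones(len(X_val))])
    coef, *_ = np.linalg.lstsq(A_train, y_train, rcond=None)
    return np.mean((A_val @ coef - y_val) ** 2)


selected, remaining = [], list(range(X.shape[1]))
best_error = np.mean((y_val - y_train.mean()) ** 2)  # baseline: predict the mean
while remaining:
    errors = {j: validation_error(selected + [j]) for j in remaining}
    j_best = min(errors, key=errors.get)
    if errors[j_best] >= 0.9 * best_error:  # improvement under 10%: stop
        break
    selected.append(j_best)
    remaining.remove(j_best)
    best_error = errors[j_best]

print(selected)  # [0, 2]
```

Backward elimination runs the same loop in reverse, starting from all features and removing the one whose removal hurts least.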

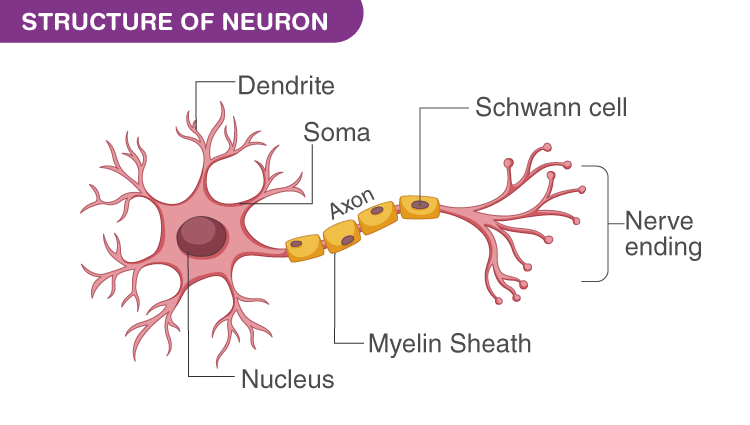

An ANN is a computing system designed to work the way the human brain works. The human brain has billions of neurons with trillions of interconnections among them. These interconnected neurons are called a neural network.

Computer scientists were inspired by the human vision system and tried to mimic neural networks by creating a computer system that learns and functions the way our brains do. This learning system is called an artificial neural network (ANN).